Kubernetes/Tools

kubectl

Install kubectl

List of kubectl releases.

# List releases

curl -s https://api.github.com/repos/kubernetes/kubernetes/releases | jq -r '.[].tag_name' | sort -V

curl -s https://api.github.com/repos/kubernetes/kubernetes/releases | jq -r '[.[] | select(.prerelease == false) | .tag_name] | map(sub("^v";"")) | map(split(".")) | group_by(.[0:2]) | map(max_by(.[2]|tonumber)) | map(join(".")) | map("v" + .) | sort | reverse | .[]'

v1.30.2

v1.29.6

v1.28.11

v1.27.15

v1.26.15
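The jq filter above keeps the highest patch release for each minor version. A minimal sketch of the same idea in plain shell, assuming GNU `sort -V` is available; the function name `latest_per_minor` is made up for illustration, and it filters pre-releases by tag shape rather than by the API's `prerelease` field:

```shell
#!/usr/bin/env bash
# latest_per_minor: read release tags on stdin, print the newest patch per minor.
# Assumption: tags look like vMAJOR.MINOR.PATCH; anything else (rc, alpha, beta) is dropped.
latest_per_minor() {
  grep -E '^v[0-9]+\.[0-9]+\.[0-9]+$' |  # keep only final releases
    sort -V -r |                          # version sort, newest first
    awk -F. '!seen[$1"."$2]++'            # first (newest) line per major.minor
}
```

Usage: `curl -s https://api.github.com/repos/kubernetes/kubernetes/releases | jq -r '.[].tag_name' | latest_per_minor`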

# Latest

ARCH=amd64 # amd64|arm

VERSION=$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt); echo $VERSION

curl -LO https://storage.googleapis.com/kubernetes-release/release/$VERSION/bin/linux/$ARCH/kubectl

# Specific version

# Find specific Kubernetes release, then download kubectl

VERSION=v1.26.14; ARCH=amd64 # amd64|arm

curl -LO https://storage.googleapis.com/kubernetes-release/release/$VERSION/bin/linux/$ARCH/kubectl

sudo install ./kubectl /usr/local/bin/kubectl

# Note: sudo install := chmod +x ./kubectl; sudo mv

# Verify: kubectl should be within +/- 1 minor version of the api-server

kubectl version --short

Client Version: v1.26.14

Kustomize Version: v4.5.7

Server Version: v1.24.10
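The skew rule can be checked mechanically. A small sketch, assuming version strings shaped like `v1.26.14`; the helper names `minor_of` and `skew_ok` are hypothetical:

```shell
#!/usr/bin/env bash
# minor_of: print the minor number of a version such as v1.26.14 or v1.15.11-eks-af3caf
minor_of() {
  local v="${1#v}"   # strip a leading "v"
  v="${v#*.}"        # drop the major number and the first dot
  echo "${v%%.*}"    # keep everything up to the next dot
}

# skew_ok CLIENT SERVER: succeed when the minor versions differ by at most 1
skew_ok() {
  local c s d
  c=$(minor_of "$1")
  s=$(minor_of "$2")
  d=$(( c - s ))
  if (( d < 0 )); then d=$(( -d )); fi
  (( d <= 1 ))
}
```

With the sample output above, `skew_ok v1.26.14 v1.24.10` fails because the client is two minor versions ahead of the server.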

Google way

# Install kubectl if you don't already have a suitable version
# https://kubernetes.io/docs/setup/release/version-skew-policy/#kubectl
kubectl version --client || gcloud components install kubectl

kubectl get clusterrolebinding $(gcloud config get-value core/account)-cluster-admin ||
  kubectl create clusterrolebinding $(gcloud config get-value core/account)-cluster-admin \
    --clusterrole=cluster-admin \
    --user="$(gcloud config get-value core/account)"

kubectl plugin called gke-gcloud-auth-plugin

sudo apt-get install apt-transport-https ca-certificates gnupg curl
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo gpg --dearmor -o /usr/share/keyrings/cloud.google.gpg
echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://packages.cloud.google.com/apt cloud-sdk main" | sudo tee -a /etc/apt/sources.list.d/google-cloud-sdk.list
sudo apt-get update
sudo apt-get install google-cloud-cli
# required to authenticate with GCP
sudo apt-get install google-cloud-sdk-gke-gcloud-auth-plugin
gcloud init

Autocompletion and kubeconfig

source <(kubectl completion bash); alias k=kubectl; complete -F __start_kubectl k

# Set default namespace

kubectl config set-context --current --namespace=dev

kubectl config set-context $(kubectl config current-context) --namespace=dev

vi ~/.kube/config

...

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

namespace: web # default namespace

name: dev-frontend

...

Add proxy-url using yq to kubeconfig

The minimum required yq version is v2.x (tested with yq 2.13.0). The example below updates the file in place (`-i`).

yq -i -y --indentless '.clusters[0].cluster += {"proxy-url": "http://proxy.acme.com:8080"}' ~/.kube/$ENVIRONMENT

Get resources and cheatsheet

# Get a list of nodes

kubectl get nodes -o jsonpath="{.items[*].metadata.name}"

ip-10-10-10-10.eu-west-1.compute.internal ip-10-10-10-20.eu-west-1.compute.internal

kubectl get nodes -oname

node/ip-10-10-10-10.eu-west-1.compute.internal

node/ip-10-10-10-20.eu-west-1.compute.internal

...

# Pods sorted by node name

kubectl get pods --sort-by=.spec.nodeName -owide -A

# Watch a namespace in a convenient resource order | sts=statefulset, rs=replicaset, ep=endpoint, cm=configmap

watch -d kubectl -n dev get sts,deploy,rc,rs,pods,svc,ep,ing,pvc,cm,sa,secret,es,cronjob,job -owide --show-labels

# note es - externalsecrets

watch -d 'kubectl get pv -owide --show-labels | grep -e <eg.NAMESPACE>'

watch -d helm list -A

# Test your context by creating configMap

kubectl create configmap my-config --from-literal=key1=config1 --from-literal=key2=config2

kubectl delete configmap my-config

# Watch multiple namespaces

eval 'kubectl --context='{context1,context2}' --namespace='{ns1,ns2}' get pod;'

eval kubectl\ --context={context1,context2}\ --namespace={ns1,ns2}\ get\ pod\;

watch -d eval 'kubectl -n '{default,ingress-nginx}' get sts,deploy,rc,rs,pods,svc,ep,ing,pvc,cm,sa,secret,es,cronjob,job -owide --show-labels;'
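The `eval` one-liners rely on brace expansion to generate one command per context/namespace combination. A sketch of the same fan-out as an explicit loop, which is easier to read and to extend; the function name `for_each_ctx_ns` is hypothetical, and it only prints the commands so they can be reviewed first:

```shell
#!/usr/bin/env bash
# for_each_ctx_ns "CTX1 CTX2" "NS1 NS2" ARGS...
# Print one kubectl command line per context/namespace pair (dry run, nothing is executed).
for_each_ctx_ns() {
  local contexts="$1" namespaces="$2"
  shift 2
  local ctx ns
  for ctx in $contexts; do        # unquoted on purpose: split on whitespace
    for ns in $namespaces; do
      echo kubectl --context="$ctx" --namespace="$ns" "$@"
    done
  done
}
```

Usage: `for_each_ctx_ns "context1 context2" "ns1 ns2" get pod | bash` runs the generated commands one after another.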

# Auth, can-i

kubectl auth can-i delete pods

yes

Get yaml from existing object

kubectl create namespace kiali --dry-run=client -o yaml > ns.yaml

kubectl create namespace kiali --dry-run=client -o yaml | kubectl apply -f -

# Saves version revision in metadata.annotations.kubectl.kubernetes.io/last-applied-configuration={..manifest_json..}

kubectl create ns foo --save-config

# Get a yaml without status information, an almost clean manifest. The '--export' flag is deprecated and was removed in kubectl v1.18.

kubectl -n web get pod <podName> -oyaml --export

Generate pod manifest, the most clean way I know

<syntaxhighlightjs lang=bash>

$ kubectl -n foo run --image=ubuntu:20.04 ubuntu-1 --dry-run=client -oyaml -- bash -c sleep
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null # <- can be deleted
  labels:
    run: ubuntu-1
  name: ubuntu-1
  namespace: foo
spec:
  containers:
  - args:
    - bash
    - -c
    - sleep
    image: ubuntu:20.04
    name: ubuntu-1
    resources: {} # <- can be deleted
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {} # <- can be deleted
</syntaxhighlightjs>

kubectl cp

The pod must be prefixed with its namespace, and the destination must include the file name (<filename>). Recursive copies can be tricky.

kubectl cp [[namespace/]pod:]file/path ./<filename> -c <container_name>
kubectl cp vegeta/vegeta-5847d879d8-p9kqw:plot.html ./plot.html -c vegeta

One liners

Single purpose pods

Note: --generator=deployment/apps.v1 is DEPRECATED and will be removed, use --generator=run-pod/v1 or kubectl create instead.

# Exec to deployment, no need to specify unique pod name

kubectl exec -it deploy/sleep -- curl httpbin:8000/headers

NS=mynamespace; LABEL='app.kubernetes.io/name=myvalue'

kubectl exec -n $NS -it $(kubectl get pod -l "$LABEL" -n $NS -o jsonpath='{.items[0].metadata.name}') -- bash

# Echo server

kubectl run --image=k8s.gcr.io/echoserver:1.4 hello-1 --port=8080

# Single purpose pods

kubectl run --image=bitnami/kubectl:1.21.8 kubectl-1 --rm -it -- get pods

kubectl run --image=appropriate/curl curl-1 --rm -it -- sh

kubectl run --image=ubuntu:18.04 ubuntu-1 --rm -it -- bash

kubectl create --image=ubuntu:20.04 ubuntu-2 --rm -it -- bash

kubectl run --image=busybox:1.31.0 busybox-1 --rm -it -- sh # exec and delete when completed

kubectl run --image=busybox:1.31.0 busybox-2 -- sleep 7200 # sleep, so you can exec

kubectl run --image=alpine alpine-1 --rm -it -- ping -c 1 8.8.8.8

docker run --rm -it --name alpine-1 alpine ping -c 1 8.8.8.8

# Network-multitool | https://github.com/wbitt/Network-MultiTool | Runs as a webserver, so won't complete.

kubectl run --image=wbitt/network-multitool multitool-1

kubectl create --image=wbitt/network-multitool deployment multitool

kubectl exec -it multitool-1 -- /bin/bash

kubectl exec -it deployment/multitool -- /bin/bash

docker run --rm -it --name network-multitool wbitt/network-multitool bash

# Curl

kubectl run test --image=tutum/curl -- sleep 10000

# Deprecation syntax

kubectl run --image=k8s.gcr.io/echoserver:1.4 --generator=run-pod/v1 hello-1 --port=8080 # VALID!

kubectl run --image=k8s.gcr.io/echoserver:1.4 --generator=deployment/apps.v1 hello-1 --port=8080 # <- deprecated

# Errors

# | error: --rm should only be used for attached containers

# | Error: unknown flag: --image # when kubectl create --image

Additional software

# Process and network commands
export DEBIAN_FRONTEND=noninteractive # Ubuntu 20.04
DEBIAN_FRONTEND=noninteractive apt install -yq dnsutils iproute2 iputils-ping iputils-tracepath net-tools netcat procps
# | dnsutils - nslookup, dig
# | iproute2 - ip addr, ss
# | iputils-ping - ping
# | iputils-tracepath - tracepath
# | net-tools - ifconfig
# | netcat - nc
# | procps - ps, top

# Databases
apt install -yq redis-tools
apt install -yq postgresql-client

# AWS cli v1 - Debian
apt install python-pip
pip install awscli

# Network test without ping, nc or telnet
(timeout 1 bash -c '</dev/tcp/127.0.0.1/22 && echo PORT OPEN || echo PORT CLOSED') 2>/dev/null
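The `/dev/tcp` test works because bash opens a TCP connection when a redirection targets `/dev/tcp/HOST/PORT`. A reusable sketch of that check, assuming bash and coreutils `timeout` exist in the container; the function name `check_port` is made up:

```shell
#!/usr/bin/env bash
# check_port HOST PORT: print PORT OPEN or PORT CLOSED, return non-zero when closed.
check_port() {
  local host="$1" port="$2"
  # The redirection alone triggers the connect; timeout bounds filtered or unreachable hosts.
  if timeout 1 bash -c "</dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "PORT OPEN"
  else
    echo "PORT CLOSED"
    return 1
  fi
}
```

Usage: `check_port 127.0.0.1 22`, or inside a pod `kubectl exec -it ubuntu-1 -- bash` and then paste the function.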

- kubectl heredocs

kubectl apply -f <(cat <<EOF
EOF
) --dry-run=server

- One-liners converted to YAML manifests

# kubectl exec -it ubuntu-2 -- bash

kubectl apply -f <(cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  # namespace: default
  name: ubuntu-1
  # annotations:
  #   kubernetes.io/psp: eks.privileged
  # labels:
  #   app: ubuntu
spec:
  containers:
  - command:
    - "sleep"
    - "7200"
    # args:
    # - "bash"
    image: ubuntu:20.04
    imagePullPolicy: IfNotPresent
    name: ubuntu-1
    # securityContext:
    #   privileged: true
    # tty: true
  # dnsPolicy: ClusterFirst
  # enableServiceLinks: true
  restartPolicy: Never
  # serviceAccount : sa1
  # serviceAccountName: sa1
  # nodeSelector:
  #   node.kubernetes.io/lifecycle: spot
EOF
) --dry-run=server
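The heredoc pattern can also be wrapped in a function so the pod name and image become parameters. A minimal sketch; `pod_manifest` is a hypothetical helper and the manifest is trimmed to the uncommented fields of the example above:

```shell
#!/usr/bin/env bash
# pod_manifest NAME IMAGE: print a sleeping single-container Pod manifest on stdout.
pod_manifest() {
  local name="$1" image="$2"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  containers:
  - name: ${name}
    image: ${image}
    imagePullPolicy: IfNotPresent
    command: ["sleep", "7200"]
  restartPolicy: Never
EOF
}
```

Usage: `pod_manifest ubuntu-1 ubuntu:20.04 | kubectl apply --dry-run=server -f -`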

Docker - for a single missing command

If a node is missing a command, you can run it from a Docker image that packages it:

# curl - missing on minikube node that runs CoreOS
minikube -p metrics ip; minikube ssh
docker run appropriate/curl -- http://<NodeIP>:10255/stats/summary # check kubelet-metrics non secure endpoint

kubectl diff

Shows the differences between the current live object and the new dry-run object.

kubectl diff -f webfront-deploy.yaml

diff -u -N /tmp/LIVE-761963756/apps.v1.Deployment.default.webfront-deploy /tmp/MERGED-431884635/apps.v1.Deployment.default.webfront-deploy

--- /tmp/LIVE-761963756/apps.v1.Deployment.default.webfront-deploy 2019-10-13 17:46:59.784000000 +0000

+++ /tmp/MERGED-431884635/apps.v1.Deployment.default.webfront-deploy 2019-10-13 17:46:59.788000000 +0000

@@ -4,7 +4,7 @@

annotations:

deployment.kubernetes.io/revision: "1"

creationTimestamp: "2019-10-13T16:38:43Z"

- generation: 2

+ generation: 3

labels:

app: webfront-deploy

name: webfront-deploy

@@ -14,7 +14,7 @@

uid: ebaf757e-edd7-11e9-8060-0a2fb3cdd79a

spec:

progressDeadlineSeconds: 600

- replicas: 2

+ replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

@@ -29,6 +29,7 @@

creationTimestamp: null

labels:

app: webfront-deploy

+ role: webfront

spec:

containers:

- image: nginx:1.7.8

exit status 1
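The `exit status 1` at the end is expected: kubectl diff exits with 0 when there are no differences, 1 when differences were found, and a value greater than 1 on errors. A sketch of how a script might branch on that; the function name `diff_status` and its messages are made up for illustration:

```shell
#!/usr/bin/env bash
# diff_status CODE: translate a kubectl diff exit code into a short message.
diff_status() {
  case "$1" in
    0) echo "in sync" ;;           # live object matches the manifest
    1) echo "changes pending" ;;   # differences were found
    *) echo "error" ;;             # kubectl or diff itself failed
  esac
}
```

Usage: `kubectl diff -f webfront-deploy.yaml >/dev/null; diff_status $?`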

Kubectl-plugins - Krew plugin manager

Install krew package manager for kubectl plugins, requires K8s v1.12+

(

set -x; cd "$(mktemp -d)" &&

OS="$(uname | tr '[:upper:]' '[:lower:]')" &&

ARCH="$(uname -m | sed -e 's/x86_64/amd64/' -e 's/\(arm\)\(64\)\?.*/\1\2/' -e 's/aarch64$/arm64/')" &&

KREW="krew-${OS}_${ARCH}" &&

curl -fsSLO "https://github.com/kubernetes-sigs/krew/releases/latest/download/${KREW}.tar.gz" &&

tar zxvf "${KREW}.tar.gz" &&

./"${KREW}" install krew

)

# update PATH

[ -d ${HOME}/.krew/bin ] && export PATH="${PATH}:${HOME}/.krew/bin"

# List plugins

kubectl krew search

# Install plugins

kubectl krew install sniff

# Upgrade plugins

kubectl krew upgrade
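The krew install snippet normalizes `uname -m` into the architecture names used in the release file names. The same sed expressions isolated in a function, to see what each machine string maps to; `krew_arch` is a hypothetical name and the `\?` operator assumes GNU sed:

```shell
#!/usr/bin/env bash
# krew_arch [MACHINE]: map a `uname -m` value to the krew release architecture.
krew_arch() {
  echo "${1:-$(uname -m)}" |
    sed -e 's/x86_64/amd64/' \
        -e 's/\(arm\)\(64\)\?.*/\1\2/' \
        -e 's/aarch64$/arm64/'
}
```

For example, `krew_arch x86_64` prints `amd64` and `krew_arch armv7l` prints `arm`.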

- Available kubectl plugins Github

- kubectl subcommands write your own plugin

Install kubectl plugins

kubectl ctx and kubectl ns - change context and set default namespace

kubectl krew install ctx ns

kubectl cssh - SSH into Kubernetes nodes

# Ssh to all nodes, example below for EKS v1.15.11
kubectl cssh -u ec2-user -i /git/secrets/ssh/dev.pem -a "InternalIP"

kubectl deprecations

kubectl deprecations shows all the deprecated objects in a Kubernetes cluster, allowing the operator to verify them before upgrading the cluster. It uses the swagger.json version available in the master branch of the Kubernetes repository (https://github.com/kubernetes/kubernetes/tree/master/api/openapi-spec) as a reference.

kubectl deprecations
StatefulSet found in statefulsets.apps/v1beta1
├─ API REMOVED FROM THE CURRENT VERSION AND SHOULD BE MIGRATED IMMEDIATELY!!
-> OBJECT: myapp namespace: mynamespace1

Pre-upgrade report. The script is specific to EKS.

#!/bin/bash

[[ $# -eq 0 ]] && echo "no args, provide prefix for the file name" && exit 1

PREFIX=$1

TARGET_K8S_VER=v1.16.8

K8Sid=$(kubectl cluster-info | head -1 | cut -d'/' -f3 | cut -d'.' -f1)

kubectl deprecations --k8s-version $TARGET_K8S_VER > $PREFIX-${K8Sid}-$(date +"%Y%m%d-%H%M")-from-$(kubectl version --short | grep Server | cut -f3 -d' ')-to-${TARGET_K8S_VER}.yaml

$ ./kube-deprecations.sh test
$ ls -l
-rw-rw-r-- 1 vagrant vagrant 29356 Jun 29 16:09 test-11111111112222222222333333333344-20200629-1609-from-v1.15.11-eks-af3caf-to-latest.yaml
-rw-rw-r-- 1 vagrant vagrant   852 Jun 30 22:41 test-11111111112222222222333333333344-20200630-2241-from-v1.15.11-eks-af3caf-to-v1.16.8.yaml
-rwxrwxr-x 1 vagrant vagrant   437 Jun 30 22:41 kube-deprecations.sh
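The cluster ID in the file name comes from the first line of `kubectl cluster-info`: the script takes the host part of the API URL and keeps its first DNS label. The same pipeline as a function that can be tried on sample text; `cluster_id` is a hypothetical name and the endpoint in the usage line is invented:

```shell
#!/usr/bin/env bash
# cluster_id: read `kubectl cluster-info` output on stdin, print the first DNS label
# of the API server host (on EKS this is the cluster's endpoint ID).
cluster_id() {
  head -1 |             # first line holds the control plane URL
    cut -d'/' -f3 |     # https://HOST/... -> HOST
    cut -d'.' -f1       # HOST -> first label
}
```

Usage: `kubectl cluster-info | cluster_id`, or as a dry run: `echo "Kubernetes master is running at https://ABCDEF0123.gr7.eu-west-1.eks.amazonaws.com" | cluster_id`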

kubectl df-pv

kubectl df-pv- Show disk usage (like unix df) for persistent volumes

kubectl df-pv
PVC           NAMESPACE  POD                   SIZE         USED       AVAILABLE    PERCENTUSED  IUSED  IFREE    PERCENTIUSED
rdbms-volume  shared1    rdbms-d494fbf4-xrssk  2046640128   252817408  1777045504   12.35        688    130384   0.52
userdata-0    shared2    mft-0                 21003583488  57692160   20929114112  0.27         749    1309971  0.06
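Since the output is a whitespace-separated table, awk can filter it, for instance to list volumes above a usage threshold. A sketch that assumes the column layout shown above (one header line, PERCENTUSED in column 7); `pv_over` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# pv_over LIMIT: read df-pv output on stdin, print "namespace/pvc percent"
# for every volume whose PERCENTUSED is greater than LIMIT.
pv_over() {
  awk -v limit="$1" 'NR > 1 && $7 + 0 > limit + 0 { print $2 "/" $1, $7 }'
}
```

Usage: `kubectl df-pv | pv_over 80`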

kubectl sniff

Start a remote packet capture on pods using tcpdump.

kubectl sniff hello-minikube-7c77b68cff-qbvsd -c hello-minikube

# Flags:

# -c, --container string container (optional)

# -x, --context string kubectl context to work on (optional)

# -f, --filter string tcpdump filter (optional)

# -h, --help help for sniff

# --image string the privileged container image (optional)

# -i, --interface string pod interface to packet capture (optional) (default "any")

# -l, --local-tcpdump-path string local static tcpdump binary path (optional)

# -n, --namespace string namespace (optional) (default "default")

# -o, --output-file string output file path, tcpdump output will be redirect to this file instead of wireshark (optional) ('-' stdout)

# -p, --privileged if specified, ksniff will deploy another pod that have privileges to attach target pod network namespace

# -r, --remote-tcpdump-path string remote static tcpdump binary path (optional) (default "/tmp/static-tcpdump")

# -v, --verbose if specified, ksniff output will include debug information (optional)

The command above will open Wireshark, interesting article to follow:

kubectl neat

Print sanitized Kubernetes manifest.

kubectl get csec dummy-secret -n clustersecret -oyaml | kubectl neat
apiVersion: clustersecret.io/v1
data:
  tls.crt: ***
  tls.key: ***
kind: ClusterSecret
matchNamespace:
- anothernamespace
metadata:
  name: dummy-secret
  namespace: clustersecret

Getting help like manpages kubectl explain

$ kubectl --help
$ kubectl get --help
$ kubectl explain --help
$ kubectl explain pod.spec.containers # kubectl knows cluster version, so gives you correct schema details
$ kubectl explain pods.spec.tolerations --recursive # show only fields
(...)
FIELDS:
   effect             <string>
   key                <string>
   operator           <string>
   tolerationSeconds  <integer>
   value              <string>

- kubectl-commands K8s interactive kubectl command reference

Watch Containers logs

Stern

Note: the https://github.com/wercker/stern repository is inactive (ISSUE-140); the community-maintained repository is now stern/stern.

Log tailing and landscape viewing tool. It connects to kubeapi and streams logs from all matching pods, so using this external tool on clusters with hundreds of containers can put significant load on kubeapi.

It re-uses the kubectl config file to connect to your clusters, so it works out of the box.

- Install

# Govendor - this module manager is required

export GOPATH=$HOME/go # path where go modules can be found, used by 'go get -u <url>'

export PATH=$PATH:$GOPATH/bin # path to the additional 'go' binaries

go get -u github.com/kardianos/govendor # there will be no output

# Stern (official)

mkdir -p $GOPATH/src/github.com/stern # new link: https://github.com/stern/stern

cd $GOPATH/src/github.com/stern

git clone https://github.com/stern/stern.git && cd stern

govendor sync # there will be no output, may take 2 min

go install # no output

# Stern latest, download binary, no need for govendor

REPO=stern/stern

LATEST=$(curl --silent "https://api.github.com/repos/$REPO/releases/latest" | jq -r .tag_name | tr -d v); echo $LATEST

TEMPDIR=$(mktemp -d); FILE=stern_${LATEST}_linux_amd64

curl -L https://github.com/$REPO/releases/download/v${LATEST}/$FILE.tar.gz -o $TEMPDIR/$FILE.tar.gz

sudo tar xzvf $TEMPDIR/$FILE.tar.gz -C /usr/local/bin/ stern
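One detail in the download snippet: `tr -d v` deletes every `v` in the tag, not only the leading one, so a tag that contains another `v` would be mangled. A sketch of a stricter variant using parameter expansion, plus a helper that assembles the release URL; both function names are hypothetical and the version numbers in the usage line are only illustrative:

```shell
#!/usr/bin/env bash
# strip_v TAG: remove a single leading "v" (v1.2.3 -> 1.2.3), leave the rest untouched.
strip_v() {
  echo "${1#v}"
}

# release_url REPO TAG FILE: build the GitHub release asset URL used by the snippets here.
release_url() {
  local repo="$1" tag="$2" file="$3"
  echo "https://github.com/${repo}/releases/download/${tag}/${file}"
}
```

Usage: `release_url stern/stern v1.30.0 "stern_$(strip_v v1.30.0)_linux_amd64.tar.gz"`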

- Usage

# Regex filter (pod-query) to match 2 pods patterns 'proxy' and 'gateway'

stern -n dev --kubeconfig ~/.kube/dev-config \(proxy\|gateway\) # escape to protect regex mod characters

stern -n dev --kubeconfig ~/.kube/dev-config '(proxy|gateway)' # single-quote to protect mod characters

# Template the output

stern --template '{{.Message}} ({{.NodeName}}/{{.Namespace}}/{{.PodName}}/{{.ContainerName}}){{"\n"}}' .
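The pod-query is a regular expression, so several name patterns are combined with `|` inside a group. A tiny sketch that builds such a query from a list of names, which avoids the quoting and escaping by hand; `pod_query` is a made-up helper:

```shell
#!/usr/bin/env bash
# pod_query NAME...: join the arguments into a regex alternation, e.g. (proxy|gateway).
pod_query() {
  local IFS='|'   # "$*" joins the arguments with the first character of IFS
  echo "($*)"
}
```

Usage: `stern -n dev "$(pod_query proxy gateway)"`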

- Help

$ stern

Tail multiple pods and containers from Kubernetes

Usage:

stern pod-query [flags]

Flags:

-A, --all-namespaces If present, tail across all namespaces. A specific namespace is ignored even if specified with --namespace.

--color string Color output. Can be 'always', 'never', or 'auto' (default "auto")

--completion string Outputs stern command-line completion code for the specified shell. Can be 'bash' or 'zsh'

-c, --container string Container name when multiple containers in pod (default ".*")

--container-state string If present, tail containers with status in running, waiting or terminated. Default to running. (default "running")

--context string Kubernetes context to use. Default to current context configured in kubeconfig.

-e, --exclude strings Regex of log lines to exclude

-E, --exclude-container string Exclude a Container name

-h, --help help for stern

-i, --include strings Regex of log lines to include

--init-containers Include or exclude init containers (default true)

--kubeconfig string Path to kubeconfig file to use

-n, --namespace string Kubernetes namespace to use. Default to namespace configured in Kubernetes context.

-o, --output string Specify predefined template. Currently support: [default, raw, json] (default "default")

-l, --selector string Selector (label query) to filter on. If present, default to ".*" for the pod-query.

-s, --since duration Return logs newer than a relative duration like 5s, 2m, or 3h. Defaults to 48h.

--tail int The number of lines from the end of the logs to show. Defaults to -1, showing all logs. (default -1)

--template string Template to use for log lines, leave empty to use --output flag

-t, --timestamps Print timestamps

-v, --version Print the version and exit

- Usage

stern <pod>
stern --tail 1 busybox -n <namespace> # this is a regex that matches busybox1|2|etc

kubetail

Bash script that enables you to aggregate (tail/follow) logs from multiple pods into one stream. This is the same as running kubectl logs -f but for multiple pods.

Lens | Kubernetes IDE

Kubernetes client, this is not a dashboard that needs installing on a cluster. Similar to KUI but much more powerful.

# Deb
curl -LO https://api.k8slens.dev/binaries/Lens-5.4.1-latest.20220304.1.amd64.deb
sudo apt-get install ./Lens-5.4.1-latest.20220304.1.amd64.deb

# Snap
snap list
sudo snap install kontena-lens --classic # U16.04+, tested on U20.04

# Install from a .snap file
mkdir -p ~/Downloads/kontena-lens && cd $_
snap download kontena-lens
sudo snap ack kontena-lens_152.assert # add an assertion to the system assertion database
sudo snap install kontena-lens_152.snap --classic # --dangerous if you do not have the assert file

# download snap from https://k8slens.dev/
curl -LO https://api.k8slens.dev/binaries/Lens-5.3.4-latest.20220120.1.amd64.snap
sudo snap install Lens-5.3.4-latest.20220120.1.amd64.snap --classic --dangerous

# Info
$ snap info kontena-lens_152.assert
name:      kontena-lens
summary:   Lens - The Kubernetes IDE
publisher: Mirantis Inc (jakolehm)
store-url: https://snapcraft.io/kontena-lens
contact:   info@k8slens.dev
license:   Proprietary
description: |
  Lens is the most powerful IDE for people who need to deal with Kubernetes clusters on a daily
  basis. Ensure your clusters are properly setup and configured. Enjoy increased visibility, real
  time statistics, log streams and hands-on troubleshooting capabilities. With Lens, you can work
  with your clusters more easily and fast, radically improving productivity and the speed of
  business.
snap-id: Dek6y5mTEPxhySFKPB4Z0WVi5EPS9osS
channels:
  latest/stable:    4.0.7      2021-01-20 (152) 107MB classic
  latest/candidate: 4.0.7      2021-01-20 (152) 107MB classic
  latest/beta:      4.0.7      2021-01-20 (152) 107MB classic
  latest/edge:      4.1.0-rc.1 2021-02-11 (157) 108MB classic

$ snap info kontena-lens_152.snap
path:       "kontena-lens_152.snap"
name:       kontena-lens
summary:    Lens
version:    4.0.7 classic
build-date: 24 days ago, at 16:31 GMT
license:    unset
description: |
  Lens - The Kubernetes IDE
commands:
  - kontena-lens

OpenLens | Kubernetes IDE

Download binary from https://github.com/MuhammedKalkan/OpenLens

Install on Ubuntu

#!/bin/bash

SUDO=''

if (( $EUID != 0 )); then

SUDO='sudo'

fi

REPO=MuhammedKalkan/OpenLens

LATEST=$(curl --silent "https://api.github.com/repos/$REPO/releases/latest" | jq -r .tag_name | tr -d v); echo $LATEST

TEMPDIR=$(mktemp -d); FILE=OpenLens-${LATEST}.amd64.deb

curl -L https://github.com/${REPO}/releases/download/v${LATEST}/$FILE -o $TEMPDIR/$FILE

$SUDO dpkg -i $TEMPDIR/$FILE

$SUDO apt-get install -y --fix-broken

Build your own - gist

#!/bin/bash

install_deps_windows() {

echo "Installing Build Dependencies (Windows)..."

choco install -y make visualstudio2019buildtools visualstudio2019-workload-vctools

}

install_deps_darwin() {

echo "Installing Build Dependencies (Darwin)..."

xcode-select --install

if ! hash make 2>/dev/null; then

if ! hash brew 2>/dev/null; then

echo "Installing Homebrew..."

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

fi

echo "Installing make via Homebrew..."

brew install make

fi

}

install_deps_posix() {

echo "Installing Build Dependencies (Posix)..."

sudo apt-get install -y make g++ curl

}

install_darwin() {

echo "Killing OpenLens (if open)..."

killall OpenLens

echo "Installing OpenLens (Darwin)..."

rm -Rf "$HOME/Applications/OpenLens.app"

arch="mac"

if [[ "$(uname -m)" == "arm64" ]]; then

arch="mac-arm64" # credit @teefax

fi

cp -Rfp "$tempdir/lens/dist/$arch/OpenLens.app" "$HOME/Applications/"

rm -Rf "$tempdir"

print_alias_message

}

install_posix() {

echo "Installing OpenLens (Posix)..."

cd "$tempdir"

sudo dpkg -i "$(ls -Art $tempdir/lens/dist/*.deb | tail -n 1)"

rm -Rf "$tempdir"

print_alias_message

}

install_windows() {

echo "Installing OpenLens (Windows)..."

"$(/bin/ls -Art $tempdir/lens/dist/OpenLens*.exe | tail -n 1)"

rm -Rf "$tempdir"

print_alias_message

}

install_nvm() {

if [ -z "$NVM_DIR" ]; then

echo "Installing NVM..."

NVM_VERSION=$(curl -s https://api.github.com/repos/nvm-sh/nvm/releases/latest | sed -En 's/ "tag_name": "(.+)",/\1/p')

curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/$NVM_VERSION/install.sh | bash

NVM_DIR="$HOME/.nvm"

fi

[ -s "$NVM_DIR/nvm.sh" ] && \. "$NVM_DIR/nvm.sh"

}

build_openlens() {

tempdir=$(mktemp -d)

cd "$tempdir"

if [ -z "$1" ]; then

echo "Checking GitHub API for latests tag..."

OPENLENS_VERSION=$(curl -s https://api.github.com/repos/lensapp/lens/releases/latest | sed -En 's/ "tag_name": "(.+)",/\1/p')

else

if [[ "$1" == v* ]]; then

OPENLENS_VERSION="$1"

else

OPENLENS_VERSION="v$1"

fi

echo "Using supplied tag $OPENLENS_VERSION"

fi

if [ -z $OPENLENS_VERSION ]; then

echo "Failed to get valid version tag. Aborting!"

exit 1

fi

curl -L https://github.com/lensapp/lens/archive/refs/tags/$OPENLENS_VERSION.tar.gz | tar xvz

mv lens-* lens

cd lens

NVM_CURRENT=$(nvm current)

nvm install 16

nvm use 16

npm install -g yarn

make build

nvm use "$NVM_CURRENT"

}

print_alias_message() {

if [ "$(type -t install_openlens)" != 'alias' ]; then

printf "It is recommended to add an alias to your shell profile to run this script again.\n"

printf "alias install_openlens=\"curl -o- https://gist.githubusercontent.com/jslay88/bf654c23eaaaed443bb8e8b41d02b2a9/raw/install_openlens.sh | bash\"\n\n"

fi

}

if [[ "$(uname)" == "Linux" ]]; then

install_deps_posix

install_nvm

build_openlens "$1"

install_posix

elif [[ "$(uname)" == "Darwin" ]]; then

install_deps_darwin

install_nvm

build_openlens "$1"

install_darwin

else

install_deps_windows

install_nvm

build_openlens "$1"

install_windows

fi

echo "Done!"

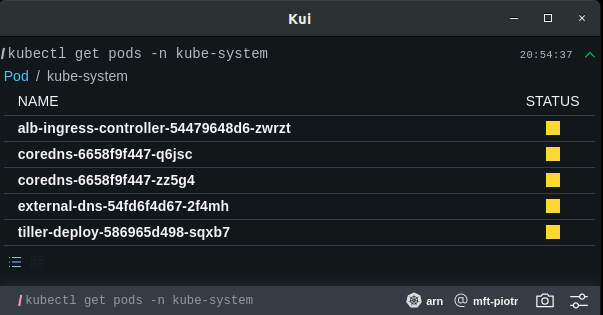

kui terminal

kui is a terminal with visualizations, provided by IBM

Install using the convenient install script into /opt/Kui-linux-x64/ and symlink the Kui binary to /usr/local/bin/kui

REPO=kubernetes-sigs/kui
LATEST=$(curl --silent "https://api.github.com/repos/$REPO/releases/latest" | jq -r .tag_name); echo $LATEST
TEMPDIR=$(mktemp -d); FILE=Kui-linux-x64.zip
curl -L https://github.com/$REPO/releases/download/$LATEST/Kui-linux-x64.zip -o $TEMPDIR/$FILE
sudo mkdir -p /opt/Kui-linux-x64
sudo unzip $TEMPDIR/$FILE -d /opt/

# Run
$> /opt/Kui-linux-x64/Kui

Run Kui as a kubectl plugin (https://kubernetes.io/docs/tasks/extend-kubectl/kubectl-plugins/)

export PATH=$PATH:/opt/Kui-linux-x64/ # make sure Kui libs are in environment PATH
kubectl kui get pods -A # -> a pop up window will show up

$ kubectl plugin list
The following compatible plugins are available:
/opt/Kui-linux-x64/kubectl-kui

- Resources

- kui/wiki Github

popeye

Popeye is a utility that scans a live Kubernetes cluster and reports potential issues with deployed resources and configurations.

# Install

REPO=derailed/popeye

RELEASE=popeye_Linux_x86_64.tar.gz

VERSION=$(curl --silent "https://api.github.com/repos/${REPO}/releases/latest" | jq -r .tag_name); echo $VERSION # latest

wget https://github.com/${REPO}/releases/download/${VERSION}/${RELEASE}

tar xf ${RELEASE} popeye --remove-files

sudo install popeye /usr/local/bin

# Usage

popeye # --out html

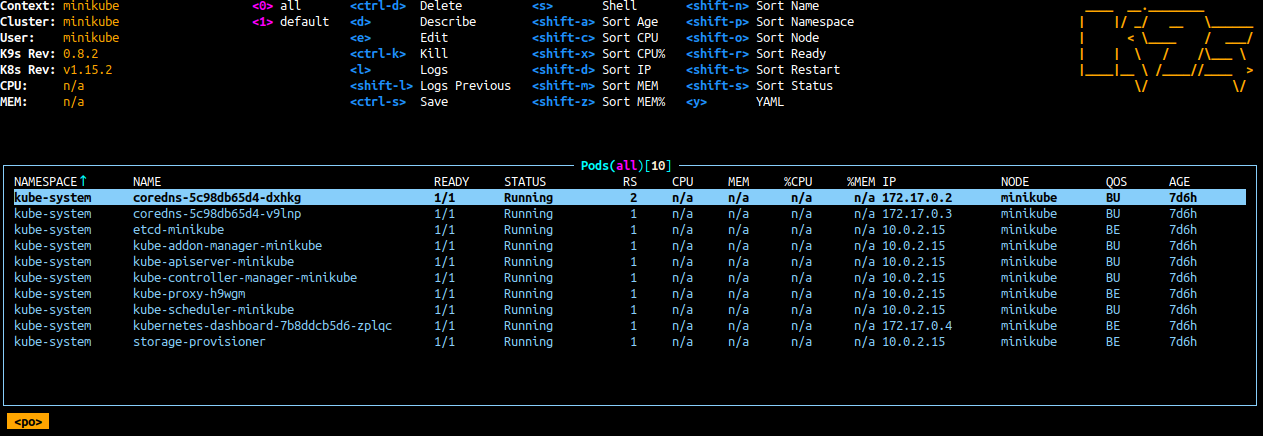

k9s

K9s provides a terminal UI to interact with Kubernetes clusters.

- Install

LATEST=$(curl --silent "https://api.github.com/repos/derailed/k9s/releases/latest" | jq -r .tag_name); echo $LATEST
wget https://github.com/derailed/k9s/releases/download/$LATEST/k9s_Linux_amd64.tar.gz
tar xf k9s_Linux_amd64.tar.gz --remove-files k9s
sudo install k9s /usr/local/bin

- Usage

?       help
:ns     select namespace
:nodes  show nodes

kubecolor

Kubecolor is a bash function that colorizes the output of kubectl get events -w.

# This script is not working

git clone https://github.com/droctothorpe/kubecolor.git ~/.kubecolor

echo "source ~/.kubecolor/kubecolor.bash" >> ~/.bash_profile # (or ~/.bashrc)

source ~/.bash_profile # (or ~/.bashrc)

# You can source this function instead

kube-events() {

kubectl get events --all-namespaces --watch \

-o 'go-template={{.lastTimestamp}} ^ {{.involvedObject.kind}} ^ {{.message}} ^ ({{.involvedObject.name}}){{"\n"}}' \

| awk -F^ \

-v black=$(tput setaf 0) \

-v red=$(tput setaf 1) \

-v green=$(tput setaf 2) \

-v yellow=$(tput setaf 3) \

-v blue=$(tput setaf 4) \

-v magenta=$(tput setaf 5) \

-v cyan=$(tput setaf 6) \

-v white=$(tput setaf 7) \

'{ $1=blue $1; $2=green $2; $3=white $3; } 1'

}

# Usage

kube-events

kubectl get events -A -w

kubectl get events --all-namespaces --watch -o 'go-template={{.lastTimestamp}} {{.involvedObject.kind}} {{.message}} ({{.involvedObject.name}}){{"\n"}}'
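The colouring in `kube-events` is plain awk: the go-template separates fields with `^`, and awk prefixes each field with a terminal colour code. A sketch of that step on its own so it can be tried without a cluster. It uses fixed ANSI escape codes instead of `tput` and splits on the regex ` \^ ` (an escaped caret with its surrounding spaces), both of which are assumptions that differ slightly from the function above; `colorize_events` is a hypothetical name:

```shell
#!/usr/bin/env bash
# colorize_events: read "timestamp ^ kind ^ message ..." lines on stdin and colour
# the first field blue, the second green, and reset the colour before the third.
colorize_events() {
  local blue green reset
  blue=$(printf '\033[34m')
  green=$(printf '\033[32m')
  reset=$(printf '\033[0m')
  awk -F ' \\^ ' -v blue="$blue" -v green="$green" -v reset="$reset" \
    '{ $1 = blue $1; $2 = green $2; $3 = reset $3 } 1'
}
```

Usage: `echo "2020-06-29T16:09:00Z ^ Pod ^ Started container ^ (hello-1)" | colorize_events`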

argo-rollouts

Argo Rollouts introduces a new custom resource called a Rollout to provide additional deployment strategies such as Blue Green and Canary to Kubernetes.

murre

Murre is an on-demand, scalable source of container resource metrics for K8s. No dependencies needed, no install needed on a cluster.

goenv install 1.18 # although 1.19 is the latest and the install completes successfully, it won't create the binary
go install github.com/groundcover-com/murre@latest
murre --sortby-cpu-util
murre --sortby-cpu
murre --pod kong-51xst
murre --namespace dev

Kubernetes scripts

These scripts help you troubleshoot and check the health of the cluster and its deployments. They gather the following information:

- Cluster resources

- Cluster Nodes status

- Nodes Conditions

- Pods per Nodes

- Worker Nodes Per Availability Zones

- Cluster Node Types

- Pods not in running or completed status

- Top Pods according to Memory Limits

- Top Pods according to CPU Limits

- Number of Pods

- Pods Status

- Max Pods restart count

- Readiness of Pods

- Pods Average Utilization

- Top Pods according to CPU Utilization

- Top Pods according to Memory Utilization

- Pods Distribution per Nodes

- Node Distribution per Availability Zone

- Deployments without correct resources (Memory or CPU)

- Deployments without Limits

- Deployments without Application configured in Labels

Multi-node clusters

Note: Kubernetes/minikube can do this natively

Build multi node cluster for development.

On a single machine

- kube-spawn tool for creating a multi-node Kubernetes (>= 1.8) cluster on a single Linux machine

- kubernetes-dind-cluster Kubernetes multi-node cluster for developer of Kubernetes that launches in 36 seconds

- kind is a tool for running local Kubernetes clusters using Docker container “nodes”

- Vagrant full documentation in this article

Full cluster provisioning

- kubespray Deploy a Production Ready Kubernetes Cluster

- kops get a production grade Kubernetes cluster up and running

crictl

CLI and validation tools for Kubelet Container Runtime Interface (CRI). Used for debugging Kubernetes nodes with crictl. crictl requires a Linux operating system with a CRI runtime. Containers created with this tool on a K8s cluster will eventually be deleted by Kubernetes.

kubediff show diff code vs what is deployed

Kubediff is a tool for Kubernetes to show you the differences between your running configuration and your version controlled configuration.

Mozilla SOPS - secret manager

- SOPS Mozilla SOPS: Secrets OPerationS, sops is an editor of encrypted files that supports YAML, JSON, ENV, INI and BINARY formats and encrypts with AWS KMS, GCP KMS, Azure Key Vault and PGP

Install

LATEST=$(curl --silent "https://api.github.com/repos/getsops/sops/releases/latest" | jq -r .tag_name); echo $LATEST

TEMPDIR=$(mktemp -d)

curl -sL https://github.com/getsops/sops/releases/download/${LATEST}/sops-${LATEST}.linux.amd64 -o $TEMPDIR/sops

sudo install $TEMPDIR/sops /usr/local/bin/sops

Kompose (Kubernetes + Compose)

kompose is a tool to convert docker-compose files to Kubernetes manifests. kompose takes a Docker Compose file and translates it into Kubernetes resources.

# Linux
curl -L https://github.com/kubernetes/kompose/releases/download/v1.21.0/kompose-linux-amd64 -o kompose
sudo install ./kompose /usr/local/bin/kompose # option 1
chmod +x kompose; sudo mv ./kompose /usr/local/bin/kompose # option 2

# Completion
source <(kompose completion bash)

# Convert
kompose convert -f docker-compose-mac.yaml
WARN Restart policy 'unless-stopped' in service mysql is not supported, convert it to 'always'
INFO Kubernetes file "mysql-service.yaml" created
INFO Kubernetes file "cluster-dir-persistentvolumeclaim.yaml" created
INFO Kubernetes file "mysql-deployment.yaml" created

- References

- kompose Github

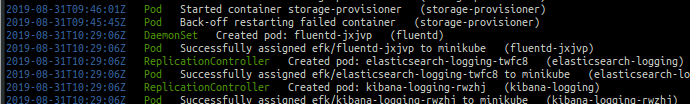

kube-iptables-tailer - iptables dropped packets logger

Shows packets dropped by iptables, which is useful when working with Network Policies to identify pods trying to talk to disallowed destinations.

This project deploys kube-iptables-tailer daemonset that watches iptables log /var/log/iptables.log on each k8s-node mounted as hostPath volume. It filters the log for custom prefix, set in daemonset.spec.template.spec.containers.env and sends to cluster events.

env:
  - name: "IPTABLES_LOG_PATH"
    value: "/var/log/iptables.log"
  - name: "IPTABLES_LOG_PREFIX"
    # log prefix defined in your iptables chains
    value: "my-prefix:"

$ iptables -A CHAIN_NAME -j LOG --log-prefix "EXAMPLE_LOG_PREFIX: "

Example output, when packet dropped

$ kubectl describe pods --namespace=YOUR_NAMESPACE
...
Events:
FirstSeen  LastSeen  Count  From                  Type     Reason      Message
---------  --------  -----  ----                  ----     ------      -------
1h         5s        10     kube-iptables-tailer  Warning  PacketDrop  Packet dropped when receiving traffic from example-service-2 (IP: 22.222.22.222).
3h         2m        5      kube-iptables-tailer  Warning  PacketDrop  Packet dropped when sending traffic to example-service-1 (IP: 11.111.11.111).

ksniff - pipe a pod traffic to Wireshark or Tshark

A kubectl plugin that utilizes tcpdump and Wireshark to start a remote capture on any pod

flagger - canary deployments

Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio, Linkerd, App Mesh, NGINX, Skipper, Contour or Gloo routing for traffic shifting and Prometheus metrics for canary analysis.

Kubeval

Kubeval is used to validate one or more Kubernetes configuration files, and is often used locally as part of a development workflow as well as in CI pipelines.

# Install
wget https://github.com/instrumenta/kubeval/releases/latest/download/kubeval-linux-amd64.tar.gz
tar xf kubeval-linux-amd64.tar.gz
sudo cp kubeval /usr/local/bin

# Usage
$> kubeval my-invalid-rc.yaml
WARN - my-invalid-rc.yaml contains an invalid ReplicationController - spec.replicas: Invalid type. Expected: integer, given: string
$> echo $?
1

kubeconform - improved Kubeval

Kubeconform is a Kubernetes manifests validation tool.

# Install
wget https://github.com/yannh/kubeconform/releases/latest/download/kubeconform-linux-amd64.tar.gz
tar xf kubeconform-linux-amd64.tar.gz
sudo install kubeconform /usr/local/bin

# Show version
kubeconform -v
v0.4.14

Observability

KUR8 - like Elastic.io EFK dashboards

Note: I deployed v1.0.0 to the monitoring namespace alongside the existing service kube-prometheus-stack-prometheus:9090, but the application kept crashing.

CPU Load pods

# Repeat the 'yes' command once per CPU
cat /proc/cpuinfo | grep processor | wc -l # count processors
yes > /dev/null &
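Starting one `yes` per CPU by hand leaves background processes to clean up afterwards. A sketch that starts the workers for a fixed time and waits for them, assuming coreutils `timeout` and `nproc` are present in the image; `burn_cpu` is a hypothetical name:

```shell
#!/usr/bin/env bash
# burn_cpu SECONDS [WORKERS]: run WORKERS busy loops (default: one per CPU) for SECONDS.
burn_cpu() {
  local secs="$1" n="${2:-$(nproc)}" i
  for (( i = 0; i < n; i++ )); do
    timeout "$secs" yes > /dev/null &   # each worker is killed by timeout
  done
  wait                                  # block until every worker has stopped
  echo "ran $n worker(s) for ${secs}s"
}
```

Usage: `burn_cpu 60` inside the pod, while watching `kubectl top pods` from another terminal.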

References

- kubectl overview - resources types, Namespaced, kinds K8s docs

- kubetail Bash script that enables you to aggregate (tail/follow) logs from multiple pods into one stream. This is the same as running "kubectl logs -f " but for multiple pods.

- kubectx kubens Kubernetes config switches for context and setting up default namespace

- manages different ver kubectl blog

- kubectl Kubectl Conventions

Cheatsheets

- cheatsheet-kubernetes-A4 by dennyzhang

Other projects