Kubernetes/Ingress controller

The Ingress resource type was introduced in Kubernetes version 1.1. A Kubernetes cluster must have an Ingress controller deployed for Ingress resources to have any effect. What is an Ingress controller? It is deployed as a Docker container on top of Kubernetes. Its Docker image contains a load balancer such as nginx or HAProxy and a controller daemon. The controller daemon receives the desired Ingress configuration from Kubernetes, generates an nginx or HAProxy configuration file, and reloads the load balancer process for the changes to take effect. In other words, an Ingress controller is a load balancer managed by Kubernetes.
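To make the "generate a configuration file" step concrete, here is a minimal Python sketch of turning host rules into nginx `server` blocks. The input shape, the function name `render_nginx_config`, and the `upstream` placeholder are assumptions made for illustration; a real controller works from a much richer template.

```python
def render_nginx_config(rules):
    """Render one minimal nginx 'server' block per host rule.

    `rules` is a simplified, hypothetical stand-in for Ingress spec.rules:
    [{"host": str, "paths": [{"path": str, "upstream": str}]}]
    """
    lines = []
    for rule in rules:
        lines.append("server {")
        lines.append("    listen 80;")
        lines.append(f"    server_name {rule['host']};")
        for p in rule["paths"]:
            # One 'location' per path; 'upstream' is a placeholder target.
            lines.append(f"    location {p['path']} {{")
            lines.append(f"        proxy_pass http://{p['upstream']};")
            lines.append("    }")
        lines.append("}")
    return "\n".join(lines) + "\n"


rules = [{"host": "echo-1.ingress.k8s.acme.cloud",
          "paths": [{"path": "/", "upstream": "http-svc:80"}]}]
config = render_nginx_config(rules)
print(config)
```

The controller daemon would write such a file to disk and then ask the load balancer to reload it.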

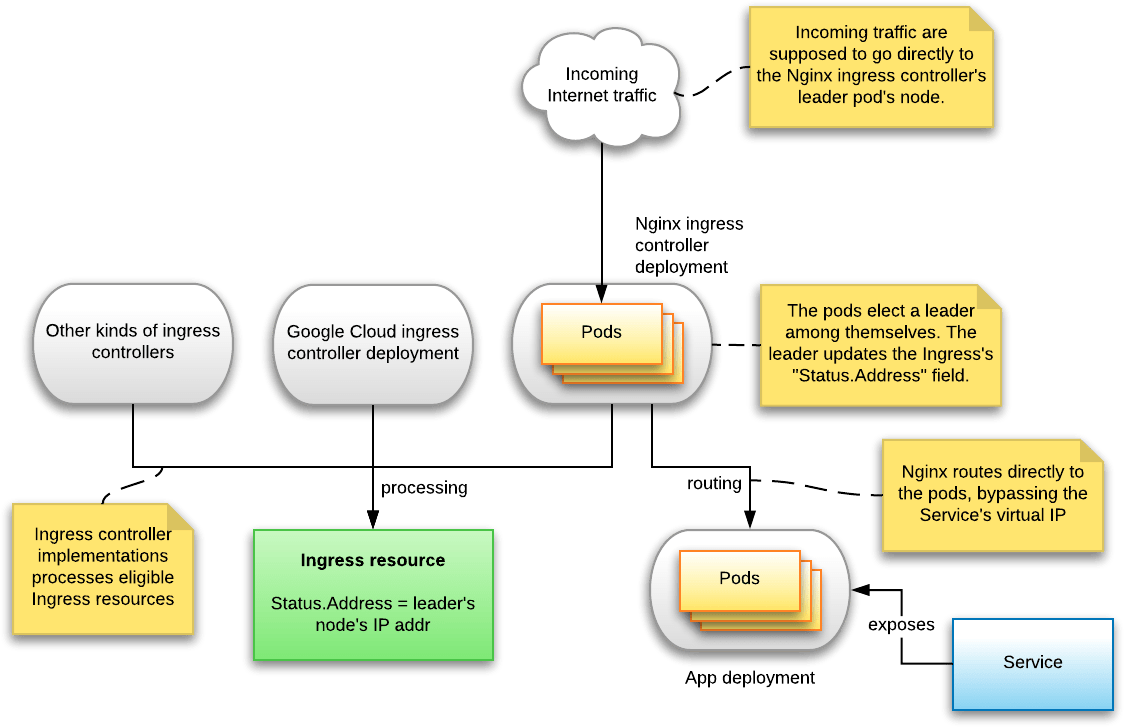

Ingress resources don't do anything by themselves: they are processed by ingress controllers, which vanilla Kubernetes does not provide by default.

- Ingress vs LoadBalancer service

The LoadBalancer service and the Ingress differ in how traffic routing is realized. With a LoadBalancer service, traffic that enters through the external load balancer is forwarded to kube-proxy, which in turn forwards it to the selected pods. The Ingress load balancer forwards traffic straight to the selected pods, which is more efficient.

Ingress object

The example below was taken from Minikube.

    $ stern nginx --all-namespaces
    kube-system ingress-nginx-controller-xx controller ------------------------------------------------------------
    kube-system ingress-nginx-controller-xx controller NGINX Ingress controller
    kube-system ingress-nginx-controller-xx controller   Release:       0.32.0
    kube-system ingress-nginx-controller-xx controller   Build:         git-446845114
    kube-system ingress-nginx-controller-xx controller   Repository:    https://github.com/kubernetes/ingress-nginx
    kube-system ingress-nginx-controller-xx controller   nginx version: nginx/1.17.10
    kube-system ingress-nginx-controller-xx controller ------------------------------------------------------------

    $ kubectl get ingresses ingress-with-auth -oyaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx # useful annotation
        nginx.ingress.kubernetes.io/auth-realm: Authentication Required - foo
        nginx.ingress.kubernetes.io/auth-secret: basic-auth
        nginx.ingress.kubernetes.io/auth-type: basic
      name: ingress-with-auth
      namespace: default
    spec:
      # backend:            # <Object> default backend
      #   serviceName:      # <string>
      #   servicePort:      # <string>
      rules:                # <[]object> list of host rules, if not specified sent to default backend
      - host: echo-1.ingress.k8s.acme.cloud # fqdn of a network host, IPs are not allowed
        http:               # `:` is not respected because ports are not allowed
          paths:            # currently the port of an Ingress is http:80 and :443 for https
          - backend:
              serviceName: http-svc
              servicePort: 80
            path: /
      # tls:                # <[]Object> TLS configuration, currently supports a single TLS port:443
      # - secretName: tls-certificate # secret used to terminate SSL traffic on 443
      #   hosts:            # optional rules.host is used if unspecified; must match names in tlsSecret
      #   - echo-1.ingress.k8s.acme.cloud
    status:
      loadBalancer:
        ingress:
        - ip: 172.17.0.2    # Kubernetes API server IP
                            # public IP address on which this Ingress is available
                            # this is nginx-controller pod (leader) node IP
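The way `rules`, `host`, `paths` and the default backend fit together can be sketched in Python as below. This is only an illustration under simplifying assumptions: it uses plain longest-prefix path matching, whereas real controllers apply their own matching rules, and the function name `select_backend` is hypothetical.

```python
def select_backend(rules, host, path, default_backend=None):
    """Return (serviceName, servicePort) for a request, else the default backend."""
    for rule in rules:
        # A rule without a host applies to every host.
        if rule.get("host") not in (None, host):
            continue
        candidates = [p for p in rule["http"]["paths"]
                      if path.startswith(p.get("path", "/"))]
        if candidates:
            # Prefer the longest matching path prefix.
            best = max(candidates, key=lambda p: len(p.get("path", "/")))
            backend = best["backend"]
            return backend["serviceName"], backend["servicePort"]
    return default_backend


rules = [{
    "host": "echo-1.ingress.k8s.acme.cloud",
    "http": {"paths": [{"path": "/",
                        "backend": {"serviceName": "http-svc", "servicePort": 80}}]},
}]
print(select_backend(rules, "echo-1.ingress.k8s.acme.cloud", "/anything"))
print(select_backend(rules, "unknown.example", "/"))
```

A request for a host that matches no rule falls through to the default backend, which mirrors the comment on `rules` in the manifest.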

The Status.Address update is performed by a background goroutine that runs once a minute, queries the IP address of the node on which the Nginx ingress controller is running, and sets Status.Address to that value.

A simple /etc/hosts entry lets your local machine reach an application exposed via Ingress:

172.17.0.2 echo-1.ingress.k8s.acme.cloud
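The /etc/hosts entry works because the controller picks the rule from the HTTP `Host` header. As an alternative sketch, the same effect can be had without touching /etc/hosts by connecting to the IP and setting the header explicitly. The helper name `build_request` is an assumption; the snippet only builds the request, since actually sending it needs a reachable cluster.

```python
import urllib.request


def build_request(ingress_ip, host, path="/"):
    """Build a request aimed at the Ingress IP that carries the virtual host name."""
    req = urllib.request.Request(f"http://{ingress_ip}{path}")
    # An explicit Host header overrides the one derived from the URL.
    req.add_header("Host", host)
    return req


req = build_request("172.17.0.2", "echo-1.ingress.k8s.acme.cloud")
print(req.full_url, req.get_header("Host"))

# Sending it requires access to the node IP, for example:
# with urllib.request.urlopen(req, timeout=5) as resp:
#     print(resp.status)
```

Because the example Ingress enables basic authentication, an unauthenticated request would presumably be rejected until credentials are supplied.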

An interesting fact is that the Ingress IP is actually the IP of the node that runs the leader controller pod:

    $ kubectl get nodes -owide
    NAME             STATUS   ROLES    AGE    VERSION    INTERNAL-IP   EXTERNAL-IP   OS-IMAGE       KERNEL-VERSION     CONTAINER-RUNTIME
    minikube-v1.15   Ready    master   172m   v1.15.11   172.17.0.2    <none>        Ubuntu 19.10   5.3.0-59-generic   docker://19.3.2

    $ minikube ssh # ssh to Kubernetes node
    docker@minikube-v1:~$ ip a | grep "inet "
        inet 127.0.0.1/8 scope host lo
        inet 172.18.0.1/16 brd 172.18.255.255 scope global docker0
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0 # <- K8s node ip

The ingress-nginx-controller pod exclusively binds ports :80 and :443 on the node it is running on.

    # $ minikube addons enable ingress # Running
    $ sudo ss -lntp | tail +1 | sort -k4
    State   Recv-Q  Send-Q  Local Address:Port  Peer Address:Port
    LISTEN  0       128     *:10250             *:*                users:(("kubelet",pid=5342,fd=28))
    LISTEN  0       128     *:10251             *:*                users:(("kube-scheduler",pid=6070,fd=3))
    LISTEN  0       128     *:10252             *:*                users:(("kube-controller",pid=6023,fd=3))
    LISTEN  0       128     *:10256             *:*                users:(("kube-proxy",pid=6679,fd=10))
    LISTEN  0       128     *:2376              *:*                users:(("dockerd",pid=88,fd=6))
    LISTEN  0       128     *:30012             *:*                users:(("kube-proxy",pid=6679,fd=14))
    LISTEN  0       128     *:30932             *:*                users:(("kube-proxy",pid=6679,fd=13))
    LISTEN  0       128     *:32392             *:*                users:(("kube-proxy",pid=6679,fd=11))
    LISTEN  0       128     *:443               *:*                users:(("docker-proxy",pid=8486,fd=4)) # <- added by nginx-controller
    LISTEN  0       128     *:80                *:*                users:(("docker-proxy",pid=8505,fd=4)) # <- added by nginx-controller
    LISTEN  0       128     *:8443              *:*                users:(("kube-apiserver",pid=5917,fd=3))
    LISTEN  0       128     [::]:111            [::]:*             users:(("rpcbind",pid=77,fd=6),("systemd",pid=1,fd=47))
    LISTEN  0       128     [::]:22             [::]:*             users:(("sshd",pid=89,fd=4))
    LISTEN  0       128     [::]:37289          [::]:*             users:(("rpc.statd",pid=3050,fd=11))
    LISTEN  0       128     0.0.0.0:111         0.0.0.0:*          users:(("rpcbind",pid=77,fd=4),("systemd",pid=1,fd=45))
    LISTEN  0       128     0.0.0.0:22          0.0.0.0:*          users:(("sshd",pid=89,fd=3))
    LISTEN  0       128     0.0.0.0:39729       0.0.0.0:*          users:(("rpc.statd",pid=3050,fd=9))
    LISTEN  0       128     127.0.0.1:10248     0.0.0.0:*          users:(("kubelet",pid=5342,fd=21))
    LISTEN  0       128     127.0.0.1:10257     0.0.0.0:*          users:(("kube-controller",pid=6023,fd=5))
    LISTEN  0       128     127.0.0.1:10259     0.0.0.0:*          users:(("kube-scheduler",pid=6070,fd=5))
    LISTEN  0       128     127.0.0.1:2379      0.0.0.0:*          users:(("etcd",pid=5867,fd=5))
    LISTEN  0       128     127.0.0.1:2381      0.0.0.0:*          users:(("etcd",pid=5867,fd=10))
    LISTEN  0       128     127.0.0.1:38999     0.0.0.0:*          users:(("kubelet",pid=5342,fd=9))
    LISTEN  0       128     127.0.0.1:40073     0.0.0.0:*          users:(("containerd",pid=87,fd=8))
    LISTEN  0       128     172.17.0.2:10249    0.0.0.0:*          users:(("kube-proxy",pid=6679,fd=12))
    LISTEN  0       128     172.17.0.2:2379     0.0.0.0:*          users:(("etcd",pid=5867,fd=6))
    LISTEN  0       128     172.17.0.2:2380     0.0.0.0:*          users:(("etcd",pid=5867,fd=3))
    LISTEN  0       128     172.17.0.2:2381     0.0.0.0:*          users:(("etcd",pid=5867,fd=12))
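Picking the two relevant listeners out of such a listing by eye is tedious. The following Python sketch filters `ss -lntp` style output for given ports. The helper name `listeners_on` and the two sample lines are illustrative; the parsing assumes the whitespace-separated column layout shown in this listing.

```python
def listeners_on(ss_output, ports):
    """Return {port: process_name} for LISTEN sockets bound to the given ports."""
    found = {}
    for line in ss_output.splitlines():
        fields = line.split()
        if len(fields) < 6 or fields[0] != "LISTEN":
            continue  # header, blank or unexpected line
        local = fields[3]                      # e.g. "*:443" or "[::]:22"
        port = int(local.rsplit(":", 1)[1])
        if port in ports:
            # users:(("docker-proxy",pid=8486,fd=4)) -> docker-proxy
            parts = fields[5].split('"')
            found[port] = parts[1] if len(parts) > 1 else "?"
    return found


sample = """State Recv-Q Send-Q Local Address:Port Peer Address:Port
LISTEN 0 128 *:443 *:* users:(("docker-proxy",pid=8486,fd=4))
LISTEN 0 128 *:80 *:* users:(("docker-proxy",pid=8505,fd=4))
LISTEN 0 128 *:8443 *:* users:(("kube-apiserver",pid=5917,fd=3))
"""
result = listeners_on(sample, {80, 443})
print(result)
```

On the Minikube node above, both ports are held by `docker-proxy` processes.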

Nginx ingress controller

- Watching for change events

The Nginx controller watches for change events on the following resource types:

- Ingresses

- Endpoints

- Secrets

- ConfigMaps

Events are placed on a queue, controller.syncQueue, and are processed by the queue handler function syncIngress() in internal/ingress/controller/controller.go. This function collects all the information needed to regenerate the Nginx config file: it fetches all relevant Ingress objects and looks up the IP addresses of the Pods that the Ingresses should route to.
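The queue-then-sync pattern can be sketched in Python as follows. This is not the controller's Go implementation: the class name `SyncQueue`, the method names, and the choice to collapse all pending events into one sync call are assumptions made to show why a queue sits between the watchers and the expensive regeneration step.

```python
import queue


class SyncQueue:
    """Buffer change events and hand them to a single sync function."""

    def __init__(self, sync_fn):
        self._queue = queue.Queue()
        self._sync_fn = sync_fn

    def enqueue(self, kind, name):
        # Called by the watchers for Ingresses, Endpoints, Secrets, ConfigMaps.
        self._queue.put((kind, name))

    def drain(self):
        """Run one sync for all pending events; return how many were pending."""
        events = []
        while True:
            try:
                events.append(self._queue.get_nowait())
            except queue.Empty:
                break
        if events:
            self._sync_fn(events)
        return len(events)


sync_calls = []
q = SyncQueue(sync_calls.append)
q.enqueue("Ingress", "default/ingress-with-auth")
q.enqueue("Endpoints", "default/http-svc")
print(q.drain(), len(sync_calls))
```

Two events arriving close together lead to a single regeneration in this sketch, which keeps bursts of changes from triggering a reload each.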

- Build new configuration and reload

syncIngress() then calls the OnUpdate() function in internal/ingress/controller/nginx.go to write out the new Nginx config file and to reload Nginx.
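A write-and-reload step of this kind might look like the Python sketch below. The class `ConfigReloader` and its callbacks are hypothetical, and skipping the reload when the rendered configuration is unchanged is an assumption about sensible behaviour rather than something stated above.

```python
import hashlib


class ConfigReloader:
    """Write a new config and trigger a reload only when the content changed."""

    def __init__(self, write_fn, reload_fn):
        self._write = write_fn      # e.g. writes the nginx config file
        self._reload = reload_fn    # e.g. runs `nginx -s reload`
        self._last_digest = None

    def on_update(self, config_text):
        digest = hashlib.sha256(config_text.encode()).hexdigest()
        if digest == self._last_digest:
            return False            # nothing changed, keep nginx untouched
        self._write(config_text)
        self._reload()
        self._last_digest = digest
        return True


written, reloads = [], []
reloader = ConfigReloader(written.append, lambda: reloads.append(1))
first = reloader.on_update("server { listen 80; }")
second = reloader.on_update("server { listen 80; }")
third = reloader.on_update("server { listen 443; }")
print(first, second, third, len(reloads))
```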

- Ingress Status.Address determination

A background goroutine queries, once a minute, the IP address of the node on which the Nginx ingress controller is running and sets "Status.Address" to that value. With multiple controller pods running, the leader's IP is used.
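The selection logic can be illustrated with a short Python sketch. The pod records, field names and IPs other than 172.17.0.2 are made up for the example, and the once-a-minute scheduling is left out so the logic stays easy to follow.

```python
def status_address(controller_pods, leader_name):
    """Return the IP of the node that hosts the leader controller pod."""
    for pod in controller_pods:
        if pod["name"] == leader_name:
            return pod["node_ip"]
    return None


def update_status(ingress, controller_pods, leader_name):
    """Write the leader's node IP into the Ingress status, if a leader is found."""
    ip = status_address(controller_pods, leader_name)
    if ip is not None:
        ingress.setdefault("status", {})["loadBalancer"] = {"ingress": [{"ip": ip}]}
    return ingress


# Hypothetical controller pods; only the first IP appears in the example above.
pods = [
    {"name": "ingress-nginx-controller-a", "node_ip": "172.17.0.2"},
    {"name": "ingress-nginx-controller-b", "node_ip": "172.17.0.3"},
]
ingress = update_status({"metadata": {"name": "ingress-with-auth"}},
                        pods, leader_name="ingress-nginx-controller-a")
print(ingress["status"])
```

A periodic caller, for instance a loop that sleeps 60 seconds between calls, would play the role of the background goroutine.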

- Not using Services

ingress-nginx-controller does not route traffic to the associated Service's virtual IP address. Instead it routes directly to the pods' IP addresses, which it obtains from the Endpoints API resource. This allows for session affinity, custom load balancing algorithms, and less overhead, for example fewer conntrack entries for iptables DNAT.
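Knowing the individual pod addresses is what makes session affinity possible. The Python sketch below shows one simple way to do it, hashing a client key onto the endpoint list. It is not the algorithm ingress-nginx uses; the function name `pick_endpoint` and the pod addresses are hypothetical.

```python
import hashlib


def pick_endpoint(endpoints, client_key):
    """Map a client key (e.g. a cookie value) to one pod endpoint, deterministically."""
    if not endpoints:
        raise ValueError("no endpoints available")
    ordered = sorted(endpoints)     # make the choice independent of list order
    digest = hashlib.sha256(client_key.encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(ordered)
    return ordered[index]


# Hypothetical pod addresses as they might appear in an Endpoints object.
endpoints = ["10.244.0.5:8080", "10.244.0.6:8080", "10.244.0.7:8080"]
chosen = pick_endpoint(endpoints, "session-abc")
print(chosen)
```

The same client key keeps landing on the same pod as long as the endpoint set is unchanged, which a Service virtual IP cannot guarantee from the proxy's point of view. A plain hash-modulo scheme like this reshuffles many clients when pods come and go, which is one reason real load balancers tend to use more elaborate schemes.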
