Kubernetes/Google GKE

Create a cluster

gcloud container clusters create \
  --cluster-version=1.13 --image-type=UBUNTU \
  --disk-type=pd-ssd --disk-size=10GB \
  --no-enable-cloud-logging --no-enable-cloud-monitoring \
  --addons=NetworkPolicy --issue-client-certificate \
  --zone europe-west1-b --username=admin cluster-1

# optional: --cluster-ipv4-cidr *.*.*.*

NAME LOCATION MASTER_VERSION MASTER_IP MACHINE_TYPE NODE_VERSION NUM_NODES STATUS

cluster-1 europe-west1-b 1.13.7-gke.8 35.205.145.199 n1-standard-1 1.13.7-gke.8 3 RUNNING
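A minimal sketch, assuming you want to reuse the create step for other cluster names and zones. The function create_small_cluster and the DRY_RUN switch are hypothetical helpers, not part of gcloud: with DRY_RUN=1 the command is printed instead of executed. Only a subset of the flags above is kept; the version-specific ones (--cluster-version, --username, the cloud logging/monitoring switches) are left out because they are tied to the 1.13-era setup.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical wrapper around `gcloud container clusters create`.
# Usage: create_small_cluster NAME ZONE [NUM_NODES]
create_small_cluster() {
  local name=${1:?cluster name required}
  local zone=${2:?zone required}
  local nodes=${3:-3}   # 3 is also GKE's default node count
  run gcloud container clusters create "$name" \
    --zone "$zone" \
    --num-nodes "$nodes" \
    --disk-type=pd-ssd --disk-size=10GB \
    --addons=NetworkPolicy
}
```

For example, DRY_RUN=1 create_small_cluster cluster-1 europe-west1-b prints the full command so you can review it before creating anything.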

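# Free up some resources
# Watch pods in all namespaces; -d highlights changes between refreshes
watch -d kubectl get pods --all-namespaces

# Stop the DNS autoscaler first, otherwise it scales kube-dns back up
kubectl -n kube-system scale --replicas=0 deployment/kube-dns-autoscaler
kubectl -n kube-system scale --replicas=1 deployment/kube-dns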
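The two scale commands above can be wrapped so they always run in the right order, together with a way to undo them. This is a sketch: shrink_kube_dns, restore_kube_dns and the DRY_RUN switch are hypothetical names, and the restore step assumes the autoscaler normally runs with one replica and then takes over the kube-dns replica count again.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Scale the autoscaler to zero first, then pin kube-dns to one replica.
# The && stops before touching kube-dns if the first step fails.
shrink_kube_dns() {
  run kubectl -n kube-system scale --replicas=0 deployment/kube-dns-autoscaler &&
  run kubectl -n kube-system scale --replicas=1 deployment/kube-dns
}

# Reverse step: bring the autoscaler back so it manages kube-dns again.
restore_kube_dns() {
  run kubectl -n kube-system scale --replicas=1 deployment/kube-dns-autoscaler
}
```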

GKE creates a LimitRange resource called limits in the default namespace, which gives each container a 100m CPU request if none is specified. On small nodes this default can be too high for some services, so workloads may fail to schedule.

$ kubectl get limitrange limits
NAME     CREATED AT
limits   2019-07-13T12:55:57Z
$ kubectl delete limitrange limits
limitrange "limits" deleted
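Before deleting the LimitRange you may want to confirm the default it injects. A minimal sketch, assuming the first entry in spec.limits is the Container entry (check with kubectl get limitrange limits -o yaml if unsure); the function names and DRY_RUN switch are hypothetical. --ignore-not-found makes the delete safe to run more than once.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Print the default CPU request of the "limits" LimitRange (assumes entry 0
# is the Container entry).
show_default_cpu_request() {
  run kubectl -n default get limitrange limits \
    -o jsonpath='{.spec.limits[0].defaultRequest.cpu}'
}

# Delete it; no error if it is already gone.
delete_default_limitrange() {
  run kubectl -n default delete limitrange limits --ignore-not-found
}
```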

Resize cluster

To save money you can resize your cluster to zero nodes: you pay for the worker nodes, and at the time of writing GKE did not bill for the control plane. The example below resizes the cluster-1 cluster.

$ gcloud container clusters resize cluster-1 --size=0
Pool [default-pool] for [cluster-1] will be resized to 0.
Do you want to continue (Y/n)?  y
Resizing cluster-1...done.
Updated [https://container.googleapis.com/v1/projects/responsive-sun-123456/zones/europe-west1-b/clusters/cluster-1]

All Pods are terminated, and required system Pods such as kube-dns and metrics-server change status to Pending. They are rescheduled once nodes are added back to the cluster.
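To script the scale-down and scale-up without answering the (Y/n) prompt, the global --quiet flag makes gcloud accept the default answer. A sketch under these assumptions: resize_pool and DRY_RUN are hypothetical names, and it uses --num-nodes (the newer spelling of --size) with an explicit --zone and --node-pool.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Resize one node pool non-interactively.
# Usage: resize_pool CLUSTER ZONE NUM_NODES [POOL]
resize_pool() {
  local cluster=${1:?cluster required} zone=${2:?zone required}
  local nodes=${3:?node count required} pool=${4:-default-pool}
  run gcloud container clusters resize "$cluster" \
    --zone "$zone" --node-pool "$pool" --num-nodes "$nodes" --quiet
}
```

For example, resize_pool cluster-1 europe-west1-b 0 in the evening and resize_pool cluster-1 europe-west1-b 3 the next morning; the Pending system Pods are then rescheduled.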

Cluster commands

gcloud container clusters list
gcloud container clusters describe cluster-1
gcloud container clusters delete cluster-1
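The list and describe output is long; gcloud's --format flag can trim it to the fields you care about. A minimal sketch: list_clusters, master_version and DRY_RUN are hypothetical names, while name, location, status and currentMasterVersion are fields of the GKE Cluster resource.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# One line per cluster: name, location and status, tab separated.
list_clusters() {
  run gcloud container clusters list --format='value(name,location,status)'
}

# Only the control-plane version of one cluster.
# Usage: master_version CLUSTER ZONE
master_version() {
  run gcloud container clusters describe "${1:?cluster required}" \
    --zone "${2:?zone required}" --format='value(currentMasterVersion)'
}
```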

SSH to a node

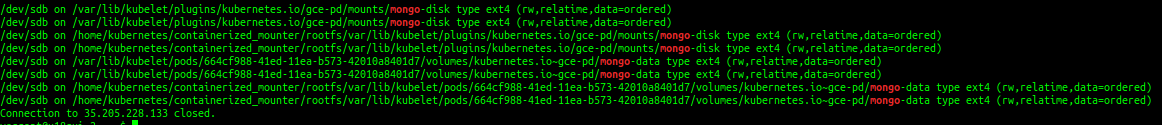

$ kubectl get nodes
$ gcloud compute ssh gke-cluster-2-jan2020-default-pool-3e5d4a20-ctdg -- mount | grep mongo
WARNING: The public SSH key file for gcloud does not exist.
WARNING: The private SSH key file for gcloud does not exist.
WARNING: You do not have an SSH key for gcloud.
WARNING: SSH keygen will be executed to generate a key.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Updating project ssh metadata...-Updated [https://www.googleapis.com/compute/v1/projects/responsive-sun-123456].
Updating project ssh metadata...done.
Waiting for SSH key to propagate.
Warning: Permanently added 'compute.6068607934627948415' (ECDSA) to the list of known hosts.
##############################################################################
# WARNING: Any changes on the boot disk of the node must be made via
# DaemonSet in order to preserve them across node (re)creations.
# Node will be (re)created during manual-upgrade, auto-upgrade,
# auto-repair or auto-scaling.
# See https://cloud.google.com/kubernetes-engine/docs/concepts/node-images#modifications
# for more information.
##############################################################################
Connection to 35.205.228.133 closed.

# After ~10 min since PD disk has been created
$> gcloud compute ssh gke-cluster-2-jan2020-default-pool-3e5d4a20-ctdg -- mount | grep mongo
(...)
/dev/sdb on /var/lib/kubelet/plugins/kubernetes.io/gce-pd/mounts/mongo-disk type ext4 (rw,relatime,data=ordered)
/dev/sdb on /var/lib/kubelet/plugins/kubernetes.io/gce-pd/mounts/mongo-disk type ext4 (rw,relatime,data=ordered)
/dev/sdb on /home/kubernetes/containerized_mounter/rootfs/var/lib/kubelet/plugins/kubernetes.io/gce-pd/mounts/mongo-disk type ext4 (rw,relatime,data=ordered)
/dev/sdb on /home/kubernetes/containerized_mounter/rootfs/var/lib/kubelet/plugins/kubernetes.io/gce-pd/mounts/mongo-disk type ext4 (rw,relatime,data=ordered)
/dev/sdb on /var/lib/kubelet/pods/664cf988-41ed-11ea-b573-42010a8401d7/volumes/kubernetes.io~gce-pd/mongo-data type ext4 (rw,relatime,data=ordered)
/dev/sdb on /var/lib/kubelet/pods/664cf988-41ed-11ea-b573-42010a8401d7/volumes/kubernetes.io~gce-pd/mongo-data type ext4 (rw,relatime,data=ordered)
/dev/sdb on /home/kubernetes/containerized_mounter/rootfs/var/lib/kubelet/pods/664cf988-41ed-11ea-b573-42010a8401d7/volumes/kubernetes.io~gce-pd/mongo-data type ext4 (rw,relatime,data=ordered)
/dev/sdb on /home/kubernetes/containerized_mounter/rootfs/var/lib/kubelet/pods/664cf988-41ed-11ea-b573-42010a8401d7/volumes/kubernetes.io~gce-pd/mongo-data type ext4 (rw,relatime,data=ordered)
Connection to 35.205.228.133 closed.

Convenience screenshot above; note that each bind mount is listed twice.
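A sketch for repeating this check, assuming the node name and zone are known: node_mounts quotes the pipeline so grep runs on the node instead of locally, first_node picks the first node from kubectl, and dedupe_mounts collapses the duplicated lines while keeping their original order (awk instead of sort -u, which would reorder them). All three names and the DRY_RUN switch are hypothetical.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Name of the first node in the cluster.
first_node() {
  run kubectl get nodes -o jsonpath='{.items[0].metadata.name}'
}

# Run `mount | grep PATTERN` on the node itself (the pipeline is one quoted argument).
# Usage: node_mounts NODE ZONE PATTERN
node_mounts() {
  run gcloud compute ssh "${1:?node required}" --zone "${2:?zone required}" \
    -- "mount | grep ${3:?pattern required}"
}

# Print each distinct input line once, in first-seen order.
dedupe_mounts() {
  awk '!seen[$0]++'
}
```

For example: node_mounts "$(first_node)" europe-west1-b mongo | dedupe_mounts.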

List disks

$> gcloud compute disks list --filter="name=( 'mongo-disk' )"
NAME        LOCATION        LOCATION_SCOPE  SIZE_GB  TYPE         STATUS
mongo-disk  europe-west1-b  zone            10       pd-standard  READY
$> gcloud compute disks list mongo-disk   # old syntax, still working as of 29/01/2020
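A sketch of two alternatives to the positional form: a filtered list with chosen columns, and describe for a single disk in a known zone. disk_info, disk_describe and DRY_RUN are hypothetical names; sizeGb, zone, type and status are fields of the Compute Engine disk resource, and basename() strips the URL prefix from zone and type.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# List one disk by name with a compact table.
disk_info() {
  run gcloud compute disks list \
    --filter="name=${1:?disk name required}" \
    --format='table(name,zone.basename(),sizeGb,type.basename(),status)'
}

# Full details of one disk.
# Usage: disk_describe DISK ZONE
disk_describe() {
  run gcloud compute disks describe "${1:?disk name required}" --zone "${2:?zone required}"
}
```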

Cluster update

gcloud container clusters update cluster-2 --enable-autoscaling \
  --node-pool=default-pool --min-nodes=3 --max-nodes=5
# optionally add: [--zone [COMPUTE_ZONE] --project [PROJECT_ID]]

# Disable autoscaling if you want to scale to zero, as otherwise it may have undesirable effects
gcloud container clusters update cluster-2 --no-enable-autoscaling --node-pool=default-pool
gcloud container clusters resize [CLUSTER_NAME] --node-pool [POOL_NAME] --num-nodes [NUM_NODES]
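The enable/disable pair above can be wrapped with a sanity check on the bounds before gcloud is called. A sketch: enable_pool_autoscaling, disable_pool_autoscaling and DRY_RUN are hypothetical names, and the min/max check is a local guard added here, not gcloud behaviour.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Enable autoscaling on one pool, rejecting min > max locally first.
# Usage: enable_pool_autoscaling CLUSTER ZONE MIN MAX [POOL]
enable_pool_autoscaling() {
  local cluster=${1:?cluster required} zone=${2:?zone required}
  local min=${3:?min required} max=${4:?max required} pool=${5:-default-pool}
  if [ "$min" -gt "$max" ]; then
    echo "min-nodes ($min) must not exceed max-nodes ($max)" >&2
    return 1
  fi
  run gcloud container clusters update "$cluster" --zone "$zone" \
    --node-pool="$pool" --enable-autoscaling \
    --min-nodes="$min" --max-nodes="$max"
}

# Turn autoscaling off, e.g. before a manual resize to zero.
# Usage: disable_pool_autoscaling CLUSTER ZONE [POOL]
disable_pool_autoscaling() {
  run gcloud container clusters update "${1:?cluster required}" \
    --zone "${2:?zone required}" --node-pool="${3:-default-pool}" \
    --no-enable-autoscaling
}
```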

Configure kubectl

$ gcloud container clusters get-credentials cluster-1   # --zone <europe-west1-b> --project <project>
Fetching cluster endpoint and auth data.
kubeconfig entry generated for cluster-1

$ cat ~/.kube/config

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: AABBCCFF112233
    server: https://35.205.145.199
  name: gke_responsive-sun-246311_europe-west1-b_cluster-1
contexts:
- context:
    cluster: gke_responsive-sun-246311_europe-west1-b_cluster-1
    user: gke_responsive-sun-246311_europe-west1-b_cluster-1
  name: gke_responsive-sun-246311_europe-west1-b_cluster-1
current-context: gke_responsive-sun-246311_europe-west1-b_cluster-1
kind: Config
preferences: {}
users:
- name: gke_responsive-sun-246311_europe-west1-b_cluster-1
  user:
    auth-provider:
      config:
        cmd-args: config config-helper --format=json
        cmd-path: /usr/lib/google-cloud-sdk/bin/gcloud
        expiry-key: '{.credential.token_expiry}'
        token-key: '{.credential.access_token}'
      name: gcp
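The kubeconfig above shows that get-credentials names the context gke_PROJECT_LOCATION_CLUSTER. A minimal sketch that relies on that naming to switch between several clusters; fetch_credentials, gke_context_name, use_gke_context and DRY_RUN are hypothetical names, and the naming rule is taken only from the example file above.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Fetch credentials for one cluster into ~/.kube/config.
# Usage: fetch_credentials CLUSTER ZONE PROJECT
fetch_credentials() {
  run gcloud container clusters get-credentials "${1:?cluster required}" \
    --zone "${2:?zone required}" --project "${3:?project required}"
}

# Build the context name: gke_PROJECT_LOCATION_CLUSTER.
gke_context_name() {
  printf 'gke_%s_%s_%s\n' "${1:?project required}" "${2:?location required}" "${3:?cluster required}"
}

# Switch kubectl to a cluster whose credentials were already fetched.
# Usage: use_gke_context PROJECT LOCATION CLUSTER
use_gke_context() {
  local ctx
  ctx=$(gke_context_name "$1" "$2" "$3") || return 1
  run kubectl config use-context "$ctx"
}
```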

Make yourself a cluster administrator

As the creator of the cluster, you might expect to be a cluster administrator by default, but on GKE, this isn’t the case.

kubectl create clusterrolebinding cluster-admin-binding \
  --clusterrole cluster-admin \
  --user $(gcloud config get-value account)
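A sketch that makes the account explicit (useful when gcloud is logged in as a different identity) and then checks the result with kubectl auth can-i. grant_cluster_admin, verify_cluster_admin and DRY_RUN are hypothetical names; when no account is passed, it falls back to gcloud config get-value account as in the command above.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Bind cluster-admin to ACCOUNT (default: the active gcloud account).
# Usage: grant_cluster_admin [ACCOUNT]
grant_cluster_admin() {
  local account=${1:-$(gcloud config get-value account)}
  run kubectl create clusterrolebinding cluster-admin-binding \
    --clusterrole=cluster-admin --user="$account"
}

# Prints "yes" if the current user may do everything in every namespace.
verify_cluster_admin() {
  run kubectl auth can-i '*' '*' --all-namespaces
}
```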