Kubernetes/Amazon EKS

EKS updates, integrations

- Introducing security groups for pods 09 SEP 2020, v1.17

Get EKS kubectl config

aws eks update-kubeconfig --name <EKSCLUSTER-NAME> --kubeconfig ~/.kube/<EKSCLUSTER-NAME>-config

kubectl

Install

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl" && sudo install kubectl /usr/local/bin/kubectl
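The Kubernetes release server also publishes a .sha256 file next to each kubectl binary, so the download can be checked before it is installed. A minimal sketch, assuming GNU coreutils' sha256sum and a checksum file that contains only the hex digest; verify_sha256 is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify a downloaded file against a .sha256 file that
# contains only the hex digest (the format used for the kubectl checksum file).
verify_sha256() {
  local file=$1 sha_file=$2
  # sha256sum --check expects "<digest>  <filename>" (two spaces);
  # --status prints nothing and reports the result via the exit code
  echo "$(cat "$sha_file")  $file" | sha256sum --check --status
}
```

Usage: download the checksum with curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl.sha256", then run verify_sha256 kubectl kubectl.sha256 && sudo install kubectl /usr/local/bin/kubectl.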

Auto-completion

source <(kubectl completion bash)
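The command above only enables completion for the current shell. A minimal sketch for making it permanent, following the kubectl cheat sheet, which also wires completion to the short alias k; it assumes bash reads ~/.bashrc and skips lines that are already present so it can be re-run safely:

```shell
#!/usr/bin/env bash
# Append kubectl completion and a "k" alias (with completion) to ~/.bashrc,
# only if each exact line is not there yet.
bashrc="$HOME/.bashrc"
for line in \
  'source <(kubectl completion bash)' \
  'alias k=kubectl' \
  'complete -o default -F __start_kubectl k'; do
  grep -qxF -- "$line" "$bashrc" 2>/dev/null || printf '%s\n' "$line" >> "$bashrc"
done
```

Open a new shell (or run source ~/.bashrc) for the changes to take effect.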

Cheatsheet

# Control plane

kubectl get componentstatus # deprecated since Kubernetes v1.19

NAME                 STATUS    MESSAGE              ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health": "true"}

# Cluster info

kubectl cluster-info

kubectl config view # show the kubeconfig in use (the file(s) referenced by KUBECONFIG, default ~/.kube/config)

kubectl get nodes

kubectl describe nodes

kubectl api-resources -o wide # all resource types available in the cluster

# Others mixed

kubectl get namespaces

kubectl get pods --watch

kubectl describe pod <pod-name> #shows events

kubectl create deployment letskube-deployment --image=acrtest.azurecr.io/letskube:v2 --port=80 --replicas=3 # since v1.18 "kubectl run" only creates a Pod

## List Pods

kubectl get pods --show-labels --all-namespaces

kubectl get pods --field-selector=status.phase!=Running -n dev # list faulty pods

kubectl get pods --field-selector status.phase=Running --all-namespaces

## List Services

kubectl get services --field-selector metadata.namespace=default # = is the same as ==

#Deployment

kubectl expose deployment letskube-deployment --type=NodePort

kubectl describe deployment letskube-deployment

kubectl delete deployment letskube-deployment

kubectl get deployment letskube-deployment -o yaml

# Service

kubectl create -f .\letskubedeploy.yml

kubectl get service <serviceName> -o wide --watch #for EXTERNAL-IP to be allocated

kubectl describe service <serviceName>

# Scale

kubectl scale --replicas=55 deployment/letskube-deployment

# Config

KUBECONFIG=~/.kube/config # default config file, used when KUBECONFIG is not set
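kubectl merges all files listed in KUBECONFIG (colon-separated on Linux/macOS), so the per-cluster files created with aws eks update-kubeconfig --kubeconfig ~/.kube/<EKSCLUSTER-NAME>-config can be used together and switched with kubectl config use-context. A sketch with a hypothetical helper kubeconfig_path, assuming the <name>-config naming used on this page:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a colon-separated KUBECONFIG value from the default
# "config" file plus every per-cluster "*-config" file in a directory (default ~/.kube).
kubeconfig_path() {
  local dir=${1:-$HOME/.kube} f out=""
  for f in "$dir"/config "$dir"/*-config; do
    [ -f "$f" ] || continue          # skip missing files and unmatched globs
    out="${out:+$out:}$f"            # add ":" only between entries
  done
  printf '%s\n' "$out"
}
```

Usage: export KUBECONFIG="$(kubeconfig_path)" and then kubectl config get-contexts lists the contexts from every file.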

# Useful commands

alias k=kubectl

k get all # displays pods, services, deployments, replica sets and other common workload resources

k get all --namespace=default # same as the above, as the default namespace is "default"

# the default namespace is set in the "contexts:" block of ~/.kube/config

- context:
    cluster: mycluster
    namespace: default

k get all --all-namespaces # resources in all namespaces; by default --all-namespaces=false

k get all -o wide # additional details such as pod IP and node, selectors and images; other formats include -o go-template=<template>

k get all --all-namespaces -o wide

k get <pods|services|rs>

k get events

# Run commands inside a pod

k exec -it <pod-name> -- <command>

#Edit "inline" configMaps

k edit configmap -n kube-system <configMap>

- Access a pod

A port forward is a tunnel from your computer into the cluster, provided by the running kubectl port-forward process; it does not make the hello-world pod publicly accessible. Keep the command below running in your terminal and point your browser to http://localhost:8080/

kubectl port-forward pod/hello-world-pod 8080:80

Simple deployments

# Create a pod named "nginx", using image "nginx"

cat << EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
EOF

#Clean up

$ k delete pod nginx

Multipurpose container for running commands (e.g. curl) inside the cluster

cat << EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  containers:
  - name: busybox
    image: radial/busyboxplus:curl
    args:
    - sleep
    - "1000"
EOF

kubectl get services --all-namespaces

NAMESPACE   NAME    TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
aat1        nginx   NodePort   172.10.10.81   <none>        8243:31662/TCP   1

kubectl exec busybox -- curl 172.10.10.81:8243

References

- kubectl cheatsheet kubernetes.io

kubectx

kubectx helps you switch between clusters back and forth; kubens helps you switch between Kubernetes namespaces smoothly.

git clone https://github.com/ahmetb/kubectx.git ~/.kubectx
COMPDIR=$(pkg-config --variable=completionsdir bash-completion)
sudo ln -sf ~/.kubectx/completion/kubens.bash $COMPDIR/kubens # the system completion dir is usually root-owned
sudo ln -sf ~/.kubectx/completion/kubectx.bash $COMPDIR/kubectx
cat << FOE >> ~/.bashrc
#kubectx and kubens
export PATH=\$PATH:~/.kubectx
FOE

K8s cluster installation

Partial notes, not needed when running a cluster on a managed platform.

- Preview client/server binaries

- Go and download latest binaries

- Extract

- Run kubernetes/cluster/get-kube-binaries.sh

This script downloads and installs the Kubernetes client and server (and optionally test) binaries. It is intended to be called from an extracted Kubernetes release tarball, and it automatically chooses the correct client binaries to download.

Azure AKS

Setting up kubectl

Powershell

PS1 C:\> $env:KUBECONFIG="$env:USERPROFILE\.kube\aksconfig"
PS1 C:\> kubectl config current-context # show the current context, i.e. the cluster kubectl talks to by default
PS1 C:\> Get-Content $env:KUBECONFIG | sls context
contexts:
- context:
current-context: aks-test-cluster

Bash

export KUBECONFIG=~/.kube/aksconfig

Intro into Amazon EKS

This introductory information was valid at the time of writing (November 2018); see the AWS Containers Roadmap to track new features.

At the time of writing, Amazon EKS supported only Kubernetes version 1.10.3.

By default, Amazon EKS provides AWS CloudFormation templates to spin up your worker nodes with the Amazon EKS-optimized AMI. This AMI is built on top of Amazon Linux 2. The AMI is configured to work with Amazon EKS out of the box and it includes Docker 17.06.2-ce (with overlay2 as a Docker storage driver), Kubelet 1.10.3, and the AWS authenticator. The AMI also launches with specialized Amazon EC2 user data that allows it to discover and connect to your cluster's control plane automatically.

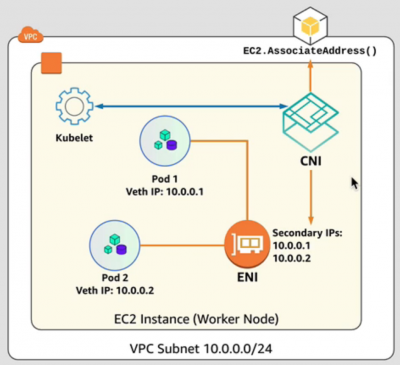

The AWS VPC container network interface (CNI) plugin is responsible for providing pod networking in Kubernetes using Elastic Network Interfaces (ENI) on AWS. Amazon EKS works with Calico by Tigera to integrate with the CNI plugin to provide fine grained networking policies.
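Because every pod gets a secondary IP address from an ENI, the instance type limits how many pods a worker node can run. In the default mode (without prefix delegation) the EKS-optimized AMI derives its max-pods value from ENIs * (IPv4 addresses per ENI - 1) + 2: each ENI's primary address is not handed to pods, and the +2 covers host-network pods such as aws-node and kube-proxy. A small sketch; max_pods is a hypothetical name and the per-instance ENI/IP limits have to be looked up in the EC2 documentation:

```shell
#!/usr/bin/env bash
# Max pods per node with the AWS VPC CNI (default mode, no prefix delegation):
#   maxPods = ENIs * (IPv4 addresses per ENI - 1) + 2
max_pods() {
  local enis=$1 ips_per_eni=$2
  # each ENI's primary IP is not given to pods; +2 for hostNetwork pods
  echo $(( enis * (ips_per_eni - 1) + 2 ))
}

max_pods 3 10   # m5.large: 3 ENIs, 10 IPv4 addresses each -> prints 29
```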

At the time of writing (November 2018), the Amazon EKS service was available only in the following regions:

- US East (N. Virginia) - us-east-1

- US East (Ohio) - us-east-2

- US West (Oregon) - us-west-2

- EU (Ireland) - eu-west-1

Architecture diagram

Bootstrap/create EKS Cluster

Additional tools

- Required for bootstrapping

- kubectl

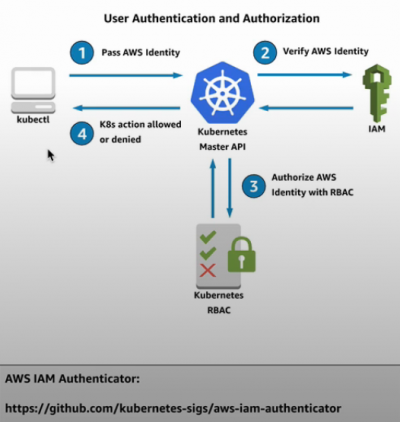

- aws-iam-authenticator (only needed with AWS CLI older than 1.16.156)

- awscli

- eksctl by Weaveworks

- jq

- Optional

- kube2iam - provide IAM credentials to containers running inside a kubernetes cluster based on annotations.

Install kubectl Kubernetes client

mkdir -p ~/.kube # config location
## Download the latest version
sudo curl -L "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl" -o /usr/local/bin/kubectl
## or download version 1.10.3 from the AWS S3 hosted location
sudo curl --location -o /usr/local/bin/kubectl "https://amazon-eks.s3-us-west-2.amazonaws.com/1.10.3/2018-07-26/bin/linux/amd64/kubectl"
sudo chmod +x /usr/local/bin/kubectl
kubectl version --client
# general syntax: kubectl <operation> <object> <resource_name> <optional_flags>

Note: If you're running the AWS CLI version 1.16.156 or later, then you don't need to install the authenticator. Instead, you can use the aws eks get-token command. For more information, see Create kubeconfig manually.

Install aws-iam-authenticator if AWS CLI is <1.16.156

## Option 1. Use 'go' to download the binary, then 'mv' it to one of the directories in $PATH
go get -u -v github.com/kubernetes-sigs/aws-iam-authenticator/cmd/aws-iam-authenticator
sudo mv ~/go/bin/aws-iam-authenticator /usr/local/bin/aws-iam-authenticator
## Option 2. Download directly to /usr/bin/aws-iam-authenticator (pick one version)
# 1.12.7 build
sudo curl https://amazon-eks.s3-us-west-2.amazonaws.com/1.12.7/2019-03-27/bin/linux/amd64/aws-iam-authenticator -o /usr/bin/aws-iam-authenticator
# or the 1.15.10 build
sudo curl https://amazon-eks.s3.us-west-2.amazonaws.com/1.15.10/2020-02-22/bin/linux/amd64/aws-iam-authenticator -o /usr/bin/aws-iam-authenticator
sudo chmod +x /usr/bin/aws-iam-authenticator
aws-iam-authenticator help

Install jq, configure awscli, install eksctl

sudo yum -y install jq # Amazon Linux

sudo apt -y install jq # Ubuntu

# Configure awscli. These instructions assume they are run on AWS Cloud9

rm -vf ${HOME}/.aws/credentials

export AWS_REGION=$(curl -s 169.254.169.254/latest/dynamic/instance-identity/document | jq -r .region)

echo "export AWS_REGION=${AWS_REGION}" >> ~/.bash_profile

aws configure set default.region ${AWS_REGION}

aws configure get default.region

# Install eksctl by Weaveworks (the project now lives at github.com/eksctl-io/eksctl)

curl --location "https://github.com/eksctl-io/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp

sudo mv -v /tmp/eksctl /usr/local/bin

eksctl version

Bootstrap EKS Cluster

# Create EKS cluster

# Docs at https://eksctl.io/

# eksctl defaults when the flags are omitted: --nodes=2, --region=us-west-2, --node-type=m5.large

$ eksctl create cluster --name=eksworkshop-eksctl --nodes=3 --node-ami=auto --region=${AWS_REGION} --node-type=m5.large

2018-11-24T12:54:41Z [ℹ] using region eu-west-1

2018-11-24T12:54:42Z [ℹ] setting availability zones to [eu-west-1b eu-west-1a eu-west-1c]

2018-11-24T12:54:42Z [ℹ] subnets for eu-west-1b - public:192.168.0.0/19 private:192.168.96.0/19

2018-11-24T12:54:42Z [ℹ] subnets for eu-west-1a - public:192.168.32.0/19 private:192.168.128.0/19

2018-11-24T12:54:42Z [ℹ] subnets for eu-west-1c - public:192.168.64.0/19 private:192.168.160.0/19

2018-11-24T12:54:43Z [ℹ] using "ami-00c3b2d35bdddffff" for nodes

2018-11-24T12:54:43Z [ℹ] creating EKS cluster "eksworkshop-eksctl" in "eu-west-1" region

2018-11-24T12:54:43Z [ℹ] will create 2 separate CloudFormation stacks for cluster itself and the initial nodegroup

2018-11-24T12:54:43Z [ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=eu-west-1 --name=eksworkshop-eksctl'

2018-11-24T12:54:43Z [ℹ] creating cluster stack "eksctl-eksworkshop-eksctl-cluster"

2018-11-24T13:06:38Z [ℹ] creating nodegroup stack "eksctl-eksworkshop-eksctl-nodegroup-0"

2018-11-24T13:10:16Z [✔] all EKS cluster resource for "eksworkshop-eksctl" had been created

2018-11-24T13:10:16Z [✔] saved kubeconfig as "/home/ec2-user/.kube/config"

2018-11-24T13:10:16Z [ℹ] the cluster has 0 nodes

2018-11-24T13:10:16Z [ℹ] waiting for at least 3 nodes to become ready

2018-11-24T13:10:47Z [ℹ] the cluster has 3 nodes

2018-11-24T13:10:47Z [ℹ] node "ip-192-168-13-5.eu-west-1.compute.internal" is ready

2018-11-24T13:10:47Z [ℹ] node "ip-192-168-41-230.eu-west-1.compute.internal" is ready

2018-11-24T13:10:47Z [ℹ] node "ip-192-168-79-54.eu-west-1.compute.internal" is ready

2018-11-24T13:10:47Z [ℹ] kubectl command should work with "/home/ec2-user/.kube/config", try 'kubectl get nodes'

2018-11-24T13:10:47Z [✔] EKS cluster "eksworkshop-eksctl" in "eu-west-1" region is ready
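eksctl split the VPC CIDR 192.168.0.0/16 into /19 public and private subnets (see the log above). With the VPC CNI each pod consumes an address from its node's subnet, so subnet size caps the number of pods you can run. A sketch, assuming the AWS rule that 5 addresses in every subnet are reserved; subnet_usable_ips is a hypothetical name:

```shell
#!/usr/bin/env bash
# Usable IPv4 addresses in an AWS subnet: 2^(32 - prefix) minus the 5 addresses
# AWS reserves in every subnet (network, VPC router, DNS, future use, broadcast).
subnet_usable_ips() {
  local prefix=${1#*/}                     # "192.168.0.0/19" -> "19"
  echo $(( (1 << (32 - prefix)) - 5 ))
}

subnet_usable_ips 192.168.0.0/19   # one eksctl subnet -> prints 8187
```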

Configure kubectl, make sure awscli has been configured already

aws eks update-kubeconfig --name <EKSCLUSTER-NAME> --kubeconfig ~/.kube/<EKSCLUSTER-NAME>-config
Added new context arn:aws:eks:eu-west-1:111111111111:cluster/EKSCLUSTER-NAME to /home/vagrant/.kube/EKSCLUSTER-NAME-config
export KUBECONFIG=~/.kube/EKSCLUSTER-NAME-config
kubectl config view

Kubectl operations

# Verify EKS cluster nodes

kubectl get nodes

NAME                                           STATUS   ROLES    AGE   VERSION
ip-192-168-13-5.eu-west-1.compute.internal     Ready    <none>   1h    v1.10.3
ip-192-168-41-230.eu-west-1.compute.internal   Ready    <none>   1h    v1.10.3
ip-192-168-79-54.eu-west-1.compute.internal    Ready    <none>   1h    v1.10.3

# Get info about the cluster

eksctl get cluster --name=eksworkshop-eksctl --region=${AWS_REGION}
NAME                 VERSION   STATUS   CREATED                VPC                     SUBNETS                                                                                                                                                   SECURITYGROUPS
eksworkshop-eksctl   1.10      ACTIVE   2018-11-24T12:55:28Z   vpc-0c97f8a6dabb11111   subnet-05285b6c692711111,subnet-0a6626ec2c0111111,subnet-0c5e839d106f11111,subnet-0d9a9b34be5511111,subnet-0f297fefefad11111,subnet-0faaf1d3dedd11111   sg-083fbc37e4b011111

Deploy the Official Kubernetes Dashboard

# Deploy the dashboard from the official config source (the file can also be downloaded and deployed locally)
kubectl create -f https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml
# Run kubectl proxy to make the application (dashboard) reachable from outside the cluster:
# start the proxy in the background on port 8080, listening on all interfaces, with filtering of non-localhost requests disabled
kubectl proxy --port=8080 --address='0.0.0.0' --disable-filter=true &
W1124 14:47:55.308424   14460 proxy.go:138] Request filter disabled, your proxy is vulnerable to XSRF attacks, please be cautious
Starting to serve on [::]:8080

Install info type "plugins"

Installing Heapster and InfluxDB (Heapster has since been retired; metrics-server, used in the Auto-Scaling section, replaces it)

kubectl apply -f https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/heapster.yaml
kubectl apply -f https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/influxdb.yaml
kubectl apply -f https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml

aws-auth | Managing users or IAM roles for your cluster

<syntaxhighlightjs lang=yaml>
# kubectl -n kube-system edit configmap aws-auth
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - rolearn: <ARN of instance role (not instance profile)>
      username: system:node:{{EC2PrivateDNSName}} # <- required
      groups:
        - system:bootstrappers
        - system:nodes
    - rolearn: arn:aws:iam::[hidden]:role/CrossAccountAdmin
      username: aws-admin-user
      groups:
        - system:masters
</syntaxhighlightjs>

For user/role mappings such as aws-admin-user, the 'username' can be set to almost anything; it appears to matter only if custom roles and bindings referencing it have been added to your EKS cluster.

Create admin user and roles

Create administrative account and role binding <syntaxhighlightjs lang=yaml>
# create the admin service account
cat << EOF > eks-admin-service-account.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: eks-admin         # <- service account name
  namespace: kube-system  # <- within this namespace
EOF
kubectl apply -f eks-admin-service-account.yaml
# bind the eks-admin account to the built-in cluster-admin ClusterRole
cat << EOF > eks-admin-cluster-role-binding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: eks-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin  # <- existing built-in role being granted
subjects:
- kind: ServiceAccount
  name: eks-admin      # <- the account created above
  namespace: kube-system
EOF
kubectl apply -f eks-admin-cluster-role-binding.yaml
</syntaxhighlightjs>

Add/Modify users' permissions

kubectl edit -n kube-system configmap/aws-auth

Access the dashboard

When accessing the dashboard from a local machine (laptop), a proxy is required:

kubectl proxy --address 0.0.0.0 --accept-hosts '.*' &

Generate a temporary token to log in to the dashboard

aws-iam-authenticator token -i eksworkshop-eksctl --token-only # returns only the token
aws-iam-authenticator token -i eksworkshop-eksctl | jq -r .status.token # same token, extracted from the JSON output
aws eks get-token --cluster-name eksworkshop-eksctl | jq -r .status.token # equivalent with AWS CLI 1.16.156 or later

In a web browser, point to the kubectl proxy and append the following path to the URL:

/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/
# full URL (kubectl proxy listens on port 8001 by default):
http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/#!/login

Select Token sign-in and paste the token to log in.
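The token used above is not a secret stored in the cluster: it is the prefix k8s-aws-v1. followed by an unpadded base64url encoding of a presigned STS GetCallerIdentity URL, which the EKS control plane sends to STS to learn which IAM identity you are. A sketch that decodes it for inspection, assuming GNU coreutils base64 (older macOS uses -D instead of -d); decode_eks_token is a hypothetical name:

```shell
#!/usr/bin/env bash
# Decode an EKS bearer token ("k8s-aws-v1." + unpadded base64url of a
# presigned STS GetCallerIdentity URL) back into the URL it wraps.
decode_eks_token() {
  local b64=${1#k8s-aws-v1.}                        # drop the version prefix
  b64=$(printf '%s' "$b64" | tr '_-' '/+')          # base64url -> standard alphabet
  while (( ${#b64} % 4 != 0 )); do b64+='='; done   # restore stripped padding
  printf '%s' "$b64" | base64 -d
  echo
}
```

Usage: decode_eks_token "$(aws eks get-token --cluster-name eksworkshop-eksctl | jq -r .status.token)" prints the presigned URL, including its expiry parameters.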

Deploy applications

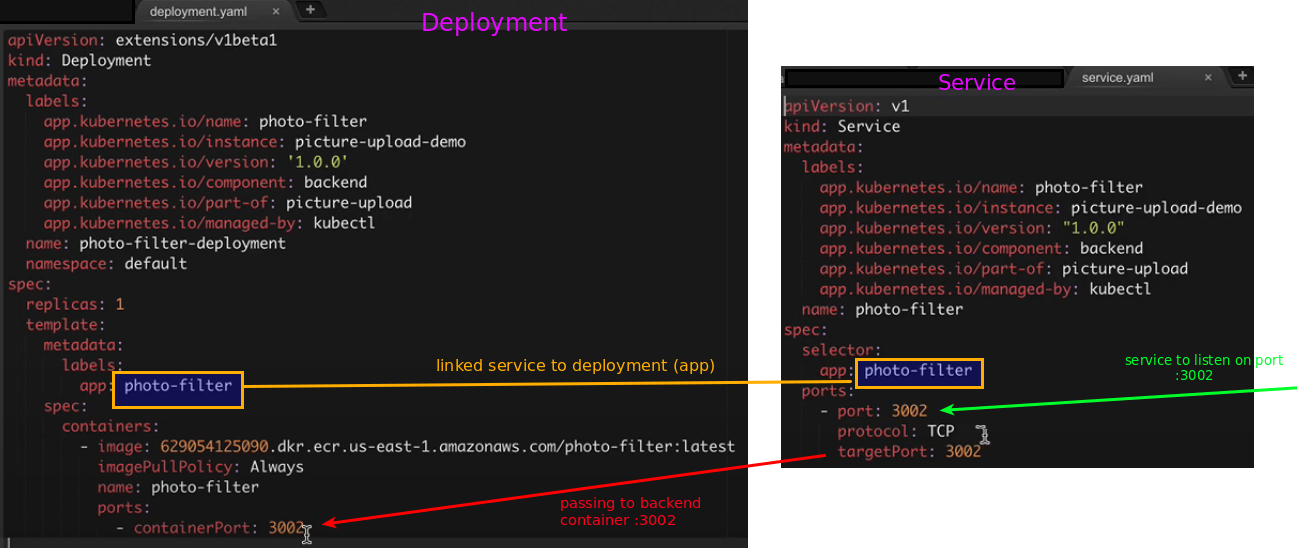

Sample dependency diagram - service and application

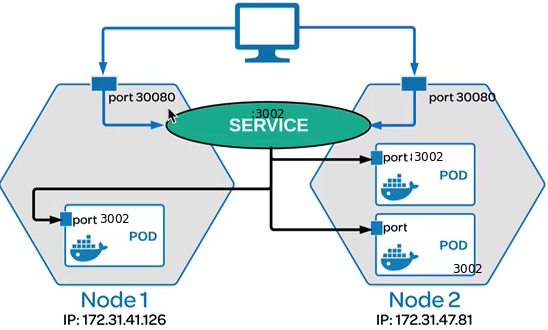

The service below is only reachable within the cluster: no ServiceType is specified, so it defaults to ClusterIP, which exposes the service on a cluster-internal IP only.

Diagram

Deployments

Deploy ecsdemo-* applications

The containers listen on port 3000, and native service discovery will be used to locate the running containers and communicate with them.

# Download deployable sample applications
mkdir -p ~/environment # place for deployables to EKS: applications, policies etc.
cd ~/environment
git clone https://github.com/brentley/ecsdemo-frontend.git
git clone https://github.com/brentley/ecsdemo-nodejs.git
git clone https://github.com/brentley/ecsdemo-crystal.git
### Deploy applications
# NodeJS Backend API
cd ~/environment/ecsdemo-nodejs
kubectl apply -f kubernetes/deployment.yaml
kubectl apply -f kubernetes/service.yaml
kubectl get deployment ecsdemo-nodejs # watch progress
# Crystal Backend API
cd ~/environment/ecsdemo-crystal
kubectl apply -f kubernetes/deployment.yaml
kubectl apply -f kubernetes/service.yaml
kubectl get deployment ecsdemo-crystal

Before deploying the frontend application, let's see how the frontend and backend Service definitions differ.

frontend service (ecsdemo-frontend.git):

apiVersion: v1
kind: Service
metadata:
  name: ecsdemo-frontend
spec:
  selector:
    app: ecsdemo-frontend
  type: LoadBalancer
  ports:
  - protocol: TCP
    port: 80
    targetPort: 3000

backend service (ecsdemo-nodejs.git):

apiVersion: v1
kind: Service
metadata:
  name: ecsdemo-nodejs
spec:
  selector:
    app: ecsdemo-nodejs
  type: ClusterIP # <- this is the default
  ports:
  - protocol: TCP
    port: 80
    targetPort: 3000

Notice there is no need to specify the service type for the backend, because the default type is ClusterIP. ClusterIP exposes the service on a cluster-internal IP, which makes it reachable only from within the cluster. That is why the frontend uses type: LoadBalancer.

The frontend service will try to create an ELB, so it needs access to the Elastic Load Balancing service. This access is granted by the AWSServiceRoleForElasticLoadBalancing service-linked role, which has to be created if it does not already exist:

aws iam get-role --role-name "AWSServiceRoleForElasticLoadBalancing" || aws iam create-service-linked-role --aws-service-name "elasticloadbalancing.amazonaws.com"

Deploy frontend service

cd ~/environment/ecsdemo-frontend
kubectl apply -f kubernetes/deployment.yaml
kubectl apply -f kubernetes/service.yaml
kubectl get deployment ecsdemo-frontend
# Get the service address
kubectl get service ecsdemo-frontend -o wide
ELB=$(kubectl get service ecsdemo-frontend -o json | jq -r '.status.loadBalancer.ingress[].hostname')
curl -m3 -v $ELB # you can also open this in a web browser

Scale backend services

kubectl scale deployment ecsdemo-nodejs --replicas=3
kubectl scale deployment ecsdemo-crystal --replicas=3
kubectl get deployments
NAME               DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
ecsdemo-crystal    3         3         3            3           38m
ecsdemo-frontend   1         1         1            1           20m
ecsdemo-nodejs     3         3         3            3           40m
# Watch scaling in action
$ i=3; kubectl scale deployment ecsdemo-nodejs --replicas=$i; kubectl scale deployment ecsdemo-crystal --replicas=$i
$ watch -d -n 0.5 kubectl get deployments

Check the browser: you should now see traffic flowing to multiple backend pods.

Delete the applications

cd ~/environment/ecsdemo-frontend
kubectl delete -f kubernetes/service.yaml
kubectl delete -f kubernetes/deployment.yaml
cd ~/environment/ecsdemo-crystal
kubectl delete -f kubernetes/service.yaml
kubectl delete -f kubernetes/deployment.yaml
cd ~/environment/ecsdemo-nodejs
kubectl delete -f kubernetes/service.yaml
kubectl delete -f kubernetes/deployment.yaml

Networking using Calico

- Install

The commands below download and apply the Calico manifest, which creates the calico-node DaemonSet in the kube-system namespace.

wget https://raw.githubusercontent.com/aws/amazon-vpc-cni-k8s/master/config/v1.2/calico.yaml
kubectl apply -f calico.yaml
kubectl get daemonset calico-node --namespace=kube-system

See more details on the eksworkshop.com website.

Network policy demo

Before creating network policies, we will create the required resources.

mkdir calico_resources && cd calico_resources
wget https://eksworkshop.com/calico/stars_policy_demo/create_resources.files/namespace.yaml
kubectl apply -f namespace.yaml # create namespace
# Download manifests for the other resources
wget https://eksworkshop.com/calico/stars_policy_demo/create_resources.files/management-ui.yaml
wget https://eksworkshop.com/calico/stars_policy_demo/create_resources.files/backend.yaml
wget https://eksworkshop.com/calico/stars_policy_demo/create_resources.files/frontend.yaml
wget https://eksworkshop.com/calico/stars_policy_demo/create_resources.files/client.yaml
kubectl apply -f management-ui.yaml
kubectl apply -f backend.yaml
kubectl apply -f frontend.yaml
kubectl apply -f client.yaml
kubectl get pods --all-namespaces

Resources we created:

- A namespace called stars

- frontend and backend replication controllers and services within stars namespace

- A namespace called management-ui

- Replication controller and service management-ui for the user interface seen on the browser, in the management-ui namespace

- A namespace called client

- client replication controller and service in client namespace

Pod-to-Pod communication

In Kubernetes, the pods by default can communicate with other pods, regardless of which host they land on. Every pod gets its own IP address so you do not need to explicitly create links between pods. This is demonstrated by the management-ui.

$ cat management-ui.yaml # excerpt, Service part only
kind: Service
metadata:
  name: management-ui
  namespace: management-ui
spec:
  type: LoadBalancer
  ports:
  - port: 80
    targetPort: 9001

# Get Management UI dns name

kubectl get svc -o wide -n management-ui

If you open the URL, you see a visual star of the connections between the pods B, C and F. The UI here shows the default behavior: all services are able to reach each other.

Apply network policies

By default, all pods can talk to each other, which is not what we should allow in production environments. So, let's apply policies:

cd calico_resources

wget https://eksworkshop.com/calico/stars_policy_demo/apply_network_policies.files/default-deny.yaml

cat default-deny.yaml # not all output shown below
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: default-deny
spec:
  podSelector:
    matchLabels: {}

# Create deny policies in the 'stars' and 'client' namespaces. The web browser won't show anything, because the UI no longer has access to the pods.

kubectl apply -n stars -f default-deny.yaml

kubectl apply -n client -f default-deny.yaml

# Create allow policies

wget https://eksworkshop.com/calico/stars_policy_demo/apply_network_policies.files/allow-ui.yaml

wget https://eksworkshop.com/calico/stars_policy_demo/apply_network_policies.files/allow-ui-client.yaml

cat allow-ui.yaml
kind: NetworkPolicy
apiVersion: extensions/v1beta1 # removed in Kubernetes 1.16; use networking.k8s.io/v1 on newer clusters
metadata:
  namespace: stars
  name: allow-ui
spec:
  podSelector:
    matchLabels: {}
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          role: management-ui

cat allow-ui-client.yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  namespace: client
  name: allow-ui
spec:
  podSelector:
    matchLabels: {}
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          role: management-ui

kubectl apply -f allow-ui.yaml

kubectl apply -f allow-ui-client.yaml

# The UI should show the connection stars again, but the pods still cannot communicate with each other.

Allow Directional Traffic

Network policies in Kubernetes use labels to select pods, and define rules on what traffic is allowed to reach those pods. They may specify ingress or egress or both. Each rule allows traffic which matches both the from and ports sections.

# Download
cd calico_resources
wget https://eksworkshop.com/calico/stars_policy_demo/directional_traffic.files/backend-policy.yaml
wget https://eksworkshop.com/calico/stars_policy_demo/directional_traffic.files/frontend-policy.yaml

backend-policy:

$ cat backend-policy.yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  namespace: stars
  name: backend-policy
spec:
  podSelector:
    matchLabels:
      role: backend
  ingress:
  - from:
    - podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 6379

frontend-policy:

$ cat frontend-policy.yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  namespace: stars
  name: frontend-policy
spec:
  podSelector:
    matchLabels:
      role: frontend
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          role: client
    ports:
    - protocol: TCP
      port: 80

Apply policies

# allow traffic from the frontend service to the backend service
kubectl apply -f backend-policy.yaml
# allow traffic from the client namespace to the frontend service
kubectl apply -f frontend-policy.yaml

Let’s have a look at the backend-policy. Its spec has a podSelector that selects all pods with the label role:backend, and allows ingress from all pods that have the label role:frontend and on TCP port 6379, but not the other way round. Traffic is allowed in one direction on a specific port number.

The frontend-policy is similar, except it allows ingress from namespaces that have the label role: client on TCP port 80.
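The policies above only restrict ingress. As noted earlier, a NetworkPolicy may also restrict egress through policyTypes. A sketch of a default-deny-egress policy for the stars namespace that still allows DNS; the file and policy names are hypothetical, and it assumes Calico (installed above) is enforcing policies:

```shell
#!/usr/bin/env bash
# Write a hypothetical default-deny-egress policy for the "stars" namespace:
# pods may only send DNS traffic (port 53); all other egress is dropped.
cat << EOF > default-deny-egress.yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: default-deny-egress
  namespace: stars
spec:
  podSelector: {}          # all pods in the namespace
  policyTypes:
  - Egress                 # this policy only restricts outgoing traffic
  egress:
  - ports:                 # no "to:" block, so any destination on these ports
    - protocol: UDP
      port: 53
    - protocol: TCP
      port: 53
EOF
```

Apply it with kubectl apply -f default-deny-egress.yaml. Egress is enforced on the sending pod, so on its own this would also block the frontend-to-backend traffic on TCP 6379 until a matching egress rule is added.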

Clean up

Remove the demo resources by deleting the namespaces, then uninstall Calico

kubectl delete ns client stars management-ui # delete namespaces
kubectl delete -f calico.yaml # uninstall Calico using the manifest downloaded earlier
# or, without the local copy:
kubectl delete -f https://raw.githubusercontent.com/aws/amazon-vpc-cni-k8s/master/config/v1.2/calico.yaml

Health Checks

By default, Kubernetes restarts a container if it crashes for any reason. Additionally, you can use probes:

- Liveness probes are used to know when a container is alive or dead. A container can stop working (for example, deadlock) while its process keeps running; when the liveness probe fails, the kubelet kills and restarts the container.

- Readiness probes are used to know when a pod is ready to serve traffic. Only when the readiness probe passes, a pod will receive traffic from the service. When readiness probe fails, traffic will not be sent to a pod until it passes.

- liveness probe

In the example below, the kubelet sends an HTTP GET request to the /health path on port 3000 of the container; if the handler returns a success code (200-399), the container is considered healthy.

mkdir healthchecks; cd $_

$ cat << EOF > liveness-app.yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-app
spec:
  containers:
  - name: liveness
    image: brentley/ecsdemo-nodejs
    livenessProbe:
      httpGet:
        path: /health
        port: 3000
      initialDelaySeconds: 5
      periodSeconds: 5
EOF

# Create a pod from the manifest
kubectl apply -f liveness-app.yaml
# Show the pod event history
kubectl describe pod liveness-app
# Check the pod status
kubectl get pod liveness-app
NAME           READY   STATUS    RESTARTS   AGE
liveness-app   1/1     Running   0          54s

# Introduce a failure: send a kill signal (SIGUSR1) to the application process (PID 1) in the container

kubectl exec -it liveness-app -- /bin/kill -s SIGUSR1 1

kubectl get pod liveness-app

NAME READY STATUS RESTARTS AGE

liveness-app 1/1 Running 1 11m

# Get logs

kubectl logs liveness-app # use -f for log tailing

kubectl logs liveness-app --previous # previous container logs

- readiness probe

cd healthchecks # if you are not already in this directory

cat << EOF > readiness-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: readiness-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: readiness-deployment
  template:
    metadata:
      labels:
        app: readiness-deployment
    spec:
      containers:
      - name: readiness-deployment
        image: alpine
        command: ["sh", "-c", "touch /tmp/healthy && sleep 86400"]
        readinessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5
          periodSeconds: 3
EOF

# create a deployment to test readiness probe

kubectl apply -f readiness-deployment.yaml

# Verify

kubectl get pods -l app=readiness-deployment

kubectl describe deployment readiness-deployment | grep Replicas:

# Introduce failure by deleting the file used by the probe

kubectl exec -it readiness-deployment-<POD-NAME> -- rm /tmp/healthy

kubectl get pods -l app=readiness-deployment

NAME READY STATUS RESTARTS AGE

readiness-deployment-59dcf5956f-jfpf6 1/1 Running 0 9m

readiness-deployment-59dcf5956f-mdqc6 0/1 Running 0 9m #traffic won't be routed to it

readiness-deployment-59dcf5956f-wfwgn 1/1 Running 0 9m

kubectl describe deployment readiness-deployment | grep Replicas:

Replicas: 3 desired | 3 updated | 3 total | 2 available | 1 unavailable

# Recreate the probe file

kubectl exec -it readiness-deployment-<POD-NAME> -- touch /tmp/healthy

- Clean up

kubectl delete -f liveness-app.yaml,readiness-deployment.yaml

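The example above uses an exec probe that checks for a file; instead, you can use a tcpSocket probe, which succeeds when the kubelet can open a TCP connection to the given port:

readinessProbe:
  tcpSocket:
    port: 8080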
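A complete Pod sketch using tcpSocket for both readiness and liveness, with the timing fields written out (periodSeconds 10, timeoutSeconds 1 and failureThreshold 3 are the Kubernetes defaults). The pod name tcp-probe-demo is hypothetical and nginx is assumed to listen on port 80:

```shell
#!/usr/bin/env bash
# Write a Pod manifest (hypothetical name "tcp-probe-demo") with tcpSocket probes.
cat << EOF > tcp-probe-demo.yaml
apiVersion: v1
kind: Pod
metadata:
  name: tcp-probe-demo
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
    readinessProbe:
      tcpSocket:
        port: 80            # ready once nginx accepts TCP connections
      periodSeconds: 10     # default
      timeoutSeconds: 1     # default
      failureThreshold: 3   # default
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 15
      periodSeconds: 10     # default
      failureThreshold: 3   # default: restart after 3 consecutive failures
EOF
```

Apply with kubectl apply -f tcp-probe-demo.yaml and watch READY in kubectl get pod tcp-probe-demo. With these values a container that stops accepting connections is restarted after roughly periodSeconds * failureThreshold = 30 seconds.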

Delete EKS cluster

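The EKS control plane is billed per hour ($0.20 per hour at the time of writing; AWS lowered it to $0.10 per hour in January 2020), so it makes sense to delete the cluster when you are done. The command below uses CloudFormation to delete the stacks created by eksctl, including eksctl-eksworkshop-eksctl-cluster.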

eksctl delete cluster --name=eksworkshop-eksctl

ECR Elastic Container Registry

Fully-managed Docker container registry

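aws ecr get-login --no-include-email --region eu-west-1 # AWS CLI v1 only: returns the docker login command for your registry

# AWS CLI v2 removed get-login; use instead:
# aws ecr get-login-password --region eu-west-1 | docker login --username AWS --password-stdin 111111111111.dkr.ecr.eu-west-1.amazonaws.com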

#credentials are valid for 12 hours

docker login -u AWS -p ey[**hash**]Z9 https://111111111111.dkr.ecr.eu-west-1.amazonaws.com

Create repository

aws ecr create-repository --repository-name hello

Repository endpoint

<AWS account ID>.dkr.ecr.<region>.amazonaws.com/<repo-name>:<tag>, for example:

111111111111.dkr.ecr.eu-west-1.amazonaws.com/hello:latest
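Scripts often need the full image URI when tagging and pushing. A sketch of a hypothetical helper that assembles it from the parts above; it assumes a commercial AWS region (China regions use the amazonaws.com.cn domain):

```shell
#!/usr/bin/env bash
# Hypothetical helper: assemble an ECR image URI; the tag defaults to "latest".
ecr_image_uri() {
  local account=$1 region=$2 repo=$3 tag=${4:-latest}
  printf '%s.dkr.ecr.%s.amazonaws.com/%s:%s\n' "$account" "$region" "$repo" "$tag"
}

ecr_image_uri 111111111111 eu-west-1 hello   # prints 111111111111.dkr.ecr.eu-west-1.amazonaws.com/hello:latest
```

For example, docker tag hello:latest "$(ecr_image_uri 111111111111 eu-west-1 hello)" followed by docker push with the same URI.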

Kubernetes plugins

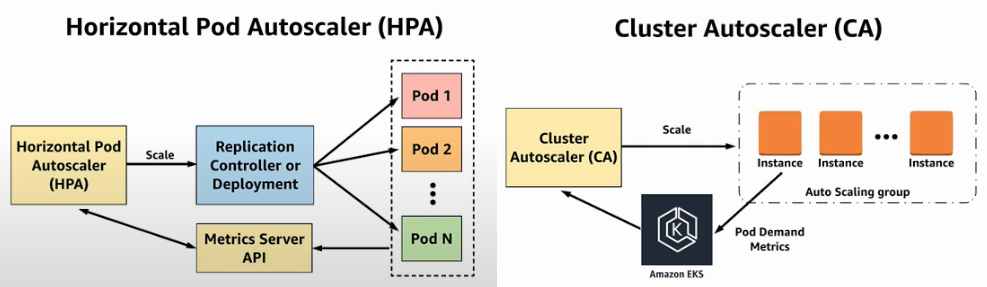

Auto-Scaling in Kubernetes

There are two main solutions for scaling a Kubernetes cluster based on load:

- Horizontal Pod Autoscaler (HPA) - native Kubernetes component to scale Deployment or ReplicaSet based on CPU or other metrics

- Cluster Autoscaler (CA) - plugin to auto-scale worker-nodes of Kubernetes cluster

Horizontal Pod Autoscaler (HPA)

Steps below demonstrate how to deploy HPA to EKS.

# Install Helm (Helm 2 installer script)
curl https://raw.githubusercontent.com/kubernetes/helm/master/scripts/get > get_helm.sh
chmod +x get_helm.sh
./get_helm.sh

Set up Tiller, the Helm server-side component (Helm 2 only; Helm 3 removed Tiller). It requires a ServiceAccount:

<syntaxhighlightjs lang=yaml>
cat << EOF > tiller-rbac.yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller # <- name of this service account
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin # <- assigned role
subjects:
- kind: ServiceAccount
  name: tiller
  namespace: kube-system
EOF
</syntaxhighlightjs>

Deploy Tiller

kubectl apply -f tiller-rbac.yaml
helm init --service-account tiller # Helm 2: installs Tiller using the service account above

Deploy Metrics Server, a cluster-wide aggregator of resource usage data. The metrics are collected from the kubelet on each node and can drive the scaling behavior of deployments.

helm install stable/metrics-server --name metrics-server --version 2.0.4 --namespace metrics
kubectl get apiservice v1beta1.metrics.k8s.io -o yaml # verify "all checks passed"

Create load and enable HPA autoscale

# kubectl < 1.18 ("kubectl run" creates a Deployment); --requests=cpu=200m requests 200 millicores for the pod
kubectl run php-apache --image=k8s.gcr.io/hpa-example --requests=cpu=200m --expose --port=80
# on newer kubectl, create the Deployment and Service with: kubectl apply -f https://k8s.io/examples/application/php-apache.yaml
# Set the "php-apache" deployment to autoscale horizontally (HPA) based on the "--cpu-percent" metric
kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10
# Check status
kubectl get hpa
# Run a load test
kubectl run -i --tty load-generator --image=busybox -- /bin/sh
# then, inside the busybox shell:
while true; do wget -q -O - http://php-apache; done
# In another terminal, watch the scaling effect
kubectl get hpa -w
NAME         REFERENCE               TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   0%/50%     1         10        1          1m
php-apache   Deployment/php-apache   321%/50%   1         10        1          2m    #<- load container started
php-apache   Deployment/php-apache   410%/50%   1         10        4          3m
php-apache   Deployment/php-apache   131%/50%   1         10        4          4m
php-apache   Deployment/php-apache   90%/50%    1         10        8          5m
php-apache   Deployment/php-apache   43%/50%    1         10        10         12m
php-apache   Deployment/php-apache   0%/50%     1         10        10         14m
php-apache   Deployment/php-apache   0%/50%     1         10        1          16m   #<- load container stopped
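The replica counts in the output above follow the HPA formula from the Kubernetes documentation, desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric), limited to --min and --max. A sketch of just that formula; hpa_desired is a hypothetical name, and it deliberately leaves out the controller's tolerance and rate limits:

```shell
#!/usr/bin/env bash
# Core HPA formula: desired = ceil(current * currentMetric / targetMetric),
# clamped to [min, max]. Ignores the 10% tolerance, scale-up rate limits and
# the scale-down stabilization window of the real controller.
hpa_desired() {
  local current=$1 metric=$2 target=$3 min=$4 max=$5
  # integer ceiling: ceil(a / b) == (a + b - 1) / b for positive integers
  local desired=$(( (current * metric + target - 1) / target ))
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}

hpa_desired 1 321 50 1 10   # 1 pod at 321% of a 50% target -> prints 7
```

The real controller went 1 -> 4 -> 8 -> 10 rather than jumping straight to 7, most likely because of its scale-up rate limit, and it kept 10 replicas at 43% because of the scale-down stabilization window (5 minutes by default).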

Cluster Autoscaler (CA)

Complementary projects:

- awslabs/karpenter Video

- kubernetes-sigs/descheduler

- kube-spot-termination-notice-handler, helm - watches only spot notifications and then drains the node it's running on

- aws-node-termination-handler - handles more events but requires EventBridge and SQS

- k8s-spot-rescheduler - moves pods from on-demand instances to spot instances when space is available

Cluster Autoscaler (CA) scales the worker nodes and works with all major public clouds. Below is an AWS deployment example. There are a number of considerations when using it; everything below is in the context of EKS:

- use the CA version that matches your Kubernetes version; note that for v1.18+ the Helm chart moved to a new repository

- instance types should have the same amount of RAM and number of CPU cores, since this is fundamental to CA's scaling calculations. Using mismatched instances types can produce unintended results

- ensure cluster nodes have the same capacity: for a spot instance fleet or ASG instance type overrides, the instance types should have the same vCPU and memory

- ensure every pod has resource requests defined, and set close to actual usage

- specify PodDisruptionBudget for kube-system pods and for application pods

- avoid using the Cluster autoscaler with more than 1000 node clusters

- ensure resource availability for the cluster autoscaler pod

- over-provision cluster to ensure head room for critical pods
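For the PodDisruptionBudget point above: CA only removes a node if the pods on it can be evicted without violating their budgets. A sketch for pods labelled app: nginx (as in the nginx-autoscaler example below); the name nginx-pdb is hypothetical, and policy/v1 needs Kubernetes 1.21 or later (older clusters use policy/v1beta1):

```shell
#!/usr/bin/env bash
# Write a PodDisruptionBudget (hypothetical name "nginx-pdb") for pods labelled app=nginx.
cat << EOF > nginx-pdb.yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: nginx-pdb
spec:
  maxUnavailable: 1      # evict at most one matching pod at a time
  selector:
    matchLabels:
      app: nginx
EOF
```

Apply with kubectl apply -f nginx-pdb.yaml. maxUnavailable: 1 is used instead of minAvailable: 1 because, with a single replica, minAvailable: 1 would block every voluntary eviction and so keep CA from ever draining that node.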

Allow the IAM instance role attached to the EKS worker EC2 instances (or an IRSA role) to interact with the Auto Scaling groups, using this policy:

<syntaxhighlightjs lang=json>
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "autoscaling:DescribeAutoScalingGroups",
        "autoscaling:DescribeAutoScalingInstances",
        "autoscaling:DescribeLaunchConfigurations",
        "autoscaling:DescribeTags",
        "autoscaling:SetDesiredCapacity",
        "autoscaling:TerminateInstanceInAutoScalingGroup",
        "ec2:DescribeLaunchTemplateVersions"
      ],
      "Resource": "*",
      "Effect": "Allow"
    }
  ]
}
</syntaxhighlightjs>

Deploy Autoscaler and Metrics server

# Optional: spot termination handler
SPOT_TERMINATION_HANDLER_VERSION=1.4.9
helm repo update
helm search repo stable/k8s-spot-termination-handler --version $SPOT_TERMINATION_HANDLER_VERSION
helm upgrade --install k8s-spot-termination-handler stable/k8s-spot-termination-handler \
  --version $SPOT_TERMINATION_HANDLER_VERSION \
  --namespace kube-system

helm repo add google-stable https://charts.helm.sh/stable # K8s <= v1.17: autoscaler, metrics-server (formerly https://kubernetes-charts.storage.googleapis.com/)
#helm repo add autoscaler https://kubernetes.github.io/autoscaler # K8s v1.18+ autoscaler
helm repo update

METRICS_SERVER_VERSION=2.11.1
helm upgrade --install metrics-server google-stable/metrics-server \
  --version $METRICS_SERVER_VERSION \
  --namespace kube-system

# CLUSTER and AWS_REGION must hold your cluster name and region
CLUSTER_AUTOSCALER_VERSION=8.0.0
helm upgrade --install aws-cluster-autoscaler google-stable/cluster-autoscaler \
  --version $CLUSTER_AUTOSCALER_VERSION \
  --set autoDiscovery.clusterName=$CLUSTER \
  --set awsRegion=$AWS_REGION \
  --set cloudProvider=aws \
  --namespace cluster-autoscaler \
  --create-namespace

# IRSA: append these flags to the cluster-autoscaler command above ($ROLE_ARN is the ARN of the IAM role for the service account)
  --set rbac.serviceAccountAnnotations."eks\.amazonaws\.com/role-arn"=$ROLE_ARN \
  --set rbac.serviceAccount.name="cluster-autoscaler"

Example of an Nginx deployment to create load

<syntaxhighlightjs lang=yaml>
cat << EOF > nginx-autoscaler.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-autoscaler
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        service: nginx
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx-scaleout
        resources:
          limits:
            cpu: 250m
            memory: 256Mi
          requests:
            cpu: 250m
            memory: 256Mi
EOF
</syntaxhighlightjs>
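To see CA add nodes, scale nginx-autoscaler beyond what the current nodes can hold: the scheduler places pods by their requests (250m CPU and 256Mi each here), and pods that fit nowhere stay Pending, which is what triggers CA. A rough sketch of the capacity arithmetic; pods_fit is a hypothetical name and the allocatable numbers are assumptions for an m5.large-sized node, so check the Allocatable section of kubectl describe node for real values:

```shell
#!/usr/bin/env bash
# Rough capacity check: how many pods with the given requests fit into a node's
# allocatable CPU (millicores) and memory (MiB). Ignores DaemonSet pods and the
# per-node max-pods limit.
pods_fit() {
  local alloc_mcpu=$1 alloc_mib=$2 req_mcpu=$3 req_mib=$4
  local by_cpu=$(( alloc_mcpu / req_mcpu ))
  local by_mem=$(( alloc_mib / req_mib ))
  echo $(( by_cpu < by_mem ? by_cpu : by_mem ))   # the scarcer resource wins
}

# assumed allocatable: 1930m CPU and 7000 MiB memory per node
pods_fit 1930 7000 250 256   # prints 7
```

With three such nodes roughly 21 replicas fit (fewer once system pods are counted), so kubectl scale deployment nginx-autoscaler --replicas=30 should leave some pods Pending and cause CA to add a node.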

References

- cluster-autoscaler git repo

References

- eksworkshop Official Amazon EKS Workshop

- Awesome-Kubernetes Git repo

- Amazon EKS worker node Packer build Git repo

- Use an HTTP Proxy to Access the Kubernetes API K8s docs

- EKS versions AWS docs