Docker

Containers taking over the world

Installation

General procedure:

- Make sure you don't already have Docker installed from your package manager

- The /var/lib/docker may be

To install the latest version of Docker with curl:

curl -sSL https://get.docker.com/ | sh

CentOS

sudo yum install bash-completion bash-completion-extras #optional, requires you log out

sudo yum install -y yum-utils device-mapper-persistent-data lvm2 #utils

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo #docker-ee.repo for EE edition

# --enable docker-ce-{edge|test} #for beta releases

sudo yum update

sudo yum clean all #not sure why this command is here

sudo yum install docker-ce

#old: sudo yum install -y --setopt=obsoletes=0 docker-ce-17.03.1.ce-1.el7.centos docker-ce-selinux-17.03.1.ce-1.el7.centos

sudo systemctl enable docker && sudo systemctl start docker && sudo systemctl status docker

yum-config-manager --disable jenkins #disable source to prevent accidental update ?jenkins?

Ubuntu 24.04

# Add Docker's official GPG key:

sudo apt-get update

sudo apt-get install ca-certificates curl

sudo install -m 0755 -d /etc/apt/keyrings

sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:

echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
  $(. /etc/os-release && echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

# Install the latest version

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

# Install a specific version

## List the available versions:

apt-cache madison docker-ce | awk '{ print $3 }'

5:28.3.3-1~ubuntu.24.04~noble

5:28.3.2-1~ubuntu.24.04~noble

VERSION_STRING=5:28.3.3-1~ubuntu.24.04~noble

sudo apt-get install docker-ce=$VERSION_STRING docker-ce-cli=$VERSION_STRING containerd.io docker-buildx-plugin docker-compose-plugin
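The version-pinning steps above can be scripted. The following is a minimal sketch, not a definitive procedure: the sample text stands in for real `apt-cache madison docker-ce` output so the snippet runs without apt, and the repository column in the sample is an assumption about the usual `package | version | source` layout. It relies on the newest version being listed first, as in the listing above.

```shell
#!/bin/sh
# Sample `apt-cache madison docker-ce` output (assumed layout: package | version | source).
# On a real host, use instead: madison=$(apt-cache madison docker-ce)
madison=' docker-ce | 5:28.3.3-1~ubuntu.24.04~noble | https://download.docker.com/linux/ubuntu noble/stable amd64 Packages
 docker-ce | 5:28.3.2-1~ubuntu.24.04~noble | https://download.docker.com/linux/ubuntu noble/stable amd64 Packages'

# Take the third field of the first row, which is the newest version in this listing.
VERSION_STRING=$(printf '%s\n' "$madison" | awk '{ print $3 }' | head -n 1)

# Print the install command instead of running it, so it can be reviewed first.
echo "sudo apt-get install docker-ce=$VERSION_STRING docker-ce-cli=$VERSION_STRING containerd.io"
```

Printing the command rather than executing it keeps the sketch safe to try on any machine.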

# Manage Docker as a non-root user

sudo groupadd docker

sudo usermod -aG docker $USER

newgrp docker # activate the group without logging off

Ubuntu 16.04, 18.04, 20.04

# Optional, clear out config files

sudo rm /etc/systemd/system/docker.service.d/docker.conf

sudo rm /etc/systemd/system/docker.service

sudo rm /etc/default/docker #environment file

# The Docker package is now called 'docker-ce'

sudo apt-get remove docker docker-engine docker.io containerd runc docker-ce # start fresh

sudo apt-get -yq install apt-transport-https ca-certificates curl gnupg-agent software-properties-common # apt over HTTPS

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add - # Docker official GPG key

sudo apt-key fingerprint 0EBFCD88 #verify

#add the repository

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" # or {edge|test}

sudo apt-get update # optional

# Option 1 - install latest

sudo apt-get install docker-ce docker-ce-cli containerd.io

# Option 2 - install fixed version

sudo apt-cache madison docker-ce # display available versions

sudo apt-get install docker-ce=<VERSION_STRING> docker-ce-cli=<VERSION_STRING> containerd.io

sudo apt-get install docker-ce=18.09.0~3-0~ubuntu-bionic docker-ce-cli=18.09.0~3-0~ubuntu-bionic containerd.io

sudo apt-mark hold docker-ce docker-ce-cli containerd.io

sudo apt-mark showhold # show packages that version upgrade has been put on hold

# Unhold

sudo apt-mark unhold docker-ce docker-ce-cli containerd.io

Newer versions (>18.09.0) of Docker come with 3 packages:

- containerd.io - daemon that interfaces with the OS API (Linux namespaces and cgroups, through runc, rather than LXC); it essentially decouples Docker from the OS and also provides container services for non-Docker container managers
- docker-ce - Docker daemon, the part that does all the management work; requires the other two on Linux
- docker-ce-cli - CLI tools to control the daemon; you can install them on their own if you want to control a remote Docker daemon

Example of how to run Jenkins docker image

Add a user to docker group

Add your user to the docker group to be able to run docker commands without sudo; the Docker socket is owned by the docker group.

sudo usermod -aG docker $(whoami)
# log in to the new docker group (to avoid having to log out / log in again)
newgrp docker

Reason

[root@piotr]$ ls -al /var/run/docker.sock
srw-rw----. 1 root docker 7 Jan 09:00 docker.sock
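To confirm that the group change has taken effect in the current session, something like the following can be used. This is a sketch only: it checks the groups of the running shell with `id -nG` and then tests the socket path shown above, which exists only on hosts where Docker is installed.

```shell
#!/bin/sh
# Check whether the current session already carries the docker group.
if id -nG | tr ' ' '\n' | grep -qx docker; then
    in_docker_group=yes
else
    in_docker_group=no
fi
echo "member of docker group: $in_docker_group"

# The socket must be readable and writable for docker commands to work without sudo.
sock=/var/run/docker.sock
if [ -S "$sock" ] && [ -r "$sock" ] && [ -w "$sock" ]; then
    echo "$sock is accessible without sudo"
else
    echo "$sock is missing or not accessible for this user"
fi
```

If the first check prints "no" right after `usermod`, the session has not picked up the new group yet; `newgrp docker` or a fresh login fixes that.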

HTTP proxy

Configure Docker if you run behind a proxy server. In this example a CNTLM proxy runs on the host machine, listening on localhost:3128. The example overrides the default docker.service settings by adding a systemd drop-in file.

First, create a systemd drop-in directory for the docker service:

sudo mkdir -p /etc/systemd/system/docker.service.d
sudo vi /etc/systemd/system/docker.service.d/http-proxy.conf
[Service]
Environment="HTTP_PROXY=http://proxy.example.com:80/"
# a later entry for the same variable overrides the previous one
Environment="HTTP_PROXY=http://172.31.1.1:3128/"
Environment="HTTPS_PROXY=http://172.31.1.1:3128/"
# If you have internal Docker registries that you need to contact without proxying, you can specify them via the NO_PROXY environment variable
Environment="NO_PROXY=localhost,127.0.0.1,10.6.96.172,proxy.example.com:80"

Flush changes:

$ sudo systemctl daemon-reload

Verify that the configuration has been loaded:

$ systemctl show --property=Environment docker
Environment=HTTP_PROXY=http://proxy.example.com:80/

Restart Docker:

$ sudo systemctl restart docker
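The drop-in file from the steps above can also be generated non-interactively. The sketch below is an illustration under stated assumptions: `DROPIN_DIR`, `PROXY_URL` and `NO_PROXY_LIST` are hypothetical variable names, and the directory defaults to a scratch location so the snippet can be tried without root. On a real host the target would be /etc/systemd/system/docker.service.d, written with sudo.

```shell
#!/bin/sh
# Hypothetical overrides; the defaults keep the sketch harmless on any machine.
DROPIN_DIR=${DROPIN_DIR:-$(mktemp -d)}
PROXY_URL=${PROXY_URL:-http://172.31.1.1:3128/}
NO_PROXY_LIST=${NO_PROXY_LIST:-localhost,127.0.0.1}

mkdir -p "$DROPIN_DIR"

# One Environment= line per variable; comments would have to go on their own lines.
cat > "$DROPIN_DIR/http-proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=$PROXY_URL"
Environment="HTTPS_PROXY=$PROXY_URL"
Environment="NO_PROXY=$NO_PROXY_LIST"
EOF

cat "$DROPIN_DIR/http-proxy.conf"
# Afterwards, on the real host: sudo systemctl daemon-reload && sudo systemctl restart docker
```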

Docker create and run, basic options

# Create a container without starting it
docker container create -it --name="my-container" ubuntu:latest /bin/bash
docker container start my-container
# Create and start a container in one step; /bin/bash is the command executed when the container is instantiated
docker run -it --name="mycentos" centos:latest /bin/bash
# -i   :- interactive mode (keep STDIN open)
# -t   :- allocate a pseudo-TTY attached to the current terminal
# -d   :- detached mode (daemon mode), runs the task in the background
# -p   :- publish an exposed container port to the host [ host_port(8080):container_exposedPort(80) ]
# --rm :- remove the container after the command has been executed
# --name="name_your_container"
# -e|--env MYVAR=123 :- pass a variable to the container, echo $MYVAR will print 123
# --privileged :- allows Docker to perform actions that are normally restricted,
#                 like binding a device path to an internal container path

Docker inspect

inspect image

docker image inspect centos:6

docker image inspect centos:6 --format '{{.ContainerConfig.Hostname}}' #just a single value

docker image inspect centos:6 --format '{{json .ContainerConfig}}' #json key/value output

docker image inspect centos:6 --format '{{.RepoTags}}' #shows all associated tags with the image

Using --format is similar to jq

inspect container

Shows current configuration state of a docker container or an image.

docker inspect <container_name> | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "172.17.0.3",

"IPAddress": "172.17.0.3",

Attach/exec to a docker process

If you are running eg. /bin/bash as a command you can get attached to this running docker process. Note that when you exit the container will stop.

docker attach mycentos

To avoid stopping a container on exit of attach command we can use exec command.

docker exec -it mycentos /bin/bash

Attaching directly to a running container and then exiting the shell will cause the container to stop. Executing another shell in a running container and then exiting that shell will not stop the underlying container process started on instantiation.

Entrypoint, CMD, PID1 and tini

Entrypoint and receiving signals

Receiving and handling signals is as important for applications running in containers as for any other application. Remember that a container is just a group of processes running on your host, so you need to take care of the signals sent to your applications.

A container management command such as docker stop sends a signal, configurable in the Dockerfile, to the entrypoint of your application; the default is SIGTERM - 15 - Termination (ANSI).

- ENTRYPOINT syntax

# exec form, requires a JSON array; IT SHOULD ALWAYS BE USED
ENTRYPOINT ["/app/bin/your-app", "arg1", "arg2"]
# shell form, always runs as a subcommand of '/bin/sh -c', thus your application will never see any signal sent to it
ENTRYPOINT /app/bin/your-app arg1 arg2

- ENTRYPOINT is a shell script

If the application is started from a shell script in the regular way, the shell spawns your application in a new process and the application won’t receive signals from Docker. Therefore we need to tell the shell to replace itself with your application using the exec command (see also the exec syscall). Use:

/app/bin/my-app      # incorrect, the signal won't be received by 'my-app'
exec /app/bin/my-app # correct way
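The effect of exec can be observed without Docker by comparing process IDs. This is a small sketch in which `sh -c 'echo app=$$'` stands in for the real application and both wrapper scripts are throwaway files made up for the demonstration.

```shell
#!/bin/sh
tmp=$(mktemp -d)

# Wrapper that starts the app as a child: the app gets a new PID.
cat > "$tmp/no-exec.sh" <<'EOF'
#!/bin/sh
echo "wrapper=$$"
sh -c 'echo "app=$$"'
echo "wrapper is still alive"
EOF

# Wrapper that replaces itself with the app: the app keeps the wrapper's PID.
cat > "$tmp/with-exec.sh" <<'EOF'
#!/bin/sh
echo "wrapper=$$"
exec sh -c 'echo "app=$$"'
EOF

no_exec_out=$(sh "$tmp/no-exec.sh")
with_exec_out=$(sh "$tmp/with-exec.sh")
rm -rf "$tmp"

printf 'without exec:\n%s\n\nwith exec:\n%s\n' "$no_exec_out" "$with_exec_out"
```

With exec the two PIDs match, which is why a signal delivered to the container's entrypoint reaches the application directly.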

- ENTRYPOINT uses exec with a pipe, which starts a subshell

If you exec into a pipeline, the shell is forced to run the command in a subshell, with the usual consequence: no signals reach the app.

exec /app/bin/your-app | tai64n # adding timestamps by piping through tai64n runs your command in a subshell

- Let another program be PID 1 and handle signalling

- tini

- dumb-init

ENTRYPOINT ["/tini", "-v", "--", "/app/bin/docker-entrypoint.sh"]

tini and dumb-init are also able to proxy signals to process groups, which technically allows you to pipe your output. However, your pipe target receives that signal at the same time, so you can’t log anything on cleanup lest you crave race conditions and SIGPIPEs. So it's better to avoid logging at termination altogether.

- Change signal that will terminate your container process

Listen for SIGTERM or set STOPSIGNAL in your Dockerfile.

vi Dockerfile
# docker stop will now send SIGINT (the same signal as Ctrl+C) instead of SIGTERM
STOPSIGNAL SIGINT
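Listening for SIGTERM in a shell entrypoint can be sketched as below. This is an illustration, not a reference implementation: app.sh is a hypothetical script, and the plain `kill -TERM` plays the role of `docker stop`, which sends SIGTERM unless STOPSIGNAL says otherwise.

```shell
#!/bin/sh
tmp=$(mktemp -d)

# Hypothetical entrypoint that shuts down cleanly when it receives SIGTERM.
cat > "$tmp/app.sh" <<'EOF'
#!/bin/sh
trap 'echo "SIGTERM received, shutting down"; exit 0' TERM
echo "ready"
# Sleep in the background and wait on it, so the trap fires without delay.
while :; do
    sleep 1 &
    wait $!
done
EOF

sh "$tmp/app.sh" > "$tmp/out.log" &
app_pid=$!

# Wait (at most about 10 seconds) until the app reports that its handler is installed.
tries=0
until grep -q ready "$tmp/out.log" 2>/dev/null || [ "$tries" -ge 10 ]; do
    sleep 1
    tries=$((tries + 1))
done

kill -TERM "$app_pid"   # the signal `docker stop` sends by default
wait "$app_pid"
app_status=$?
app_output=$(cat "$tmp/out.log")
rm -rf "$tmp"

echo "$app_output"
echo "exit status: $app_status"
```

The background sleep plus `wait` matters: a shell runs its trap only after the current foreground command returns, so a long foreground sleep would delay the shutdown.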

- References

- Why Your Dockerized Application Isn’t Receiving Signals

- runit alternative to tini

Tini

It's a tiny but valid init for containers:

- protects you from software that accidentally creates zombie processes

- ensures that the default signal handlers work for the software you run in your Docker image

- does so completely transparently! Docker images that work without Tini will work with Tini without any changes

- Docker 1.13+ has Tini included; to enable it, just pass the --init flag to docker run

- Understanding Tini

After spawning your process, Tini will wait for signals and forward those to the child process, and periodically reap zombie processes that may be created within your container. When the "first" child process exits (/your/program in the examples above), Tini exits as well, with the exit code of the child process (so you can check your container's exit code to know whether the child exited successfully).

Add Tini - generic dynamically linked binary (in the 10KB range)

ENV TINI_VERSION v0.18.0

ADD https://github.com/krallin/tini/releases/download/${TINI_VERSION}/tini /tini

RUN chmod +x /tini

ENTRYPOINT ["/tini", "--"]

# Run your program under Tini

CMD ["/your/program", "-and", "-its", "arguments"]

# or docker run your-image /your/program ...

Add Tini to Alpine based image

RUN apk add --no-cache tini
# Tini is now available at /sbin/tini
ENTRYPOINT ["/sbin/tini", "--"]

Existing entrypoint

ENTRYPOINT ["/tini", "--", "/docker-entrypoint.sh"]


Mount directory in container

We can mount a host directory into a Docker container so that its content is available inside the container.

docker run -it -v /mnt/sdb1:/opt/java pio2pio/java8 # syntax: -v /path/on/host:/path/in/container

Build image

Dockerfile

Each RUN instruction creates a new image layer (built in an intermediate container), so where possible we should join commands into a single RUN to end up with fewer layers.

$ wget jkd1.8.0_111.tar.gz

$ cat > Dockerfile <<- EOF #'<<-' heredoc with '-' minus ignores <tab> indent

ARG TAGVERSION=6 #only command allowed b4 FROM

FROM ubuntu:${TAGVERSION}

FROM ubuntu:latest #defines base image eg. ubuntu:16.04

LABEL maintainer="myname@gmail.com" #key/value pair added to a metadata of the image

ARG ARG1=value1

ENV ENVIRONMENT="prod"

ENV SHARE /usr/local/share #define env variables with syntax ENV space EnvironmentVariable space Value

ENV JAVA_HOME $SHARE/java

# COPY jkd1.8.0_111.tar.gz /tmp #works only with local files and directories (no URLs), copies them to the container filesystem, here to /tmp

# ADD http://example.com/file.txt

ADD jkd1.8.0_111.tar.gz / #add files into the image root folder, can add also URLs

# SHELL ["executable","params"] #overrides /bin/sh -c for RUN,CMD, etc..

# Executes commands during build process in a new layer E.g., it is often used for installing software packages

RUN mv /jkd1.8.0_111.tar.gz $JAVA_HOME

RUN apt-get update

RUN ["apt-get", "update", "-y"] #exec (JSON array) form, runs the command directly and does not require a shell executable

VOLUME /mymount_point #this command does not mount anything from a host, just creates a mountpoint

EXPOSE 80 #it doesn't automatically map the port to a hosts

#containers usually don't have system management eg. systemctl/service/init.d as they are designed to run a single process

#the entry point becomes the main command that starts the main process

ENTRYPOINT apachectl "-DFOREGROUND" #think about it as the MAIN_PURPOSE_OF_CONTAINER command.

# It always runs by default; it can only be replaced with 'docker run --entrypoint'

#Default command (or default arguments to ENTRYPOINT) run when a container starts. Only the last CMD in a Dockerfile takes effect; it can be overridden on the docker run command line.

CMD ["/bin/bash"]

# STOPSIGNAL

EOF
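The advice about joining RUN lines can be illustrated with a small generated Dockerfile. This is a sketch only: the base image tag and the package names are placeholders, and the file is written to a scratch directory rather than built, so the snippet runs without Docker.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# Hypothetical Dockerfile: one RUN instruction, hence one layer.
# Update, install and cleanup happen together, so the apt cache
# never ends up stored in an earlier layer.
cat > "$workdir/Dockerfile" <<'EOF'
FROM ubuntu:24.04
RUN apt-get update \
 && apt-get install -y --no-install-recommends curl ca-certificates \
 && rm -rf /var/lib/apt/lists/*
CMD ["/bin/bash"]
EOF

run_count=$(grep -c '^RUN' "$workdir/Dockerfile")
echo "RUN instructions: $run_count"
echo "build with: docker build -t myrepo/example $workdir"
```

Chaining with `&&` also stops the build at the first failing command, which three separate RUN lines would do as well, but at the cost of two extra layers.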

Build

docker build --tag myrepo/java8 .   #-f points to a custom Dockerfile name eg. -f Dockerfile2
# myrepo :- DockerHub username
# java8  :- image name
# .      :- directory where the Dockerfile is
docker build -t myrepo/java8 . --pull --no-cache --squash
# --pull     :- always download a fresh copy of the base image, even if a local copy exists
# --no-cache :- don't use the cache to build, forcing a rebuild of all interim layers
# --squash   :- after the build, squash all layers into a single layer
docker images              #list images
docker push myrepo/java8   #upload the image to the DockerHub repository

--squash is available only on a Docker daemon with experimental features enabled.

Manage containers and images

Run a container

When you run a container, a new container is created from an image that has already been built or is otherwise available, and is then put into the running state.

- -d detached mode, the container runs in the background until its main process (the CMD or the passed-in command) exits

- -i interactive mode, keeps STDIN open so that, combined with -t, you get a shell in the container

# docker container run [OPTIONS] IMAGE [COMMAND] [ARG...] # usage
docker container run -it --name mycentos centos:6 /bin/bash
docker run -it pio2pio/java8 # the command part is optional
# -i :- run in interactive mode, then run the command /bin/bash
# --rm :- delete the container after the run
# --publish | -p 8080:80 :- publish exposed container port 80 on port 8080 of the docker-host
# --publish-all | -P :- publish all exposed container ports to random ports >32768

List images

ctop                     #top for containers
docker ps -a             #list containers
docker image ls          #list images
docker images            #short form of the command above
docker images --no-trunc
docker images -q         #--quiet
docker images --filter "before=centos:6"
# List exposed ports on a container
docker port CONTAINER [PRIVATE_PORT[/PROTOCOL]]
docker port web2
80/tcp -> 0.0.0.0:81

Search images in remote repository

Search DockerHub for images. You may need to run `docker login` first.

IMAGE=ubuntu
docker search $IMAGE
NAME                             DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
ubuntu                           Ubuntu is a Debian-based Linux operating sys…   8206    [OK]
dorowu/ubuntu-desktop-lxde-vnc   Ubuntu with openssh-server and NoVNC            210                [OK]
rastasheep/ubuntu-sshd           Dockerized SSH service, built on top of offi…   167                [OK]
IMAGE=apache
docker search $IMAGE --filter stars=50 # search images that have 50 or more stars
docker search $IMAGE --limit 10 # display top 10 images

List all available tags

IMAGE=nginx

wget -q https://registry.hub.docker.com/v1/repositories/${IMAGE}/tags -O - | sed -e 's/[][]//g' -e 's/"//g' -e 's/ //g' | tr '}' '\n' | awk -F: '{print $3}' | sort -V

Pull images

# syntax: <name>:<tag>
docker pull hello-world:latest                  # pull latest
docker pull --all-tags hello-world              # pull all tags
docker pull --disable-content-trust hello-world # disable verification
docker images --digests                         # displays the sha256: digest of an image
# Dangling images - transitional images

from Amazon ECR

- Docker login to ECR service using IAM

docker login does not support native IAM authentication methods. Instead, use the command below, which retrieves, decodes and converts the IAM authorization token into a ready-made docker login command. The resulting login credentials carry your current IAM user/role permissions: if your IAM user can only pull from ECR, then after docker login you still won't be able to push images to the registry. An example error you may get is: not authorized to perform: ecr:InitiateLayerUpload

Log in to the ECR service; your IAM user needs the relevant pull/push permissions. Note that aws ecr get-login exists only in AWS CLI v1.

eval $(aws ecr get-login --region eu-west-1 --no-include-email)

# aws ecr get-login # generates below docker command with the login token

# docker login -u AWS -p **token** https://$ACCOUNT.dkr.ecr.us-east-1.amazonaws.com # <- output

Docker login to a single ECR repository, min awscli v1.17

ACCOUNT=111111111111
REPOSITORY=myrepo
aws ecr get-login-password --region eu-west-1 | docker login --username AWS --password-stdin $ACCOUNT.dkr.ecr.eu-west-1.amazonaws.com/$REPOSITORY

push to Amazon ECR

# List images
$ docker images
REPOSITORY                                                 TAG     IMAGE ID       CREATED         SIZE
ansible-aws                                                2.0.1   b09807c20c96   5 minutes ago   570MB
111111111111.dkr.ecr.eu-west-1.amazonaws.com/ansible-aws   1.0.0   9bf35fe9cc0e   4 weeks ago     515MB

# Tag an image 'b09807c20c96'
docker tag b09807c20c96 111111111111.dkr.ecr.eu-west-1.amazonaws.com/ansible-aws:2.0.1

# List images, to verify your newly tagged one
$ docker images
REPOSITORY                                                 TAG     IMAGE ID       CREATED         SIZE
111111111111.dkr.ecr.eu-west-1.amazonaws.com/ansible-aws   2.0.1   b09807c20c96   6 minutes ago   570MB   # <- new tagged image
ansible-aws                                                2.0.1   b09807c20c96   6 minutes ago   570MB
111111111111.dkr.ecr.eu-west-1.amazonaws.com/ansible-aws   1.0.0   9bf35fe9cc0e   4 weeks ago     515MB

# Push an image to ECR
docker push 111111111111.dkr.ecr.eu-west-1.amazonaws.com/ansible-aws:2.0.1
The push refers to repository [111111111111.dkr.ecr.eu-west-1.amazonaws.com/ansible-aws]
2c405c66e675: Pushed
...
77cae8ab23bf: Layer already exists
2.0.1: digest: sha256:111111111193969807708e1f6aea2b19a08054f418b07cf64016a6d1111111111 size: 1796

Save and import image

In order to move an image to another filesystem or host, we can save it into a .tar archive.

# Export
docker image save myrepo/centos:v2 > mycentos.v2.tar
tar -tvf mycentos.v2.tar
# Import
docker image import mycentos.v2.tar <new_image_name> # note: import expects a filesystem archive (as made by docker export); for a docker save archive prefer load, below
# Load from a stream
docker load < mycentos.v2.tar #or --input mycentos.v2.tar to avoid redirections

Export aka commit container into image

Let's say we want to modify the stock image centos:6 by installing Apache interactively, setting it to autostart and then exporting the result as a new image. Let's do it!

docker pull centos:6
docker container run -it --name apache-centos6 centos:6
# Interactively do: yum -y update; yum install -y httpd; chkconfig httpd on; exit

# Save container changes - option1
docker commit -m "added httpd daemon" -a "Piotr" b237d65fd197 newcentos:withapache #creates a new image from a container's changes
docker commit -m "added httpd daemon" -a "Piotr" <container_name> <repo>/<image>:<tag>
# -a :- author

# Save container changes - option2
docker container export apache-centos6 > apache-centos6.tar
docker image import apache-centos6.tar newcentos:withapache

docker images
REPOSITORY   TAG          IMAGE ID       CREATED          SIZE
newcentos    withapache   ea5215fb46ed   50 seconds ago   272MB

docker image history newcentos:withapache
IMAGE          CREATED         CREATED BY   SIZE    COMMENT
ea5215fb46ed   2 minutes ago                272MB   Imported from -

The two ways of creating an image from a container differ:

- docker container commit - adds the container's changes as a new layer on top of the original image, keeping its layer history and metadata
- docker container export - writes out only the container's flattened filesystem, so the imported image has a single layer and no history (see the history output above); this seems to create a smaller image

Tag images

Tags are usually used to give an official image a new name before we modify it. This allows us to create a new image, run a new container from the tag, and delete the original image without affecting the new image or a container started from the newly tagged image.

docker image tag #long version
docker tag centos:6 myucentos:v1 #adds a second name, myucentos:v1, that points to the same image as centos:6

Tagging allows you to modify the repository name and manages references to images located on a filesystem.

History of an image

We can display the history of the layers that make up an image by showing the interim images built, in creation order. It shows only layers created on the local filesystem.

docker image history myrepo/centos:v2

Stop and delete all containers

docker stop $(docker ps -aq) && docker rm $(docker ps -aq)

Delete image

$ docker images
REPOSITORY     TAG      IMAGE ID       CREATED             SIZE
company-repo   0.1.0    f796d7f843cc   About an hour ago   888MB
<none>         <none>   04fbac2fdf48   3 hours ago         565MB
ubuntu         16.04    7aa3602ab41e   3 weeks ago         115MB

# Delete image
$ docker rmi company-repo:0.1.0
Untagged: company-repo:0.1.0
Deleted: sha256:e5cca6a080a5c65eacff98e1b17eeb7be02651849b431b46b074899c088bd42a
..
Deleted: sha256:bc7cda232a2319578324aae620c4537938743e46081955c4dd0743a89e9e8183

# Prune image - delete dangling (temp/interim) images.
# These are not associated with end-product image or containers.
docker image prune
docker image prune -a #remove all images not associated with any container

Cleaning up space by removing docker objects

This applies both to standalone Docker hosts and to swarm nodes.

docker system df #show disk usage

TYPE TOTAL ACTIVE SIZE RECLAIMABLE

Images 1 0 131.7MB 131.7MB (100%)

Containers 0 0 0B 0B

Local Volumes 0 0 0B 0B

Build Cache 0 0 0B 0B

docker network ls #note all networks below are system created, so won't get removed

NETWORK ID NAME DRIVER SCOPE

452b1c428209 bridge bridge local

528db1bf80f1 docker_gwbridge bridge local

832c8c6d73a5 host host local

t8jxy5vsy5on ingress overlay swarm

815a9c2c4005 none null local

docker system prune #removes objects created by a user only, on the current node only

#add --volumes to remove them as well

WARNING! This will remove:

- all stopped containers

- all networks not used by at least one container

- all dangling images

- all dangling build cache

Are you sure you want to continue? [y/N]

docker system prune -a --volumes #remove all

Docker Volumes

Docker's 'copy-on-write' philosophy drives both performance and efficiency. It's only the top layer that is writable and it's a delta of underlying layer.

Volumes can be mounted to your container instances from your underlying host systems.

_data volumes, since they are not controlled by the storage driver (since they represent a file/directory on the host filesystem /var/lib/docker), are able to bypass the storage driver. As a result, their contents are not affected when a container is removed.

Volumes are data mounts created on a host in the /var/lib/docker/volumes/ directory and referred to by name in a Dockerfile.

docker volume ls #list volumes created by VOLUME directive in a Dockerfile

sudo tree /var/lib/docker/volumes/ #list volumes on host-side

docker volume create my-vol-1

docker volume inspect my-vol-1

[

{

"CreatedAt": "2019-01-17T08:47:01Z",

"Driver": "local",

"Labels": {},

"Mountpoint": "/var/lib/docker/volumes/my-vol-1/_data",

"Name": "my-vol-1",

"Options": {},

"Scope": "local"

}

]

Using volumes with Swarm services

docker container run --name web1 -p 80:80 --mount source=my-vol-1,target=/internal-mount httpd #container
docker service create --name web1 -p 80:80 --mount source=my-vol-1,target=/internal-mount --replicas 3 httpd #swarm service
# --mount :- --volume|-v is not supported with services; the volume is created on swarm nodes when needed,
#            but files are not replicated between them
docker exec -it web1 /bin/bash #connect to the container
root@c123:/ echo "Created when connected to container: volume-web1" > /internal-mount/local.txt; exit
# prove the file is in the volume created on the host filesystem
user@dockerhost$ cat /var/lib/docker/volumes/my-vol-1/_data/local.txt

- Host storage mount

A bind mount binds a host filesystem directory to a container directory. It is not the same as mounting a volume, which would require a volume and its mount point on the host.

mkdir /home/user/web1
echo "web1 index" > /home/user/web1/index.html
docker container run -d --name testweb -p 80:80 --mount type=bind,source=/home/user/web1,target=/usr/local/apache2/htdocs httpd
curl http://localhost:80

Removing a service is not going to remove the volume unless you delete the volume itself. In that case it will be removed from all swarm nodes.

Networking

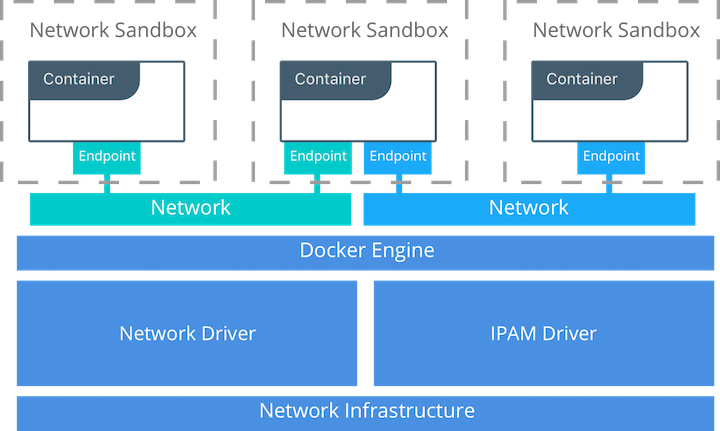

Container Network Model

It's a concept of network implementation built on multiple private networks, overlaid across multiple hosts and managed by IPAM, a protocol that keeps track of and provisions addresses.

Main 3 components:

- sandbox - contains the configuration of a container's network stack, incl. management of interfaces, routing and DNS. An implementation of a Sandbox could be, for example, a Linux Network Namespace. A Sandbox may contain many endpoints from multiple networks.

- endpoint - joins a Sandbox to a Network (interfaces, switches, ports, etc.) and belongs to only one network at a time. The Endpoint construct exists so the actual connection to the network can be abstracted away from the application. This helps maintain portability.

- network - a collection of endpoints that can communicate directly (bridges, VLANs, etc.); it can consist of 1 to N endpoints

- IPAM (Internet Protocol Address Management)

Managing addresses across multiple hosts on separate physical networks, while providing routing to the underlying swarm networks externally, is the IPAM problem for Docker. Depending on the network driver choice, IPAM is handled at different layers in the stack. Network drivers enable IPAM through DHCP drivers or plugin drivers, so complex setups that would normally have overlapping addresses are supported.


Publish exposed container/service ports

- Publishing modes

- host

- set using --publish mode=host,published=8080,target=80; makes ports available only on the underlying host system, not outside the host the service may exist on; defeats the routing mesh, so the user is responsible for routing

- ingress

- responsible for the routing mesh; makes sure all published ports are available on all hosts in the swarm cluster, regardless of whether a service replica is running on them or not

# List exposed ports on a container

docker port CONTAINER [PRIVATE_PORT[/PROTOCOL]]

docker port web2

80/tcp -> 0.0.0.0:81

# Publish port

host : container

\ : /

docker container run -d --name web1 --publish 81:80 httpd

# --publish | -p :- publish to host exposed container port

# 81 :- port on a host, can use range eg. 81-85, so based on port availability port will be used

# 80 :- exposed port on a container

ss -lnt

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 ::1:25 :::*

LISTEN 0 128 :::81 :::*

LISTEN 0 128 :::22 :::*

docker container run -d --name testweb1 --publish-all httpd

# --publish-all | -P publish all container exposed ports to random ports >32768

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c63efe9cbb94 httpd "httpd-foreground" 2 sec.. Up 1 s 80/tcp testweb #port exposed but not published

cb0711134eb5 httpd "httpd-foreground" 4 sec.. Up 2 s 0.0.0.0:32769->80/tcp testweb1 #port exposed and published to host:32769

Network drivers

Default network for a single host docker-host is bridge network.

- List of Native (part of Docker Engine) Network Drivers

- bridge

- default on stand-alone hosts; it's a private network internal to the host system; all containers on this host using the bridge network can communicate; external access is granted by port exposure or by static routes added with the host as the gateway for that network

- none

- used when a container does not need any networking; it can still be accessed from the host using the docker attach [containerID] or docker exec -it [containerID] commands

- host

- aka Host Only Networking, only accessible via the underlying host; access to services can be provided by exposing ports to the host system

- overlay

- swarm scope driver, allows communication between all Docker daemons in a cluster, self-extending if needed, managed by the Swarm manager; it's the default mode of Swarm communication

- ingress

- extended network across all nodes in the cluster; a special overlay network that load balances network traffic amongst a given service's working nodes; maintains a list of all IP addresses from nodes that participate in that service (using the IPVS module) and, when a request comes in, routes it to one of them for the indicated service; provides the 'routing mesh' that allows services to be exposed to the external network without having a replica running on every node in the Swarm

- docker gateway bridge

- special bridge network that allows overlay networks (incl. ingress) to access an individual Docker daemon's physical network; every container run within a service is connected to the local Docker daemon's host network; automatically created when a swarm is initialised or joined with the docker swarm init or docker swarm join commands.

- Docker interfaces

docker0 - adapter installed by default during Docker setup; it is assigned an address range that determines the local host IPs available to the containers running on it

- Inspect the default bridge network

docker network ls #default networks list

NETWORK ID NAME DRIVER SCOPE

130833da0920 bridge bridge local

528db1bf80f1 docker_gwbridge bridge local

832c8c6d73a5 host host local

t8jxy5vsy5on ingress overlay swarm #'ingress' special network 1 per cluster

815a9c2c4005 none null local

docker network inspect bridge #bridge is a default network containers are deployed to

docker container run -d --name web1 -p 8080:80 httpd #publish container port :80 -> :8080 on the docker-host

docker container inspect web1 | grep IPAdd

IPAddr=$(docker container inspect --format="{{.NetworkSettings.Networks.bridge.IPAddress}}" web1) #get container ip

curl http://$IPAddr

- Create bridge network

docker network create --driver=bridge --subnet=192.168.1.0/24 --opt "com.docker.network.driver.mtu"=1501 deviceeth0
docker network ls
docker network inspect deviceeth0

- Create overlay network

docker network create --driver=overlay --subnet=192.168.1.0/24 --gateway=192.168.1.1 overlay0
docker network ls
NETWORK ID     NAME              DRIVER    SCOPE
130833da0920   bridge            bridge    local
528db1bf80f1   docker_gwbridge   bridge    local
832c8c6d73a5   host              host      local
t8jxy5vsy5on   ingress           overlay   swarm
815a9c2c4005   none              null      local
2x6bq1czzdc1   overlay0          overlay   swarm

- Inspect network

docker network inspect overlay0

[

{

"Name": "overlay0",

"Id": "2x6bq1czzdc102sl6ge7gpm3w",

"Created": "2019-01-19T11:24:02.146339562Z",

"Scope": "swarm",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "192.168.1.0/24",

"Gateway": "192.168.1.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": null,

"Options": {

"com.docker.network.driver.overlay.vxlanid_list": "4097"

},

"Labels": null

}

]

- Inspect container network

docker container inspect testweb --format {{.HostConfig.NetworkMode}}

overlay0

docker container inspect testweb --format {{.NetworkSettings.Networks.dev_bridge.IPAddress}}

192.168.1.3

Connect/disconnect from a network can be done when a container is running. Connect won't disconnect from a current network.

docker network connect --ip=192.168.1.10 deviceeth0 web1

docker container inspect --format="{{.NetworkSettings.Networks.bridge.IPAddress}}" web1

docker container inspect --format="{{.NetworkSettings.Networks.deviceeth0.IPAddress}}" web1

curl http://$(docker container inspect --format="{{.NetworkSettings.Networks.deviceeth0.IPAddress}}" web1)

docker network disconnect deviceeth0 web1
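
A small helper can look up a container's address on a named network so the result can be passed straight to curl. This is only a sketch: the function name container_ip is hypothetical, and it assumes the container is attached to the network given. It uses the template function index, so network names containing dashes also work.

```shell
# container_ip CONTAINER NETWORK   (hypothetical helper name)
# Print the IP address that CONTAINER holds on NETWORK.
# "index" looks the network up by key, so names such as my-net also work.
container_ip() {
  docker container inspect \
    --format "{{(index .NetworkSettings.Networks \"$2\").IPAddress}}" "$1"
}

# Usage (needs a running container web1 attached to deviceeth0):
#   curl "http://$(container_ip web1 deviceeth0)"
```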

Overlay network in Swarm cluster

An overlay network can be created, removed and updated like any other Docker object. It allows communication between services (containers); the --gateway IP address is used to reach outside networks, e.g. the Internet or the host network. An overlay network created on the manager host gets recreated on a worker node only when a service that uses it is scheduled there. See below.

swarm-mgr$ docker network create --driver=overlay --subnet=192.168.1.0/24 --gateway=192.168.1.1 overlay0
swarm-mgr$ docker service create --name web1 -p 8080:80 --network=overlay0 --replicas 2 httpd
uvxymzdkcfwvs2oznbnk7nv03
overall progress: 2 out of 2 tasks
1/2: running [==================================================>]
2/2: running [==================================================>]
swarm-wkr$ docker network ls
NETWORK ID     NAME              DRIVER    SCOPE
ba175ebd2a6f   bridge            bridge    local
a5848f607d8c   docker_gwbridge   bridge    local
fccfb9c1fdc3   host              host      local
t8jxy5vsy5on   ingress           overlay   swarm
127b10783faa   none              null      local
2x6bq1czzdc1   overlay0          overlay   swarm
# remove network, only affects newly created services, not the running ones
swarm-mgr$ docker service update --network-rm=overlay0 web1

DNS

docker container run -d --name testweb1 -P --dns=8.8.8.8 \

--dns=8.8.4.4 \

--dns-search "mydomain.local" \

httpd

# -P :- publish-all exposed ports to random port >32768

docker container exec -it testweb1 /bin/bash -c 'cat /etc/resolv.conf'

search us-east-2.compute.internal

nameserver 8.8.8.8

nameserver 8.8.4.4

# System wide settings, requires docker.service restart

cat > /etc/docker/daemon.json <<EOF

{

"dns": ["8.8.8.8", "8.8.4.4"]

}

EOF

sudo systemctl restart docker.service #required

Examples

Lint - best practices

$ docker run --rm -i hadolint/hadolint < Dockerfile
/dev/stdin:9:16 unexpected newline expecting "\ ", '=', a space followed by the value for the variable 'MAC_ADDRESS', or at least one space

Default project

As good practice, all Docker files should be kept under source control. A basic, self-explanatory structure can look like the one below, and the skeleton can be created with this command:

mkdir APROJECT && d=$_; touch $d/{build.sh,run.sh,Dockerfile,README.md,VERSION};mkdir $d/assets; touch $_/{entrypoint.sh,install.sh}

└── APROJECT
    ├── assets
    │   ├── entrypoint.sh
    │   └── install.sh
    ├── build.sh
    ├── Dockerfile
    ├── README.md
    ├── run.sh
    └── VERSION

Dockerfile

A Dockerfile is simply a build file.

Semantics

entrypoint - Container config: what to start when this image is run.

entrypoint and cmd - Docker allows you to define an Entrypoint and Cmd which you can mix and match in a Dockerfile. Entrypoint is the executable, and Cmd are the arguments passed to the Entrypoint. The Dockerfile schema is quite lenient and allows users to set Cmd without Entrypoint, which means that the first argument in Cmd will be the executable to run.
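
To make the split between the executable and its default arguments concrete, the sketch below writes a tiny Dockerfile. The helper name write_ping_demo, the alpine:3 base image and the pingdemo tag are illustrative assumptions, not something taken from these notes.

```shell
# write_ping_demo DIR   (hypothetical helper name)
# Writes a Dockerfile where ENTRYPOINT is the executable and CMD its default argument.
write_ping_demo() {
  mkdir -p "$1" &&
  cat > "$1/Dockerfile" <<'EOF'
FROM alpine:3
# ENTRYPOINT: the executable that always runs
ENTRYPOINT ["ping", "-c", "3"]
# CMD: default arguments, replaced by anything given after the image name
CMD ["localhost"]
EOF
}

# Usage (requires Docker):
#   write_ping_demo ./pingdemo && docker build -t pingdemo ./pingdemo
#   docker run --rm pingdemo               # runs: ping -c 3 localhost
#   docker run --rm pingdemo example.com   # runs: ping -c 3 example.com
```

Arguments given after the image name replace only Cmd; the Entrypoint stays in place unless --entrypoint is passed to docker run.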

User management

RUN addgroup --gid 1001 jenkins -q
# --gid adds the user to group 1001
# --gecos sets the additional information field; in this case it is just empty
# --disabled-password is like --disabled-login, but logins are still possible (for example using SSH RSA keys), just not with password authentication
RUN adduser --gid 1001 --home /tank --disabled-password --gecos '' --uid 1001 jenkins
# USER sets the user for the following RUN, CMD and ENTRYPOINT instructions
USER jenkins:jenkins
# WORKDIR changes the cwd for the following RUN, CMD, ENTRYPOINT, COPY and ADD instructions
WORKDIR /tank

Multiple stage build

Introduced in Docker 17.05, multi-stage builds allow several FROM statements in one Dockerfile, so a later stage can copy artifacts from an earlier one.

FROM microsoft/aspnetcore-build AS build-env
WORKDIR /app
# copy csproj and restore as distinct layers
COPY *.csproj ./
RUN dotnet restore
# copy everything else and build
COPY . ./
RUN dotnet publish -c Release -o output
# build runtime image
FROM microsoft/aspnetcore
WORKDIR /app
# multi stage: copy files from the previous stage [build-env]
COPY --from=build-env /app/output .
ENTRYPOINT ["dotnet", "LetsKube.dll"]

Squash an image

Docker uses a union filesystem that lets multiple layers share common content, with changes applied in the top layer overriding the layers below.

There is no official way to flatten layers into a single storage layer or to minimize an image size (2017). Below is just a practical approach.

- Start container from an image

- Export a container to .tar with all its file systems

- Import container with new image name

Once the process completes and the original image gets deleted, the docker image history command for the new image will show only one layer. Often the image will be smaller.

# run a container from an image
docker run myweb:v3
# export container to .tar
docker export <contr_name> > myweb.v3.tar
# import the .tar as a new, single-layer image
docker import myweb.v3.tar myweb:v4
# not part of squashing: save/load work on images and keep all their layers
docker save <image id> > image.tar
docker load -i image.tar
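
The export/import steps can also be chained without an intermediate .tar file. The function below is a sketch under assumptions: the name squash_image is hypothetical, and it uses docker create instead of docker run so the container never has to start. Keep in mind that docker import keeps only the filesystem, so CMD, ENTRYPOINT, ENV and EXPOSE from the source image are not carried over.

```shell
# squash_image SRC_IMAGE DST_IMAGE   (hypothetical helper name)
# Flattens SRC_IMAGE into a single-layer DST_IMAGE via export/import.
squash_image() (
  cid="$(docker create "$1")" || exit 1        # create the container, do not start it
  docker export "$cid" | docker import - "$2"  # stream the filesystem into a new image
  rc=$?
  docker rm "$cid" >/dev/null                  # remove the temporary container
  exit "$rc"
)

# Usage:
#   squash_image myweb:v3 myweb:v4 && docker image history myweb:v4
```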

- Resources

- docker-squash GitHub

Gracefully stop / kill a container

All below are only notes.

Trap ctrl_c, then kill/rm the container.

- --init

- --sig-proxy (default true) proxies received signals to the process; it only works when --tty=false
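
One way to read the "trap ctrl_c then kill/rm" note is a wrapper like the one below. It is a sketch, not a tested recipe from these notes: the function name run_with_cleanup is hypothetical, and exit code 130 is simply the conventional shell status for an interrupted command.

```shell
# run_with_cleanup NAME [docker run args...]   (hypothetical helper name)
# Runs a container in the foreground and removes it when the run ends or
# when the shell receives Ctrl-C (INT) or TERM.
run_with_cleanup() (
  name="$1"; shift
  cleanup() { docker rm -f "$name" >/dev/null 2>&1; }
  trap 'cleanup; exit 130' INT TERM
  # --init runs a small init process as PID 1 that forwards signals and reaps zombies
  docker run --init --name "$name" "$@"
  rc=$?
  cleanup
  exit "$rc"
)

# Usage:
#   run_with_cleanup web httpd
```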

Proxy

If you are behind a corporate proxy, you should use the Docker client ~/.docker/config.json config file. It requires Docker 17.07 minimum version.

{

"proxies":

{

"default":

{

"httpProxy": "http://10.0.0.1:3128",

"httpsProxy": "http://10.0.0.1:3128",

"noProxy": "localhost,127.0.0.1,*.test.example.com,.example2.com"

}

}

}

More details can be found in the Docker documentation.

Insecure registries

These can be added in different places; the order below follows the latest practices and versions.

- docker-ce 18.06, in /etc/docker/daemon.json

{

"insecure-registries" : [ "localhost:443","10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16" ]

}

sudo systemctl daemon-reload
sudo systemctl restart docker
sudo systemctl show docker | grep Env
docker info #check Insecure Registries

- Using an environment file, prior to version 18

$ sudo vi /etc/default/docker

DOCKER_HOME='--graph=/tank/docker'

DOCKER_GROUP='--group=docker'

DOCKER_LOG_DRIVER='--log-driver=json-file'

DOCKER_STORAGE_DRIVER='--storage-driver=btrfs'

DOCKER_ICC='--icc=false'

DOCKER_IPMASQ='--ip-masq=true'

DOCKER_IPTABLES='--iptables=true'

DOCKER_IPFORWARD='--ip-forward=true'

DOCKER_ADDRESSES='--host=unix:///var/run/docker.sock'

DOCKER_INSECURE_REGISTRIES='--insecure-registry 10.0.0.0/8 --insecure-registry 172.16.0.0/12 --insecure-registry 192.168.0.0/16'

DOCKER_OPTS="${DOCKER_HOME} ${DOCKER_GROUP} ${DOCKER_LOG_DRIVER} ${DOCKER_STORAGE_DRIVER} ${DOCKER_ICC} ${DOCKER_IPMASQ} ${DOCKER_IPTABLES} ${DOCKER_IPFORWARD} ${DOCKER_ADDRESSES} ${DOCKER_INSECURE_REGISTRIES}"

$ sudo vi /etc/systemd/system/docker.service.d/docker.conf

[Service]

EnvironmentFile=-/etc/default/docker

ExecStart=/usr/bin/dockerd $DOCKER_HOME $DOCKER_GROUP $DOCKER_LOG_DRIVER $DOCKER_STORAGE_DRIVER $DOCKER_ICC $DOCKER_IPMASQ $DOCKER_IPTABLES $DOCKER_IPFORWARD $DOCKER_ADDRESSES $DOCKER_INSECURE_REGISTRIES

$ sudo vi /etc/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target docker.socket firewalld.service

Wants=network-online.target

Requires=docker.socket

[Service]

EnvironmentFile=-/etc/default/docker

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd -H fd://

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=1048576

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

Run docker without sudo

Adding a user to the docker group should be sufficient. However, with AppArmor, SELinux or a filesystem with ACLs enabled, additional permissions might be required to access the socket file.

$ ll /var/run/docker.sock
srw-rw---- 1 root docker 0 Sep 6 12:31 /var/run/docker.sock=
# ACL
$ sudo getfacl /var/run/docker.sock
getfacl: Removing leading '/' from absolute path names
# file: var/run/docker.sock
# owner: root
# group: docker
user::rw-
group::rw-
other::---
# Grant ACL to jenkins user
$ sudo setfacl -m user:jenkins:rw /var/run/docker.sock
$ sudo getfacl /var/run/docker.sock
getfacl: Removing leading '/' from absolute path names
# file: var/run/docker.sock
# owner: root
# group: docker
user::rw-
user:jenkins:rw-
group::rw-
mask::rw-
other::---
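
The group change itself is a single usermod call. The wrapper below is only a sketch: the function name is hypothetical and jenkins is used as an example account. Note that members of the docker group effectively have root-level access to the host through the daemon.

```shell
# allow_docker_without_sudo USER   (hypothetical helper name)
# Adds USER to the docker group; the membership applies from the next login.
allow_docker_without_sudo() {
  sudo usermod -aG docker "$1"
}

# Usage:
#   allow_docker_without_sudo jenkins
#   # log out and back in (or run: newgrp docker), then verify with:
#   docker ps
```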


References

- my-container-wont-stop-on-ctrl-c-and-other-minor-tragedies

- PID1 in container aka tinit

- understanding-volumes-docker

Docker Enterprise Edition

Components:

- Docker daemon (fka "Engine")

- Docker Trusted Registry (DTR)

- Docker Universal Control Plane (UCP)

Docker Swarm

Swarm - sizing

- Universal Control Plane (UCP)

This is for Enterprise Edition only

- ports managers, workers in/out

Hardware requirements:

- 8 GB RAM for managers or DTR (Docker Trusted Registry)

- 4 GB RAM for workers

- 3 GB free space

Performance Consideration (Timing)

Component                                Timeout(ms)   Configurable
Raft consensus between manager nodes     3000          no
Gossip protocol for overlay networking   5000          no
etcd                                     500           yes
RethinkDB                                10000         no
Stand-alone swarm                        90000         no

Compatibility Docker EE

- Docker Engine 17.06+

- DTR 2.3+

- UCP 2.2+

Swarm with single host manager

# Initialise Swarm

docker swarm init --advertise-addr 172.31.16.10 #you get a SWMTKN token

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-1i2v91qbj0pg88dxld15vpx3e74qm5clk7xkcrg6j3xknedqui-dh60f4j09itiqjfhqa196ufvo 172.31.16.10:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

# Join tokens

docker swarm join-token manager #display manager join-token, run on manager

docker swarm join-token worker #display worker join-token, run on manager

# Join worker, run on new-worker-node

# token layout: SWMTKN-1-<swarm cluster id>-<mgr/wkr role part>, followed by the mgr node address

docker swarm join --token SWMTKN-1-1i2v91qbj0pg88dxld15vpx3e74qm5clk7xkcrg6j3xknedqui-dh60f4j09itiqjfhqa196ufvo 172.31.16.10:2377

# Join another manager, run on new-manager-node

docker swarm join-token manager #run on primary manager if you wish to add another manager

# the output contains a token; the part between SWMTKN-1- and the last dash identifies the Swarm cluster and the part after it is the role id

# join the swarm (cluster); the token identifies the role in the cluster: manager or worker

docker swarm join --token SWMTKN-xxxx

docker swarm join --token SWMTKN-1-1i2v91qbj0pg88dxld15vpx3e74qm5clk7xkcrg6j3xknedqui-dh60f4j09itiqjfhqa196ufvo 172.31.16.10:2377

This node joined a swarm as a worker.
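
The two parts of a join token can be pulled apart with plain shell parameter expansion. This is a sketch that follows the description in these notes (a cluster part and a role part); the function name split_join_token is hypothetical, and Docker itself treats the token as an opaque string.

```shell
# split_join_token TOKEN   (hypothetical helper name)
# Splits a SWMTKN-1-<cluster part>-<role part> token into its two parts.
split_join_token() {
  rest="${1#SWMTKN-1-}"        # drop the fixed prefix
  echo "cluster=${rest%-*}"    # everything before the last dash
  echo "role=${rest##*-}"      # everything after the last dash
}

split_join_token SWMTKN-1-1i2v91qbj0pg88dxld15vpx3e74qm5clk7xkcrg6j3xknedqui-dh60f4j09itiqjfhqa196ufvo
# cluster=1i2v91qbj0pg88dxld15vpx3e74qm5clk7xkcrg6j3xknedqui
# role=dh60f4j09itiqjfhqa196ufvo
```

Comparing the output for a worker token and a manager token from the same swarm shows the same cluster part and a different role part.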

Check Swarm status

[cloud_user@ip-172-31-16-10 swarm-manager]$ docker node ls
ID                            HOSTNAME                          STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
641bfndn49b1i1dj17s8cirgw *   ip-172-31-16-10.mylabserver.com   Ready    Active         Leader           18.09.0
vlw7te728z7bvd7ulb3hn08am     ip-172-31-16-94.mylabserver.com   Ready    Active                          18.09.0
docker system info | grep -A 7 Swarm
Swarm: active
NodeID: 641bfndn49b1i1dj17s8cirgw
Is Manager: true
ClusterID: 4jqxdmfd0w5pc4if4fskgd5nq
Managers: 1
Nodes: 2
Default Address Pool: 10.0.0.0/8
SubnetSize: 24
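
For scripts, the same status can be read through docker info templates instead of grep. The function below is a sketch; the name swarm_role is hypothetical, while .Swarm.LocalNodeState and .Swarm.ControlAvailable are fields of the docker info output.

```shell
# swarm_role   (hypothetical helper name)
# Prints "manager", "worker" or "none" for the local node.
swarm_role() {
  state="$(docker info --format '{{.Swarm.LocalNodeState}}')" || return 1
  if [ "$state" != "active" ]; then
    echo none       # node is not part of an active swarm
  elif [ "$(docker info --format '{{.Swarm.ControlAvailable}}')" = "true" ]; then
    echo manager    # node can run docker node / docker service commands
  else
    echo worker
  fi
}

# Usage:
#   [ "$(swarm_role)" = manager ] && docker node ls
```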

- Troubleshooting

sudo systemctl disable firewalld && sudo systemctl stop firewalld # CentOS
# Add nodes to hosts file
sudo -i
printf "\n10.0.0.11 mgr01\n10.0.0.12 node01\n" >> /etc/hosts
exit

Swarm cluster

docker node update --availability drain [node] #drain services for Manager Only nodes
docker service update --force [service_name] #force re-balance services across cluster
docker swarm leave #node leaves a cluster

Locking / unlocking swarm cluster

Logs used by Swarm managers are encrypted on disk, but access to a node gives access to the keys that encrypt them. Locking protects the cluster further, as it requires an unlock key when restarting manager nodes.

docker swarm init --autolock #initialize a locked swarm
docker swarm update --autolock=true #update current swarm
# both will print an unlock key SWMKEY-1-xxx
docker swarm unlock #it'll ask for the unlock key
docker swarm update --autolock=false #disable key locking

If you have access to a manager, you can always get the unlock key using:

docker swarm unlock-key

Key management

docker swarm unlock-key --rotate #could be in a cron

Backup and restore swarm cluster

This process describes how to back up the whole cluster configuration so it can be restored on a new set of servers.

Create docker apps running across swarm

docker service create --name bkweb --publish 80:80 --replicas 2 httpd
$ docker service ls
ID             NAME    MODE         REPLICAS   IMAGE          PORTS
q9jki3n2hffm   bkweb   replicated   2/2        httpd:latest   *:80->80/tcp
$ docker service ps bkweb #note containers run on 2 different nodes
ID             NAME      IMAGE          NODE                       DESIRED STATE   CURRENT STATE
j964jm1lq3q5   bkweb.1   httpd:latest   server2c.mylabserver.com   Running         Running about a minute ago
jpjx3mk7hhm0   bkweb.2   httpd:latest   server1c.mylabserver.com   Running         Running about a minute ago

- Backup state files

sudo -i
cd /var/lib/docker/swarm
cat docker-state.json #contains info about managers, workers, certificates, etc..
cat state.json
sudo systemctl stop docker.service
# Backup swarm cluster, this file can be then used to recover whole swarm cluster on another set of servers
sudo tar -czvf swarm.tar.gz /var/lib/docker/swarm/
# the running docker containers should be brought up as they were before stopping the service
systemctl start docker
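
The backup steps can be wrapped in one function so the daemon is always started again, even when tar fails. This is a sketch under assumptions: the function name backup_swarm_state and its optional second argument are hypothetical, and it archives with -C so that paths inside the archive start with swarm/ rather than var/lib/docker/swarm/.

```shell
# backup_swarm_state ARCHIVE [DOCKER_ROOT]   (hypothetical helper name)
# Stops Docker, archives DOCKER_ROOT/swarm (default /var/lib/docker), restarts Docker.
# Run as root. Restore an archive made this way with:
#   tar -xzf ARCHIVE -C /var/lib/docker
backup_swarm_state() (
  archive="$1"
  root="${2:-/var/lib/docker}"
  systemctl stop docker || exit 1
  tar -czf "$archive" -C "$root" swarm
  rc=$?
  systemctl start docker   # bring the daemon back even if tar failed
  exit "$rc"
)

# Usage (as root):
#   backup_swarm_state /root/swarm.tar.gz
```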

Recover using swarm.tar.gz backup

# scp swarm.tar.gz to the recovery node, e.g. a node with freshly installed docker
sudo systemctl stop docker
sudo rm -rf /var/lib/docker/swarm
# Option1 untar directly; tar stored the paths as var/lib/docker/swarm/..., so extract relative to /
sudo tar -xzvf swarm.tar.gz -C /
# Option2 untar in the current directory, then copy recursively, -f overrides a file if it exists
tar -xzvf swarm.tar.gz
sudo cp -rf var/lib/docker/swarm /var/lib/docker/
sudo systemctl start docker
docker swarm init --force-new-cluster # produces exactly the same token
# you should join all required nodes to the new manager ip
# scale services down to 1, then scale up so they get distributed to other nodes

Run containers as services

A single Docker container has a number of limitations; running it as a service, where a cluster manager such as Swarm or Kubernetes manages networking, access, load balancing etc., is a way to scale with ease. The service uses e.g. mesh routing to handle access to containers.

Swarm nodes setup 1 manager and 2 workers

ID                            HOSTNAME                  STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
641bfndn49b1i1dj17s8cirgw *   swarm-mgr-1.example.com   Ready    Active         Leader           18.09.1
vlw7te728z7bvd7ulb3hn08am     swarm-wkr-1.example.com   Ready    Active                          18.09.1
r8h7xmevue9v2mgysmld59py2     swarm-wkr-2.example.com   Ready    Active                          18.09.0

Create and run a service

docker pull httpd
docker service create --name serviceweb --publish 80:80 httpd
# --publish|-p - expose a port on all containers in the running cluster
docker service ls
ID             NAME         MODE         REPLICAS   IMAGE          PORTS
vt0ftkifbd84   serviceweb   replicated   1/1        httpd:latest   *:80->80/tcp
docker service ps serviceweb #show nodes that a container is running on, here on mgr-1 node
ID             NAME           IMAGE          NODE                      DESIRED STATE   CURRENT STATE   ERROR   PORTS
e6rx3tzgp1e5   serviceweb.1   httpd:latest   swarm-mgr-1.example.com   Running         Running about

When running as a service, even if a container runs on a single node (replicas=1), the container can be accessed from any of the swarm nodes. This is because the published port is exposed on the mesh network that spans all swarm nodes, not only the node the container is running on.

[user@swarm-mgr-1 ~]$ curl -k http://swarm-mgr-1.example.com
<html><body><h1>It works!</h1></body></html>
[user@swarm-mgr-1 ~]$ curl -k http://swarm-wkr-1.example.com
<html><body><h1>It works!</h1></body></html>
[user@swarm-mgr-1 ~]$ curl -k http://swarm-wkr-2.example.com
<html><body><h1>It works!</h1></body></html>

A service update can change limits, volumes, env-variables and more...

docker service scale serviceweb=3 #or docker service update --replicas 3 serviceweb
# --detach=false shows visual progress in older versions, default in v18.06
serviceweb
overall progress: 3 out of 3 tasks
1/3: running [==================================================>]
2/3: running [==================================================>]
3/3: running [==================================================>]
verify: Service converged
# Limits (hard cap) and reservations (resources set aside by the scheduler); changing them starts new tasks (containers)
# a reservation should not exceed the matching limit
docker service update --limit-cpu=.75 --reserve-cpu=.5 --limit-memory=256m --reserve-memory=128m serviceweb

Templating service names

This allows control of e.g. the hostname in a cluster. It is useful in big clusters to identify from the hostname where a service runs.

docker service create --name web --hostname="{{.Node.ID}}-{{.Service.Name}}" httpd

docker service ps --no-trunc web

docker inspect --format="{{.Config.Hostname}}" web.1.ab10_serviceID_cd

aa_nodeID_bb-web

Node labels for task/service placement

docker node ls
ID                            HOSTNAME                  STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
641bfndn49b1i1dj17s8cirgw *   swarm-mgr-1.example.com   Ready    Active         Leader           18.09.1
vlw7te728z7bvd7ulb3hn08am     swarm-wkr-1.example.com   Ready    Active                          18.09.1
r8h7xmevue9v2mgysmld59py2     swarm-wkr-2.example.com   Ready    Active                          18.09.1
docker node inspect 641bfndn49b1i1dj17s8cirgw --pretty
ID: 641bfndn49b1i1dj17s8cirgw
Hostname: swarm-mgr-1.example.com
Joined at: 2019-01-08 12:16:56.277717163 +0000 utc
Status:
 State: Ready
 Availability: Active
 Address: 172.31.10.10
Manager Status:
 Address: 172.31.10.10:2377
 Raft Status: Reachable
 Leader: Yes
Platform:
 Operating System: linux
 Architecture: x86_64
Resources:
 CPUs: 2
 Memory: 3.699GiB
Plugins:
 Log: awslogs, fluentd, gcplogs, gelf, journald, json-file, local, logentries, splunk, syslog
 Network: bridge, host, macvlan, null, overlay
 Volume: local
Engine Version: 18.09.1
TLS Info:
 TrustRoot:
-----BEGIN CERTIFICATE-----
MIIBajCCARCgAwIBAgIUKXz3wtc8OA8uzTo1pO86ko+PB+EwCgYIKoZIzj0EAwIw
..
-----END CERTIFICATE-----
 Issuer Subject: MBMxETAPBgNVBAMTCHN3YX.....h
 Issuer Public Key: MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAEy......==

Apply label to a node

docker node update --label-add node-env=testnode r8h7xmevue9v2mgysmld59py2
docker node inspect r8h7xmevue9v2mgysmld59py2 --pretty | grep -B1 -A2 Labels
ID: r8h7xmevue9v2mgysmld59py2
Labels:
 - node-env=testnode
Hostname: swarm-wkr-2.example.com

How to use it: run a service with the --constraint option, which pins the service's tasks to nodes meeting the given criteria, in our case nodes where node.labels.node-env == testnode. Note that all replicas run on the same node instead of being distributed across the cluster.

# other attributes usable in a constraint: node.role, node.id, node.hostname
docker service create --name constraints -p 80:80 --constraint 'node.labels.node-env == testnode' --replicas 3 httpd
zrk15vfdaitc1rvw9wqh2s0ot
overall progress: 3 out of 3 tasks
1/3: running [==================================================>]
2/3: running [==================================================>]
3/3: running [==================================================>]
verify: Service converged
[cloud_user@mrpiotrpawlak1c ~]$ docker service ls
ID             NAME          MODE         REPLICAS   IMAGE          PORTS
zrk15vfdaitc   constraints   replicated   3/3        httpd:latest   *:80->80/tcp
[user@swarm-wkr-2 ~]$ docker service ps constraints
ID             NAME            IMAGE          NODE                      DESIRED STATE   CURRENT STATE            ERROR   PORTS
y5z4mt99uzpo   constraints.1   httpd:latest   swarm-wkr-2.example.com   Running         Running 41 seconds ago
zqbn4ips969q   constraints.2   httpd:latest   swarm-wkr-2.example.com   Running         Running 41 seconds ago
vnb10jcs2915   constraints.3   httpd:latest   swarm-wkr-2.example.com   Running         Running 41 seconds ago

Scaling services

These commands should be issued on a manager node

docker pull nginx
docker service create --name web --publish 80:80 httpd
docker service ps web #there is only 1 replica
docker service update --replicas 3 web #update to 3 replicas
docker service create --name nginx --publish 5901:80 nginx
elinks http://swarm-mgr-1.com:5901 #nginx website will be presented
# scale is equivalent to update --replicas command for a single or multiple services
docker service scale web=3 nginx=3
docker service ls

Replicated services vs global services

- Global mode - runs exactly one copy of a service on each swarm node; when another node joins, the service converges there as well. In global mode you cannot use the update --replicas or scale commands. It is not possible to change the mode of an existing service.

- Replicated mode - allows for greater control and flexibility over the number of running service tasks.

# creates a single service running across whole cluster in replicated mode
docker service create --name web --publish 80:80 httpd
# run in global mode (service names must be unique, so a different name is used here)
docker service create --name web-global --publish 5901:80 --mode global httpd
docker service ls #note distinct mode names: global and replicated

Docker compose and deploy to Swarm

Install

sudo yum install epel-release
sudo yum install python-pip
sudo pip install --upgrade pip
# install docker CE or EE to avoid Python libs conflicts
sudo pip install docker-compose
# Troubleshooting
## Err: Cannot uninstall 'requests'. It is a distutils installed project...
pip install --ignore-installed requests

Dockerfile

cat >Dockerfile <<EOF
FROM centos:latest
RUN yum install -y httpd
RUN echo "Website1" >> /var/www/html/index.html
EXPOSE 80
ENTRYPOINT apachectl -DFOREGROUND
EOF

Docker compose file

cat >docker-compose.yml <<EOF
version: '3'
services:
  apiweb1:
    image: httpd_1:v1
    build: .
    ports:
      - "81:80"
  apiweb2:
    image: httpd_1:v1
    ports:
      - "82:80"
  load-balancer:
    image: nginx:latest
    ports:
      - "80:80"
EOF

Run docker compose, on the current node only

docker-compose up -d
WARNING: The Docker Engine you're using is running in swarm mode.
Compose does not use swarm mode to deploy services to multiple nodes in a swarm. All containers will be scheduled on the current node.
To deploy your application across the swarm, use `docker stack deploy`.
Creating compose_apiweb2_1 ... done
Creating compose_apiweb1_1 ... done
Creating compose_load-balancer_1 ... done
docker ps
CONTAINER ID   IMAGE          COMMAND                  CREATED     STATUS     PORTS                NAMES
14f8b6b10c2d   nginx:latest   "nginx -g 'daemon of…"   2 minutes   Up 2 min   0.0.0.0:80->80/tcp   compose_load-balancer_1
e9b5b37fe4e5   httpd_1:v1     "/bin/sh -c 'apachec…"   2 minutes   Up 2 min   0.0.0.0:81->80/tcp   compose_apiweb1_1
28ee22a8eae0   httpd_1:v1     "/bin/sh -c 'apachec…"   2 minutes   Up 2 min   0.0.0.0:82->80/tcp   compose_apiweb2_1
# Verify
curl http://localhost:81
curl http://localhost:82
curl http://localhost:80 #nginx
# Prep before deploying docker-compose to Swarm. Images also need to be built beforehand.
# Docker stack does not support building images
docker-compose down --volumes #--volumes also removes named volumes declared in the compose file and anonymous volumes
Stopping compose_load-balancer_1 ... done
Stopping compose_apiweb1_1 ... done
Stopping compose_apiweb2_1 ... done
Removing compose_load-balancer_1 ... done
Removing compose_apiweb1_1 ... done
Removing compose_apiweb2_1 ... done
Removing network compose_default

- Deploy compose to Swarm

docker stack deploy --compose-file docker-compose.yml customcompose-stack #customcompose-stack is a prefix for service name
Ignoring unsupported options: build
Creating network customcompose-stack_default
Creating service customcompose-stack_apiweb1
Creating service customcompose-stack_apiweb2
Creating service customcompose-stack_load-balancer
docker stack services customcompose-stack #or docker service ls
ID             NAME                                MODE         REPLICAS   IMAGE          PORTS
k7wwkncov49p   customcompose-stack_apiweb1         replicated   0/1        httpd_1:v1     *:81->80/tcp
nl0j5folpmha   customcompose-stack_apiweb2         replicated   0/1        httpd_1:v1     *:82->80/tcp
x6p14gmpjyra   customcompose-stack_load-balancer   replicated   1/1        nginx:latest   *:80->80/tcp
docker stack rm customcompose-stack #remove stack

Selecting a Storage Driver

Go to the Docker version matrix to verify which drivers are supported on your platform. Changing the storage driver is destructive: existing images and containers become inaccessible. Therefore you need to export/back up first, then re-import after the storage driver change.

- CentOS

Device mapper is officially supported on CentOS. It can run in loop-lvm mode, where loopback devices backed by files on disk simulate block storage, or in direct-lvm mode, where a real block storage device is handed over for Docker to manage.

docker info --format '{{json .Driver}}'

docker info -f '{{json .}}' | jq .Driver

docker info | grep Storage

sudo touch /etc/docker/daemon.json

sudo vi /etc/docker/daemon.json #additional options are available

{

"storage-driver":"devicemapper"

}

Preserving any current images requires export/backup and re-import after the storage driver change.

docker images
sudo systemctl restart docker
ls -l /var/lib/docker/devicemapper #new location for storing images

Note: a new directory devicemapper has been created in /var/lib/docker to store images from now on.

- Update 2019 - Docker Engine 18.09.1

WARNING: the devicemapper storage-driver is deprecated, and will be removed in a future release.

WARNING: devicemapper: usage of loopback devices is strongly discouraged for production use.

Use `--storage-opt dm.thinpooldev` to specify a custom block storage device.

Selecting a logging driver

The list of available drivers can be seen on the Docker documentation page. The most popular are:

- none - No logs are available for the container and docker logs does not return any output.

- json-file - (default) The logs are formatted as JSON.

- syslog - Writes logging messages to the syslog facility. The syslog daemon must be running on the host machine.

- journald - Writes log messages to journald. The journald daemon must be running on the host machine.

- fluentd - Writes log messages to fluentd (forward input). The fluentd daemon must be running on the host machine.

- awslogs - Writes log messages to Amazon CloudWatch Logs.

- splunk - Writes log messages to splunk using the HTTP Event Collector.

- etwlogs - (Windows) Writes log messages as Event Tracing for Windows (ETW) events

docker info | grep -i logging
docker container run -d --name <webjson> --log-driver json-file httpd #per docker container setup
docker logs <webjson>
docker container run -d --name <web> httpd #start new container
docker logs -f <web> #display standard-out logs
docker service logs -f <web> #for swarm all container replicas logs

Enable syslog logging driver

sudo vi /etc/rsyslog.conf #uncomment below
$ModLoad imudp
$UDPServerRun 514
sudo systemctl start rsyslog

Change the logging driver in /etc/docker/daemon.json. After the change, container standard output won't be available through docker logs.

{

"log-driver": "syslog",

"log-opts": {

"syslog-address": "udp://172.31.10.1"

}

}

sudo systemctl restart docker

docker info | grep -i logging

tail -f /var/log/messages #this will show all logging eg. access logs for httpd server

Docker daemon logs

System level logs

# CentOS

grep -i docker /var/log/messages

# Ubuntu

sudo journalctl -u docker.service --no-hostname

sudo journalctl -u docker -o json | jq -cMr '.MESSAGE'

sudo journalctl -u docker -o json | jq -cMr 'select(has("CONTAINER_ID") | not) | .MESSAGE'

grep -i docker /var/log/syslog

- Docker container or service logs

docker container logs [OPTIONS] containerID #single container logs
docker service logs [OPTIONS] service|task #aggregate logs across all cluster deployed container replicas

Container life-cycle policies - eg. autostart

docker container run -d --name web --restart <no(default)|on-failure|always|unless-stopped> httpd # --restart - restart on crash or exit 1 or service or system reboot

Definitions:

- always - always restarts the container when it exits; if it was stopped manually, it is started again when the docker daemon restarts

- unless-stopped - like always, unless the container was stopped manually by docker container stop

- on-failure - restart if the container exits with a non-zero exit code

Universal Control Plane - UCP

It's an application that shows all operational details of a Swarm cluster when using the Docker EE edition. A 30-day trial is available.

- Communication between Docker Engine, UCP and DTR (Docker Trusted Registry)

- over TCP/UDP - depends on a port, and whether a response is required, or if a message is a notification

- IPC - interprocess communication (intra-host), services on the same node

- API - over TCP, uses the API directly to query or update components in a cluster

Install/uninstall UCP image: docker/ucp

OS support:

- UCP 2.2.11 is supported running on RHEL 7.5 and Ubuntu 18.04

For lab purposes we can use e.g. ucp.example.com; the domain example.com is included in the UCP and DTR wildcard self-signed certificate.

Install on a manager node

export UCP_USERNAME=ucp-admin
export UCP_PASSWORD=ucp-admin
export UCP_MGR_NODE_IP=172.31.101.248
docker container run --rm -it --name ucp \
-v /var/run/docker.sock:/var/run/docker.sock docker/ucp:2.2.15 \
install --host-address=$UCP_MGR_NODE_IP --interactive --debug
# --rm :- because this container will be only a transitional container
# -it :- because we want an interactive installation
# -v :- link the container with a file on a host
# --san :- add subject alternative names to certificates (e.g. --san www1.acme.com --san www2.acme.com)
# --host-address :- IP address or network interface name to advertise to other nodes
# docker/ucp:2.2.15 :- image version
# --dns :- custom DNS servers for the UCP containers
# --dns-search :- custom DNS search domains for the UCP containers
# --admin-username "$UCP_USERNAME" --admin-password "$UCP_PASSWORD" #seems these are not supported, although are in a guide

If not provided you will be asked for:

- Admin password during the process

- You may enter additional aliases (SANs) now or press enter to proceed with the above list:

- Additional aliases: ucp ucp.example.com

DEBU[0062] User entered: ucp ucp.ciscolinux.co.uk
DEBU[0062] Hostnames: [host1c.mylabserver.com 127.0.0.1 172.17.0.1 172.31.101.248 ucp ucp.ciscolinux.co.uk]

You may want to add DNS entries in /etc/hosts for

- ucp or ucp.example.com pointing to manager public ip

- dtr or dtr.example.com pointing a worker node public IPs.

- Verify

- connect to https://ucp.example.com:443.

docker ps should show a number of containers running now; they need to see each other, therefore we used hosts entries to allow this.

- Uninstall

docker container run --rm -it --name ucp \
-v /var/run/docker.sock:/var/run/docker.sock \
docker/ucp uninstall-ucp --interactive
INFO[0000] Your engine version 18.09.1, build 4c52b90 (4.15.0-1031-aws) is compatible with UCP 3.1.2 (b822777)
INFO[0000] We're about to uninstall from this swarm cluster. UCP ID: t0ltwwcw5tdbtjo2fxlzmj8p4
Do you want to proceed with the uninstall? (y/n): y
INFO[0000] Uninstalling UCP on each node...
INFO[0031] UCP has been removed from this cluster successfully.
INFO[0033] Removing UCP Services

Install DTR Docker Trusted Registry image: docker/dtr

On single-core systems it's recommended to wait 5 minutes after the UCP deployment to release more CPU cycles. You can see the load peaking at 1.0 using the w command.

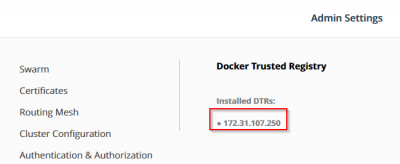

Connect to UCP service https://ucp.example.com, login with creds created. Uload a license.lic file. Go to Admin Settings > Docker Trusted Registry > Pick one of UCP Nodes [worker] You may disable TLS verification on self-signed certificate

Run a given command on a node you want to install DTR. UCP_NODE in lab environment can cause a few issues. For a convinience to avoid avoid port conflict :80,:443 use different node that UCP is instaled. Eg. dns user2c.mylabserver.com or private IP.

export UCP_NODE=wkr-172.31.107.250 #for convenience, to avoid port conflict :80,:443 use worker IP
export UCP_USERNAME=ucp-admin
export UCP_PASSWORD=ucp-admin
export UCP_URL=https://ucp.example.com:443 #avoid using example.com to avoid SSL name validation issues
docker pull docker/dtr
# Optional. Download UCP public certificate
curl -k https://ucp.ciscolinux.co.uk/ca > ucp-ca.pem
docker container run -it --rm docker/dtr install \
  --ucp-node $UCP_NODE --ucp-url $UCP_URL --debug \
  --ucp-username $UCP_USERNAME --ucp-password $UCP_PASSWORD \
  --ucp-insecure-tls # --ucp-ca "$(cat ucp-ca.pem)"
# --ucp-node :- hostname/IP of the UCP node (any node managed by UCP) to deploy DTR. Random by default
# --ucp-url :- the UCP URL including domain and port.

If not specified, it will ask for:

- ucp-password: you know it from UCP installation step

Significant installation logs

..
INFO[0006] Only one available UCP node detected. Picking UCP node 'user2c.labserver.com'
..
INFO[0006] verifying [80 443] ports on user2c.labserver.com
..
INFO[0000] Using default overlay subnet: 10.1.0.0/24
INFO[0000] Creating network: dtr-ol
INFO[0000] Connecting to network: dtr-ol
..
INFO[0008] Generated TLS certificate. dnsNames="[*.com *.*.com example.com *.dtr *.*.dtr]" domains="[*.com *.*.com 172.17.0.1 example.com *.dtr *.*.dtr]" ipAddresses="[172.17.0.1]"
..
INFO[0073] You can use flag '--existing-replica-id 10e168476b49' when joining other replicas to your Docker Trusted Registry Cluster

- Verify by logging in to https://dtr.example.com

The DTR installation process above has also installed a number of containers named ucp-agent on the manager/worker nodes and a number of containers on the dedicated DTR node.

You can verify DTR by logging in to https://dtr.example.com with the UCP credentials ucp-admin and the same password, provided you haven't changed any of the commands above. You should then be presented with a UI that looks similar to registry.docker.io. Any images stored there will be trusted from the perspective of our organisation.

- Verify by going to UCP https://ucp.example.com, admin settings > Docker Trusted Registry

Backup UCP and DTR configuration

This is built into UCP. The process starts a special container that exports the UCP configuration to a tar file. It can be run as a cron job.

docker container run --log-driver none --rm -i --name ucp -v /var/run/docker.sock:/var/run/docker.sock docker/ucp backup > backup.tar
# --rm the container is transient and is removed on exit
# -i run interactively
# The first run fails with an error showing --id m79xxxxxxxxx and asks you to re-run the command with this ID.
# Restore command
docker container run --log-driver none --rm -i --name ucp -v /var/run/docker.sock:/var/run/docker.sock docker/ucp restore --id m79xxx < backup.tar
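Since the backup can run as a cron job, a small wrapper helps keep dated archives. The sketch below is an assumption-laden example, not an official script: BACKUP_DIR, the file naming scheme and the "run" argument are all made up for illustration, and UCP_ID must be replaced with the ID printed by the first failed backup run.

```shell
#!/bin/sh
# Hypothetical cron wrapper around the UCP backup command.
# BACKUP_DIR and UCP_ID are assumptions; adjust them to your environment.
BACKUP_DIR="${BACKUP_DIR:-/var/backups/ucp}"
UCP_ID="${UCP_ID:-m79xxx}"   # placeholder, use the ID from the first-run error

# Build a dated target file name such as <dir>/ucp-backup-YYYYMMDD.tar
backup_file() {
    printf '%s/ucp-backup-%s.tar\n' "$BACKUP_DIR" "$(date +%Y%m%d)"
}

# Run the backup container and stream the archive into the dated file.
run_backup() {
    mkdir -p "$BACKUP_DIR" || return 1
    docker container run --log-driver none --rm -i --name ucp \
        -v /var/run/docker.sock:/var/run/docker.sock \
        docker/ucp backup --id "$UCP_ID" > "$(backup_file)"
}

# Only touch Docker when called with "run"; otherwise just print the target.
if [ "${1:-}" = "run" ]; then
    run_backup
else
    backup_file
fi
```

Saved as, say, /usr/local/bin/ucp-backup.sh (hypothetical path), a crontab entry like `0 2 * * * /usr/local/bin/ucp-backup.sh run` would take one backup per night.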

- DTR

During a backup DTR will not be available.

docker container run --log-driver none --rm -i docker/dtr backup --ucp-insecure-tls --ucp-url <ucp_server_dns:443> --ucp-username admin --ucp-password <password> > dtr-backup.tar
# -i keeps STDIN attached, needed for the prompt below and for feeding the tar file on restore
# You will be asked:
# Choose a replica to back up from: enter
# Restore command
docker container run --log-driver none --rm -i docker/dtr restore --ucp-insecure-tls --ucp-url <ucp_server_dns:443> --ucp-username admin --ucp-password <password> < dtr-backup.tar

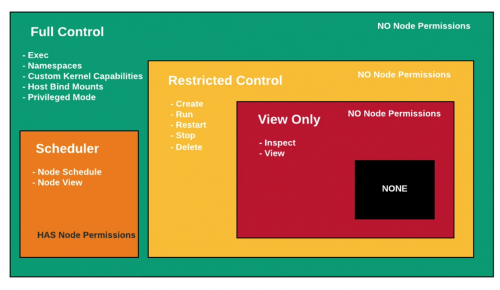

UCP RBAC

The main concept is:

- administrators can make changes to the UCP swarm/kubernetes, User Management, Organisation, Team and Roles

- users - range of access from Full Control of resources to no access

Note that only the Scheduler role grants access to view nodes, plus the ability to schedule workloads, of course.

UCP Client bundle

A UCP client bundle lets you export a certificate and environment settings that point the docker client to UCP, so that you can use the cluster and create images and services.

- Download bundle

- Create a user with the privileges that you wish the docker client to run with

- Download a client bundle from User Profile > Client bundle > + New Client Bundle

- File

ucp-bundle-[username].zip will get downloaded

unzip ucp-bundle-bob.zip
Archive: ucp-bundle-bob.zip
extracting: ca.pem
extracting: cert.pem
extracting: key.pem
extracting: cert.pub
extracting: env.sh
extracting: env.ps1
extracting: env.cmd
cat env.sh
export COMPOSE_TLS_VERSION=TLSv1_2
export DOCKER_TLS_VERIFY=1
export DOCKER_CERT_PATH="$PWD"
export DOCKER_HOST=tcp://3.16.143.49:443
#
# Bundle for user bob
# UCP Instance ID t0ltwwcw5tdbtjo2fxlzmj8p4
#
# This admin cert will also work directly against Swarm and the individual
# engine proxies for troubleshooting. After sourcing this env file, use
# "docker info" to discover the location of Swarm managers and engines.
# and use the --host option to override $DOCKER_HOST
#
# Run this command from within this directory to configure your shell:
# eval $(<env.sh)
eval $(<env.sh) # apply ucp-bundle
docker images # to list UCP managed images

- In my lab I had to update DOCKER_HOST from public IP to private IP

Err: error during connect: Get https://3.16.143.49:443/v1.39/images/json: x509: certificate is valid for 127.0.0.1, 172.31.101.248, 172.17.0.1, not 3.16.143.49

export DOCKER_HOST=tcp://172.31.101.248:443
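The x509 error lists the names the certificate is valid for, so DOCKER_HOST has to use one of them. A rough sketch of that check follows; docker_host_name and host_in_sans are hypothetical helpers, the SAN list is pasted by hand from the error message, and the parsing assumes a plain tcp://host:port value (no IPv6).

```shell
#!/bin/sh
# Hypothetical helpers, not part of the client bundle.

# Extract the host part from a tcp://host:port value.
docker_host_name() {
    h="${1#tcp://}"            # drop the scheme
    printf '%s\n' "${h%%:*}"   # drop the port
}

# Succeed if the host appears in a comma-separated SAN list.
host_in_sans() {
    case ", $2," in
        *", $1,"*) return 0 ;;
        *)         return 1 ;;
    esac
}

SANS="127.0.0.1, 172.31.101.248, 172.17.0.1"   # copied from the x509 error
for candidate in tcp://3.16.143.49:443 tcp://172.31.101.248:443; do
    name=$(docker_host_name "$candidate")
    if host_in_sans "$name" "$SANS"; then
        echo "$candidate: covered by the certificate"
    else
        echo "$candidate: NOT covered by the certificate"
    fi
done
```

In the lab case above only the private IP is covered, which matches the fix of switching DOCKER_HOST to 172.31.101.248.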

- Verify if you have permissions to create a service

docker service create --name test111 httpd
Error response from daemon: access denied: no access to Service Create, on collection swarm

- Add Grants to the user

- Go to User Management > Grants > Create Grant

- Based on Roles, select Full Control

- Select Subjects, All Users, select the user

- Click Create

- Re-run the service create command; it should succeed now. The service can now also be managed from the UCP console.

Docker Secure Registry | image: registry

Docker provides a special image that can be used to host Docker images for internal or external use; the steps below therefore include securing access with an SSL certificate.

Create certificate

mkdir ~/{auth,certs}

# create self-signed certificate for Docker Repository

mkdir -p ~/certs; cd "$_" #cd to the last argument of the previous command

openssl req -newkey rsa:4096 -nodes -sha256 -keyout repo-key.pem -x509 -days 365 -out repo-cer.pem -subj /CN=myrepo.com

# trusted-certs docker client directory, docker client looks for trusted certs when connecting to a remote repo

sudo mkdir -p /etc/docker/certs.d/myrepo.com:5000 #port 5000 is a default port

sudo cp repo-cer.pem /etc/docker/certs.d/myrepo.com:5000/ca.crt

ca.crt is the default (required) file name of the root CA certificate that the docker client (docker login API) trusts when connecting to a remote repository. In our case we trust any certificate signed by CA=ca.crt when connecting to myrepo.com:5000, because the same self-signed certificate gets installed in the registry:2 container via the -v /certs mount option.

Optionally, for development purposes, add the domain myrepo.com to the hosts file, bound to the local interface IP address.

echo "172.16.10.10 myrepo.com" | sudo tee -a /etc/hosts

Optional: add an insecure-registry entry

sudo vi /etc/docker/daemon.json

{

"insecure-registries" : [ "myrepo.com:5000"]

}

Pull special Docker Registry image

mkdir -p ~/auth #authentication directory, used when deploying local repository
docker pull registry:2
docker run --entrypoint htpasswd registry:2 -Bbn reg-admin Passw0rd123 > ~/auth/htpasswd
# -Bbn -parameters: -B bcrypt hash, -b password given on the command line, -n print the result to STDOUT
# reg-admin -user
# Passw0rd123 -password string for basic htpasswd authentication method, the hashed password will be displayed to STDOUT
# if your registry:2 image does not ship htpasswd, the httpd:2 image provides it
$ cat ~/auth/htpasswd
reg-admin:$2y$05$DnTWDHp7uTwaDrw4sXpUbuDDIlLwu3c8MEMsHPjK/AcUMdK/TD6fO
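The registry's htpasswd authentication expects bcrypt hashes, so it is worth checking the generated file before starting the container. A small sketch, where has_bcrypt_entry is a hypothetical helper and the sample file simply repeats the line shown above:

```shell
#!/bin/sh
# Hypothetical check: does an htpasswd file hold a bcrypt entry for a user?
# $1 = file, $2 = user; bcrypt hashes start with $2y$ (or $2a$ / $2b$).
has_bcrypt_entry() {
    grep -Eq "^$2:"'\$2[aby]\$[0-9]{2}\$' "$1"
}

# Demo against a scratch copy of the htpasswd line from these notes.
sample=$(mktemp)
printf '%s\n' 'reg-admin:$2y$05$DnTWDHp7uTwaDrw4sXpUbuDDIlLwu3c8MEMsHPjK/AcUMdK/TD6fO' > "$sample"

if has_bcrypt_entry "$sample" reg-admin; then
    echo "reg-admin: bcrypt entry found"
else
    echo "reg-admin: missing or not bcrypt"
fi
```

For the real file, call it as has_bcrypt_entry ~/auth/htpasswd reg-admin.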

Run Registry container

cd ~

docker run -d -p 5000:5000 --name myrepo \

-v $(pwd)/certs:/certs \

-e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/repo-cer.pem \

-e REGISTRY_HTTP_TLS_KEY=/certs/repo-key.pem \

-v $(pwd)/auth:/auth \

-e REGISTRY_AUTH=htpasswd \

-e REGISTRY_AUTH_HTPASSWD_REALM="Registry Realm" \

-e REGISTRY_AUTH_HTPASSWD_PATH=/auth/htpasswd \

registry:2

# -v -indicate where our certificates will be mounted within a container

# -e REGISTRY_HTTP_TLS_CERTIFICATE -path to cert inside the container

# -v $(pwd)/auth:/auth -mounting authentication directory where a file with password is

# -e REGISTRY_AUTH htpasswd -setting up to use 'htpasswd' authentication method

# registry:2 -image name, positional param

Verify

docker pull alpine

docker tag alpine myrepo.com:5000/aa-alpine #create a tagged image (copy) on a local filesystem,

# it must be prefixed with the private repo name '/' image name you want to upload as

docker logout # if logged in to another repository

docker login myrepo.com:5000 #login to a repository that runs as a container, stays logged in until logout

docker login myrepo.com:5000 --username=reg-admin --password Passw0rd123

docker push myrepo.com:5000/aa-alpine

docker image rm alpine myrepo.com:5000/aa-alpine #delete image stored locally

docker pull myrepo.com:5000/aa-alpine #pull image from a container repository
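The naming rule used above (registry host:port, then '/', then the image name) can be captured in a helper. This is only a sketch: private_ref and print_push_plan are hypothetical names, and the plan is printed rather than executed so it can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper: build "<registry>/<name>:<tag>" for a private registry.
private_ref() {
    # $1 = registry host:port, $2 = image name, $3 = tag (latest if omitted)
    printf '%s/%s:%s\n' "$1" "$2" "${3:-latest}"
}

# Print the tag/push/pull sequence for one image without running it.
print_push_plan() {
    # $1 = local image, $2 = registry host:port, $3 = name in the registry
    ref=$(private_ref "$2" "$3")
    echo "docker tag $1 $ref"
    echo "docker push $ref"
    echo "docker pull $ref"
}

print_push_plan alpine myrepo.com:5000 aa-alpine
```

Piping the output to sh would execute the plan once you are logged in to the registry.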

# List private-repository images

curl --insecure -u "reg-admin:password" https://myrepo.com:5000/v2/_catalog

{"repositories":["aa-alpine"]}

wget --no-check-certificate --http-user=reg-admin --http-password=password https://myrepo.com:5000/v2/_catalog

cat _catalog

{"repositories":["my-alpine","myalpine","new-aa-busybox"]}

# List tags

curl --insecure -u "reg-admin:password" https://myrepo.com:5000/v2/aa-alpine/tags/list

{"name":"myalpine","tags":["latest"]}

curl --insecure -u "reg-admin:password" https://myrepo.com:5000/v2/aa-alpine/manifests/latest #entire image metadata

Note. There is no easy way to delete images from the registry:2 container.
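The catalog response is flat JSON, so for quick checks it can be turned into one repository name per line with standard tools. A minimal sketch with a hypothetical list_repos helper; plain-text parsing like this is only reasonable for this simple response shape, and a real JSON parser is the safer choice when one is installed.

```shell
#!/bin/sh
# Hypothetical helper: turn a /v2/_catalog response into one name per line.
# Reads the JSON on STDIN; only suitable for the flat {"repositories":[...]} shape.
list_repos() {
    sed -e 's/.*\[//' -e 's/\].*//' |   # keep what is between [ and ]
        tr -d '"' |                     # drop the quotes
        tr ',' '\n' |                   # one name per line
        sed '/^$/d'                     # no output for an empty list
}

# Demo with the sample response from these notes.
printf '%s\n' '{"repositories":["my-alpine","myalpine","new-aa-busybox"]}' | list_repos
```

Against the live registry it would be used as: curl --insecure -u "reg-admin:password" https://myrepo.com:5000/v2/_catalog | list_repos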

Docker push

- Login to a docker repository

docker info | grep -B1 Registry #check if you are logged in to docker.hub repository
WARNING: No swap limit support
Registry: https://index.docker.io/v1/
docker login
docker info | grep -B1 Registry
Username: pio2pio
Registry: https://index.docker.io/v1/

- Tag and push an image

# docker tag local-image:tagname new-repo:tagname #create a local copy of an image
# docker push new-repo:tagname
docker pull busybox
docker tag busybox:latest pio2pio/testrepo
docker push pio2pio/testrepo
The push refers to repository [docker.io/pio2pio/testrepo]
683f499823be: Mounted from library/busybox
latest: digest: sha256:bbb143159af9eabdf45511fd5aab4fd2475d4c0e7fd4a5e154b98e838488e510 size: 527

- Docker Content Trust

By default all images are implicitly trusted by your Docker daemon, but you can configure your systems to trust only image tags that have been signed.

export DOCKER_CONTENT_TRUST=1 #enable system to sign an image during push process
docker build -t myrepo.com:5000/untrusted.latest .
docker push myrepo.com:5000/untrusted.latest
...
No tag specified, skipping trust metadata push
# 2nd attempt, with a tag specified now
docker push myrepo.com:5000/untrusted.latest:latest
Error: error contacting notary server: x509: certificate signed by unknown authority
docker pull myrepo.com:5000/untrusted.latest:latest
Error: error contacting notary server: x509: certificate signed by unknown authority

- Errors explained

Err: No tag specified, skipping trust metadata push

- Explanation: an image is signed per tag. Therefore, if you omit the tag, the image does not get signed and the trust metadata push is skipped.

Err: error contacting notary server: x509: certificate signed by unknown authority

- when uploading, the image gets uploaded but it is not trusted because it is signed with a self-signed CA

- when downloading with DOCKER_CONTENT_TRUST=1 enabled, the image cannot be downloaded because it is untrusted
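The first error is easy to trigger because myrepo.com:5000/untrusted.latest contains a colon and a dot, yet carries no tag. Only a colon after the last '/' marks a tag. A quick sketch of that rule, using a hypothetical has_tag helper that ignores digest references (name@sha256:...):

```shell
#!/bin/sh
# Hypothetical helper: does an image reference carry an explicit tag?
# Only a colon after the last "/" counts; "myrepo.com:5000" is host:port.
# Digest references (name@sha256:...) are not handled by this sketch.
has_tag() {
    last="${1##*/}"   # path component after the final slash
    case "$last" in
        *:*) return 0 ;;
        *)   return 1 ;;
    esac
}

for ref in myrepo.com:5000/untrusted.latest myrepo.com:5000/untrusted.latest:latest; do
    if has_tag "$ref"; then
        echo "$ref: tagged, trust metadata can be pushed"
    else
        echo "$ref: no tag, trust metadata push would be skipped"
    fi
done
```

A check like this in a push script catches the missing tag before Docker silently skips the signing step.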

Theory

What is a docker

Docker is a container runtime platform, whereas Swarm is a container orchestration platform.

Security

Mutually Authenticated TLS