Linux Cluster with HAProxy and Keepalived

HAProxy guide

The goal of the team behind HAProxy is to provide a “free, very fast and reliable solution” for load-balancing TCP and HTTP-based applications. Because of this, HAProxy is considered by many to be the de facto standard for software-based load balancing, and it is used by sites such as GitHub, Reddit, Twitter and Tumblr, to name a few.

It has been designed to run on Linux, Solaris, FreeBSD, OpenBSD and AIX. While it runs on most x86-64 hardware, even with limited resources, it performs best on enterprise-grade hardware such as 10+ Gbit NICs and Xeon-class CPUs or similar.

Terminology

Below are a few of the key terms and concepts you should understand when working with HAProxy. Most of them apply to load balancers in general, not only to HAProxy.

Frontend

A frontend, within the context of HAProxy, dictates where and how incoming traffic is routed to the machines behind HAProxy. Frontends allow you to set up rules (ACLs) that “watch” for specific URL patterns in traffic passing through the load balancer and intelligently route a user’s traffic as needed.

Furthermore, the frontend is where you configure the IP addresses and ports on which HAProxy listens for traffic, as well as HTTPS on those ports.

Example frontend:

    frontend http-in
        bind *:80
        default_backend appX-backend

This frontend example opens only port 80 for incoming requests and sends all traffic to the default backend, called appX-backend.

Backend

As the name suggests, a backend within the context of HAProxy is a group of resources that is home to your data or applications. These backend resources are where traffic will get routed by the rules you have configured in your frontends.

Backend resource groups can consist of a single server or multiple servers, but in the context of load balancers it is assumed that a minimum of two are being used. The more backend resources you add to a group, the lower the load on each individual resource and the more users you can serve at any given time.

Lastly, just as with the frontend, you will also configure the IP and ports that your backend resources are listening for requests on.

Example backend:

    backend appX-backend
        balance roundrobin
        server appX_01 192.168.2.2:8080 check
        server appX_02 192.168.2.3:8080 check

In this example, the backend is called appX-backend and it contains two servers that are being accessed using roundrobin which is explained later in this guide.

ACL (Access Control List)

In the context of HAProxy, ACLs are the backbone of more in-depth and complex configurations that contain multiple frontends as well as multiple backends and need very precise routing.

With ACLs, you can parse requests and take a multitude of different actions, such as rewriting and redirecting requests as needed. Because HAProxy can load balance at Layer 4 or Layer 7 of the OSI model, you can effectively configure it to handle a number of different uses at the same time with multiple frontends and backends.

Example ACL:

    frontend http-in
        bind *:80
        acl url_appX path_beg -i /appX/
        use_backend appX-backend if url_appX
        default_backend appZ-backend

This example is rather simple: it evaluates the path of the incoming request, which is the part of the URL that starts at the first / after the host name, such as /appX/ in http://example.com/appX/. If the path begins with /appX/ (compared case-insensitively because of -i), the request is sent to the backend called appX-backend; all other requests default to appZ-backend. Because the pattern ends with a slash, a request for http://example.com/appX without the trailing slash does not match and goes to appZ-backend.
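
The routing decision made by this ACL can be sketched in a few lines of Python. This is only an illustration of the prefix-matching idea, not HAProxy's implementation; the function names path_beg and choose_backend are made up for the example.

```python
def path_beg(path: str, prefix: str, ignore_case: bool = True) -> bool:
    """Illustrative stand-in for `path_beg -i`: does the URL path start with prefix?"""
    if ignore_case:
        path, prefix = path.lower(), prefix.lower()
    return path.startswith(prefix)


def choose_backend(path: str) -> str:
    # Mirrors the three frontend rules:
    #   acl url_appX path_beg -i /appX/
    #   use_backend appX-backend if url_appX
    #   default_backend appZ-backend
    if path_beg(path, "/appX/"):
        return "appX-backend"
    return "appZ-backend"


# Print the backend chosen for a few sample paths.
for sample in ("/appX/login", "/APPX/login", "/appX", "/other"):
    print(sample, "->", choose_backend(sample))
```

The third sample, /appX with no trailing slash, falls through to the default backend because the pattern itself ends with a slash.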

Algorithms

HAProxy comes with a fairly large number of options when it comes to choosing the method in which you want requests to be served to your backend resources. Below are a few of the more common ones as well as a short description of how each will work.

Round Robin

Each server is used in turn, starting with the first one listed in a given backend; once the end of the list is reached, the next request goes back to the first server. HAProxy uses this algorithm by default if none is specified when building a backend.
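
As a rough illustration of the rotation, the following Python sketch cycles through a list of server names. It deliberately ignores server weights and health checks, both of which HAProxy takes into account; the server names are borrowed from the backend example earlier in this guide.

```python
from itertools import cycle


def round_robin(servers):
    """Return an endless iterator that walks the list in order and wraps around."""
    return cycle(servers)


picker = round_robin(["appX_01", "appX_02"])

# Five consecutive requests alternate between the two servers.
first_five = [next(picker) for _ in range(5)]
print(first_five)  # ['appX_01', 'appX_02', 'appX_01', 'appX_02', 'appX_01']
```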

Least Connection

Each resource in a given backend is evaluated to determine which one has the fewest active connections, and that resource receives the next request. The developers of HAProxy state that this algorithm (leastconn) is a great option for connections that are expected to last a long time, such as LDAP and SQL, but not for short-lived sessions such as HTTP.
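
The selection step can be sketched as follows. This is a simplified model that assumes the connection counts are already known and ignores weights; the server names and counts are invented for the example.

```python
def least_connection(active):
    """Return the server with the fewest active connections.

    `active` maps server name -> current connection count.
    On a tie, the server listed first in the dict wins.
    """
    return min(active, key=active.get)


# Hypothetical connection counts for three database servers.
active = {"db_01": 12, "db_02": 7, "db_03": 9}

chosen = least_connection(active)
active[chosen] += 1  # the new connection now counts against the chosen server
print(chosen, active)
```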

Install

Guide created on CentOS 7.6 (Core)

    sudo yum install -y haproxy
    sudo systemctl enable haproxy.service

    # check firewall
    sudo firewall-cmd --list-all
    [sudo] password for cloud_user:
    public (active)
      target: default
      icmp-block-inversion: no
      interfaces: eth0
      sources:
      services: dhcpv6-client http ssh   # <- note http:80
      ports:                             # is allowed
      protocols:
      masquerade: no
      forward-ports:
      source-ports:
      icmp-blocks:
      rich rules:

    # add permanent ingress rule to allow port 8080
    sudo firewall-cmd --permanent --add-port=8080/tcp
    sudo firewall-cmd --reload
    ...
      ports: 8080/tcp
    ...

Structure

Files & Folders

Below are a number of the files and directories you can expect to find in a default HAProxy installation on a Debian-based OS. Regardless of the host OS, the files serve essentially the same purpose. While a number of files and directories are listed, the one that matters most is the haproxy.cfg file located at /etc/haproxy/haproxy.cfg.

- /usr/sbin/haproxy - Default location of the binary.

- /usr/share/doc/haproxy - Built-in documentation.

- /etc/init.d/haproxy - init script used to control the HAProxy process/service.

- /etc/default/haproxy - File sourced by the init script; it sets the location of the haproxy.cfg file.

- /etc/haproxy - Default location of the haproxy.cfg file which determines all functions of HAProxy.

Configuration File

HAProxy functions are completely controlled by the haproxy.cfg file. This file is where you will build your frontends and backends as well as various other settings, which are described below. Here is an example of a basic haproxy.cfg file.

    global
        log 127.0.0.1 local0
        daemon
        maxconn 256

    #---------------------------------------------------------------------
    # common defaults that all the 'listen' and 'backend' sections will
    # use if not designated in their block
    #---------------------------------------------------------------------
    defaults
        mode http
        timeout connect 5000ms
        timeout client 50000ms
        timeout server 50000ms
        option forwardfor
        option http-server-close

    #---------------------------------------------------------------------
    # main frontend which proxies to the backends
    #---------------------------------------------------------------------
    frontend http-in
        bind *:80
        rspadd X-Forwarded-Host:\ http:\\\\example.com
        default_backend appZ-backend

    backend appZ-backend
        balance roundrobin
        server appZ_01 IP_OF_MACHINE_01:8080 check
        server appZ_02 IP_OF_MACHINE_02:8080 check
        server appZ_03 IP_OF_MACHINE_03:8080 check
        server appZ_04 IP_OF_MACHINE_04:8080 check

With this example, you can see how the different pieces discussed so far come together to create a working configuration. The global and defaults sections are new, but their syntax is similar enough to the frontend and backend sections that you should be able to work out what they do. The lines that may not be immediately obvious are explained below.

mode http

As mentioned previously, HAProxy can load balance at layer 4 or layer 7 of the OSI model. With the mode set to http, HAProxy can inspect the HTTP headers of every request and modify or redirect each request individually. The other option is tcp, which lets HAProxy route by IP address and port only, ignoring all HTTP headers.

Reference: https://cbonte.github.io/haproxy-dconv/1.6/configuration.html#mode

timeout

HAProxy lets you specify timeouts for various aspects of the HTTP and TCP protocols. The example configuration sets three of them: connect (the maximum time to wait for a connection attempt to a server to succeed), client (the maximum inactivity time on the client side) and server (the maximum inactivity time on the server side).

With timeouts set, HAProxy can release the resources held by stalled or idle connections so that they become available for new connections. Because a large number of timeouts are available, the documentation provides a much more thorough overview of what is possible.

Reference: https://cbonte.github.io/haproxy-dconv/1.6/configuration.html#timeout

option forwardfor

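Makes HAProxy add an X-Forwarded-For header, containing the client's IP address, to each request it forwards. Without it, the backend servers only see HAProxy's address as the source of every connection.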
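
Conceptually, the proxy appends one header to the request before passing it on. The Python sketch below models only that step on a plain list of header pairs; the function name and the sample addresses are invented for illustration and are not part of HAProxy.

```python
def add_forwarded_for(headers, client_ip):
    """Return a new header list with X-Forwarded-For appended for client_ip.

    `headers` is a list of (name, value) tuples; the input list is not modified.
    """
    return headers + [("X-Forwarded-For", client_ip)]


# Hypothetical request as received from a client at 203.0.113.7.
request_headers = [("Host", "example.com"), ("Accept", "*/*")]
forwarded = add_forwarded_for(request_headers, "203.0.113.7")
print(forwarded)
```

A backend application would then read the X-Forwarded-For value instead of the TCP source address when it needs the real client IP.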

option http-server-close

This option controls how HAProxy handles the server side of a connection. By default, HAProxy runs in keep-alive mode, which means that connections are kept open and idle between requests. With this option, HAProxy closes the server-side connection once the response has been received, so idle connections do not consume resources on the servers, while the client side can still use keep-alive.

Reference: https://cbonte.github.io/haproxy-dconv/1.6/configuration.html#option%20http-server-close

rspadd X-Forwarded-Host:\

rspadd appends a header to every response that HAProxy sends back to the client; here it adds X-Forwarded-Host. It is independent of option forwardfor, which adds X-Forwarded-For to requests on their way to the servers. One of the more common uses of this is when hosting various applications behind HAProxy and you want to ensure that your end users only see responses coming from HAProxy and not from the machines behind it, which can occasionally occur with asynchronous processes. Note that rspadd was removed in HAProxy 2.1; newer versions use http-response add-header instead.

Usage

To put the above information into context, below are a few basic example configurations that illustrate how one would set up HAProxy for different scenarios.

Active/Passive

This configuration can be used when you need to route all traffic to one machine until it goes offline, at which point HAProxy automatically begins routing traffic to the second machine.

    backend appZ-backend
        server appZ_01 192.168.2.2:8080 check
        server appZ_02 192.168.2.3:8080 check backup
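
The effect of the backup keyword can be modelled with a short Python sketch. It is a simplification that assumes a single primary server, as in the configuration above, and that the health state of each server is already known; pick_server is a made-up helper name.

```python
def pick_server(servers):
    """Choose a server from (name, is_up, is_backup) tuples in config order.

    Healthy non-backup servers are preferred; a backup server is used
    only when no non-backup server is up. Returns None if nothing is up.
    """
    for want_backup in (False, True):
        for name, is_up, is_backup in servers:
            if is_up and is_backup == want_backup:
                return name
    return None


# Normal operation: the primary takes the traffic.
print(pick_server([("appZ_01", True, False), ("appZ_02", True, True)]))

# Primary offline: traffic moves to the backup.
print(pick_server([("appZ_01", False, False), ("appZ_02", True, True)]))
```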

ACL Request

With this configuration, you can route a given incoming request to different backends as needed. If you run multiple applications behind HAProxy, this will allow you to add additional backend resources as needed to each individual application instead of increasing both at the same time.

    frontend http-in
        bind *:80
        rspadd X-Forwarded-Host:\ http:\\\\example.com
        acl url_appY path_beg -i /appY/
        use_backend appY-backend if url_appY
        default_backend appZ-backend

    backend appY-backend
        balance roundrobin
        server appY_01 192.168.2.2:8080 check
        server appY_02 192.168.2.3:8080 check

    backend appZ-backend
        server appZ_01 192.168.2.4:8080 check
        server appZ_02 192.168.2.5:8080 check backup

Configuration of HAProxy

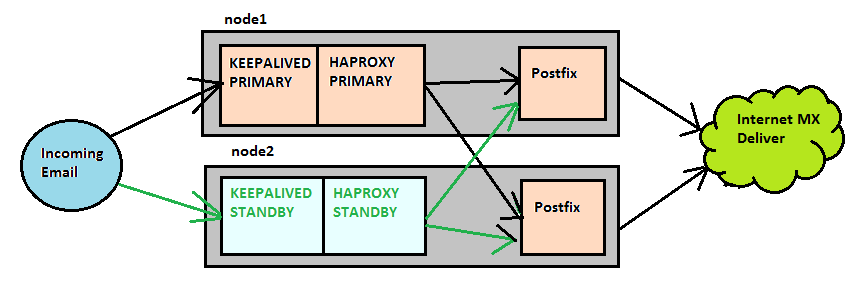

Application Stack with HAProxy, Keepalived and Postfix Example

Outgoing mail is directed via a DNS record, dc1server00.home.local or dc2server00.home.local depending on the datacenter, to the VRRP address that is maintained by Keepalived.

HAProxy listens on port 25 (SMTP) on both nodes in an active/standby fashion and is configured to direct connections to Postfix on both nodes (active/active), so both nodes in the cluster are live and servicing email at all times.

Postfix then delivers the email to the customer via normal MX delivery.

Keepalived

Keepalived uses the VRRP (version 2) protocol to maintain a virtual IP address that can float between the nodes in a cluster. For this system there is a primary and a backup server, set by priority in the configuration. Keepalived is configured to check that HAProxy is running on the master; if it is not, the master's effective priority drops below that of the backup, which then takes over the floating address.
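
The priority arithmetic behind this failover can be sketched in Python. This is a simplified model of how a tracked script with a non-zero weight adjusts priority, not keepalived's actual code. The backup priority of 100 is an assumption made for the example; 101 and 20 are the priority and track_script weight used in the configuration in this guide.

```python
def effective_priority(base, weight, script_ok):
    """Priority after applying a tracked script with a non-zero weight.

    Positive weight: added to the base priority while the script succeeds.
    Negative weight: applied (lowering the priority) while the script fails.
    """
    if weight > 0:
        return base + weight if script_ok else base
    return base if script_ok else base + weight


def elect_master(nodes):
    """Return the node name with the highest effective priority.

    `nodes` maps name -> (base_priority, weight, script_ok).
    """
    return max(nodes, key=lambda name: effective_priority(*nodes[name]))


# HAProxy healthy on both nodes: 121 vs 120, the primary holds the address.
print(elect_master({"primary": (101, 20, True), "backup": (100, 20, True)}))

# HAProxy down on the primary: 101 vs 120, the backup takes over.
print(elect_master({"primary": (101, 20, False), "backup": (100, 20, True)}))
```

The weight has to be larger than the gap between the two base priorities; otherwise losing the bonus would not be enough to change which node wins.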

Setup, on both servers in the cluster:

Install keepalived with yum (as root):

    # yum install keepalived

Add the following line to the bottom of /etc/sysctl.conf. It allows processes to bind to an IP address that is not currently assigned to the machine, which HAProxy needs in order to bind to the floating address on the node that does not hold it.

    net.ipv4.ip_nonlocal_bind = 1

Apply the change:

    # sysctl -p

Enable keepalived to start on boot:

    # systemctl enable keepalived.service

DC1:

    cat /etc/keepalived/keepalived.conf

    # GLOBAL SETTINGS
    global_defs {
        notification_email {
            support@example.com    # Set from Ansible haproxy deployment
        }
        notification_email_from keepalive@dc1server.home.local
        smtp_server 172.31.101.115    # ip address for smtp_relay
        smtp_connect_timeout 20
    }

    vrrp_script chk_haproxy {
        script "systemctl status haproxy"    # succeeds only while the haproxy service is running
        interval 2                           # check every 2 seconds
        weight 2                             # add 2 points of prio if OK
    }

    vrrp_instance dc1server {
        state MASTER
        interface eth0
        virtual_router_id 50
        priority 101
        advert_int 1
        track_interface {
            eth0
        }
        track_script {
            chk_haproxy weight 20    # overrides the weight of 2 set in vrrp_script
        }
        authentication {
            auth_type PASS
            auth_pass SOMEPASSWORD!    # only the first 8 characters are used
        }
        virtual_ipaddress {
            10.0.10.3 dev eth0
        }
    }

DC2:

    cat /etc/keepalived/keepalived.conf

    # GLOBAL SETTINGS
    global_defs {
        notification_email {
            support@example.com    # set from Ansible haproxy deployment
        }
        notification_email_from keepalive@dc2server.home.local
        smtp_server 172.31.101.115    # ip address for robot
        smtp_connect_timeout 20
    }

    vrrp_script chk_haproxy {
        script "systemctl status haproxy"    # succeeds only while the haproxy service is running
        interval 2                           # check every 2 seconds
        weight 2                             # add 2 points of priority if OK
    }

    vrrp_instance dc2server {
        state MASTER
        interface eth0
        virtual_router_id 50
        priority 101
        advert_int 1
        track_interface {
            eth0
        }
        track_script {
            chk_haproxy weight 20    # overrides the weight of 2 set in vrrp_script
        }
        authentication {
            auth_type PASS
            auth_pass SOMEPASS
        }
        virtual_ipaddress {
            10.0.20.3 dev eth0
        }
    }

HAProxy

The function of HAProxy is to load balance connections to Postfix on either node in the cluster, which allows for an “active/active” setup. Because there is no way to tell how much work has been submitted to each node, I have opted for a simple round-robin load balancing algorithm. HAProxy sends an SMTP HELO to check that the Postfix ports are available and listening. HAProxy is also configured to present statistics, and the ability to drain servers, via a browser at https://hostname:9000/ (the stats listener is bound with ssl).
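
For the SMTP health check, HAProxy treats a server as healthy only when the reply code to the HELO starts with a 2. The Python sketch below shows just that decision on a reply line; it does not open a network connection, and the function name and sample replies are invented for illustration.

```python
def smtp_reply_is_healthy(reply_line: str) -> bool:
    """Return True only when the SMTP reply code starts with '2'.

    An empty string stands in for a missing reply and counts as a failure.
    """
    return reply_line[:1] == "2"


# Hypothetical replies a Postfix node might give to the HELO check.
print(smtp_reply_is_healthy("250 dc1server01.home.local"))  # healthy
print(smtp_reply_is_healthy("421 Service not available"))   # unhealthy
print(smtp_reply_is_healthy(""))                            # no reply, unhealthy
```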

- Setup on both servers in the cluster

Install haproxy with yum:

    # yum install haproxy

Enable haproxy to start on boot:

    # systemctl enable haproxy.service

DC1:

    cat /etc/haproxy/haproxy.cfg

    global
        chroot /var/lib/haproxy
        pidfile /var/run/haproxy.pid
        maxconn 4000
        user haproxy
        group haproxy
        daemon
        stats socket /var/lib/haproxy/stats
        log 172.31.0.254 local2
        ssl-default-bind-ciphers kEECDH+aRSA+AES:kRSA+AES:+AES256:RC4-SHA

    defaults
        mode tcp
        log global
        option tcplog
        option dontlognull
        option redispatch
        retries 3
        timeout queue 1m
        timeout connect 10s
        timeout client 1m
        timeout server 1m
        timeout check 10s
        maxconn 3000

    listen stats
        bind *:9000 ssl crt /etc/pki/tls/private/
        mode http
        stats enable
        stats hide-version
        stats uri /
        stats realm HAProxy\ Statistics
        stats auth admin:p@ssw0rd
        stats admin if TRUE

    frontend smtpdc1
        bind 10.0.10.3:25
        use_backend emailservice

    backend emailservice
        description Emailservice
        balance roundrobin
        option tcplog
        option smtpchk HELO haproxy
        server dc1server01 10.0.10.1:25 check
        server dc1server02 10.0.10.2:25 check

DC2:

    cat /etc/haproxy/haproxy.cfg

    global
        chroot /var/lib/haproxy
        pidfile /var/run/haproxy.pid
        maxconn 4000
        user haproxy
        group haproxy
        daemon
        stats socket /var/lib/haproxy/stats
        log 172.31.0.254 local2
        ssl-default-bind-ciphers kEECDH+aRSA+AES:kRSA+AES:+AES256:RC4-SHA

    defaults
        mode tcp
        log global
        option tcplog
        option dontlognull
        option redispatch
        retries 3
        timeout queue 1m
        timeout connect 10s
        timeout client 1m
        timeout server 1m
        timeout check 10s
        maxconn 3000

    listen stats
        bind *:9000 ssl crt /etc/pki/tls/private/
        mode http
        stats enable
        stats hide-version
        stats uri /
        stats realm HAProxy\ Statistics
        stats auth admin:p@ssw0rd
        stats admin if TRUE

    frontend smtpdc1
        bind 10.0.20.3:25
        use_backend emailservice

    backend emailservice
        description Emailservice
        balance roundrobin
        option tcplog
        option smtpchk HELO haproxy
        server dc2server01 10.0.20.1:25 check
        server dc2server02 10.0.20.2:25 check

Troubleshooting

    [root@dc1server01 ~]# fgrep -i keep /var/log/messages*
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived[1141]: Starting Keepalived v1.2.13 (11/18,2014)
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived[1563]: Starting Healthcheck child process, pid=1564
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived[1563]: Starting VRRP child process, pid=1565
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Netlink reflector reports IP 10.0.10.1 added
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Netlink reflector reports IP fe80::215:5dff:fee3:5004 added
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Registering Kernel netlink reflector
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Registering Kernel netlink command channel
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Registering gratuitous ARP shared channel
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Opening file '/etc/keepalived/keepalived.conf'.
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Truncating auth_pass to 8 characters
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Configuration is using : 66367 Bytes
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Using LinkWatch kernel netlink reflector...
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: VRRP_Script(chk_haproxy) succeeded
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_healthcheckers[1564]: Netlink reflector reports IP 10.0.10.1 added
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_healthcheckers[1564]: Netlink reflector reports IP fe80::215:5dff:fee3:5004 added
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_healthcheckers[1564]: Registering Kernel netlink reflector
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_healthcheckers[1564]: Registering Kernel netlink command channel
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_healthcheckers[1564]: Opening file '/etc/keepalived/keepalived.conf'.
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_healthcheckers[1564]: Configuration is using : 7586 Bytes
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_healthcheckers[1564]: Using LinkWatch kernel netlink reflector...
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: VRRP_Instance(dc1server) Transition to MASTER STATE
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: VRRP_Instance(dc1server) Received higher prio advert
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: VRRP_Instance(dc1server) Entering BACKUP STATE
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_healthcheckers[1564]: Netlink reflector reports IP fe80::215:5dff:fee3:5004 added
    /var/log/messages-20160822:Aug 22 09:30:58 dc1server01 Keepalived_vrrp[1565]: Netlink reflector reports IP fe80::215:5dff:fee3:5004 added
    /var/log/messages-20160822:Aug 22 09:38:29 dc1server01 Keepalived_vrrp[1565]: VRRP_Instance(dc1server) Transition to MASTER STATE
    /var/log/messages-20160822:Aug 22 09:38:30 dc1server01 Keepalived_vrrp[1565]: VRRP_Instance(dc1server) Entering MASTER STATE
    /var/log/messages-20160822:Aug 22 09:38:30 dc1server01 Keepalived_vrrp[1565]: VRRP_Instance(dc1server) setting protocol VIPs.
    /var/log/messages-20160822:Aug 22 09:38:30 dc1server01 Keepalived_vrrp[1565]: VRRP_Instance(dc1server) Sending gratuitous ARPs on eth0 for 10.0.10.3
    /var/log/messages-20160822:Aug 22 09:38:30 dc1server01 Keepalived_healthcheckers[1564]: Netlink reflector reports IP 10.0.10.3 added
    /var/log/messages-20160822:Aug 22 09:38:35 dc1server01 Keepalived_vrrp[1565]: VRRP_Instance(dc1server) Sending gratuitous ARPs on eth0 for 10.0.10.3
    /var/log/messages-20160822:Aug 22 09:44:53 dc1server01 Keepalived_vrrp[1565]: VRRP_Instance(dc1server) Received lower prio advert, forcing new election

    [root@dc2server01 ~]# fgrep -i keep /var/log/messages*
    /var/log/messages:Aug 22 10:53:04 dc2server01 Keepalived_vrrp[1234]: VRRP_Script(chk_haproxy) failed
    /var/log/messages:Aug 22 10:53:05 dc2server01 Keepalived_vrrp[1234]: VRRP_Instance(dc2server) Received higher prio advert
    /var/log/messages:Aug 22 10:53:05 dc2server01 Keepalived_vrrp[1234]: VRRP_Instance(dc2server) Entering BACKUP STATE
    /var/log/messages:Aug 22 10:53:05 dc2server01 Keepalived_vrrp[1234]: VRRP_Instance(dc2server) removing protocol VIPs.
    /var/log/messages:Aug 22 10:53:05 dc2server01 Keepalived_healthcheckers[1233]: Netlink reflector reports IP 10.0.20.3 removed
    /var/log/messages:Aug 22 11:04:49 dc2server01 Keepalived_vrrp[1234]: VRRP_Script(chk_haproxy) succeeded

- Multiline config

The haproxy.cfg format does not support multi-line syntax: each directive must be written on a single line, as defined in the configuration file format documentation.