Kubernetes/Deployment, ReplicaSet and Pod

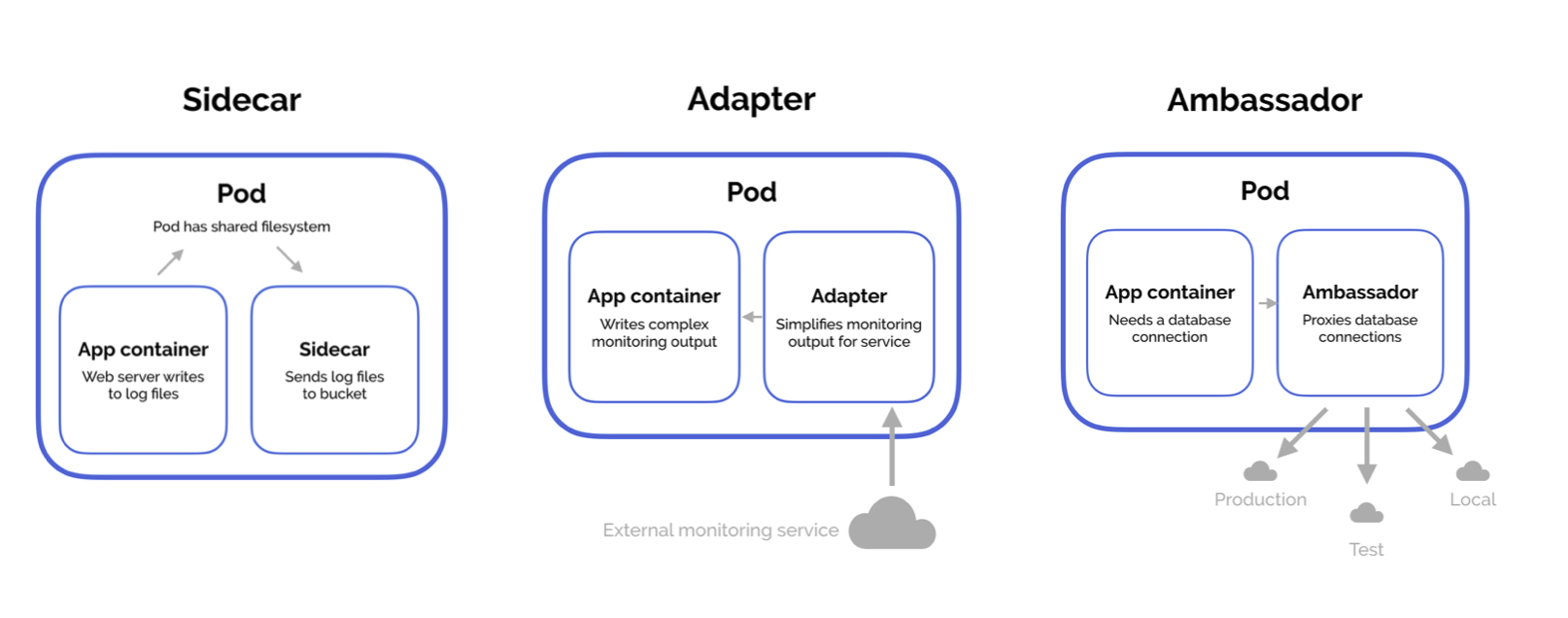

Multi-Container Pod Design Patterns in Kubernetes

There are three common design patterns and use-cases for combining multiple containers into a single pod. We’ll walk through the sidecar pattern, the adapter pattern, and the ambassador pattern. Look to the end of the post for example YAML files for each of these.

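- Sidecar pattern - a helper container that extends or enhances the main container; common sidecar containers are logging utilities, sync services, watchers, and monitoring agents

- Adapter pattern - a container that transforms the main container's output into a standard format, for example converting logs or metrics into the shape a monitoring system expects

- Ambassador pattern - a container that proxies the main container's connections to the outside world, for example a local proxy in front of a database or an external service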
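A minimal sketch of the sidecar pattern follows. It assumes an nginx main container whose log directory is shared with a busybox sidecar through an `emptyDir` volume. The names `sidecar-demo`, `log-tailer` and `logs` are hypothetical. Mounting the volume over `/var/log/nginx` hides the image's default stdout symlinks, so nginx writes a real `access.log` file that the sidecar can follow.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: sidecar-demo              # hypothetical name
spec:
  volumes:
    - name: logs                  # scratch volume shared by both containers
      emptyDir: {}
  containers:
    - name: nginx                 # main application container
      image: nginx:1.16.0
      volumeMounts:
        - name: logs
          mountPath: /var/log/nginx
    - name: log-tailer            # sidecar: streams the main container's access log to its own stdout
      image: busybox
      command: ['sh', '-c', 'tail -F /var/log/nginx/access.log']
      volumeMounts:
        - name: logs
          mountPath: /var/log/nginx
```

Once the pod is running, `kubectl logs sidecar-demo -c log-tailer` should show nginx's access log lines.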

Pods

A basic pod that displays its pod name and the node it is running on:

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: hello-world-pod
spec:
  containers:
    - name: hello-world-container
      image: paulbouwer/hello-kubernetes:1.5
```

Port-forward to access that pod:

```
$> kubectl port-forward hello-world-pod 8080:8080
$> links2 http://localhost:8080      # in a second terminal; port-forward keeps running in the first

Hello world!
pod: hello-world-pod
node: Linux (4.15.0-1044-gke)
```

Deployment

The Deployment kind manages the whole application life cycle: rollout, scaling, updates and rollbacks. It does this by managing lower-level objects: a Deployment owns ReplicaSets, and each ReplicaSet owns Pods.

The example manifests below compare a Deployment, a ReplicaSet and a Pod.

Deployment:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubeapp-deployment
  # labels:            <lines to verify>
  #   app: kubeapp
  #   tier: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: kubeapp
  template:
    metadata:
      name: kubeapp
      labels:
        app: kubeapp
    spec:
      containers:
        - image: nginx:1.16.0
          name: app
```

ReplicaSet (individual manifest):

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: kubeapp-replicaset
  labels:
    app: kubeapp
    tier: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
  template:
    metadata:
      labels:
        tier: frontend
    spec:
      containers:
        - image: nginx:1.16.0
          name: main
```

Pod (manifest):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kubeapp-pod1
  labels:
    app: kubeapp
    tier: frontend
spec:
  containers:
    - image: nginx:1.16.0
      name: main
      command: ['echo']      # overrides nginx's startup command, so the container prints and exits
      args: ['Nginx pod']
      ports:
        - containerPort: 80
```

Defining containerPort is essentially documentation: it tells other developers which port the container listens on, and leaving it out does not stop the port from being reachable. Kubernetes borrows this idea from Docker, whose EXPOSE instruction does the same.

From Docker documentation: The EXPOSE instruction does not actually publish the port. It functions as a type of documentation between the person who builds the image and the person who runs the container...
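One case where the `ports` list does more than document: a port can be given a `name`, and a Service can refer to that name in `targetPort` instead of a number. The sketch below assumes hypothetical names `kubeapp-named-port` and `kubeapp-http`; the Service resolves `http` to container port 80 on each selected Pod.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kubeapp-named-port        # hypothetical name
  labels:
    app: kubeapp
spec:
  containers:
    - name: main
      image: nginx:1.16.0
      ports:
        - name: http              # name other objects can refer to
          containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: kubeapp-http              # hypothetical name
spec:
  selector:
    app: kubeapp
  ports:
    - port: 80
      targetPort: http            # looked up by name on the selected Pods
```

If the container later moves to another port, only the Pod spec changes; the Service keeps pointing at `http`.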

Create Deployment

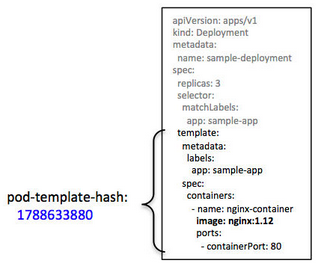

Pod hash

Note the ReplicaSet's SELECTOR column: its label pod-template-hash=6c5948bf66 also appears in the Pod names.

```
$ kubectl get all -owide -n sample-app
NAME                                     READY   STATUS    RESTARTS   AGE    IP            NODE            NOMINATED NODE   READINESS GATES
pod/sample-deployment-6c5948bf66-5bqhw   1/1     Running   0          114s   10.244.2.10   ip-10-0-1-102   <none>           <none>
pod/sample-deployment-6c5948bf66-t5vg5   1/1     Running   0          114s   10.244.1.11   ip-10-0-1-103   <none>           <none>
pod/sample-deployment-6c5948bf66-xv6cb   1/1     Running   0          114s   10.244.1.10   ip-10-0-1-103   <none>           <none>

NAME                                READY   UP-TO-DATE   AVAILABLE   AGE    CONTAINERS        IMAGES       SELECTOR
deployment.apps/sample-deployment   3/3     3            3           114s   nginx-container   nginx:1.12   app=sample-app

NAME                                           DESIRED   CURRENT   READY   AGE    CONTAINERS        IMAGES       SELECTOR
replicaset.apps/sample-deployment-6c5948bf66   3         3         3       114s   nginx-container   nginx:1.12   app=sample-app,pod-template-hash=6c5948bf66
```
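To see where the hash comes from, inspect the ReplicaSet with `kubectl get rs sample-deployment-6c5948bf66 -n sample-app -o yaml`. The abridged sketch below is a reconstruction, not verbatim output (status, uid, ownerReferences and other server-set fields are omitted). It shows the Deployment controller adding `pod-template-hash` to the ReplicaSet's labels, its selector and its Pod template, which is why every Pod carries the same hash.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: sample-deployment-6c5948bf66
  namespace: sample-app
  labels:
    app: sample-app
    pod-template-hash: "6c5948bf66"   # added by the Deployment controller
spec:
  replicas: 3
  selector:
    matchLabels:
      app: sample-app
      pod-template-hash: "6c5948bf66" # keeps this ReplicaSet from adopting Pods of other revisions
  template:
    metadata:
      labels:
        app: sample-app
        pod-template-hash: "6c5948bf66"
    spec:
      containers:
        - name: nginx-container
          image: nginx:1.12
```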

Create a Deployment and ReplicaSets

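Create a deployment with --record so the command is saved in the rollout history (useful for rollbacks). The Deployment creates a ReplicaSet, which manages a number of Pods. The ReplicaSet's name is the Deployment name with a hash of the Pod template appended (an alphanumeric string such as 674dd4d9cd), and each Pod name adds a further random suffix.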

- Using cli

```
# create a pod
kubectl run --generator=run-pod/v1 <pod_name> --image=<image_of_the_container_of_the_pod>
# (from kubectl 1.18 the other generators are removed and `kubectl run` only creates Pods)

# the default 'deployment' generator and all generators other than 'run-pod' are deprecated in v1.15
kubectl run --generator=deployment/apps.v1 <deployment_name> --image=<image_to_use_in_the_container_of_the_deployment_pod>

# the advised way to create a deployment is `create`, then scale the replicas, as the default is 1
kubectl create deployment webapp --image=nginx --dry-run=client --output=yaml > webapp-deployment.yaml   # only writes the manifest (older kubectl: --dry-run)
kubectl -n web create deployment webapp --image=nginx
kubectl -n web scale deployment webapp --replicas=3
```
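For reference, the dry-run `kubectl create deployment webapp --image=nginx` command above writes a manifest roughly like the one below into `webapp-deployment.yaml`. Exact fields and formatting vary between kubectl versions, so treat this as a sketch of the shape rather than verbatim output.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: webapp               # label derived from the deployment name
  name: webapp
spec:
  replicas: 1                 # the default, hence the separate scale step
  selector:
    matchLabels:
      app: webapp
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: webapp
    spec:
      containers:
        - image: nginx
          name: nginx         # container name derived from the image name
          resources: {}
status: {}
```

Instead of scaling afterwards, you can set `replicas: 3` in this file and create it with `kubectl -n web apply -f webapp-deployment.yaml`.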

- Using manifests

```
kubectl create -f kubeapp-deployment.yaml --record   # records the command in the revision history, so it's easy to roll back
kubectl rollout status deployments kubeapp           # check the status of the rollout
deployment "kubeapp" successfully rolled out

kubectl get replicasets                              # name is <deploymentName> with appended <PodTemplateHash>
NAME                 DESIRED   CURRENT   READY   AGE
kubeapp-674dd4d9cd   3         3         3       93s

kubectl get pods                                     # name is <ReplicaSetName> with appended <generated-podID>
NAME                       READY   STATUS    RESTARTS   AGE
kubeapp-674dd4d9cd-f2rk8   1/1     Running   0          3m36s
kubeapp-674dd4d9cd-gnq7v   1/1     Running   0          5m51s
kubeapp-674dd4d9cd-kc6lt   1/1     Running   0          3m37s
```

Deployment operations

Scale up your deployment by adding more replicas:

```
kubectl scale deployment kubeapp --replicas=5
kubectl get pods   # the Pods share the ReplicaSet's hash (674dd4d9cd); only the last suffix is unique per Pod
NAME                       READY   STATUS    RESTARTS   AGE
kubeapp-674dd4d9cd-f2rk8   1/1     Running   0          3m36s
kubeapp-674dd4d9cd-gnq7v   1/1     Running   0          5m51s
kubeapp-674dd4d9cd-kc6lt   1/1     Running   0          3m37s
kubeapp-674dd4d9cd-n5wnp   1/1     Running   0          5m51s
kubeapp-674dd4d9cd-ptt26   1/1     Running   0          3m36s
```

Expose the deployment and provide it a service:

```
kubectl expose deployment kubeapp --port 80 --target-port 80 --type NodePort
service/kubeapp exposed

kubectl get service
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubeapp      NodePort    10.111.72.150   <none>        80:31472/TCP   11m
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        18d
```
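The `kubectl expose` command above creates a Service roughly equivalent to the manifest below. This is a sketch, assuming the Deployment's selector is `app: kubeapp` (as in the Deployment manifest earlier). `nodePort` is left out so Kubernetes picks one from its NodePort range (30000-32767 by default), which is how 31472 was assigned above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kubeapp               # kubectl expose reuses the Deployment's name
spec:
  type: NodePort
  selector:
    app: kubeapp              # taken from the Deployment's selector
  ports:
    - protocol: TCP
      port: 80                # port on the Service's cluster IP
      targetPort: 80          # container port that traffic is forwarded to
      # nodePort: 31472       # optional; omitted so one is allocated automatically
```

Writing the Service as a manifest keeps it under version control next to `kubeapp-deployment.yaml`, at the cost of keeping the selector in sync by hand.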

Set the minReadySeconds attribute on your deployment. It slows the rollout down, so you can watch the changes in real time:

```
kubectl patch deployment kubeapp -p '{"spec": {"minReadySeconds": 10}}'
kubectl edit deployments.apps kubeapp   # see the change above
```
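In the Deployment manifest, the patch above corresponds to a single field directly under `spec`. The fragment below shows only that part; the rest of the `kubeapp` Deployment is unchanged.

```yaml
# Fragment of the kubeapp Deployment after the patch (other fields unchanged)
spec:
  minReadySeconds: 10   # a new Pod must stay Ready for 10 s before it counts as available
```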

Use kubectl apply to update a deployment. It modifies an existing object to match the new YAML, or creates the object if it does not exist:

```
kubectl describe deployments.apps kubeapp | grep Image   # before
    Image:        nginx:1.16.0

kubectl apply -f kubeapp-deployment.yaml   # after changing the image in the file from nginx:1.16.0 to nginx:1.17.1

kubectl describe deployments.apps kubeapp | grep Image   # after
    Image:        nginx:1.17.1
```

Use kubectl replace to replace an existing deployment. It updates the existing object, so the object must already exist:

```
kubectl replace -f kubeapp-deployment.yaml
```

Rolling update and undo

A rolling update replaces Pods gradually, which avoids application downtime:

```
# Run this curl loop (in a separate terminal) while the update happens;
# with a NodePort service, use the node port, e.g. 31472 from the service above:
while true; do curl http://<NodeIP>:<NodePort>; done

# Perform the rolling update (--v 6 gives verbose output):
kubectl set image deployments/kubeapp app=nginx:1.17.1 --v 6

# Check that a new ReplicaSet has been created:
kubectl describe replicasets kubeapp-[hash]
kubectl get replicasets
NAME                 DESIRED   CURRENT   READY   AGE
kubeapp-674dd4d9cd   0         0         0       43m
kubeapp-99c897449    0         0         0       6m19s
kubeapp-d79844ffd    3         3         3       14m     # new ReplicaSet

# Look at the rollout history:
kubectl rollout history deployment kubeapp
deployment.extensions/kubeapp
REVISION  CHANGE-CAUSE
3         kubectl create --filename=kubeapp-deployment.yaml --record=true
4         <none>     # empty because --record was not set for this kubectl command
5         kubectl create --filename=kubeapp-deployment.yaml --record=true
```
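How gradually Pods are replaced is controlled by the Deployment's `strategy` field. The fragment below is a sketch with illustrative values (the defaults are 25% for both limits). With `maxUnavailable: 0`, the controller only removes an old Pod after a new one is available, so serving capacity never drops below the desired replica count during the update.

```yaml
# Fragment of the kubeapp Deployment: rolling update tuning (illustrative values)
spec:
  strategy:
    type: RollingUpdate      # the default strategy for Deployments
    rollingUpdate:
      maxSurge: 1            # at most 1 Pod above the desired replica count during the update
      maxUnavailable: 0      # never go below the desired replica count
```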

Note: without --record, the CHANGE-CAUSE column for that revision is <none>. A revision is created for every rollout either way; --record only fills in the change cause.

```
$ kubectl rollout history -n sock-shop deployment
deployment.extensions/carts
...
deployment.extensions/catalogue-db
REVISION  CHANGE-CAUSE
1         kubectl apply --filename=. --record=true

deployment.extensions/front-end
REVISION  CHANGE-CAUSE
2         kubectl apply --filename=front-end-dep.yaml --record=true
3         kubectl apply --filename=front-end-dep.yaml --record=true
```
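The CHANGE-CAUSE column is read from the `kubernetes.io/change-cause` annotation on the Deployment, which is what `--record` sets. Newer kubectl versions mark `--record` as deprecated, so an alternative is to set the annotation yourself. The fragment below is a sketch; the message text is a hypothetical example.

```yaml
# Fragment of the kubeapp Deployment: metadata only
metadata:
  name: kubeapp
  annotations:
    kubernetes.io/change-cause: "image updated to nginx:1.17.1"   # shown as CHANGE-CAUSE for this revision
```

The same annotation can be set from the CLI with `kubectl annotate deployment kubeapp kubernetes.io/change-cause="image updated to nginx:1.17.1"`.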

Undo the rollout and roll back to the previous version. This is possible because the deployment keeps a revision history, which is stored in the underlying ReplicaSets: old ReplicaSets are scaled down to 0 but not deleted.

```
kubectl rollout undo deployment kubeapp --to-revision=2   # roll back to a specific revision if needed
kubectl rollout undo deployments kubeapp                  # roll back to the previous revision (this is the one applied here)
kubectl get replicasets
NAME                 DESIRED   CURRENT   READY   AGE
kubeapp-674dd4d9cd   0         0         0       46m
kubeapp-99c897449    3         3         3       8m52s   # rolled back to the previous ReplicaSet
kubeapp-d79844ffd    0         0         0       16m

# Pause the rollout in the middle of a rolling update (canary release):
kubectl rollout pause deployment kubeapp
# Resume the rollout once the rolling update looks good:
kubectl rollout resume deployment kubeapp
```
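How far back you can roll is bounded by how many old ReplicaSets the Deployment keeps, set with `revisionHistoryLimit` (default 10). A sketch of the fragment:

```yaml
# Fragment of the kubeapp Deployment: rollback history
spec:
  revisionHistoryLimit: 10   # old (scaled-to-0) ReplicaSets kept for rollback; 0 disables rollback
```

A lower value reduces clutter in `kubectl get replicasets`, at the cost of fewer revisions available to `kubectl rollout undo`.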

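StatefulSet

Use a StatefulSet when each pod is important: its Pods get stable ordinal names (kubeweb-0, kubeweb-1, ...), stable DNS names through a headless Service, and their own PersistentVolumeClaim created from volumeClaimTemplates.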

StatefulSet:

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: kubeweb
spec:
  # headless svc, creates ordinal-index names (stable network ID)
  # in the form: <sts>-<index>.<svc>.<ns>.svc.cluster.local
  serviceName: "nginx"   # <- headless service
  replicas: 2
  selector:
    matchLabels:
      app: kubenginx
  template:
    metadata:
      labels:
        app: kubenginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 0.5Gi
```

ReplicaSet:

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: kubeapp-replicaset
  labels:
    app: kubeapp
    tier: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
  template:
    metadata:
      labels:
        tier: frontend
    spec:
      containers:
        - name: main
          image: nginx:1.16.0
```

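As mentioned above, serviceName refers to a headless Service. Its selector must match the StatefulSet's Pod labels (app: kubenginx), otherwise the per-Pod DNS names are not created:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None    # <- makes it headless
  selector:
    app: kubenginx   # must match the StatefulSet's Pod template labels
```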

To test, exec into any of the pods and query the per-Pod DNS name. Once you remove the Service, the names below no longer resolve.

```
# <sts>-<index>.<svc>.<ns>.svc.cluster.local
watch dig +short kubeweb-0.nginx.default.svc.cluster.local
kubeweb-0.nginx.default.svc.cluster.local 10.35.66.166 10.35.65.194
```
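The nginx image does not ship DNS tools, so a small helper Pod is a convenient place to run the lookups. The sketch below uses a hypothetical Pod name `dns-test` and the `busybox:1.28` image, whose `nslookup` is known to work with cluster DNS (some later busybox tags have a less reliable one). It assumes the StatefulSet runs in the `default` namespace.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-test               # hypothetical helper Pod
  namespace: default
spec:
  containers:
    - name: dns-test
      image: busybox:1.28
      command: ['sleep', '3600']   # keep the container alive so you can exec into it
  restartPolicy: Never
```

Then run `kubectl exec -it dns-test -- nslookup kubeweb-0.nginx.default.svc.cluster.local`. Delete the helper with `kubectl delete pod dns-test` when you are done.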