Linux shell/Commands

One liners and aliases

find . -type d -exec chmod 0755 {} \; #find dirs and change their permissions

df -h # disk free shows available disk space for all mounted partitions

du -sch .[^.]* * |sort -h # disk usage summary including hidden files

du -skh * --exclude /data | sort -h # disk usage summary for each directory and sort using human readable size

free -m # displays the amount of free and used memory in the system

lsb_release -a # prints version information for the Linux release you're running

tload # system load on text terminal

# Aliases

alias ll='ls -al --file-type --color --group-directories-first' #group dirs first

# SSH

ssh-keygen -o -a 100 -t ed25519

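# -o :- save the private key in the new OpenSSH format instead of the old PEM format, which is really weak (Ed25519 keys always use the new format)

# -a 100 :- number of KDF (key derivation function) rounds used to secure the key with its passphrase

# -t ed25519 :- key type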

# Sudo

sudo su - jenkins #switch user with a login shell; the environment is reset to that of the target user

# List processes

ps -ef --forest | grep nginx

# Create 100% cpu load, no I/O overloaded so your terminal is still accessible

timeout 10 yes>/dev/null&

cp, mv, mkdir with {,} brace expansion

cp /etc/httpd/httpd.conf{,.bak} #-> cp /etc/httpd/httpd.conf /etc/httpd/httpd.conf.bak

mv /etc/httpd/httpd.conf{.bak,} #-> mv /etc/httpd/httpd.conf.bak /etc/httpd/httpd.conf

mv /etc/httpd/httpd.{conf.bak,conf} #-> mv /etc/httpd/httpd.conf.bak /etc/httpd/httpd.conf

mkdir -p /apache-jail/{usr,bin,lib64,dev}

echo foo{1,2,3}.txt #-> foo1.txt foo2.txt foo3.txt

Tests

Bash test

# [ -v varname ] True if the shell variable varname is set (has been assigned a value); bash >= 4.2

# [ -z string ] True if the length of string is zero.

Copy with progress bar

- scp with progress bar

scp -v ~/local/file.tar.gz ubuntu@10.46.77.156:~ #scp shows a progress meter on a terminal by default; '-v' adds verbose debug output

rsync -r -v --progress -e ssh ~/local/file.tar.gz ubuntu@remote-server.exe:~

sending incremental file list

file.tar.gz

6,389,760 10% 102.69kB/s 0:08:40

- rsync and cp

rsync -aP #copy with progress; it can also be aliased

alias cp='rsync -aP'

cp -rv old-directory new-directory #with the alias above this shows a progress bar

- PV does not preserve permissions and does not handle attributes

pv ~/kali.iso | cat - > /media/usb/kali.iso #equals cp ~/kali.iso /media/usb/kali.iso

pv ~/kali.iso > /media/usb/kali.iso #equals cp ~/kali.iso /media/usb/kali.iso

pv access.log | gzip > access.log.gz #shows gzip compressing progress

PV can be thought of as the cat command piping '|' its output to another command while showing a progress bar and ETA. -c makes sure the output of one pv is not written over by another, -N creates a named stream. Find more at How to use PV pipe viewer to add progress bar to cp, tar, etc.

$ pv -cN source access.log | gzip | pv -cN gzip > access.log.gz

source: 760MB 0:00:15 [37.4MB/s] [=> ] 19% ETA 0:01:02

gzip: 34.5MB 0:00:15 [1.74MB/s] [ <=> ]

Copy files between remote systems quick

List SSH MACs, Ciphers, and KexAlgorithms

ssh -Q cipher; ssh -Q mac; ssh -Q kex

Rsync

Basic syntax of the rsync command and examples of copying over ssh.

rsync -options source destination

rsync -axvPW user@remote-srv:/remote/path/from /local/path/to

rsync -axvPWz user@remote-srv:/remote/path/from /local/path/to #compresses before transfer

# -a :archive mode, copies files recursively and also preserves symbolic links, file permissions, user & group ownerships and timestamps

# -x, --one-file-system :don't cross filesystem boundaries

# -P :same as --partial --progress, shows a progress bar and keeps partially transferred files

# -W, --whole-file :copy files whole (w/o delta-xfer algorithm)

# -z, --compress :compress file data during the transfer, consumes ~70% of CPU

# -r :copies data recursively (but doesn't preserve timestamps and permissions while transferring data)

# -h :human-readable, output numbers in a human-readable format

# -v :verbose

# --remove-source-files :removes source file once copied

Rsync over ssh

rsync -avPW -e ssh $SOURCE $USER@$REMOTE:$DEST

rsync -avPW -e ssh /local/path/from remote@server.com:/remote/path/to

# -e, --rsh=COMMAND :specify the remote shell to use

# -a, --archive :archive mode; equals -rlptgoD (no -H,-A,-X)

Tar over ssh

Copy from a local server (the data source) to a remote server. tar archives a folder, but instead of an archive name we pass "-", so the tar stream goes to STDOUT and through the pipe "|" to ssh, which extracts the tarball on the remote server.

tar -cf - /path/to/dir | ssh user@remote-srv-copy-to 'tar -xvf - -C /path/to/remotedir'

Copying from a local server (the data source) to a remote server as a single compressed .tar.gz file

tar czf - -C /path/to/source files-and-folders | ssh user@remote-srv-copy-to "cat - > /path/to/archive/backup.tar.gz"

Copying from a remote server to the local server (where you execute the command). This executes tar on the remote server, writes the archive to STDOUT ("-") and extracts it locally.

ssh user@remote-srv-copy-from "tar czpf - /path/to/data" | tar xzpf - -C /path/to/extract/data

# -c; -f --file; - :create a new archive; archive name; 'dash' means STDOUT (STDIN when extracting)

# -C, --directory=DIR :change to directory DIR, cd to the specified directory at the destination

# -x; -v; -f :extract; display files on the screen; archive name
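The streaming pattern above can be tried without a remote host. This is a minimal sketch, assuming a tar that accepts `-f -` and `-C` (GNU and BSD tar both do); the `src` and `dst` directories are throwaway names made up for illustration, and a plain local pipe stands in for the ssh hop.

```shell
# Throwaway source and destination directories (hypothetical names)
src=$(mktemp -d)
dst=$(mktemp -d)
mkdir -p "$src/conf"
echo "hello" > "$src/conf/app.cfg"

# The left tar writes the archive to STDOUT ("-f -"), the right tar reads it from STDIN.
# In the remote case the right-hand side would be wrapped in: ssh user@host '...'
tar -cf - -C "$src" . | tar -xf - -C "$dst"

copied=$(cat "$dst/conf/app.cfg")
echo "$copied"
```

Nothing is written to disk between the two tar processes, which is why the same pipe works unchanged across an ssh connection.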


Listing a directory in the form of a tree

$ tree ~

$ ls -R | grep ":$" | sed -e 's/:$//' -e 's/[^-][^\/]*\//--/g' -e 's/^/ /' -e 's/-/|/'

$ alias lst='ls -R | grep ":$" | sed -e '"'"'s/:$//'"'"' -e '"'"'s/[^-][^\/]*\//--/g'"'"' -e '"'"'s/^/ /'"'"' -e '"'"'s/-/|/'"'"

$ ls -R | grep ":$" | sed -e 's/:$//' -e 's/[^-][^\/]*\// /g' -e 's/^/ /' #using spaces, doesn't list .git

A directory statistics: size, files count and files types based on an extension

find . -type f | sed 's/.*\.//' | sort | uniq -c | sort -n | tail -20; echo "Total files: " | tr --delete '\n'; find . -type f | wc -l; echo "Total size: " | tr --delete '\n' ; du -sh

Constantly print tcp connections count in line

while true; do echo -n `ss -at | wc -l`" " ; sleep 3; done

Stop/start multiple services in a loop

cd /etc/init.d

for i in $(ls servicename-*); do service $i status; done

for i in $(ls servicename-*); do service $i restart; done

Linux processes

List all signals

kill -l

1) SIGHUP 2) SIGINT 3) SIGQUIT 4) SIGILL 5) SIGTRAP

6) SIGABRT 7) SIGBUS 8) SIGFPE 9) SIGKILL 10) SIGUSR1

11) SIGSEGV 12) SIGUSR2 13) SIGPIPE 14) SIGALRM 15) SIGTERM

16) SIGSTKFLT 17) SIGCHLD 18) SIGCONT 19) SIGSTOP 20) SIGTSTP

21) SIGTTIN 22) SIGTTOU 23) SIGURG 24) SIGXCPU 25) SIGXFSZ

26) SIGVTALRM 27) SIGPROF 28) SIGWINCH 29) SIGIO 30) SIGPWR

31) SIGSYS 34) SIGRTMIN 35) SIGRTMIN+1 36) SIGRTMIN+2 37) SIGRTMIN+3

38) SIGRTMIN+4 39) SIGRTMIN+5 40) SIGRTMIN+6 41) SIGRTMIN+7 42) SIGRTMIN+8

43) SIGRTMIN+9 44) SIGRTMIN+10 45) SIGRTMIN+11 46) SIGRTMIN+12 47) SIGRTMIN+13

48) SIGRTMIN+14 49) SIGRTMIN+15 50) SIGRTMAX-14 51) SIGRTMAX-13 52) SIGRTMAX-12

53) SIGRTMAX-11 54) SIGRTMAX-10 55) SIGRTMAX-9 56) SIGRTMAX-8 57) SIGRTMAX-7

58) SIGRTMAX-6 59) SIGRTMAX-5 60) SIGRTMAX-4 61) SIGRTMAX-3 62) SIGRTMAX-2

63) SIGRTMAX-1 64) SIGRTMAX

Unlock a user on the FTP server

pam_tally2 --user <uid> #this will show you the number of failed logins

pam_tally2 --user <uid> --reset #this will reset the count and let the user in

Tail log files

tail-f-the-output-of-dmesg or install multitail

tail -f /var/log/{messages,kernel,dmesg,syslog} #old school but not perfect

less +F /var/log/syslog #equivalent tail -f but allows for scrolling

watch 'dmesg | tail -50' # approved by man dmesg

watch 'sudo dmesg -c >> /tmp/dmesg.log; tail -n 40 /tmp/dmesg.log' #tested, but experimental

- less, multi-file monitoring: to see what's happening in the second file, first press Ctrl-c to go to normal mode, then type :n to go to the next buffer, and then F again to go back to the watching mode.

Colourize log files

- BAT, supports syntax highlighting for a large number of programming and markup languages

# Ubuntu <19.10

wget https://github.com/sharkdp/bat/releases/download/v0.12.1/bat_0.12.1_amd64.deb

sudo dpkg -i bat_0.12.1_amd64.deb

bat deployment.yaml -l yaml

# Ubuntu 19.10+, notice the command is 'batcat' due to collision with another command 'bat'

sudo apt install bat # Ubuntu Eoan 19.10 or Debian unstable sid

batcat deployment.yaml -l yaml

# Usage

bat kubernetes/application.yml

- CCZE, a fast log colorizer written in C, intended to be a drop-in replacement for colorize

sudo apt install ccze # log colorizer

# -c, --color KEY=COLOR :- set the color of the keyword KEY to COLOR, like one would do in one of the configuration files

# -A --raw-ansi :- ANSI instead of curses

# -m --mode :- mode curses, ansi, html

# -h --html :- instead of colorising the input onto the console, output it in HTML format

# -l :- list of plugins

- GRC, Generic Colouriser by default for these commands ping, traceroute, gcc, make, netstat, diff, last, ldap, and cvs

sudo apt install grc # frontend for generic colouriser grcat(1)

grc tail -f /var/log/apache/access.log /var/log/apache/error.log

Big log files

Clear a file content

Clearing logs in this location releases space on the / partition

cd /chroot/httpd/usr/local/apache2/logs

> mod_jk.log #zeroize the file

Clear a part of a file

You can use the time command to measure the time taken to execute a command

$ wc -l catalina.out #count lines

3156616 catalina.out

$ time split -d -l 1000000 catalina.out.tmp catalina.out.tmp- #split tmp file every 1000000th line, prefixing files with catalina.out.tmp-##; -d specifies a ## numeric sequence

$ time tail -n 300000 catalina.out > catalina.out.tmp #creates a copy of the file with 300k last lines

$ time cat catalina.out.tmp > catalina.out #clears and appends tmp file content to the current open file

A file held open by a process does not release free space back to the file system

When you delete a file you are in fact removing a directory entry that points to an inode. If a process still holds a file handle to it, the file system no longer shows the file but the space is not freed. One way to reclaim the space is to send a HUP signal to the process holding the file handle; many daemons reopen their files on HUP. This is how Unix works: while a process is still using a file, the system does not get rid of it.

kill -HUP <PID>

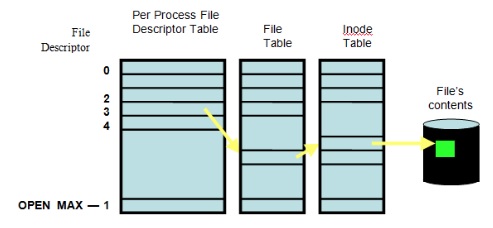

Diagram is showing File Descriptor (fd) table, File table and Inode table, finally pointing to a block device where data is stored.

- Search for deleted files that are still held open.

$ lsof | grep deleted

COMMAND PID TID USER FD TYPE DEVICE SIZE/OFF NODE NAME

mysqld 2230 mysql 4u REG 253,2 793825 /var/tmp/ibXwbu5H (deleted)

mysqld 2230 mysql 5u REG 253,2 793826 /var/tmp/ibfsqdZz (deleted)

$ lsof | grep DEL

COMMAND PID TID USER FD TYPE DEVICE SIZE/OFF NODE NAME

httpd 54290 apache DEL REG 0,4 32769 /SYSV011246e6

httpd 54290 apache DEL REG 0,4 262152 /SYSV0112b646
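The behaviour described in this section can be reproduced in a few lines. This is a minimal sketch, assuming a Linux or other Unix-like system; the temporary file and FD number 3 are arbitrary choices for illustration. The file name disappears after rm, yet the data stays readable through the open descriptor until it is closed.

```shell
tmp=$(mktemp)
echo "still here" > "$tmp"

exec 3<"$tmp"   # open FD 3 on the file for reading
rm "$tmp"       # remove the directory entry (the name)

if [ -e "$tmp" ]; then name_state="present"; else name_state="gone"; fi
held_content=$(cat <&3)   # the data is still reachable through the open FD

echo "name: $name_state, content via FD 3: $held_content"
exec 3<&-       # closing the last open FD lets the kernel free the blocks
```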

Timeout commands after certain time

Ping multiple hosts and terminate each ping after 2 seconds; helpful when a server is behind a firewall and no ICMP responses are returned

$ for srv in `cat nodes.txt`;do timeout 2 ping -c 1 $srv; done
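A script can tell a timed-out command from a failed one by its exit status. A small sketch, assuming GNU coreutils timeout, which reports status 124 when the time limit was hit; the sleep durations are arbitrary.

```shell
# sleep would run for 5 seconds, but timeout stops it after 1 second
status=0
timeout 1 sleep 5 || status=$?
echo "timed out command exit status: $status"

# a command that finishes in time keeps its own exit status
ok_status=0
timeout 5 true || ok_status=$?
echo "finished command exit status: $ok_status"
```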

Time manipulation

Replace unix timestamps in logs to human readable date format

user@laptop:/var/log$ tail dmesg | perl -pe 's/(\d+)/localtime($1)/e'

[ Thu Jan 1 01:00:29 1970.168088] b43-phy0: Radio hardware status changed to DISABLED

[ Thu Jan 1 01:00:29 1970.308597] tg3 0000:09:00.0: irq 44 for MSI/MSI-X

[ Thu Jan 1 01:00:29 1970.344378] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ Thu Jan 1 01:00:29 1970.344745] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

user@laptop:/var/log$ tail dmesg

[ 29.168088] b43-phy0: Radio hardware status changed to DISABLED

[ 29.308597] tg3 0000:09:00.0: irq 44 for MSI/MSI-X

[ 29.344378] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[ 29.344745] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

Convert seconds to human readable

sec=4717.728; eval "echo $(date -ud "@$sec" +'$((%s/3600/24))d:%-Hh:%Mm:%Ss')" # the '-' hyphen in %-H means do not pad

0d:1h:18m:37s
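The same conversion can be done with shell arithmetic only, avoiding both eval and the GNU-specific date flags. This is a sketch under the assumption that the input is a non-negative number of seconds with an optional fractional part, which is simply dropped; seconds_to_human is a name made up for this example.

```shell
# seconds_to_human is a hypothetical helper, not part of any package
seconds_to_human() {
  local total=${1%.*}   # drop the fractional part, e.g. 4717.728 -> 4717
  printf '%dd:%dh:%02dm:%02ds\n' \
    $((total / 86400)) \
    $((total % 86400 / 3600)) \
    $((total % 3600 / 60)) \
    $((total % 60))
}

seconds_to_human 4717.728   # prints 0d:1h:18m:37s
```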


tai64n Toward a Unified Timestamp with explicit precision

tai64n reads lines from stdin and prefixes with a timestamp in 12-byte TAI64N labels to stdout

sudo apt-get install daemontools

- tai64n

- puts a precise timestamp on each line

- tai64nlocal

- scans stdin for '@' followed by precise TAI64N timestamps and converts them to a human-readable format; the timestamp is converted to local time in ISO format: YYYY-MM-DD HH:MM:SS.SSSSSSSSS

- tai64n example

for i in {1..5}; do echo hello $i | tai64n; done

@400000005e36d56736b9e14c hello 1

@400000005e36d56737330cd4 hello 2

@400000005e36d56737bc5944 hello 3

@400000005e36d56738081fb4 hello 4

@400000005e36d567383e1c04 hello 5

- tai64nlocal example

for i in {1..5}; do echo hello $i | tai64n; done | tai64nlocal

2020-02-02 13:57:49.918151500 hello 1

2020-02-02 13:57:49.926092500 hello 2

2020-02-02 13:57:49.935090500 hello 3

2020-02-02 13:57:49.940056500 hello 4

2020-02-02 13:57:49.943594500 hello 5

- References

- What is a TAI64 time format? Stackoverflow

Time stamp with precision log() function

log() {

local msg="$1"

if [ -n "$msg" ]; then

level="${2:-INFO}"

printf "%s - %s - %s\n" "$(date +"%Y-%m-%dT%T.%3N")" "$level" "$msg" >&2

fi

}

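The .%3N (milliseconds) format is a GNU date extension, not a shell feature; POSIX does not specify it, so it may not work with non-GNU date implementations.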

Timestamp in the filename

echo $(date +"%Y%m%d-%H%M") #20180618-1136

echo $(date +"%Y%m%dT%H%M") #20180618T1136

echo -n $(date +"%Y%m%d-%H%M") # no new line

date +"%Y%m%d-%H%M"|tr -d "\n" # no new line

Examples of use

# Backup dir with all Kubernetes manifests

$> cp -r manifests_dir backup/dist-$(git rev-parse --abbrev-ref HEAD)-$(date +"%Y%m%dT%H%M")-$(git rev-parse HEAD | cut -c1-8)

# Kubernetes manifest tagging for multiple iteration comparison

$> cp application.yml application-$(git rev-parse --abbrev-ref HEAD)-$(date +"%Y%m%dT%H%M")-$(git rev-parse HEAD | cut -c1-8).yml

$> ls -l

# ___name____ _____branch____________ ___time______ _commit_

-rw-rw-r-- 1 piotr piotr 275158 Jan 23 12:23 application-IPN-1000_splitHeartbeat-20200123T1223-aaaabbbb.yml

# Terraform, note "!!:1" is bash history expansion that recalls the 1st argument of the previous command, so it will be the action, eg. apply

$> terraform apply -var-file=<FILENAME> | tee "!!:1"-$(git rev-parse --abbrev-ref HEAD)-$(date +"%Y%m%dT%H%M")-$(git rev-parse HEAD | cut -c1-8).log

$> ls -l

# action _________branch_______ _____time____ _commit_

-rw-rw-r-- 1 vagrant vagrant 29794 Jan 31 15:24 apply-IPN-1111_acmValidation-20200131T1524-aaaabbbb.log

Reference:

- shell-cmd-date-without-new-line-in-the-end Stackoverflow

Replace numbers into human readable units

numfmt is part of GNU coreutils, installed by default on Ubuntu, and converts numbers into human-readable units.

numfmt --to iec 1024 # -> 1.0K

numfmt --to iec 1024000 # -> 1000K

numfmt --to iec 1024000000 # -> 977M

# Convert numbers in column 5

kubectl get vpa -A --no-headers | tr -s " " | numfmt --to iec --field=5 --invalid=ignore | column -t

Sed - Stream Editor

Replace, substitute

sed -i 's/pattern/substituteWith/g' ./file.txt #substitutes 'pattern' with 'substituteWith' in each matching line

sed 's/A//g' #substitutes 'A' with an empty string; '/g' means globally, so it removes every 'A' from each line

##Type of operation

# s/ -substitute

##Flags and commands

# /p print the modified line; with -n only the changed lines are printed to the console

# /w substitute and write the modified lines into a file

$ sed -n "s/(name)/Mark/pw log2.txt" birthday.txt

# /i -case-insensitive search (GNU sed)

# /g -global replace, all matches on each line

# /2 -nth replace, replace 2nd occurrence in each line

sed "s/cha/foo/2" log.txt

# /pattern/d -delete any line matching a pattern

##Option flags

# -i editing in place

# -i.bak saves original file with .bak extension

# -n suppress the default output to STDOUT

sed 's/pattern/substituteWith/w matchingPattern.txt' ./file.txt

Substitute in every file found by grep (-l returns a list of matched files)

for i in `grep -iRl 'dummy is undefined or' *`;do sed -i 's/dummy is undefined or/dummy is defined and/' $i; sleep 1; done

- Matching lines

# /foo/ selects the lines matching the pattern, then the subsequent s/./*/g search and replace runs only on those lines

$ sed "/foo/s/./*/g" birthday.txt #replaces every character with '*' on any line containing 'foo'

Replace a string between XML tags. It will find String Pattern between <Tag> and </Tag> then it will substitute /s findstring with replacingstring globally /g within the Pattern String.

sed -i '/<Tag>/,/<\/Tag>/s/findstring/replacingstring/g' file.xml
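To see that the address range limits the substitution to the lines between the tags, the command can be run on inline sample data. A minimal sketch; the sample XML and the word "replaced" are made up for illustration, and sed reads from a pipe instead of editing a file in place.

```shell
xml_sample='<Name>findstring</Name>
<Tag>
findstring and findstring
</Tag>
<Other>findstring</Other>'

# Only lines from <Tag> up to </Tag> are touched; /g replaces every match on those lines
result=$(printf '%s\n' "$xml_sample" | sed '/<Tag>/,/<\/Tag>/s/findstring/replaced/g')
printf '%s\n' "$result"
```

The first and last lines keep their original text because they fall outside the range.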

Replace 1st occurrence

sed '0,/searchPattern/s/searchPattern/substituteWith/' ./file.txt #0,/searchPattern/ is a GNU sed address range that ends at the first matching line, so only the first occurrence in the file is replaced

Remove all lines until first match

sed -r -i -n -e '/Dynamic/,$p' resolv.conf #-r extended regex, -i in place, -n quiet (silent), so lines are not printed by default

#-e expression, /Dynamic/,$p prints from the first matching line to the end of the file

Regex search and remove (replace with empty string) all xml tags.

sed 's/<[^>]*>//g' ./file.txt # [] -regex character class, [^>] -negated, matches any character that is not >; /g removes every tag on a line, not only the first

References

Sed manual Official GNU

Awk - language for processing text files

Remove duplicate keys from known_hosts file

awk '!seen[$0]++' ~/.ssh/known_hosts > /tmp/known_hosts; mv -f /tmp/known_hosts ~/.ssh/
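The `!seen[$0]++` idiom prints a line only the first time it appears: the counter for that line is 0 (false) on first sight, the negation makes it true, and the post-increment marks it as seen. A small sketch on made-up host names rather than a real known_hosts file:

```shell
# The first occurrence of each line is kept and the input order is preserved
deduped=$(printf '%s\n' host-a host-b host-a host-c host-b | awk '!seen[$0]++')
printf '%s\n' "$deduped"
```

Unlike sort -u, this keeps the lines in their original order.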

Useful packages

- ARandR Screen Layout Editor - 0.1.7.1

Manage users

- Interactive

sudo adduser piotr

- Scripting

sudo useradd -m piotr # -m creates $HOME directory if it does not exist

sudo useradd -m piotr -s /bin/bash -d /sftp/piotr # -s define default shell

# -d define $HOME directory

User groups

Add user to a group

In Ubuntu, adding a user to the admin group grants root privileges through sudo. Adding them to the sudo group allows them to execute any command with sudo.

sudo usermod -a -G nameofgroup nameofuser #requires to login again

In RedHat/CentOS add a user to the group 'wheel' to grant them sudo access

sudo usermod -a -G wheel <nameofuser> #requires to login again

# -a add

# -G list of supplementary groups

# -g primary group

groups <nameofuser> # list all groups, 1st is a primary-group

Change user primary group and other groups

To assign a primary group to an user

sudo usermod -g primarygroupname username

To assign secondary groups to a user (-a keeps already existing secondary groups intact otherwise they'll be removed)

sudo usermod -a -G secondarygroupname username

From man-page:

...

-g (primary group assigned to the users)

-G (Other groups the user belongs to), supplementary groups

-a (Add the user to the supplementary group(s))

...

Modify group

Rename a group name -n

sudo groupmod -n <new-group-name> <old-group>

Show USB devices

lsusb -t #shows USB tree

Copy and Paste in terminal

In the Linux X graphical interface this works differently than in Windows; you can read more in X Selections, Cut Buffers, and Kill Rings. When you select some text it becomes the Primary selection (not the Clipboard selection), and the Primary selection can then be pasted using the middle mouse button. Note, however, that if you close the application offering the selection, in your case the terminal, the selection is essentially "lost".

Option 1 works in X

- select text to copy then use your mouse middle button or press a wheel

Option 2 works in Gnome Terminal and a webterminal

Ctrl+Shift+c or Ctrl+Insert - copy

Ctrl+Shift+v or Shift+Insert - paste

Option 3 Install Parcellite GTK+ clipboard manager

sudo apt-get install parcellite

then in the settings check "use primary" and "synchronize clipboards"

Generate random password

cat /dev/urandom|tr -dc "a-zA-Z0-9"|fold -w 48|head -n1

openssl rand -base64 24

pwgen 32 1 #pwgen <length> <num_of_passwords>

xargs - running command once per item in array

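-p - print all commands and prompt for confirmation

-t - print commands while executing

declare -a ARRAY=(item1 item2 item3)

printf '%s\0' "${ARRAY[@]}" | xargs -0 -t -I {} bash "${CWD}/{}" # -0 expects NUL-separated input, hence printf '%s\0'; xargs cannot run the '.' (source) builtin, so each file is executed with bash instead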

# Insert args in the middle

dig wp.pl +short | xargs -I% nc -zv % 443

# | -I replace-str - replace occurrences of replace-str in the initial-arguments with names read from standard input
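The -I replacement can be tried safely by putting echo in front of the real command. A small sketch with made-up input items; echo stands in for a command such as nc, so nothing is actually contacted.

```shell
# Each input line replaces every % in the argument list; echo only prints the result
result=$(printf '%s\n' alpha beta | xargs -I % echo "checking % on port 443")
printf '%s\n' "$result"
```

With -I, xargs runs the command once per input line, which is why two lines are printed.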

Localization - Change a keyboard layout

setxkbmap gb

at, atd - schedule a job

at can execute a command at a given time. It's important to remember 'at' can only take one line and by default it uses the limited /bin/sh shell.

service atd status # check if the at daemon is running

at 01:05 AM # schedule a job, this will drop into the at> prompt, use Ctrl+d to exit

atq # list job queue

at -c <job_number> # cat the job_number

atrm <job_number> # deletes job

mail # at command emails a user who scheduled a job with its output

whoami, who and w

# 'whoami' - print effective userid

vagrant@ubuntu-bionic ~ $ whoami

vagrant

vagrant@ubuntu-bionic ~ $ sudo su -

root@ubuntu-bionic:~$ whoami # print effective userid

root

# 'who' - show all users who have a login-tty, shows who is logged on; even if 'su' has been used it will print the logged on userid

# who = whoami(userid) + login-tty

root@ubuntu-bionic:~$ who

vagrant pts/0 2020-02-02 19:02 (10.0.2.2)

# 'who' vs 'who am i'

root@ubuntu-bionic:~$ who # shows all logged-in sessions, including multiple terminal logins of the same user

vagrant pts/0 2020-02-02 19:02 (10.0.2.2)

vagrant pts/1 2020-02-02 19:13 (10.0.2.2)

root@ubuntu-bionic:~$ who am i # shows only the current terminal session

vagrant pts/0 2020-02-02 19:02 (10.0.2.2)

## 'who arg1 arg2' - 'who' followed by any 2 parameters prints the current login-tty user, no matter what the params are

# 'w' - show who is logged on and what they are doing

# w = who(logged on sessions) + what they are doing

root@ubuntu-bionic:~$ w

19:19:17 up 45 min, 2 users, load average: 0.02, 0.02, 0.00

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

vagrant pts/0 10.0.2.2 19:02 0.00s 0.24s 0.00s sshd: vagrant [priv]

vagrant pts/1 10.0.2.2 19:13 5:50 0.08s 0.08s -bash

which and whereis

which pwd #returns binary path only

whereis pwd #returns binary path and paths to man pages

Linux Shell

- wiki.bash-hackers.org

- Reference Cards tldp.org/LDP

- How to handle white spaces Explains white spacing, eval and how to deal with building complex commands in scripts

Syntax checker

A common tool is ShellCheck. Below is a reference on how to install the stable version on Ubuntu

sudo apt install xz-utils #pre req

export scversion="stable" # or "v0.4.7", or "latest"

wget "https://storage.googleapis.com/shellcheck/shellcheck-${scversion}.linux.x86_64.tar.xz"

tar --xz -xvf shellcheck-"${scversion}".linux.x86_64.tar.xz

cp shellcheck-"${scversion}"/shellcheck /usr/bin/

shellcheck --version

Special variables

$_ The last argument of the previous command.

$- Current shell option flags (as set with set).

$? Exit status of the last executed command, function, or the script itself.

$$ The process ID of the current shell. For shell scripts, this is the process ID under which they are executing.

$! PID (process ID) of the last job run in the background.

$0 The filename of the current script.

$# Count of arguments supplied to a script.

$@ All the arguments; when double quoted, each argument expands to a separate word. If a script receives two arguments, "$@" is equivalent to "$1" "$2".

$* All the arguments; when double quoted, they expand to a single word. If a script receives two arguments, "$*" is equivalent to "$1 $2".

- Note on $* and $@

$* and $@ when unquoted are identical and expand into the arguments.

"$*" is a single word, comprising all the arguments to the shell, joined together with spaces. For example '1 2' 3 becomes "1 2 3".

"$@" is identical to the arguments received by the shell, the resulting list of words completely match what was given to the shell. For example '1 2' 3 becomes "1 2" "3"

Example

#!/bin/bash

function print_args() {

echo "-- Unquoted, asterisk --" ; for i in $* ;do echo $i; done

echo "-- Quoted, asterisk --" ; for i in "$*" ;do echo $i; done

echo "-- Unquoted, at sign --"; for i in $@ ;do echo $i; done

echo "-- Quoted, at sign --"; for i in "$@" ;do echo $i; done

}

print_args "a" "b c" "d e f"
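Counting the resulting words makes the difference easier to see than reading the loop output. This is a minimal sketch assuming the default IFS; count_args and compare_expansions are helper names made up for this example.

```shell
# count_args is a hypothetical helper: it prints how many arguments it received
count_args() { echo "$#"; }

compare_expansions() {
  unquoted_star=$(count_args $*)    # word splitting applies to every argument
  quoted_star=$(count_args "$*")    # everything joined into a single word
  unquoted_at=$(count_args $@)      # same as unquoted $*
  quoted_at=$(count_args "$@")      # original argument boundaries preserved
  echo "\$*=$unquoted_star \"\$*\"=$quoted_star \$@=$unquoted_at \"\$@\"=$quoted_at"
}

compare_expansions "a" "b c" "d e f"
```

Only "$@" reports 3, the number of arguments actually passed, which is why it is the usual choice when forwarding arguments to another command.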

Special Bash variables

PROMPT_COMMAND :- run a shell command (value of this variable) every time your prompt is displayed

HISTTIMEFORMAT :- once this variable is set, new history entries record the time along with the command; eg. HISTTIMEFORMAT='%d/%m/%y %T '

SHLVL :- tracks how deeply nested you are in the bash shell, useful in scripts where you're not sure whether you should exit

LINENO :- reports the line number currently executing in a script or function (in an interactive session it roughly counts the commands run so far). This is most often used in debugging scripts. By inserting lines like: echo DEBUG:$LINENO you can quickly determine where in the script you are (or are not) getting to.

REPLY :- holds the line read by read when no variable name is given, so read; echo $REPLY gives the same result as read INPUT; echo $INPUT

TMOUT :- if nothing is typed in for the number of seconds this is set to, then the shell will exit

Resources

- TLDP Internal Variables

dirname, basename, $0

Option 1

#!/bin/bash

PRG=$0 #relative path with program name

BASENAME=$(basename $0) #strip directory and suffix from filenames, here it's own script name

DIRNAME=$(dirname $0) #strip last component from file name, returns relative path to the script

printf "PRG=\$0 --> computed full path: $PRG\n"

printf "BASENAME=\$(basename \$0) --> computed name of script: $BASENAME\n"

printf "DIRNAME=\$(dirname \$0) --> computed dir of script: $DIRNAME\n"

# Path from sourced file, this is bash only so won't work in containers /bin/sh

source $(dirname "${BASH_SOURCE[0]}")/../../env

Option 2

CWD="$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )"

CWD="$(dirname $(realpath $0))"

Option 3 TLK and Expect

Shebang

The shebang line has never been specified as part of POSIX, SUS, LSB or any other specification. AFAIK, it hasn't even been properly documented. There is a rough consensus about what it does: take everything between the ! and the \n and exec it. The assumption is that everything between the ! and the \n is a full absolute path to the interpreter. There is no consensus about what happens if it contains whitespace.

1. Some operating systems simply treat the entire thing as the path. After all, in most operating systems, whitespace or dashes are legal in a path.

2. Some operating systems split at whitespace and treat the first part as the path to the interpreter and the rest as individual arguments.

3. Some operating systems split at the first whitespace and treat the front part as the path to the interpreter and the rest as a single argument (which is what you are seeing).

4. Some even don't support shebang lines at all.

Thankfully, 1. and 4. seem to have died out, but 3. is pretty widespread, so you simply cannot rely on being able to pass more than one argument.

And since the location of commands is also not specified in POSIX or SUS, you generally use up that single argument by passing the executable's name to env so that it can determine the executable's location; e.g.:

#!/usr/bin/env gawk

This still assumes a particular path for env, but there are only very few systems where it lives in /bin, so this is generally safe. The location of env is a lot more standardized than the location of gawk or even worse something like python or ruby or spidermonkey. Which means that you cannot actually use any arguments at all.

Starting with coreutils 8.30 you can use

#!/usr/bin/env -S command arg1 arg2 ...

Bash shell good practices

- #!/usr/bin/env bash is more portable than #!/bin/bash

- set -x, set -o xtrace - trace what gets executed

- set -u, set -o nounset - exit a script if you try to use an uninitialised variable

- set -e, set -o errexit - make your script exit when a command fails, then add || true to commands that you allow to fail

- set -o pipefail - by default bash takes the exit status of the last item in a pipeline, which may not be what you want. For example, false | true will be considered to have succeeded. If you would like this to fail, then you can use set -o pipefail to make it fail.

Unofficial bash strict mode

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

set -euo pipefail # one liner, equivalent to the three lines above

Explain:

- set -e - immediately exit if any command has a non-zero exit status

- set -u - affects variables. When set, a reference to any variable you haven't previously defined - with the exceptions of $* and $@ - is an error

- set -o pipefail - if any command in a pipeline fails, that return code will be used as the return code of the whole pipeline. By default, the pipeline's return code is that of the last command.
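The effect of each option can be observed by running tiny scripts in a child bash and inspecting the result. A minimal sketch, assuming bash is on the PATH; the variable names are made up for illustration.

```shell
# Without pipefail the pipeline status is that of the last command (true -> 0)
without_pipefail=$(bash -c 'false | true; echo $?')

# With pipefail the failing 'false' decides the pipeline status (1)
with_pipefail=$(bash -c 'set -o pipefail; false | true; echo $?')

# errexit: the script stops at 'false', so the echo never runs
errexit_output=$(bash -c 'set -e; false; echo "not reached"' || true)

# nounset: referencing an undefined variable aborts the script with a non-zero status
nounset_status=0
bash -c 'set -u; echo "$some_undefined_name"' 2>/dev/null || nounset_status=$?

echo "pipefail off: $without_pipefail, on: $with_pipefail"
echo "errexit output: '$errexit_output', nounset status: $nounset_status"
```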

Script path - execute a script not in current working directory

When executing a script that is not in the current working directory you may want the script to do the following:

- Change CWD to the script directory. Because the script runs in a sub-shell, your own CWD does not change after the script exits.

# Option 1

SCRIPT_PATH="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"

cd "$SCRIPT_PATH" || exit 1

# Option 2 - from K8s repo tree

SCRIPT_ROOT=$(dirname ${BASH_SOURCE})/..

# Option 3

DIR="$(dirname $(realpath $0))" # this works also if you reference symlinks and will return hardfile path

# Option 4 - magic variables for current file & dir

__dir="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"

__file="${__dir}/$(basename "${BASH_SOURCE[0]}")"

__base="$(basename ${__file} .sh)"

__root="$(cd "$(dirname "${__dir}")" && pwd)" # <-- change this as it depends on your app

arg1="${1:-}"

Testing frameworks

- Bash Infinity standard library and a boilerplate framework for writing tools using bash

file descriptors

File descriptors are numbers, also referred to as numbered streams; the first three FDs are reserved by the system:

0 - STDIN, 1 - STDOUT, 2 - STDERR

echo "Enter a file name to read: "; read FILE

exec 5<>"$FILE" #open/assign a file for '>' writing and reading '<'

while read -r SUPERHERO; do #-r do not treat backslashes as escape characters

echo "Superhero Name: $SUPERHERO"

done <&5 #'&' describes that '5' is a FD, reads the file and redirects into while loop

echo "File Was Read On: `date`" >&5 #write date to the file

exec 5>&- #close '-' FD and close out all connections to it
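A non-interactive variant of the same idea, kept short so it can be pasted into a terminal. This is a sketch using a throwaway file from mktemp; FD numbers 3 and 4 are arbitrary choices above the reserved 0-2.

```shell
log=$(mktemp)

exec 3>"$log"             # open FD 3 for writing
echo "first line" >&3     # both writes go through the same open descriptor
echo "second line" >&3
exec 3>&-                 # close FD 3

exec 4<"$log"             # open FD 4 for reading
read -r line_one <&4      # each read continues where the previous one stopped
read -r line_two <&4
exec 4<&-                 # close FD 4

echo "$line_one / $line_two"
rm -f "$log"
```

Because the descriptor keeps its position between commands, the second read returns the second line instead of starting over.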

Remove empty lines

cat blanks.txt | awk NF # [1]

cat blanks.txt | grep -v '^[[:blank:]]*$' # [2]

grep -e "[[:blank:]]" blanks

- [1] The command awk NF is shorthand for awk 'NF != 0', or 'print those lines where the number of fields is not zero'.

- [2] The POSIX character class [:blank:] includes the space and tab characters. The asterisk (*) after the class means 'any number of occurrences of that expression'. The initial caret (^) means that the character class begins the line. The dollar sign ($) means that any number of occurrences of the character class also finish the line, with no additional characters in between.
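Both filters can be checked against inline sample data that contains an empty line and a line made only of spaces and a tab. A small sketch; the sample text is made up for illustration.

```shell
# Four lines: text, empty, whitespace-only (spaces and a tab), text
sample=$(printf 'alpha\n\n \t \nbeta\n')

with_awk=$(printf '%s\n' "$sample" | awk NF)
with_grep=$(printf '%s\n' "$sample" | grep -v '^[[:blank:]]*$')

printf '%s\n' "$with_awk"
```

Both commands drop the empty line as well as the whitespace-only line, so the two results are identical.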


Here-doc | heredoc - cat redirection to create a file

Example of how to use heredoc and redirect STDOUT to create a file.

- Standard

cat >file.txt <<EOF

wp.pl

bbc.co.uk

EOF

The redirection can also follow the delimiter: cat << EOF > file.txt

- Literal

$ cat >file <<'EOF'

echo "$ABC=home_dir"

EOF

If you don't want ANY of the doc to be expanded or any special characters interpreted, quote the label with single quotes.

- Remove white-spaces

cat >file.txt <<-EOF

wp.pl

bbc.co.uk

EOF

Change the here-doc operator to <<- to strip leading tab characters from each line.

Explanation

- <<EOF indicates everything following is to be treated as input until the string EOF is reached.

- the cat command is used to print out a block of text

- >file.txt redirects the output from cat to the file
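The difference between the standard and the literal form shows up as soon as the text contains a variable. A minimal sketch that captures the here-doc output in variables instead of writing files; the variable name is made up for illustration.

```shell
name=world

# Unquoted delimiter: $name is expanded inside the here-doc
expanded=$(cat <<EOF
hello $name
EOF
)

# Quoted delimiter: the text is taken literally, nothing is expanded
literal=$(cat <<'EOF'
hello $name
EOF
)

printf '%s\n' "$expanded" "$literal"
```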

echo | print on-screen

Printing the value of a variable. The \\n may be omitted if a trailing newline is not desired. The quotation marks are essential.

printf %s\\n "$var"

echo "$var" # is NOT a valid substitute, NEVER use echo like this: echo may treat the value as an option or interpret backslash escapes

Expansions - string manipulation

- parameter-substitution tldp.org/LDP

- Using "${a:-b}" for variable assignment in scripts unix.stackexchange.com

String manipulation | parameter substitution

# Strip the longest match ## from the beginning(hashbang) of the string matching pattern(`* `). It matches any number of characters and a <space> .

host="box-1.acme.cloud 22"; echo "${host##* }" # -> 22 # pattern `* `

host="https://google.com" ; echo "${host##*/}" # -> google.com # pattern `*/`

# Strip the longest match %% from end(percentage) of the string, matching pattern `<space>` and `*` anything (fileglob )

host="box-1.acme.cloud 22"; echo "${host%% *}" # -> box-1.acme.cloud # pattern ` *`

host="api-uat-1" ; echo "${host%%-*}" # -> api # pattern `-*`

# Strip the longest match (%%) from the end of the string. `[0-9]*` is a glob, not a regex: it matches one digit followed by any characters

vpc=dev02; echo "${vpc%%[0-9]*}" # -> dev # pattern `[0-9]*`

# Strip the shortest match (%) of '.aws.acme.*' from the end of the string; the pattern is a file glob, not a regex

host="box-1.prod1.midw.prod20.aws.acme.cloud"; echo "${host%.aws.acme.*}" # -> box-1.prod1.midw.prod20
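The four strip operators are easiest to compare on a single value. A minimal sketch, assuming a made-up path; nothing here comes from a real system.

```shell
#!/bin/bash
path="/var/log/nginx/access.log.gz" # hypothetical path, only for this demo

base="${path##*/}"  # longest match of */ from the front  -> access.log.gz
rest="${path#*/}"   # shortest match of */ from the front -> var/log/nginx/access.log.gz
stem="${path%%.*}"  # longest match of .* from the end    -> /var/log/nginx/access
no_gz="${path%.*}"  # shortest match of .* from the end   -> /var/log/nginx/access.log

printf '%s\n' "$base" "$rest" "$stem" "$no_gz"
```

A single # or % removes as little as possible, the doubled form removes as much as possible.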

Default value and parameter substitution

PASSWORD="${1:-data123}" #assign $1 arg if exists and is not zero length, otherwise expand to default value "data123" string

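: "${PASSWORD:=data123}" #if PASSWORD is unset or empty, assign "data123" to it; := cannot assign to positional parameters such as $1

PASSWORD="${var:=$DEFAULT}" #as a side effect var itself is set to $DEFAULT when it was unset or empty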
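The difference between :- (expand only) and := (expand and assign) shows up when the variable is inspected afterwards. A small sketch with a hypothetical variable named region:

```shell
#!/bin/bash
unset region # hypothetical variable, only for this demo

picked="${region:-eu-west-1}" # expands to the default, region stays unset
state="${region-still unset}" # proves that :- did not assign anything

: "${region:=eu-west-2}"      # := assigns the default to region itself

printf '%s\n' "$picked" "$state" "$region"
# -> eu-west-1
# -> still unset
# -> eu-west-2
```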

Search and Replace

Expansion operators:

/ - substitutes 1st match

// - substitutes all matches

# Example 1. Replace all '_' with '/' which is a common way turning a string into a path

STRING=secret_loc-a_branch1_branch2_key1

echo "path: ${STRING//_//}"

path: secret/loc-a/branch1/branch2/key1

# Example 2. Replace 1st match '-' with '_'

STRING=gke-dev

echo ${STRING/-/_} # -> gke_dev

tr - translate

Translates, squeezes or deletes characters read from STDIN and writes the result to STDOUT. Sets can be literal characters or character classes, eg:

echo "Who is the standard text editor?" | tr '[:lower:]' '[:upper:]'
echo 'ed, of course!' | tr -d aeiou #-d deletes characters from a string
echo 'The ed utility is the standard text editor.' | tr -s astu ' ' #translates a, s, t, u into spaces and squeezes repeated spaces into one
echo 'extra tabs - 2' | tr -s '[:blank:]' #squeeze repeated white spaces
tr -d '\r' < dosfile.txt > unixfile.txt #remove carriage returns <CR>

sort

# sort TSV by second column
sort -t$'\t' -k2
# -t$'\t' tab as a column/key delimiter, note single quotes
# to insert literal TAB do Ctrl+v, then TAB

Skip 1st line to preserve a header.

ps aux | (sed -u 1q; sort) | column -s, -t
# -u - unbuffered
# 1q - print first line (header) and quit (leaving the rest of the input to sort)

The -u switch (unbuffered) is required for those seds (notably, GNU's) that would otherwise read the input in chunks, thereby consuming data that you want to go through sort instead.

Words of the form $'string' are treated specially. The word expands to string, with backslash-escaped characters replaced as specified by the ANSI C standard.

uniq

This command filters out adjacent repeated lines, so the input usually needs to be sorted first.

cat file | uniq -u # print only lines that have no identical neighbour (adjacent duplicates are dropped entirely)
cat file | sort | uniq -u # display only lines that occur exactly once
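Because uniq only compares neighbouring lines, the result depends on whether the input is sorted. A minimal sketch on a made-up four-line input:

```shell
#!/bin/bash
input=$'b\na\na\nb' # hypothetical unsorted input, one word per line

adjacent=$(printf '%s\n' "$input" | uniq)               # collapses the adjacent a's
once_unsorted=$(printf '%s\n' "$input" | uniq -u)       # drops the repeated a's, keeps both b's
deduped=$(printf '%s\n' "$input" | sort | uniq)         # one copy of every line
once_sorted=$(printf '%s\n' "$input" | sort | uniq -u)  # empty: every line occurs twice

# Newlines are replaced by spaces only to print each result on one line
printf '[%s]\n' "${adjacent//$'\n'/ }"      # -> [b a b]
printf '[%s]\n' "${once_unsorted//$'\n'/ }" # -> [b b]
printf '[%s]\n' "${deduped//$'\n'/ }"       # -> [a b]
printf '[%s]\n' "${once_sorted//$'\n'/ }"   # -> []
```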

Continue

https://www.ibm.com/developerworks/aix/library/au-unixtext/index.html Next colrm

paste

Paste can join 2 files side by side to provide a horizontal concatenation.

paste -d$';' -s
# -d delimiter to be used in the output instead of the default TAB
# -s serialisation: joins all lines of each input file into a single line, replacing the newlines with the delimiter

Functions

Syntax:

- the standard Bourne shell /bin/sh uses the function name followed immediately by a pair of parentheses () and curly brackets {} around the code itself
- in bash and ksh the function name can be preceded by the keyword function, eg function myFunc. The function keyword is optional unless the function name is also an alias. If the function keyword is used, parentheses are then optional.

Parameters are passed to functions in the same manner as to scripts using $1, $2, $3 positional parameters and using them inside the function. $@ returns the array of all positional parameters $1, $2, etc.

funcLastLoginInDays () {

echo "$USERNAME - logged in $1 days ago"

return 147

}

#eg. of calling a function with a parameter

funcLastLoginInDays 7; echo $? #the last command shows the function's numeric return code, here 147

Execution scope

Functions can be declared to run in the current shell using curly braces to scope the body.

my_func () {

# Executes within the current environment

}

Whereas functions declared using parentheses run in a new subshell.

my_func () (
# Executes within a new subshell
)
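The practical consequence is that assignments made in a parenthesised body never reach the caller. A short sketch; the function and variable names are hypothetical:

```shell
#!/bin/bash
counter=0 # hypothetical global used by both functions

bump_here () { counter=$((counter + 1)); }   # braces: runs in the current shell
bump_copy () ( counter=$((counter + 100)) )  # parentheses: runs in a subshell

bump_here
bump_copy
echo "$counter" # -> 1, the change made by bump_copy was lost with its subshell
```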

Forward declaration

Functions need to be defined before they are used. This is commonly achieved using the following pattern:

main() { foo; } #main function code, includes other functions. Visually it's at the top of a script

foo () { echo "Step_1"; } #other functions block

main "$@" #execute main() function

Private functions

Functions that are private to a script should be named with a __ prefix and unset at the end of the script.

__my_func () {

}

unset -f __my_func

Bash variable scoping

- Environment variable - defined in ENV of a shell

- Script Global Scope variable - defined within a script

- Function local variable - defined within a function and will become available/defined in Global Scope only after the function has been called

Variables global to shell and all subshells

Name the variable in upper case and using underscores.

export MY_VARIABLE

Variables local within a shell script

Variables declared with the local keyword are local to the declaring function and all functions called from that function.

child_function () {

echo "$foo"

local foo=2

echo "$foo"

}

main () {

local foo=1

echo "$foo"

child_function

echo "$foo"

}

main

#### Actual output

# 1

# 1

# 2

# 1

Variables global within a shell script

Two mechanisms can be used to prevent variables declared within a script from leaking into the calling shell environment.

- unset each variable at the end of the script

g_variable=something

...

unset g_variable

- Declare each variable from within a subshell scoped main

# Scope to subshell

main () (

# Global to current subshell

g_variable=something

)

unset all environment variables starting with a leading string

- unset ${!prefix@} - expands to the names of variables whose names begin with prefix [...] When @ is used and the expansion appears within double quotes, each variable name expands to a separate word
- unset $(compgen -v prefix) - expands to all variables matching the prefix
- unset $(env | grep string | awk -F'=' '{print $1}') - grep selects the environment entries that contain string, awk extracts the variable names, and the names are passed to the unset command
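The first form can be tried on a couple of throwaway variables. This sketch assumes bash and uses a made-up prefix demo_:

```shell
#!/bin/bash
demo_host=box-1; demo_port=22 # hypothetical variables sharing the prefix demo_
other=kept

unset "${!demo_@}" # expands to: unset demo_host demo_port

echo "${demo_host-gone} ${demo_port-gone} $other" # -> gone gone kept
```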

Environment scoping

Querying variables and functions in scope

# List all functions declared within the current environment
compgen -A function
# List all variables declared within the current environment
compgen -v

getopt(s) - argument parameters parsers

getopt - a command line utility provided by the util-linux package

#TODO example

getopts - Bash builtin, does not support long options, uses the $OPTARG variable for options that have arguments

# parse parameters into the script

while getopts ":u:h" OPTIONS; do

case "${OPTIONS}" in

u) URL=${OPTARG} ;;

h) HELP="true"

esac

done

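# : - the leading colon enables silent error reporting: getopts prints no messages itself, OPTIONS is set to '?' for an unknown option or to ':' for a missing argument, and OPTARG holds the offending option character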

# u: - parameter u requires argument value to be passed on because ':' follows 'u'

# h - parameter h does not require a value to be passed on
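With the leading colon in place the script has to report errors itself. The sketch below wraps the same option string in a hypothetical parse_args function so that it can be called more than once; the function name, the messages and the example URL are assumptions made for the demo.

```shell
#!/bin/bash
# parse_args is a hypothetical helper, not part of getopts
parse_args () {
  local OPTIND=1 opt # reset the parser so the function can be called repeatedly
  URL=""; HELP="false"
  while getopts ":u:h" opt; do
    case "$opt" in
      u) URL=$OPTARG ;;
      h) HELP="true" ;;
      :) echo "option -$OPTARG requires a value" >&2; return 1 ;;
      \?) echo "unknown option -$OPTARG" >&2; return 1 ;;
    esac
  done
}

parse_args -u https://example.com -h
echo "$URL $HELP" # -> https://example.com true
```

Calling parse_args -u without a value, or with an option that is not listed, takes the ':' or '?' branch and returns 1.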


set options

set -o noclobber # prevents > from overwriting a file that already exists
set +o noclobber # turns clobbering back on
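A quick way to see noclobber in action is to redirect into a temporary file that already exists. A minimal sketch; the variable names are made up:

```shell
#!/bin/bash
target=$(mktemp) # temporary file that already exists
echo original > "$target"

set -o noclobber
if { echo replaced > "$target"; } 2>/dev/null; then
  result="overwritten"
else
  result="refused" # the redirection fails and the file is left untouched
fi
echo forced >| "$target" # >| overrides noclobber for a single redirection
set +o noclobber

content=$(cat "$target")
rm -f "$target"
echo "$result: $content" # -> refused: forced
```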

short version of checking command line parameters

Checking 3 positional parameters. Run with: ./check one. If $3 is unset, the script prints the usage message and exits with a non-zero status.

#!/bin/bash

: ${3?"Usage: $0 ARGUMENT1 ARGUMENT2 ARGUMENT3"}

IFS and delimiting

$IFS (Internal Field Separator) is a shell variable defining the characters used for word splitting, by default space, tab and newline. It can be changed at any time in the global scope or within a subshell by assigning a new value, eg:

printf '%q\n' "$IFS" # displays the current delimiters; a plain echo $IFS shows only blank output
IFS=',' #changes the delimiter to ',' (comma); note there is no leading $ in an assignment
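Often the delimiter only needs to change for a single command, which avoids having to restore it afterwards. A sketch with a made-up comma-separated record:

```shell
#!/bin/bash
record="alice,bob,carol" # hypothetical comma-separated record
before=$IFS

# The assignment prefix changes IFS only for this one read command
IFS=',' read -r first second third <<< "$record"

echo "$first | $second | $third" # -> alice | bob | carol
[ "$IFS" = "$before" ] && echo "global IFS unchanged"
```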

Counters

These counters do not require initialisation: arithmetic evaluation treats an unset variable as 0, so the first increment yields 1.

#!/bin/bash
COUNTER=0 #defined only to satisfy the while test; otherwise error: [: -lt: unary operator expected
while [ $COUNTER -lt 5 ]; do
  (( COUNTER++ )) # alternatives: COUNTER=$((COUNTER+1)) or let COUNTER++
  echo $COUNTER
done

Loops

Bash shell - for i loop

for i in {1..10}; do echo $i; done

for i in {1..10..2}; do echo $i; done #step function {start..end..increment} will print 1 3 5 7 9

for i in 1 2 3 4 5 6 7 8 9 10; do echo $i; done

for i in $(seq 1 10); do echo $i; done

for ((i=1; i <= 10 ; i++)); do echo $i; done

for host in $(cat hosts.txt); do ssh "$host" "$command" >"output.$host"; done

# hosts.txt
a01.prod.com
a02.prod.com

for host in $(cat hosts.txt); do ssh "$host" 'hostname; grep Certificate /etc/httpd/conf.d/*; echo -e "\n"'; done

Dash shell - for i loop

#!/bin/dash
wso2List="esb dss am mb das greg" #by default white space separates items in the list structures
for i in $wso2List; do
  echo "Product: $i"
done

Bash shell - while loop

while true; do tail /etc/passwd; sleep 2; clear; done

Read a file

FILE="hostlist.txt" #a list of hostnames, 1 host per line
# optional: IFS= -r
while IFS= read -r LINE; do
  (( COUNTER++ ))
  echo "Line: $COUNTER - Host_name: $LINE"
done < "$FILE" #redirect the file content into loop

Read a variable

PLACES='Warsaw
London'
while read LINE; do
  (( COUNTER++ ))
  echo "Line: $COUNTER - Line_content: $LINE"
done <<< "$PLACES" #inject multi-line string variable, ref. heredoc

- -r option passed to the read command prevents backslash escapes from being interpreted
- IFS= before the read command prevents leading/trailing whitespace from being trimmed

Loop while the counter expression is true

while [ $COUNT -le $DISPLAYNUMBER ]; do

echo "Hello World - $COUNT"

COUNT="$(expr $COUNT + 1)"

done

Arrays

Simple array

hostlist=("am-mgr-1" "am-wkr-1" "am-wkr-2" "esb-mgr-1") #indexed array, indices start at 0

for INDEX in "${!hostlist[@]}"; do #${!hostlist[@]} expands to every index of the array

printf '%s\n' "${hostlist[INDEX]}" #"${hostlist[@]}" would expand to every element instead

done

arr=() # Create an empty array
arr=(1 2 3) # Initialize array
${arr[2]} # Retrieve third element
${arr[@]} # Retrieve all elements
${!arr[@]} # Retrieve array indices
${#arr[@]} # Calculate array size
arr[0]=3 # Overwrite 1st element
arr+=(4) # Append value(s)
str=$(ls) # Save ls output as a string
arr=( $(ls) ) # Save ls output as an array of files
${arr[@]:s:n} # Retrieve n elements starting at index s
echo ${array[@]/*[aA]*/} # Remove strings[items] that contain 'a' or 'A'
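Several of these operations combined on one small array; the values are hypothetical and the sketch assumes bash:

```shell
#!/bin/bash
arr=(alpha beta gamma) # hypothetical values
arr+=(delta)           # append
arr[0]=ALPHA           # overwrite 1st element

echo "${#arr[@]}"    # -> 4
echo "${!arr[@]}"    # -> 0 1 2 3
echo "${arr[@]:1:2}" # -> beta gamma
echo "${arr[0]}"     # -> ALPHA
```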

Display array all items

#!/bin/bash

array=($(cat -))

echo "${array[@]}" # display array without '\n' because STDIN is line by line

# Test

for i in {1..5}; do echo $i; done | ./script.sh

Display specific indexes

echo "${array[@]:3:5}" # up to 5 elements starting at index 3

Associative arrays, key=value pairs

#!/bin/bash

ec2type () { #function declaration

declare -A array=( #associative array declaration, bash 4

["c1.medium"]="1.7 GiB,2 vCPUs" #double quotation is required

["c1.xlarge"]="7.0 GiB,8 vCPUs"

["c3.2xlarge"]="15.0 GiB,8 vCPUs"

)

echo -e "${array[$1]:-NA,NA}" #lookups for a value $1 (eg. c1.medium) in the array,

} #if nothing found (:-) expands to default "NA,NA" string

ec2type $1 #invoke the function passing 1st argument received from a command line

### Usage

$ ./ec2types.sh c1.medium

1.7 GiB,2 vCPUs

Convert a string into array, remember to leave the variable unquoted. This will fail if $string has globbing characters in. It can be avoided by set -f && array=($string) && set +f. The array items will be delimited by a space character.

array=($string)
read -a array <<< $string
IFS=' ' read -a array <<< "$string" #avoid fileglob expansions for *,? and [], variable is double-quoted

if statement

if [ "$VALUE" -eq 1 ] || [ "$VALUE" -eq 5 ] && [ "$VALUE" -gt 4 ]; then
  echo "Hello True"
elif [ "$VALUE" -eq 7 ] 2>/dev/null; then
  echo "Hello 7"
else
  echo "False"
fi

case statement

case $MENUCHOICE in

1)

echo "Good choice!"

;; #ends this clause; no further patterns are tested

2)

echo "Better choice"

;;

*)

echo "Help: wrong choice";;

esac

traps

First argument is a command to execute, followed by events to be trapped

trap 'echo "Press Q to exit"' SIGINT SIGTERM

trap 'funcMyExit' EXIT #run a function on script exit

trap "{ rm -f $LOCKFILE; }" EXIT #delete a file on exit

#!/bin/bash

trap ctrl_c INT # trap ctrl-c and call ctrl_c()

function ctrl_c() {

echo "** Trapped CTRL-C **"

}
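A common use of an EXIT trap is removing a scratch or lock file however the script ends. The sketch below runs the trapped code in a subshell so that the effect can be observed from the outside; workfile is a made-up name standing in for $LOCKFILE.

```shell
#!/bin/bash
workfile=$(mktemp) # stands in for a lock file or scratch file

(
  # Single quotes delay the expansion of $workfile until the trap fires
  trap 'rm -f "$workfile"' EXIT
  echo "working" > "$workfile"
  exit 3 # the EXIT trap runs regardless of the exit status
)
status=$?

[ -e "$workfile" ] || echo "cleaned up, subshell exited with $status" # -> cleaned up, subshell exited with 3
```

With double quotes, as in the rm -f $LOCKFILE example, the variable is expanded once when the trap is defined, so it must already hold its final value at that point.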

debug mode

You can enable and disable debugging mode anywhere in your script, as many times as needed

set -x #starts debug
echo "Command to debug"
set +x #stops debug

Or debug the whole script in a new shell by running it with the -x option, eg.

$ bash -x ./script.sh

Additionally you can disable globbing with -f

- Execute a script line by line

set -x
trap read debug
< YOUR CODE HERE >

- Execute a script line by line (improved version)

If your script is reading content from files, the above listed will not work. A workaround could look like the following example.

#!/usr/bin/env bash
echo "Press CTRL+C to proceed."
trap "pkill -f 'sleep 1h'" INT
trap "set +x ; sleep 1h ; set -x" DEBUG
< YOUR CODE HERE >

Install Bash debugger

apt-get install bashdb
bashdb your_command.sh #type step, and then hit carriage return after that


errors

syntax error: unexpected end of file

This often can be caused by CRLF line terminators. Just run dos2unix script.sh.

Another thing to check:

- terminate bodies of single-line functions with semicolon

I.e. this innocent-looking snippet will cause the same error:

die () { test -n "$@" && echo "$@"; exit 1 }

To make the dumb parser happy:

die () { test -n "$@" && echo "$@"; exit 1; }

# Applies also to below, lack of last ; will produce the error

[[ "$#" == 1 ]] && [[ "$arg" == [1,2,3,4] ]] && printf "%s\n" "blah" || { printf "%s\n" "blahblah"; usage; }

if [ -f ~/.git-completion.bash ]; then . ~/.git-completion.bash; fi;

Create a big file

Use dd tool that will create a file of size count*bs bytes

- 1024 bytes * 1024 count = 1048576 bytes = 1 MiB

- 1024 bytes * 10240 count = 10 MiB

- 1024 bytes * 102400 count = 100 MiB

- 1024 bytes * 1024000 count = 1000 MiB (approx. 1 GB)

dd if=/dev/zero bs=1024 count=10240 of=/tmp/10mb.zero #creates 10MB zero'd file
dd if=/dev/urandom bs=1048576 count=100 of=/tmp/100mb.bin #creates 100MB random file
dd if=/dev/urandom bs=1048576 count=1000 of=1G.bin status=progress

dd in GNU Coreutils 8.24+ (Ubuntu 16.04 and newer) got a new status option to display the progress:

dd if=/dev/zero bs=1048576 count=1000 of=1G.bin status=progress
1004535808 bytes (1.0 GB, 958 MiB) copied, 6.01483 s, 167 MB/s
1000+0 records in
1000+0 records out
1048576000 bytes (1.0 GB, 1000 MiB) copied, 15.4451 s, 67.9 MB/s

Colours

# Option 1

echo_b(){ echo -e "\e[1;34m${@}\e[0m"; }

echo_g(){ echo -e "\e[1;32m${@}\e[0m"; }

echo_p(){ echo -e "\e[1;35m${@}\e[0m"; }

echo_r(){ echo -e "\e[1;31m${@}\e[0m"; }

echo_b " -h Print this message"

# Option 2

red="\e[31m"

red_bold="\e[1;31m" # '1' makes it bold

blue_bold="\e[1;34m"

green_bold="\e[1;32m"

light_yellow="\e[93m"

reset="\e[0m"

echo -e "${green_bold}Hello world!${reset}"

# If you wish to unset, add the common prefix to above variables eg 'c_', then:

unset ${!c_@}

# Option 3

red=$'\e[1;31m' # '1' makes it bold

grn=$'\e[1;32m'

yel=$'\e[1;33m'

blu=$'\e[1;34m'

mag=$'\e[1;35m'

cyn=$'\e[1;36m'

end=$'\e[0m'

printf "%s\n" "Text in ${red}red${end}, white and ${blu}blue${end}."

Colours function using a terminal settings rather than ANSI color control sequences

if test -t 1; then # if terminal

ncolors=$(which tput > /dev/null && tput colors) # supports color

if test -n "$ncolors" && test $ncolors -ge 8; then

termcols=$(tput cols)

bold="$( tput bold)"

underline="$(tput smul)"

standout="$( tput smso)"

normal="$( tput sgr0)" # reset to default font

black="$( tput setaf 0)"

red="$( tput setaf 1)"

green="$( tput setaf 2)"

yellow="$( tput setaf 3)"

blue="$( tput setaf 4)"

magenta="$( tput setaf 5)"

cyan="$( tput setaf 6)"

white="$( tput setaf 7)"

fi

fi

Usage is the same as with ANSI color control sequences: ${colorName}Text${normal}

print_bold() {

title="$1"

text="$2"

echo "${red}================================${normal}"

echo -e " ${bold}${yellow}${title}${normal}"

echo -en " ${text}"

echo "${red}================================${normal}"

}

References

- Bash colours ANSI/VT100 Control sequences

Run scripts from website

A script available via http/s should, as usual, begin with a shebang. It can be executed on the fly using cURL or wget, without downloading it to the file system first.

curl -sL https://raw.githubusercontent.com/pio2pio/project/master/setup_sh | sudo -E bash -
wget -qO- https://raw.githubusercontent.com/pio2pio/project/master/setup_sh | sudo -E bash -

Run local scripts remotely

Use the -s option, which forces bash (or any POSIX-compatible shell) to read its command from standard input, rather than from a file named by the first positional argument. All arguments are treated as parameters to the script instead. If you wish to use option parameters like eg. --silent true make sure you put -- before arg so it is interpreted as an argument to test.sh instead of bash.

ssh user@remote-addr 'bash -s arg' < test.sh
ssh user@remote-addr bash -sx -- -p am -c /tmp/conf < ./support/scripts/sanitizer.sh
# -- signals the end of options and disables further option processing.
# any arguments after the -- are treated as filenames and arguments
# -p am -c /tmp/conf - these are arguments passed onto the sanitizer.sh script


Scripts - solutions

Check connectivity

#!/bin/bash

red="\e[31m"; green="\e[32m"; blue="\e[34m"; light_yellow="\e[93m"; reset="\e[0m"

if [ "$1" = "onprem" ]; then

echo -e "${blue}Connectivity test to servers: on-prem${reset}"

declare -a hosts=(

wp.pl

rzeczpospolita.pl

)

else

echo -e "${blue}Connectivity test to Polish news servers: polish-news${reset}"

declare -a hosts=(

wp.pl

rzeczpospolita.pl

)

fi

#################################################

echo -e "From server: ${blue}$(hostname -f) $(hostname -I)${reset}"

for ((i = 0; i < ${#hosts[@]}; ++i)); do

RET=$(timeout 3 nc -z ${hosts[$i]} 22 2>&1) \

&& echo -e "[OK] $i ${hosts[$i]}" \

|| echo -e "${red}[ER] $i ${hosts[$i]}\t[ERROR: \

$(if [ $? -eq 124 ]; then echo "timeout maybe filtered traffic"; else \

if [ -z "$RET" ]; then echo "unknown"; else echo -e "$RET"; fi; fi)]${reset}"

sleep 0.3

done

# Working as well

#for host in "${hosts[@]}"; do

# RET=$(timeout 3 nc -z ${host} 22 2>&1) && echo -e "[OK] ${host}" || echo -e "${red}[ER] ${host}\t[ERROR: \

# $(if [ $? -eq 124 ]; then echo "timeout maybe filtered traffic"; else \

# if [ -z "$RET" ]; then echo "unknown"; else echo -e "$RET"; fi; fi)]${reset}"

# sleep 0.3

#done

Local Ubuntu mirror

rsync -a --progress rsync://archive.ubuntu.com/ubuntu /opt/mirror/ubuntu # command to create local mirror
ls /opt/mirror/ubuntu # shows all files

Bash

Delete key gives ~ ? Add the following line to your $HOME/.inputrc (might not work if added to /etc/inputrc )

"\e[3~": delete-char

Process substitution

Process substitution makes the output of a command available as a file. Depending on the OS, a /.../fd descriptor or a temporary file is used to hold the content. The syntax works without naming any file, so it looks simpler. It also covers situations where a command requires a file argument and a | pipe cannot be used.

source <(kubectl completion bash)

The example sources a file, but syntactically there is no file when using the process substitution construction.
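A case where a pipe is not enough is a command that takes two file arguments. The sketch below feeds comm from two process substitutions instead of temporary files; the input lists are made up and bash is assumed.

```shell
#!/bin/bash
# comm expects two sorted files; each <( ) supplies one without touching the disk
common=$(comm -12 <(printf '%s\n' a b c) <(printf '%s\n' b c d))

# Newlines are replaced by spaces only to print the result on one line
printf '%s\n' "${common//$'\n'/ }" # -> b c
```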

References

- BashPitfalls awesome!