Terraform

Revision as of 16:07, 2 February 2020

This article is about utilising Terraform, a tool from HashiCorp, to build infrastructure as code: spinning up AWS instances and setting up security groups, VPCs and any other cloud-based infrastructure components.

Install terraform

wget https://releases.hashicorp.com/terraform/0.11.11/terraform_0.11.11_linux_amd64.zip

unzip terraform_0.11.11_linux_amd64.zip

sudo mv ./terraform /usr/local/bin

Manage versions of Terraform

You can use the tfenv project to install and switch between Terraform versions.

tfenv install 0.11.11

tfenv use 0.11.11
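tfenv only selects which binary runs. As a complementary safeguard, the configuration itself can pin the version it expects with required_version. Running it with a different Terraform binary then fails with an error instead of proceeding. This is a minimal sketch, assuming 0.11.11 is the version chosen with tfenv:

```hcl
# Terraform refuses to run this configuration with any version other than
# 0.11.11, matching the version selected with "tfenv use 0.11.11".
terraform {
  required_version = "= 0.11.11"
}
```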

Basic configuration

When Terraform runs, it loads every .tf file in the directory it is run from, which is where the configuration is stored. The lookup is limited to that one flat directory: Terraform never descends into subdirectories or leaves that directory. Therefore, if you want to use a common file, create a symbolic link to it inside the directory that holds your .tf files.

$ vi example.tf

provider "aws" {

access_key = "AK01234567890OGD6WGA"

secret_key = "N8012345678905acCY6XIc1bYjsvvlXHUXMaxOzN"

region = "eu-west-1"

}

resource "aws_instance" "webserver" {

ami = "ami-405f7226"

instance_type = "t2.nano"

}
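Hard-coding keys as above is convenient for a first test, but the keys then end up wherever the .tf file goes. This minimal variant assumes the keys are exported as the standard AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables, or stored in ~/.aws/credentials. It simply leaves them out, and the AWS provider then looks the credentials up itself:

```hcl
# No access_key/secret_key here: the AWS provider falls back to the
# AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY environment variables or the
# shared credentials file.
provider "aws" {
  region = "eu-west-1"
}

resource "aws_instance" "webserver" {
  ami           = "ami-405f7226"
  instance_type = "t2.nano"
}
```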

Since version 0.10.x, major changes and features have been introduced, including splitting the providers out of the main binary. Each provider is now a separate plugin binary, downloaded by terraform init. See the example below for the Azure provider and other providers developed by HashiCorp.

Azure

Terraform credentials

export ARM_SUBSCRIPTION_ID="YOUR_SUBSCRIPTION_ID"

export ARM_TENANT_ID="TENANT_ID"

export ARM_CLIENT_ID="CLIENT_ID"

export ARM_CLIENT_SECRET="CLIENT_SECRET"

export TF_VAR_client_id=${ARM_CLIENT_ID}

export TF_VAR_client_secret=${ARM_CLIENT_SECRET}
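Terraform reads any environment variable named TF_VAR_<name> as the value of the input variable <name>. The two TF_VAR_ exports above therefore only take effect if the configuration declares matching variables. A minimal sketch of those declarations:

```hcl
# Populated from TF_VAR_client_id and TF_VAR_client_secret respectively.
variable "client_id" {}

variable "client_secret" {}
```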

Example of how to source the credentials from Vault:

export VAULT_CLIENT_ADDR=http://10.1.1.1:8200

export VAULT_TOKEN=11111111-1111-1111-1111-1111111111111

vault read -format=json -address=$VAULT_CLIENT_ADDR secret/azure/subscription | jq -r '.data | .subscription_id, .tenant_id'

vault read -format=json -address=$VAULT_CLIENT_ADDR secret/azure/${application} | jq -r '.data | .client_id, .client_secret'

Terraform providers, modules and backend config

$ vi providers.tf

provider "azurerm" {

version = "1.10.0"

subscription_id = "${var.subscription_id}"

tenant_id = "${var.tenant_id}"

client_id = "${var.client_id}"

client_secret = "${var.client_secret}"

}

# HashiCorp special providers https://github.com/terraform-providers

provider "template" { version = "1.0.0" }

provider "external" { version = "1.0.0" }

provider "local" { version = "1.1.0" }

terraform {

backend "local" {}

}

AWS

Note that the s3 backend block (inside the terraform block) and the aws provider block each need their own AWS credentials. The backend cannot read credentials from the provider block, or vice versa. Setting AWS environment variables solves this, but makes it harder to assume a role.

vi provider.tf

## Local state ---------------------------------

# terraform {


# required_version = "= 0.11.11"

# backend "local" {}

# }

## Remote S3 state -----------------------------

terraform {

required_version = "= 0.11.11"

backend "s3" {

# backend settings cannot contain variables or interpolations, so literal values are used here

bucket = "tfstate-acmedev01-acmedev-111111111111" # must exist beforehand

key = "aws/acmedev01/state"

region = "us-east-1"

profile = "use1-infra-agent-build-ftr"

# assume role in target account with correct permissions to s3 bucket

role_arn = "arn:aws:iam::222222222222:role/CrossAccount_Terraform"

}

}

# ---------------------------------------------

provider "aws" {

profile = "CrossAccount_Terraform"

# shared_credentials_file = "/home/piotr/.aws/credentials"

assume_role {

role_arn = "arn:aws:iam::222222222222:role/CrossAccount_Terraform" # assume role in target account

}

region = "us-east-1"

allowed_account_ids = [ "111111111111", "222222222222" ]

}

provider "template" {

version = "~> 1.0.0"

}
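Backend blocks cannot contain variables or interpolations. For that reason, per-environment values such as the bucket name are often kept out of the .tf files entirely: you leave an empty backend "s3" {} block (partial configuration) and pass the settings at init time with terraform init -backend-config=backend.hcl. Below is a sketch of such a file. The file name backend.hcl is an assumption, and the values are examples:

```hcl
# backend.hcl (hypothetical file name), used with:
#   terraform init -backend-config=backend.hcl
# Each line sets one argument of the empty backend "s3" {} block.
bucket   = "tfstate-acmedev01-acmedev-111111111111" # must exist beforehand
key      = "aws/acmedev01/state"
region   = "us-east-1"
profile  = "use1-infra-agent-build-ftr"
role_arn = "arn:aws:iam::222222222222:role/CrossAccount_Terraform"
```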

Plan / apply

Meaning of markings in a plan output

The symbols in a plan output mean the following:

+ create

- destroy

-/+ replace (destroy and then create, or vice versa if create_before_destroy is used)

~ update in-place

<= applies only to data resources. You won't see this one often, because whenever possible Terraform does reads during the refresh phase. You will see it, though, if you have a data resource whose configuration depends on something that Terraform doesn't know yet, such as an attribute of a resource that isn't yet created. In that case, Terraform has to wait until apply time to find out the final configuration before doing the read.

Plan and apply

When apply runs for the first time, it creates terraform.tfstate once all changes are done. This file should not be modified manually. Terraform uses it to record what already exists in the cloud. The next time apply runs, Terraform compares the configuration against the state and executes only the necessary changes.

$ terraform plan

Refreshing Terraform state in-memory prior to plan... The refreshed state will be used to calculate this plan, but will not be persisted to local or remote state storage.

<...>

+ aws_instance.webserver

ami: "ami-405f7226"

associate_public_ip_address: "<computed>"

availability_zone: "<computed>"

ebs_block_device.#: "<computed>"

ephemeral_block_device.#: "<computed>"

instance_state: "<computed>"

instance_type: "t2.nano"

ipv6_addresses.#: "<computed>"

key_name: "<computed>"

network_interface_id: "<computed>"

placement_group: "<computed>"

private_dns: "<computed>"

private_ip: "<computed>"

public_dns: "<computed>"

public_ip: "<computed>"

root_block_device.#: "<computed>"

security_groups.#: "<computed>"

source_dest_check: "true"

subnet_id: "<computed>"

tenancy: "<computed>"

vpc_security_group_ids.#: "<computed>"

$ terraform apply

aws_instance.webserver: Creating...

ami: "" => "ami-405f7226"

associate_public_ip_address: "" => "<computed>"

availability_zone: "" => "<computed>"

ebs_block_device.#: "" => "<computed>"

ephemeral_block_device.#: "" => "<computed>"

instance_state: "" => "<computed>"

instance_type: "" => "t2.nano"

ipv6_addresses.#: "" => "<computed>"

key_name: "" => "<computed>"

network_interface_id: "" => "<computed>"

placement_group: "" => "<computed>"

private_dns: "" => "<computed>"

private_ip: "" => "<computed>"

public_dns: "" => "<computed>"

public_ip: "" => "<computed>"

root_block_device.#: "" => "<computed>"

security_groups.#: "" => "<computed>"

source_dest_check: "" => "true"

subnet_id: "" => "<computed>"

tenancy: "" => "<computed>"

vpc_security_group_ids.#: "" => "<computed>"

aws_instance.webserver: Still creating... (10s elapsed)

aws_instance.webserver: Creation complete (ID: i-0eb33af34b94d1a78)

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

The state of your infrastructure has been saved to the path below. This state is required to modify and destroy your infrastructure, so keep it safe. To inspect the complete state use the `terraform show` command.

State path:

Show

$ terraform show

aws_instance.webserver:

id = i-0eb33af34b94d1a78

ami = ami-405f7226

associate_public_ip_address = true

availability_zone = eu-west-1c

disable_api_termination = false

(...)

source_dest_check = true

subnet_id = subnet-92a4bbf6

tags.% = 0

tenancy = default

vpc_security_group_ids.# = 1

vpc_security_group_ids.1039819662 = sg-5201fb2b

$ terraform destroy

Do you really want to destroy? Terraform will delete all your managed infrastructure. There is no undo. Only 'yes' will be accepted to confirm.

Enter a value: yes

aws_instance.webserver: Refreshing state... (ID: i-0eb33af34b94d1a78)

aws_instance.webserver: Destroying... (ID: i-0eb33af34b94d1a78)

aws_instance.webserver: Still destroying... (ID: i-0eb33af34b94d1a78, 10s elapsed)

aws_instance.webserver: Still destroying... (ID: i-0eb33af34b94d1a78, 20s elapsed)

aws_instance.webserver: Still destroying... (ID: i-0eb33af34b94d1a78, 30s elapsed)

aws_instance.webserver: Destruction complete

Destroy complete! Resources: 1 destroyed.

After the instance has been terminated, terraform.tfstate looks like this:

vi terraform.tfstate

{

"version": 3,

"terraform_version": "0.9.1",

"serial": 1,

"lineage": "c22ccad7-ff26-4b8a-bf19-819477b45202",

"modules": [

{

"path": [

"root"

],

"outputs": {},

"resources": {},

"depends_on": []

}

]

}

AWS credentials profiles and variable files

Instead of referencing the secret access keys directly in a .tf file, we can use the AWS shared credentials file. Terraform looks up the profile named by the profile variable that we declare in variables.tf. Note: values in the credentials file are not enclosed in double quotes.

$ vi ~/.aws/credentials

# AWS credentials file with named profiles; the profile name goes in square brackets

[terraform-profile1]

aws_access_key_id = AAAAAAAAAAA

aws_secret_access_key = BBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBB

We can now remove the secret access keys from the main .tf file (example.tf) and configure the provider as follows:

vi provider.tf

terraform {

required_version = "= 0.11.11"

backend "s3" {} # partial configuration: the s3 details are passed in at terraform init time

}

provider "aws" {

version = "~> 1.57"

region = "${var.region}"

# Static credentials - provided directly; no longer needed once a profile is used

# access_key = "AAAAAAAAAAA"

# secret_key = "BBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBB"

# Shared credentials file - $HOME/.aws/credentials

profile = "${var.profile}" # profile name in the credentials file, e.g. terraform-profile1 (acc 111111111111)

# shared_credentials_file = "/home/user1/.aws/credentials" # if different from the default

# If specified, assume a role in another account using the user credentials

# defined in the profile above

# assume_role {

# role_arn = "${var.aws_xaccount_role}" # variable version

# role_arn = "arn:aws:iam::222222222222:role/CrossAccountSignin_Terraform"

# }

# allowed_account_ids = [ "111111111111", "222222222222" ]

}

provider "template" {

version = "~> 1.0.0"

}

Then create a variables file that declares the variables referenced above:

$ vi variables.tf

variable "region" {

default = "eu-west-1"

}

variable "profile" {} # a variable without a default value prompts for its value, which here should be 'terraform-profile1'

Run terraform

$ terraform plan -var 'profile=terraform-profile1' # this way the value can be set on the command line

$ terraform plan -destroy -input=false

Example

Prerequisites are:

- ~/.aws/credentials file exists

- variables.tf exists, with the content below:

If you remove a default value, you will be prompted for it.

$ vi variables.tf

variable "region" { default = "eu-west-1" }

variable "profile" {

description = "Provide AWS credentials profile you want to use, saved in ~/.aws/credentials file"

default = "terraform-profile" }

variable "key_name" {

description = <<DESCRIPTION

Provide the name of the SSH private key file; ~/.ssh will be searched

This is the key associated with the IAM user in AWS. Example: id_rsa

DESCRIPTION

default = "id_rsa" }

variable "public_key_path" {

description = <<DESCRIPTION

Path to the SSH public keys for authentication. This key will be injected

into all ec2 instances created by Terraform.

Example: ~/.ssh/terraform.pub

DESCRIPTION

default = "~/.ssh/id_rsa.pub" }

Terraform .tf file

$ vi example.tf

provider "aws" {

region = "${var.region}"

profile = "${var.profile}"

}

resource "aws_vpc" "vpc" {

cidr_block = "10.0.0.0/16"

}

# Create an internet gateway to give our subnet access to the open internet

resource "aws_internet_gateway" "internet-gateway" {

vpc_id = "${aws_vpc.vpc.id}"

}

# Give the VPC internet access on its main route table

resource "aws_route" "internet_access" {

route_table_id = "${aws_vpc.vpc.main_route_table_id}"

destination_cidr_block = "0.0.0.0/0"

gateway_id = "${aws_internet_gateway.internet-gateway.id}"

}

# Create a subnet to launch our instances into

resource "aws_subnet" "default" {

vpc_id = "${aws_vpc.vpc.id}"

cidr_block = "10.0.1.0/24"

map_public_ip_on_launch = true

tags = {

Name = "Public"

}

}

# Our default security group to access

# instances over SSH and HTTP

resource "aws_security_group" "default" {

name = "terraform_securitygroup"

description = "Used for public instances"

vpc_id = "${aws_vpc.vpc.id}"

# SSH access from anywhere

ingress {

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

# HTTP access from the VPC

ingress {

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = ["10.0.0.0/16"]

}

# outbound internet access

egress {

from_port = 0

to_port = 0

protocol = "-1" # all protocols

cidr_blocks = ["0.0.0.0/0"]

}

}

resource "aws_key_pair" "auth" {

key_name = "${var.key_name}"

public_key = "${file(var.public_key_path)}"

}

resource "aws_instance" "webserver" {

ami = "ami-405f7226"

instance_type = "t2.nano"

key_name = "${aws_key_pair.auth.id}"

vpc_security_group_ids = ["${aws_security_group.default.id}"]

# We're going to launch into the public subnet for this.

# Normally, in production environments, webservers would be in

# private subnets.

subnet_id = "${aws_subnet.default.id}"

# The connection block tells our provisioner how to

# communicate with the instance

connection {

user = "ubuntu"

}

# We run a remote provisioner on the instance after creating it

# to install Nginx. By default, this should be on port 80

provisioner "remote-exec" {

inline = [

"sudo apt-get -y update",

"sudo apt-get -y install nginx",

"sudo service nginx start"

]

}

}
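An output can print the instance's public IP, which helps you find the instance after terraform apply, for example to SSH into it as the ubuntu user. Here is a sketch in the same 0.11 interpolation syntax; the output name webserver_public_ip is an assumption:

```hcl
# Printed at the end of "terraform apply" and available later via
# "terraform output webserver_public_ip".
output "webserver_public_ip" {
  value = "${aws_instance.webserver.public_ip}"
}
```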

Run a plan

$ terraform plan

var.key_name

Name of the AWS key pair

Enter a value: id_rsa #name of the key_pair

var.profile

AWS credentials profile you want to use

Enter a value: terraform-profile #aws profile in ~/.aws/credentials file

var.public_key_path

Path to the SSH public keys for authentication.

Example: ~./ssh/terraform.pub

Enter a value: ~/.ssh/id_rsa.pub #path to the matching public key

Refreshing Terraform state in-memory prior to plan...

The refreshed state will be used to calculate this plan, but will not be

persisted to local or remote state storage.

The Terraform execution plan has been generated and is shown below.

Resources are shown in alphabetical order for quick scanning. Green resources

will be created (or destroyed and then created if an existing resource

exists), yellow resources are being changed in-place, and red resources

will be destroyed. Cyan entries are data sources to be read.

+ aws_instance.webserver

ami: "ami-405f7226"

associate_public_ip_address: "<computed>"

availability_zone: "<computed>"

ebs_block_device.#: "<computed>"

ephemeral_block_device.#: "<computed>"

instance_state: "<computed>"

instance_type: "t2.nano"

ipv6_addresses.#: "<computed>"

key_name: "${aws_key_pair.auth.id}"

network_interface_id: "<computed>"

placement_group: "<computed>"

private_dns: "<computed>"

private_ip: "<computed>"

public_dns: "<computed>"

public_ip: "<computed>"

root_block_device.#: "<computed>"

security_groups.#: "<computed>"

source_dest_check: "true"

subnet_id: "${aws_subnet.default.id}"

tenancy: "<computed>"

vpc_security_group_ids.#: "<computed>"

+ aws_internet_gateway.internet-gateway

vpc_id: "${aws_vpc.vpc.id}"

+ aws_key_pair.auth

fingerprint: "<computed>"

key_name: "id_rsa"

public_key: "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDfc piotr@ubuntu"

<...omitted...>

Plan: 7 to add, 0 to change, 0 to destroy.

Plan a single target

$ terraform plan -target=aws_ami_from_instance.golden

Terraform apply

$ terraform apply

$ terraform show # preview the current state

aws_instance.webserver:

id = i-09c1c665cef284235

ami = ami-405f7226

<...>

aws_security_group.default:

id = sg-b14bb1c8

description = Used for public instances

egress.# = 1

<...>

aws_subnet.default:

id = subnet-6f4f510b

<...>

aws_vpc.vpc:

id = vpc-9ba0b7ff

<...>

Apply a single resource using -target <resource>

$> terraform apply -target=aws_ami_from_instance.golden

Debug using terraform console

This command provides an interactive command-line console for evaluating and experimenting with expressions. This is useful for testing interpolations before using them in configurations, and for interacting with any values currently saved in state. Terraform console will read configured state even if it is remote.

$> terraform console # optionally -state=path; here a local tfstate is available, but this could be remote state

> var.vpc_cidr # <- new syntax

10.123.0.0/16

> "${var.vpc_cidr}" # <- old syntax

10.123.0.0/16

> aws_security_group.tf_public_sg.id # interpolate from state

sg-04d51b5ae10e6f0b0

> help

The Terraform console allows you to experiment with Terraform interpolations.

You may access resources in the state (if you have one) just as you would

from a configuration. For example: "aws_instance.foo.id" would evaluate

to the ID of "aws_instance.foo" if it exists in your state.

Type in the interpolation to test and hit <enter> to see the result.

To exit the console, type "exit" and hit <enter>, or use Control-C or

Control-D.

Example

$ echo "aws_iam_user.notif.arn" | terraform console

arn:aws:iam::123456789:user/notif

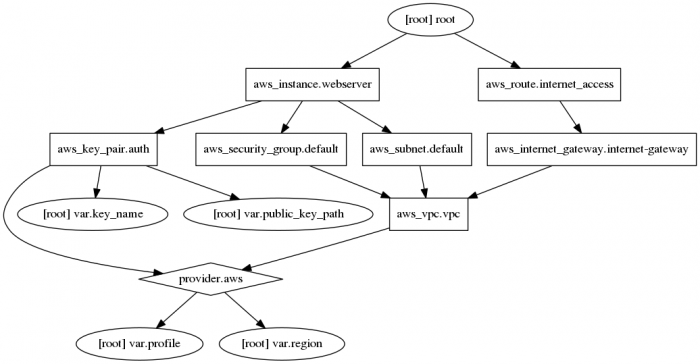

Visualise configuration

Render the configuration's dependency graph to an image. The dot command comes from Graphviz; if it is not on your system, install it with sudo apt-get install graphviz.

$ terraform graph | dot -Tpng > example.png

Terraform destroy

Run the destroy command to delete all resources that were created:

$ terraform destroy

aws_key_pair.auth: Refreshing state... (ID: id_rsa)

aws_vpc.vpc: Refreshing state... (ID: vpc-9ba0b7ff)

<...>

Destroy complete! Resources: 7 destroyed.

Destroy a single resource

$ terraform show

$ terraform destroy -target=aws_ami_from_instance.golden

Remote state

Enable

Create an S3 bucket with a unique name, enable versioning on it and choose a region.

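Then configure Terraform. Note that terraform remote config, shown below, is the pre-0.9 syntax. Terraform 0.9 removed it in favour of backend configuration, which is set up with terraform init: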

$ terraform remote config \

-backend=s3 \

-backend-config="bucket=YOUR_BUCKET_NAME" \

-backend-config="key=terraform.tfstate" \

-backend-config="region=YOUR_BUCKET_REGION" \

-backend-config="encrypt=true"

Remote configuration updated

Remote state configured and pulled.

After running this command, you should see your Terraform state show up in that S3 bucket.

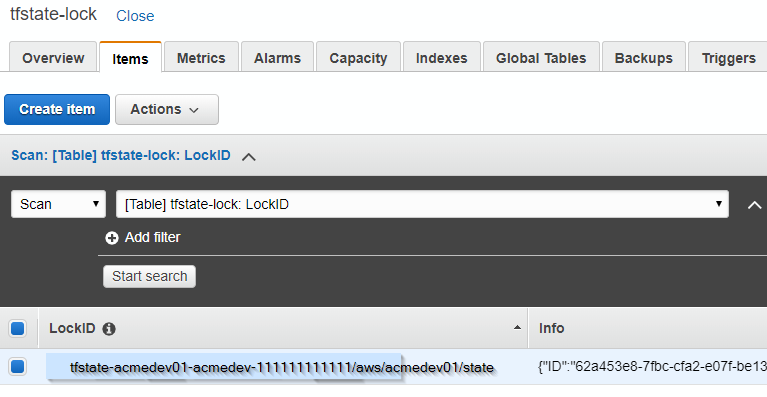
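On Terraform 0.9 and later, including the 0.11.11 used in this article, the same settings go into a backend block. Running terraform init afterwards configures the backend and offers to copy any existing local state into the bucket. A sketch using the same placeholders as the terraform remote config command:

```hcl
# Equivalent of the terraform remote config flags; backend values must be
# literals (no variables). Run "terraform init" after adding this block.
terraform {
  backend "s3" {
    bucket  = "YOUR_BUCKET_NAME"
    key     = "terraform.tfstate"
    region  = "YOUR_BUCKET_REGION"
    encrypt = true
  }
}
```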

Locking

Add the dynamodb_table name to the backend configuration:

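terraform {

required_version = "= 0.11.11"

backend "s3" {

dynamodb_table = "tfstate-lock"

profile = "terraform-agent"

# To assume a role, the s3 backend takes role_arn and session_name directly

# (as literal values, since backend settings cannot use variables):

# role_arn = "arn:aws:iam::222222222222:role/CrossAccount_Terraform"

# session_name = "SESSION_NAME"

}

}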

In AWS, create a DynamoDB table named tfstate-lock whose partition (primary) key is named LockID and is of type String, as in the picture below. Whenever a lock is taken, an entry similar to the one below is created:

{"ID":"62a453e8-7fbc-cfa2-e07f-be1381b82af3","Operation":"OperationTypePlan","Info":"","Who":"piotr@laptop1","Version":"0.11.11","Created":"2019-03-07T08:49:33.3078722Z","Path":"tfstate-acmedev01-acmedev-111111111111/aws/acmedev01/state"}
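The lock table can itself be managed by Terraform. It has to live in a separate configuration that is applied before the backend is used, because a backend cannot depend on a table created in the same configuration. A sketch with the AWS provider; the resource label tfstate_lock and the capacity values of 1 are assumptions:

```hcl
# DynamoDB table for S3 backend state locking: the partition key must be
# named LockID and be of type String ("S").
resource "aws_dynamodb_table" "tfstate_lock" {
  name           = "tfstate-lock"
  hash_key       = "LockID"
  read_capacity  = 1
  write_capacity = 1

  attribute {
    name = "LockID"
    type = "S"
  }
}
```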

Variables

Variables can be provided on the command line:

terraform apply -var="image_id=ami-abc123"

terraform apply -var='image_id_list=["ami-abc123","ami-def456"]'

terraform apply -var='image_id_map={"us-east-1":"ami-abc123","us-east-2":"ami-def456"}'

Terraform also automatically loads a number of variable definitions files if they are present:

- Files named exactly terraform.tfvars or terraform.tfvars.json.

- Any files with names ending in .auto.tfvars or .auto.tfvars.json.
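For example, a terraform.tfvars file equivalent to the three -var flags could look like the sketch below. It assumes image_id, image_id_list and image_id_map are declared as variables of type string, list and map:

```hcl
# terraform.tfvars - loaded automatically by terraform plan and apply
image_id      = "ami-abc123"
image_id_list = ["ami-abc123", "ami-def456"]
image_id_map  = {
  "us-east-1" = "ami-abc123"
  "us-east-2" = "ami-def456"
}
```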

Syntax

Terraform 0.12.0 and later

Terraform 0.12 introduces stricter validation of the following, but allows map keys to be set dynamically from expressions. Note the "=" sign:

- a map attribute usually has user-defined keys, as in the tags example, and is assigned with "="

- a nested block always has a fixed set of supported arguments defined by the resource type schema, which Terraform will validate

resource "aws_instance" "example" {

instance_type = "t2.micro"

ami = "ami-abcd1234"

tags = { # <- a map attribute, requires '='

Name = "example instance"

}

ebs_block_device { # <- a nested block, no '='

device_name = "sda2"

volume_type = "gp2"

volume_size = 24

}

}
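To illustrate dynamic map keys: in 0.12 a key can come from an expression, wrapped in parentheses so that it is not read as a literal key name. A sketch; the owner_tag_key variable and the tag value are hypothetical:

```hcl
# Hypothetical variable holding the name of a tag key.
variable "owner_tag_key" {
  default = "Owner"
}

resource "aws_instance" "example" {
  instance_type = "t2.micro"
  ami           = "ami-abcd1234"

  tags = {
    Name                = "example instance"
    (var.owner_tag_key) = "platform-team" # key taken from an expression
  }
}
```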

Syntax auto-update

terraform 0.12upgrade # Rewrites pre-0.12 module source code for v0.12

For_each


# vi main.tf

resource "aws_vpc" "tf_vpc" {

cidr_block = "${var.vpc_cidr}"

enable_dns_hostnames = true

enable_dns_support = true

tags = { # <- note the '=', as this is an argument

Name = "tf_vpc"

}

}

resource "aws_security_group" "tf_public_sg" {

name = "tf_public_sg"

description = "Used for access to the public instances"

vpc_id = "${aws_vpc.tf_vpc.id}"

dynamic "ingress" {

for_each = [ for s in var.service_ports: {

from_port = s.from_port

to_port = s.to_port }]

content {

from_port = ingress.value.from_port

to_port = ingress.value.to_port

protocol = "tcp"

cidr_blocks = [ var.accessip ]

}

}

# Commented block has been replaced by 'dynamic "ingress"'

# ingress { #SSH

# from_port = 22

# to_port = 22

# protocol = "tcp"

# cidr_blocks = ["${var.accessip}"]

# }

# ingress { #HTTP

# from_port = 80

# to_port = 80

# protocol = "tcp"

# cidr_blocks = ["${var.accessip}"]

# }

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

}


# vi variables.tf

variable "vpc_cidr" { default = "10.123.0.0/16" }

variable "accessip" { default = "0.0.0.0/0" }

variable "service_ports" {

type = list(object({ from_port = number, to_port = number }))

default = [

{ from_port = 22, to_port = 22 },

{ from_port = 80, to_port = 80 }

]

}

# vi outputs.tf

output "public_sg" {

value = aws_security_group.tf_public_sg.id

}

output "ingress_port_mapping" {

value = {

for ingress in aws_security_group.tf_public_sg.ingress:

format("From %d", ingress.from_port) => format("To %d", ingress.to_port)

}

}

# Computed 'Outputs:'

ingress_port_mapping = {

"From 22" = "To 22"

"From 80" = "To 80"

}

public_sg = sg-04d51b5ae10e6f0b0

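The example above uses for_each inside a dynamic block. Since Terraform 0.12.6, for_each can also be set on a whole resource, creating one instance per map key. A sketch; the subnets variable and its CIDRs are hypothetical:

```hcl
# Hypothetical map of subnet name => CIDR, inside var.vpc_cidr (10.123.0.0/16).
variable "subnets" {
  default = {
    public  = "10.123.1.0/24"
    private = "10.123.2.0/24"
  }
}

# One aws_subnet per map entry, addressed as aws_subnet.tf_subnet["public"], etc.
resource "aws_subnet" "tf_subnet" {
  for_each = var.subnets

  vpc_id     = aws_vpc.tf_vpc.id
  cidr_block = each.value

  tags = {
    Name = each.key
  }
}
```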

Plan is more readable and explicit


cat plan.log

Refreshing Terraform state in-memory prior to plan...

The refreshed state will be used to calculate this plan, but will not be

persisted to local or remote state storage.

------------------------------------------------------------------------

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_security_group.tf_public_sg will be created

+ resource "aws_security_group" "tf_public_sg" {

+ arn = (known after apply)

+ description = "Used for access to the public instances"

+ egress = [

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 0

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "-1"

+ security_groups = []

+ self = false

+ to_port = 0

},

]

+ id = (known after apply)

+ ingress = [

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 22

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 22

},

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 80

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 80

},

]

+ name = "tf_public_sg"

+ owner_id = (known after apply)

+ revoke_rules_on_delete = false

+ vpc_id = (known after apply)

}


# aws_vpc.tf_vpc will be created

+ resource "aws_vpc" "tf_vpc" {

+ arn = (known after apply)

+ assign_generated_ipv6_cidr_block = false

+ cidr_block = "10.123.0.0/16"

+ default_network_acl_id = (known after apply)

+ default_route_table_id = (known after apply)

+ default_security_group_id = (known after apply)

+ dhcp_options_id = (known after apply)

+ enable_classiclink = (known after apply)

+ enable_classiclink_dns_support = (known after apply)

+ enable_dns_hostnames = true

+ enable_dns_support = true

+ id = (known after apply)

+ instance_tenancy = "default"

+ ipv6_association_id = (known after apply)

+ ipv6_cidr_block = (known after apply)

+ main_route_table_id = (known after apply)

+ owner_id = (known after apply)

+ tags = {

+ "Name" = "tf_vpc"

}

}

Plan: 2 to add, 0 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform

can't guarantee that exactly these actions will be performed if

"terraform apply" is subsequently run.


References

If statements

Older versions of Terraform don't support if or if-else statements. Instead, we can take advantage of the count attribute that every resource has, because booleans convert to numbers:

boolean true = 1

boolean false = 0
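Here is a minimal sketch of the count trick in Terraform 0.11 syntax; the create_eip variable and the aws_eip resource are hypothetical examples. The resource is created when the variable is true and skipped when it is false:

```hcl
# Terraform 0.11: a boolean true is converted to 1 and false to 0,
# so count creates either one instance of the resource or none.
variable "create_eip" {
  default = true
}

resource "aws_eip" "example" {
  count = "${var.create_eip}"
  vpc   = true
}
```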

Newer versions support conditional expressions, using the well-known ternary syntax:

CONDITION ? TRUEVAL : FALSEVAL

domain = "${var.frontend_domain != "" ? var.frontend_domain : var.domain}"

The supported operators are:

- Equality: == and !=

- Numerical comparison: >, <, >=, <=

- Boolean logic: &&, ||, unary ! (|| is logical OR; “short-circuit” OR)
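With conditional expressions, the count trick no longer relies on implicit conversion. Below is a sketch in Terraform 0.12 syntax, with a hypothetical create_eip variable and aws_eip resource. count requires a number, so the ternary turns the boolean into 1 or 0:

```hcl
# Terraform 0.12+: count must be a number, so the boolean is mapped explicitly.
variable "create_eip" {
  type    = bool
  default = true
}

# Created when create_eip is true, skipped when it is false.
resource "aws_eip" "example" {
  count = var.create_eip ? 1 : 0
  vpc   = true
}
```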

Modules

Modules are used in Terraform to modularize and encapsulate groups of resources in your infrastructure.

When calling a module from a .tf file, you pass values for the variables defined in the module, so that it creates resources to your specification. Before you can use a module, it needs to be downloaded. Use

$ terraform get

to download modules (terraform init also downloads them). You will notice that a .terraform directory is created, containing symlinks to the module.

- TF file ~/git/dev101/vpc.tf calling 'vpc' module

variable "vpc_name" { description = "value comes from terraform.tfvars" }

variable "vpc_cidr_base" { description = "value comes from terraform.tfvars" }

variable "vpc_cidr_range" { description = "value comes from terraform.tfvars" }

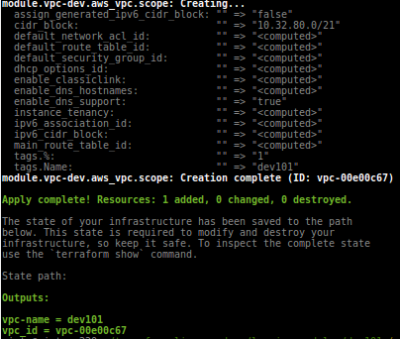

module "vpc-dev" {

source = "../modules/vpc"

name = "${var.vpc_name}" #here we assign a value to 'name' variable

cidr = "${var.vpc_cidr_base}.${var.vpc_cidr_range}" }

output "vpc-name" { value = "${var.vpc_name }"}

output "vpc_id" { value = "${module.vpc-dev.id-from_module }"}

- Module in ~/git/modules/vpc/main.tf

variable "name" { description = "variable local to the module, value comes when calling the module" }

variable "cidr" { description = "local to the module, value passed on when calling the module" }

resource "aws_vpc" "scope" {

cidr_block = "${var.cidr}"

tags = { Name = "${var.name}" } }

output "id-from_module" { value = "${aws_vpc.scope.id}" }
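The calling configuration expects its three variables from terraform.tfvars in ~/git/dev101. Below is a sketch of that file. vpc_name matches the dev101 output of terraform output vpc-name. The two CIDR parts are hypothetical values that the module call joins into 10.0.0.0/16:

```hcl
# ~/git/dev101/terraform.tfvars
vpc_name       = "dev101"
vpc_cidr_base  = "10.0"   # hypothetical
vpc_cidr_range = "0.0/16" # hypothetical; cidr becomes "10.0.0.0/16"
```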

Output variables are a way to return important data when running terraform apply. Once the .tfstate file has been populated, they can also be recalled with the terraform output VARIABLE-NAME command.

$ terraform apply #this will use 'vpc' module

Notice the Outputs section at the end of the apply output. These outputs can also be recalled with:

$ terraform output vpc-name

dev101

$ terraform output vpc_id

vpc-00e00c67

Templates

Dump a rendered data.template_file into a file to check that the interpolations are correct:

#Dumps rendered template

resource "null_resource" "export_rendered_template" {

triggers = {

uid = "${uuid()}" # uuid() returns a new value on every run, so this resource is re-created on every apply

}

provisioner "local-exec" {

command = "cat > waf-policy.output.txt <<EOL\n${data.template_file.waf-whitelist-policy.rendered}\nEOL"

}

}
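An alternative that avoids shell quoting altogether swaps in the local_file resource from the local provider, which is listed among the HashiCorp providers earlier. It writes the rendered text to disk directly. A sketch in 0.12 syntax; the resource label is hypothetical:

```hcl
# Writes the rendered template to waf-policy.output.txt; the file is
# rewritten whenever the rendered content changes.
resource "local_file" "waf_policy_output" {
  content  = data.template_file.waf-whitelist-policy.rendered
  filename = "${path.module}/waf-policy.output.txt"
}
```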

Example of creating one rendered user_data template per instance:

resource "aws_instance" "microservices" {

count = "${var.instance_count}"

subnet_id = "${element(data.aws_subnet.private.*.id, count.index)}"

user_data = "${element(data.template_file.userdata.*.rendered, count.index)}"

...

}

data "template_file" "userdata" {

count = "${var.instance_count}"

template = "${file("${path.root}/templates/user-data.tpl")}"

vars = {

vmname = "ms-${count.index + 1}-${var.vpc_name}"

}

}

#For debugging you can display an array of rendered templates with the output below:

output "userdata" { value = "${data.template_file.userdata.*.rendered}" }

Worth noting:

- the resource template_file is deprecated in favour of the data source template_file

- Terraform 0.12+ offers the new templatefile function, which does not need a data object
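Below is a sketch of the microservices example rewritten for Terraform 0.12 with templatefile(), so no data "template_file" object is needed. var.ami_id and var.instance_type are hypothetical placeholders for the arguments elided with ... in the original. The other names come from that example:

```hcl
resource "aws_instance" "microservices" {
  count         = var.instance_count
  ami           = var.ami_id        # hypothetical
  instance_type = var.instance_type # hypothetical
  subnet_id     = element(data.aws_subnet.private.*.id, count.index)

  # Render user-data.tpl once per instance; vmname is exposed to the template
  # exactly as in the data "template_file" version.
  user_data = templatefile("${path.root}/templates/user-data.tpl", {
    vmname = "ms-${count.index + 1}-${var.vpc_name}"
  })
}
```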

Log user_data to console logs

On Linux, add the line below right after the shebang line of the user-data script:

exec > >(tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console)

You can then open the instance's system log in the AWS Console to view the output of the user-data script.

Resource

- example of unique templates per instance

- recommendation of how to create unique templates per instance

Execute arbitrary code using null_resource and local-exec

The null_resource creates a Terraform-managed resource that is saved in the state file like any other but manages no real infrastructure. Instead, it runs provisioners such as local-exec or remote-exec, allowing arbitrary code execution. It should only be used when Terraform and its providers do not offer a solution for your use case.

resource "null_resource" "attach_alb_am_wkr_ext" {

# depends_on only makes this resource wait until another resource has been created;

# it does not re-run this resource when that resource changes (triggers do that)

#depends_on = [ "aws_cloudformation_stack.waf-alb" ]

# triggers are saved in the tfstate file; if any value changes on the next run, the resource is replaced (destroyed and created again), so its provisioners run again

triggers = {

waf_id = "${aws_cloudformation_stack.waf-alb.outputs.wafWebACL}" #produces WAF_id

alb_id = "${module.balancer_external_alb_instance.arn }" #produces full ALB_arn name

}

provisioner "local-exec" {

when = "create" #runs on: terraform apply

command = <<EOF

ALBARN=$(aws elbv2 describe-load-balancers --region ${var.region} \

--name ${var.vpc}-${var.alb_class} \

--output text --query 'LoadBalancers[0].LoadBalancerArn') &&

aws waf-regional associate-web-acl --web-acl-id "${aws_cloudformation_stack.waf-alb.outputs.wafWebACL}" \

--resource-arn $ALBARN --region ${var.region}

EOF

}

provisioner "local-exec" {

when = "destroy" # runs only on: terraform destroy

command = <<EOF

ALBARN=$(aws elbv2 describe-load-balancers --region ${var.region} \

--name ${var.vpc}-${var.alb_class} \

--output text --query 'LoadBalancers[0].LoadBalancerArn') &&

aws waf-regional disassociate-web-acl --resource-arn $ALBARN --region ${var.region}

EOF

}

}

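Note: by default the local-exec provisioner runs the command with /bin/sh -c "<your heredoc script>", so nothing strips meta-characters such as double quotes from the output of one command before it is used in the next. The aws cli's default JSON output would wrap the ALB ARN in double quotes, causing the following aws command to fail. Therefore the output has been forced to text (--output text).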

terraform providers

List all providers in your project to see versions and dependencies.

$ terraform providers

.

├── provider.aws ~> 2.44

├── provider.external ~> 1.2

├── provider.null ~> 2.1

├── provider.random ~> 2.2

├── provider.template ~> 2.1

├── module.kubernetes

│ ├── module.config

│ │ ├── provider.aws

│ │ ├── provider.helm ~> 0.10.4

│ │ ├── provider.kubernetes ~> 1.10.0

│ │ ├── provider.null (inherited)

│ │ ├── module.alb_ingress_controller

(...)
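The version constraints in that tree come from the configuration. In Terraform 0.12, one way to declare them in one place is a required_providers block. The sketch below reproduces the top-level constraints from the tree. Terraform 0.13 and later also accept an object form with a source address:

```hcl
# Terraform 0.12 syntax: provider name = version constraint.
terraform {
  required_providers {
    aws      = "~> 2.44"
    external = "~> 1.2"
    null     = "~> 2.1"
    random   = "~> 2.2"
    template = "~> 2.1"
  }
}
```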

Resources

- Comprehensive-guide-to-terraform gruntwork.io

- Terraform Modules for Fun and Profit

- Terraform good practices: naming conventions, etc.

- Atlantis Terraform Pull Request Automation, Listens for webhooks from GitHub/GitLab/Bitbucket/Azure DevOps, Runs terraform commands remotely and comments back with their output.