Kubernetes/Security and RBAC


Revision as of 08:37, 20 August 2019

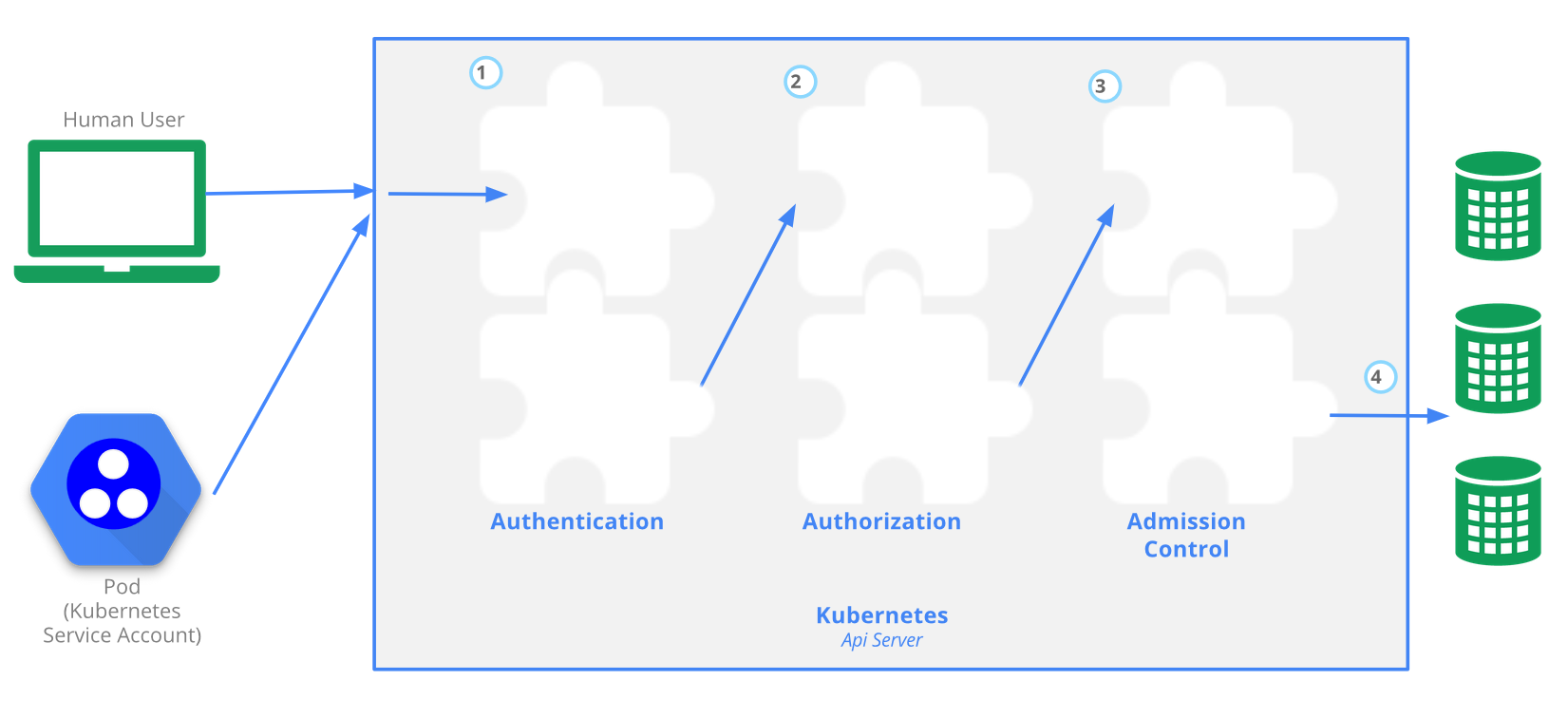

API Server and Role-Based Access Control

Once the API server has determined who you are (whether a pod or a user), the authorization is handled by RBAC.

RBAC prevents unauthorized users from modifying the cluster state: you define roles and grant them to users, groups, or service accounts with role bindings. Pods authenticate as a service account, which determines what control the pod has over the cluster state. For example, the default service account is not allowed to list the services in a namespace.

The Kubernetes API server provides a CRUD (Create, Read, Update, Delete) interface for interacting with the cluster state over a RESTful API. API calls come from two kinds of clients:

- users, typically through kubectl
- pods, through their service account

Each API request goes through a four-stage process:

- Authentication
- Authorization
- Admission control
- Persisting the resulting change (the CRUD action) to the etcd datastore

Example admission plugins:

- ServiceAccount: assigns the default service account to pods that don't explicitly specify one

RBAC is managed through four resources, divided into two groups:

| Namespaced resources | Cluster-level resources | Purpose |
|---|---|---|
| Role | ClusterRole | defines what can be done |
| RoleBinding | ClusterRoleBinding | defines who can do it |

When a pod is deployed without a service account specified in its manifest, the namespace's 'default' service account is assigned. The service account represents the identity of the application running in the pod, and the mounted token file holds its authentication token. Let's create a namespace and a test pod, then try to list the available services.

kubectl create ns rbac
kubectl run apitest --image=nginx -n rbac   # create a test container to run API calls from

Each pod runs as a service account, and that account's API authentication token is mounted into the pod. When the pod makes an API call with this token, it assumes the service account's identity. You can view the token from inside the pod:

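kubectl -n rbac exec -it apitest-<UID> -- /bin/sh   # connect to the container shell

# display the token and namespace used to authenticate to the API server from this pod
# (/bin/sh here does not support {a,b} brace expansion, so list both files)

root$ cat /var/run/secrets/kubernetes.io/serviceaccount/token /var/run/secrets/kubernetes.io/serviceaccount/namespace

# call the API server to list services in the 'rbac' namespace
# (assumes a kubectl proxy is listening on localhost:8001 inside the pod, as in the curlpod example further down)

root$ curl localhost:8001/api/v1/namespaces/rbac/services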
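Without a proxy sidecar, a pod can also call the API server directly with the credentials mounted into it. The sketch below assumes the in-cluster DNS name `kubernetes.default.svc` resolves and that `curl` exists in the container; the function names `services_path` and `list_services` are hypothetical.

```shell
# Hypothetical helpers: call the API server from inside a pod using the
# service account credentials that Kubernetes mounts into the container.
SA_DIR=/var/run/secrets/kubernetes.io/serviceaccount

# REST path for listing Services in namespace $1
services_path() {
  echo "/api/v1/namespaces/$1/services"
}

# List Services in namespace $1 (defaults to the pod's own namespace),
# authenticating with the service account bearer token and trusting the cluster CA.
list_services() {
  ns=${1:-$(cat "$SA_DIR/namespace")}
  curl -s --cacert "$SA_DIR/ca.crt" \
    -H "Authorization: Bearer $(cat "$SA_DIR/token")" \
    "https://kubernetes.default.svc$(services_path "$ns")"
}
```

Inside the pod, `list_services rbac` run as the `default` service account should come back with an HTTP 403 `Forbidden` status until a Role grants access to services.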

List all service accounts. A service account can only be used by pods in its own namespace.

kubectl get serviceaccounts -n rbac
kubectl get secrets
NAME                  TYPE                                  DATA   AGE
default-token-qqzc7   kubernetes.io/service-account-token   3      39h
kubectl get secrets default-token-qqzc7 -o yaml   # display the secret
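The `token` key of such a secret holds a base64-encoded JWT. As a hedged sketch with a hypothetical `jwt_payload` helper, the token's claims can be decoded locally to see which identity it carries; for a service account the `sub` claim has the form `system:serviceaccount:<namespace>:<name>`. The helper assumes a `base64` that accepts `-d` (GNU coreutils or BusyBox).

```shell
# Hypothetical helper: print the JSON claims (payload) of a JWT.
jwt_payload() {
  # The payload is the second dot-separated segment, base64url-encoded
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it before decoding
  case $(( ${#p} % 4 )) in
    2) p="$p==" ;;
    3) p="$p=" ;;
  esac
  printf '%s' "$p" | base64 -d
}
```

For example: `jwt_payload "$(kubectl get secret default-token-qqzc7 -o jsonpath='{.data.token}' | base64 -d)"`. This only decodes the token; it does not verify its signature.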

ServiceAccount

The API server first evaluates whether the request comes from a service account or a normal user. Normal user accounts are managed outside Kubernetes, for example by an administrator distributing private keys, a user store, or even a file with a list of user names and passwords. Kubernetes doesn't have objects that represent normal user accounts, and normal users cannot be added to the cluster through an API call.

kubectl get serviceaccounts   # or 'sa' in short
kubectl create serviceaccount jenkins
kubectl get serviceaccounts jenkins -o yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  creationTimestamp: "2019-08-05T07:10:40Z"
  name: jenkins
  namespace: default
  resourceVersion: "678"
  selfLink: /api/v1/namespaces/default/serviceaccounts/jenkins
  uid: 21cba4bb-b750-11e9-86b3-0800274143a9
secrets:
- name: jenkins-token-cspjm
kubectl get secret [secret_name]

Assign a ServiceAccount to a pod

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  serviceAccountName: jenkins   #<-- ServiceAccount
  containers:
  - image: busybox:1.28.4
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
    name: busybox
  restartPolicy: Always

# Verify ('sls' is PowerShell's Select-String; use grep on Linux or macOS)
kubectl.exe get pods -o yaml | sls serviceAccount
{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"name":"busybox","namespace":"default"},"spec":{"containers":[{"command":["sleep","3600"],"image":"busybox:1.28.4","imagePullPolicy":"IfNotPresent","name":"busybox"}],"restartPolicy":"Always","serviceAccountName":"jenkins"}}
- mountPath: /var/run/secrets/kubernetes.io/serviceaccount
serviceAccount: jenkins
serviceAccountName: jenkins
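On Linux or macOS, the same check can be done with `kubectl get pod busybox -o jsonpath='{.spec.serviceAccountName}'`, or with a small text filter. The hypothetical `sa_from_yaml` helper below is a sketch of the latter: it reads `kubectl get pod ... -o yaml` output on stdin and prints the first `serviceAccountName` value, ignoring the JSON copy stored in the last-applied-configuration annotation.

```shell
# Hypothetical helper: print the first "serviceAccountName:" value found in
# pod YAML read from stdin (lines starting with '{' are JSON and never match).
sa_from_yaml() {
  sed -n 's/^ *serviceAccountName: *//p' | head -n 1
}
```

`kubectl get pod busybox -o yaml | sa_from_yaml` should print `jenkins`.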

Create Administrative account

The following steps set up a new remote administrator.

kubectl.exe config set-credentials piotr --username=piotr --password=password

# a new entry has been added to ~/.kube/config:
users:
- name: user1
  ...
- name: piotr
  user:
    password: password
    username: piotr

# create a ClusterRoleBinding that grants cluster-admin to anonymous (unauthenticated) requests; NOT recommended, as anyone who can reach the API server gets full control
kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous
clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

#copy server ca.crt

laptop$ scp ubuntu@k8s-cluster.acme.com:/etc/kubernetes/pki/ca.crt .

#set kubeconfig

kubectl config set-cluster kubernetes --server=https://k8s-cluster.acme.com:6443 --certificate-authority=ca.crt --embed-certs=true

#Create context

kubectl config set-context kubernetes --cluster=kubernetes --user=piotr --namespace=default

# switch to the new context

kubectl config use-context kubernetes
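Binding `cluster-admin` to `system:anonymous` gives full control to anyone who can reach the API server. A narrower sketch binds the role only to the named user instead. It assumes the API server actually authenticates `piotr`, for example through a client certificate with `CN=piotr`; the `--username/--password` flow only works if the API server is configured with a matching static password file. The `admin_binding` function name is hypothetical.

```shell
# Hypothetical helper: print a ClusterRoleBinding that grants cluster-admin
# to a single authenticated user ($1) rather than to system:anonymous.
admin_binding() {
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: $1-cluster-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: $1
EOF
}
```

Usage: `admin_binding piotr | kubectl apply -f -`, then remove the anonymous grant with `kubectl delete clusterrolebinding cluster-system-anonymous`.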

Create a role (namespaced permissions)

A Role describes which actions can be performed. This role allows getting and listing services in the 'web' namespace.

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: web   # this namespace needs to be created beforehand
  name: service-reader
rules:
- apiGroups: [""]
  verbs: ["get", "list"]
  resources: ["services"]

The Role does not specify who can perform these actions. For that, we create a RoleBinding that binds the role to a user, service account, or group. A RoleBinding references exactly one role, but can bind it to multiple users, service accounts, or groups.

kubectl create rolebinding roleBinding-test --role=service-reader --serviceaccount=web:default -n web
# Verify access has been granted
curl localhost:8001/api/v1/namespaces/web/services
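`--serviceaccount=web:default` is shorthand for a subject of kind `ServiceAccount`. The sketch below, with hypothetical helper names, prints an equivalent RoleBinding manifest and the user name Kubernetes assigns to that service account, which `kubectl auth can-i --as` accepts for checking the grant without starting a pod.

```shell
# Hypothetical helpers for the service-reader RoleBinding example.

# User name the API server uses for service account $2 in namespace $1
sa_user() {
  echo "system:serviceaccount:$1:$2"
}

# Print a RoleBinding that binds Role $1 to service account $3 in namespace $2
rolebinding_for_sa() {
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: $1-$3
  namespace: $2
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: $1
subjects:
- kind: ServiceAccount
  name: $3
  namespace: $2
EOF
}
```

Usage: `rolebinding_for_sa service-reader web default | kubectl apply -f -`, after which `kubectl auth can-i list services -n web --as="$(sa_user web default)"` should answer `yes`.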

Create a clusterrole (cluster-wide permissions)

In this example we create a ClusterRole that can access the PersistentVolumes API, then create a ClusterRoleBinding (pv-test) that binds it to the 'default' ServiceAccount in the 'web' namespace. This service account is the identity a pod assumes by default when it authenticates to the API server. When we then attach to a container in that namespace and list the cluster-wide PersistentVolumes resource, the request is allowed because of the ClusterRole bound to the pod's service account.

# Create a ClusterRole to access PersistentVolumes:
kubectl create clusterrole pv-reader --verb=get,list --resource=persistentvolumes
# Create a ClusterRoleBinding for the cluster role:
kubectl create clusterrolebinding pv-test --clusterrole=pv-reader --serviceaccount=web:default
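The two imperative commands above can also be written declaratively. This sketch, built around a hypothetical `pv_reader_manifests` helper, prints manifests equivalent to the `pv-reader` ClusterRole and the `pv-test` binding. ClusterRoles and ClusterRoleBindings have no `metadata.namespace`, while the ServiceAccount subject still names its namespace.

```shell
# Hypothetical helper: print the pv-reader ClusterRole and pv-test binding
# as one multi-document YAML stream for 'kubectl apply -f -'.
pv_reader_manifests() {
  cat <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pv-reader
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: pv-test
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: pv-reader
subjects:
- kind: ServiceAccount
  name: default
  namespace: web
EOF
}
```

Usage: `pv_reader_manifests | kubectl apply -f -`.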

The YAML for a pod that includes a curl and proxy container:

apiVersion: v1
kind: Pod
metadata:
  name: curlpod
  namespace: web
spec:
  containers:
  - image: tutum/curl
    command: ["sleep", "9999999"]
    name: main
  - image: linuxacademycontent/kubectl-proxy
    name: proxy
  restartPolicy: Always

Create the pod that will allow you to curl directly from the container:

kubectl apply -f curlpod.yaml

kubectl get pods -n web # Get the pods in the web namespace

kubectl exec -it curlpod -n web -- sh # Open a shell to the container:

#Verify you can access PersistentVolumes (cluster-level) from the pod

/ # curl localhost:8001/api/v1/persistentvolumes

{
  "kind": "PersistentVolumeList",
  "apiVersion": "v1",
  "metadata": {
    "selfLink": "/api/v1/persistentvolumes",
    "resourceVersion": "7173"
  },
  "items": []
}/ #

List all API resources

PS C:\> kubectl.exe proxy
Starting to serve on 127.0.0.1:8001
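With the proxy running, the discovery endpoints `http://127.0.0.1:8001/api` and `http://127.0.0.1:8001/apis` list the available API groups and versions, and `kubectl api-resources` prints the resources as a table. The REST paths used on this page follow a fixed pattern, sketched below with a hypothetical `api_path` helper: core (legacy) resources such as services live under `/api/v1`, every other group under `/apis/<group>/<version>`, and namespaced resources add `/namespaces/<namespace>`.

```shell
# Hypothetical helper: build the REST path for a resource collection.
# $1 = API group ("" for the core group), $2 = version,
# $3 = resource (plural), $4 = namespace (omit for cluster-scoped resources)
api_path() {
  if [ -z "$1" ]; then
    base="/api/$2"
  else
    base="/apis/$1/$2"
  fi
  if [ -n "$4" ]; then
    base="$base/namespaces/$4"
  fi
  echo "$base/$3"
}
```

Usage through the proxy: `curl -s "localhost:8001$(api_path rbac.authorization.k8s.io v1 roles web)"` lists the Roles in the 'web' namespace.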

Network policies

Network policies let you specify which pods can talk to which other pods. For example, the Calico plugin can secure communication by:

- applying network policy based on:
  - pod label selector
  - namespace label selector
  - CIDR block range
- securing communication (who can access pods) by setting up:
  - ingress rules
  - egress rules

A NetworkPolicy is just an API resource that defines a set of rules for pod access. POSTing a NetworkPolicy manifest to the API server has no effect unless your network plugin enforces network policy. Plugins that do include:

- Calico, Cilium, Kube-router, Romana, Weave Net

minikube

If you plan to use Minikube with its default settings, the NetworkPolicy resources will have no effect due to the absence of a network plugin and you’ll have to start it with --network-plugin=cni.

minikube start --network-plugin=cni --memory=4096

- Minikube local cluster with NetworkPolicy powered by Cilium

- network-policies on your laptop by banzaicloud

Install Calico network policies

What is Canal? Canal was a project by Tigera and CoreOS to integrate Calico and flannel, read more...

Install the Canal network policy plugin (manifests for Calico v3.5 and v3.8):

wget -O canal.yaml https://docs.projectcalico.org/v3.5/getting-started/kubernetes/installation/hosted/canal/canal.yaml
curl https://docs.projectcalico.org/v3.8/manifests/canal.yaml -O
curl https://docs.projectcalico.org/v3.8/manifests/calico-policy-only.yaml -O

# Update the Pod IP range to match '--cluster-cidr'; changing this value after installation has no effect
## Get the cluster's CIDRs (service-cluster-ip-range is the Service range, cluster-cidr is the Pod range)
kubectl cluster-info dump | grep -m 1 service-cluster-ip-range
kubectl cluster-info dump | grep -m 1 cluster-cidr
## Minikube's CIDR can change, so exec-ing into a Pod is the best option; alternatively check the minikube config
grep ServiceCIDR ~/.minikube/profiles/minikube/config.json
## Update config
POD_CIDR="<your-pod-cidr>"
sed -i -e "s?10.244.0.0/16?$POD_CIDR?g" canal.yaml
kubectl apply -f canal.yaml
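The `sed` substitution above inserts whatever `POD_CIDR` contains, including the `<your-pod-cidr>` placeholder, and its unescaped dots match any character. A slightly safer sketch with a hypothetical `set_pod_cidr` helper checks that the value looks like an IPv4 CIDR before rewriting the manifest. It relies on GNU `sed -i`; on macOS use `sed -i ''`.

```shell
# Hypothetical helper: replace the default 10.244.0.0/16 pod CIDR in a
# manifest ($1) with $2, refusing values that are not IPv4 CIDR notation.
set_pod_cidr() {
  file=$1
  cidr=$2
  if ! printf '%s\n' "$cidr" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}/[0-9]{1,2}$'; then
    echo "not an IPv4 CIDR: $cidr" >&2
    return 1
  fi
  # Dots are escaped so only the literal 10.244.0.0/16 is replaced
  sed -i -e "s?10\.244\.0\.0/16?$cidr?g" "$file"
}
```

Usage: `set_pod_cidr canal.yaml "$POD_CIDR" && kubectl apply -f canal.yaml`.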

Cilium - networkPolicies

Cilium DaemonSet will place one Pod per node. Each Pod then will enforce network policies on the traffic using Berkeley Packet Filter (BPF).

minikube start --network-plugin=cni --memory=4096   #--kubernetes-version=1.13
kubectl create -f https://raw.githubusercontent.com/cilium/cilium/v1.5/examples/kubernetes/1.14/cilium-minikube.yaml

Create network policies

Create a 'default' isolation policy for a namespace by creating a NetworkPolicy that selects all pods but does not allow any ingress traffic to those pods.

Default deny all ingress traffic:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-all
  # namespace: default
spec:
  podSelector: {}   # select all pods in the namespace
  policyTypes:      # traffic directions this policy applies to
  - Ingress

Default allow all ingress traffic:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all
  # namespace: default
spec:
  podSelector: {}
  ingress:
  - {}
  policyTypes:
  - Ingress
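The `deny-all` policy above only isolates ingress. As a sketch with a hypothetical `deny_all_policy` helper, the same empty `podSelector` can be combined with both policy types to block all incoming and outgoing traffic for every pod in a namespace. Denying egress also blocks DNS lookups to the cluster DNS service until another policy allows them.

```shell
# Hypothetical helper: print a NetworkPolicy for namespace $1 that selects
# every pod and, by listing no ingress or egress rules, allows no traffic.
deny_all_policy() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
  namespace: $1
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
EOF
}
```

Usage: `deny_all_policy default | kubectl apply -f -`.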

Create NetworkPolicy

$ kubectl.exe apply -f policy-denyall.yaml
networkpolicy.networking.k8s.io/deny-all created
$ kubectl.exe get networkPolicy -A
NAMESPACE   NAME       POD-SELECTOR   AGE
default     deny-all   <none>         6s

Run test pod

kubectl run --generator=run-pod/v1 nginx --image=nginx # single pod

kubectl create deployment nginx --image=nginx # create a deployment

kubectl scale deployment nginx --replicas=3 # scale the deployment (the Deployment controller reverts changes made directly to its ReplicaSet)

#kubectl run nginx --image=nginx --replicas=3 #deprecated command

kubectl expose deployment nginx --port=80

# try to access a nginx service from another pod

kubectl run --generator=run-pod/v1 busybox --image=busybox -- sleep 3600

kubectl exec busybox -it -- /bin/sh #this often crashes

/ # wget --spider --timeout=1 nginx #this should timeout

# --spider only checks that the page exists, without downloading it

kubectl exec -ti busybox -- wget --spider --timeout=1 nginx

kubectl exec -ti busybox -- ping -c3 <nginx-pod-ip>

# kubectl run busybox --rm -it --image=busybox /bin/sh #deprecated

Create a NetworkPolicy that allows ingress on port 80 to pods labelled 'app: web', only from pods labelled 'app: busybox'

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: web-netpolicy
spec:
  podSelector:        # apply policy
    matchLabels:      # to these pods
      app: web
  ingress:
  - from:
    - podSelector:    # allow traffic
        matchLabels:  # from these pods
          app: busybox
    ports:
    - port: 80
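In this policy, `from` and `ports` belong to the same ingress rule, so traffic must satisfy both: it has to come from an `app: busybox` pod and target port 80. As a sketch, the hypothetical `allow_from_app` helper below generalizes the manifest to any target label, source label, and port, assuming TCP (the default protocol when none is given).

```shell
# Hypothetical helper: $1 = policy name; allow TCP traffic on port $4 to pods
# labelled app=$2, only from pods labelled app=$3 in the same namespace.
allow_from_app() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: $1
spec:
  podSelector:
    matchLabels:
      app: $2
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: $3
    ports:
    - protocol: TCP
      port: $4
EOF
}
```

Usage: `allow_from_app web-netpolicy web busybox 80 | kubectl apply -f -` recreates the policy above with an explicit protocol.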

Label a pod so that a NetworkPolicy selects it:

kubectl label pods [pod_name] app=db
kubectl run busybox --rm -it --image=busybox -- /bin/sh
/ # wget --spider --timeout=1 nginx   # this should time out

Namespace NetworkPolicy:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ns-netpolicy
spec:
  podSelector:
    matchLabels:
      app: db
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          tenant: web
    ports:
    - port: 5432

IP block NetworkPolicy:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ipblock-netpolicy
spec:
  podSelector:
    matchLabels:
      app: db
  ingress:
  - from:
    - ipBlock:
        cidr: 192.168.1.0/24

Egress NetworkPolicy:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: egress-netpol
spec:
  podSelector:
    matchLabels:
      app: web
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: db
    ports:
    - port: 5432
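An `ipBlock` rule admits a source when its IP address falls inside the CIDR range. The check below is a sketch of that membership test in plain shell arithmetic, with hypothetical helper names `ip_to_int` and `ip_in_cidr`; it covers IPv4 only and ignores the optional `except` ranges, for example to reason about whether a client would match `192.168.1.0/24`.

```shell
# Hypothetical helpers (IPv4 only): does an address fall inside a CIDR block?

# Convert a dotted-quad address such as 192.168.1.7 to a 32-bit integer
ip_to_int() {
  rest=$1
  a=${rest%%.*}; rest=${rest#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; d=${rest#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed when address $1 is inside CIDR block $2, e.g. 192.168.1.0/24
ip_in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  # Network mask with the top $bits bits set, e.g. /24 -> 0xFFFFFF00
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For example, `ip_in_cidr 192.168.1.7 192.168.1.0/24 && echo allowed` prints `allowed`, while `192.168.2.7` falls outside the block.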