Difference between revisions of "Kubernetes/Istio"

| (127 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

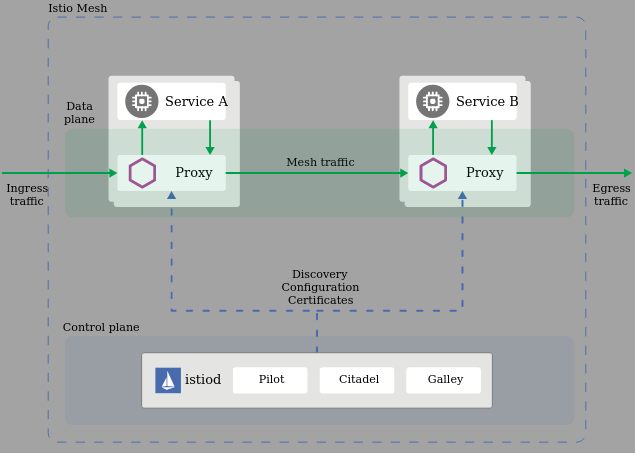

= Architecture Istio ~v1.7+ =

:[[File:ClipCapIt-200930-172701.PNG]]


{{Note|Proxy term meaning is when someone has authority to represent someone. In software proxy components are invisible to clients. proxies }} | {{Note|Proxy term meaning is when someone has authority to represent someone. In software proxy components are invisible to clients. proxies }} | ||

Security Architecture | |||

:[[File:ClipCapIt-210120-161513.PNG|600px]] | |||

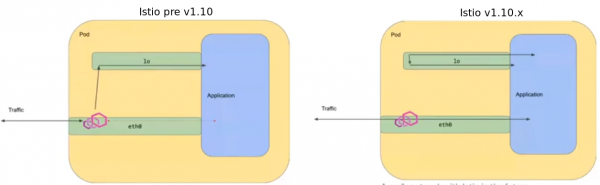

[https://istio.io/latest/blog/2021/upcoming-networking-changes/ Pod networking changed v1.10] matching default Kubernetes behaviour | |||

:[[File:ClipCapIt-210531-145953.PNG|600px]] | |||

[https://istio.io/latest/docs/ops/deployment/requirements/ Ports] | |||

<source lang=bash>
service/istiod:
15010 - grpc-xds                             # xDS and CA services, plaintext
15012 - https-dns                            # xDS and CA services over TLS/mTLS
15014 - http-monitoring                      # control plane monitoring
443   - https-webhook, container port 15017  # webhooks
</source>

= Istio components =

<source lang=bash>
# Minimum requirements are 8G and 4 CPUs
K8SVERSION=v1.18.9
PROFILE=minikube-$K8SVERSION-istio
minikube profile $PROFILE  # set the default profile
minikube start --kubernetes-version=$K8SVERSION
# or with explicit resources and a driver (e.g. docker, kvm2, virtualbox)
minikube start --memory=8192 --cpus=4 --kubernetes-version=$K8SVERSION --driver kvm2
minikube tunnel               # runs in the foreground; provides LoadBalancer IPs
minikube addons enable istio  # [1] error
</source>

</source>

Install the [https://istio.io/latest/docs/examples/bookinfo/#deploying-the-application bookinfo] example app.

= Install =

== istioctl cli ==

''The <code>curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.10.0 sh -</code> command only downloads the Istio release files into their own directory. It does not install anything into the cluster.''

<source lang=bash>
# Option_1 - the official approach
curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.10.0 sh -
cd istio-1.10.0/ # istio package directory
export PATH=$PWD/bin:$PATH

# Option_2
export ISTIO_VERSION=1.10.0
curl -L https://istio.io/downloadIstio | sh -
export PATH=$PWD/istio-$ISTIO_VERSION/bin:$PATH

# Check version
istioctl version --remote
client version: 1.9.1
control plane version: 1.9.1
data plane version: 1.9.1 (7 proxies)

# Pre-flight check
istioctl x precheck

# Verify the install
istioctl verify-install
...
Istio is installed successfully

# Verify mesh coverage and the config status
istioctl proxy-status
NAME                                               CDS     LDS     EDS     RDS     ISTIOD                   VERSION
details-v1-5974b67c8-xbp8l.default                 SYNCED  SYNCED  SYNCED  SYNCED  istiod-5c6b7b5b8f-9npdz  1.9.1
istio-ingressgateway-5689f7c67-gvrh8.istio-system  SYNCED  SYNCED  SYNCED  SYNCED  istiod-5c6b7b5b8f-9npdz  1.9.1
...

# Analyse the mesh configuration
istioctl analyze --all-namespaces
</source>
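The version and proxy-status checks above can also be scripted. This is a minimal sketch that assumes the plain-text output formats shown above. <code>istioctl version --remote</code> is assumed to print <code>x.y.z</code> versions, and <code>istioctl proxy-status</code> is assumed to list CDS/LDS/EDS/RDS in columns 2 to 5, as in v1.9/v1.10. The helper names are hypothetical.

```shell
# Hypothetical helpers; they only parse text, so they run without a cluster.

# Succeeds (exit 0) when all x.y.z versions in `istioctl version --remote`
# output are identical, i.e. client, control plane and data plane match.
versions_consistent() {
  grep -oE '[0-9]+\.[0-9]+\.[0-9]+' | sort -u | awk 'END { exit (NR == 1 ? 0 : 1) }'
}

# Prints the NAME of every proxy in `istioctl proxy-status` output whose
# CDS/LDS/EDS/RDS columns (fields 2-5) are not all SYNCED.
# Assumes the v1.9/v1.10 column layout; "NOT SENT" splits into two fields
# and is therefore reported too.
unsynced_proxies() {
  awk 'NR > 1 && $1 != "..." {
    for (i = 2; i <= 5; i++) if ($i != "SYNCED") { print $1; break }
  }'
}

# Usage against a live cluster:
#   istioctl version --remote | versions_consistent || echo "version skew detected"
#   istioctl proxy-status | unsynced_proxies
```

During a canary upgrade, a version mismatch is expected while both revisions run. An RDS value of <code>NOT SENT</code> can also be normal for a gateway that has no routes yet.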

== control plane ==

*[https://istio.io/latest/docs/setup/install/istioctl/ Install with Istioctl] - recommended
*[https://istio.io/latest/docs/setup/install/operator/ Istio Operator Install]
*[https://istio.io/latest/docs/setup/install/helm/ Install with Helm]

As the project grows in complexity, at the expense of user-friendliness, Istio maintainers still support ''helm manifest'' based configuration, although there is a clear movement towards the operator pattern. See below for the differences; v1.6 and v1.7 still support both methods.

There are a few operational modes for configuring the control plane:
* [https://github.com/istio/istio/tree/master/operator istio operator]
** install - <code>istioctl install</code> with <code>--set</code> or <code>-f istio-overlay.yaml</code>; the values are overlaid on top of the chosen profile
** reconfigure - <code>istioctl manifest apply -f istio-install.yaml --dry-run</code>
** <code>istioctl operator init</code> installs the operator pod <code>istio-operator</code>. Then <code>kubectl apply -f istio-overlay.yaml</code> triggers the operator to sync the changes. Manually changing the <code>istio-system/istiooperators.install.istio.io/installed-state</code> object also triggers an operator event that syncs the config and reconfigures the control plane.
* [deprecated] <code>istioctl manifest install</code> with <code>--set</code> or <code>-f <manifests.yaml></code>
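The operator mode in the last sub-bullet can be sketched as follows. This is a minimal sketch, not the author's script. With <code>DRY_RUN=1</code> (the default) it only prints the commands. The CR name <code>example-istiocontrolplane</code> is assumed from the upstream operator install guide, and the file path passed in is arbitrary.

```shell
# DRY_RUN=1 (default) prints each command instead of running it.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Writes a minimal IstioOperator CR; the operator watches this kind and
# reconciles the control plane to match it.
write_operator_cr() {
  cat > "$1" <<'EOF'
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  namespace: istio-system
  name: example-istiocontrolplane
spec:
  profile: default
EOF
}

# Operator mode: install the operator once, then drive changes with kubectl.
operator_flow() {
  local cr_file="$1"
  write_operator_cr "$cr_file"
  run istioctl operator init
  run kubectl create namespace istio-system
  run kubectl apply -f "$cr_file"
  run kubectl -n istio-system get istiooperators.install.istio.io
}
```

Usage: <code>DRY_RUN=0 operator_flow ./istio-cr.yaml</code>. The namespace creation fails harmlessly if <code>istio-system</code> already exists. Later changes are made by editing the CR file and re-running <code>kubectl apply</code>.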

== Install ==

<source lang=bash>
# Tested with 1.7.3 [deprecated method via helm charts]; --set values are prefixed with 'values.'
istioctl manifest install --skip-confirmation --set profile=default \
  --set values.kiali.enabled=true \
  --set values.prometheus.enabled=true \
  --dry-run

# Tested with 1.8.2 [istio.operator via 'istioctl' cli]
istioctl x precheck
istioctl install --skip-confirmation --set profile=default --dry-run # via operator
istioctl upgrade --skip-confirmation --set profile=default --dry-run # via operator
</source>


{| class="wikitable"
|+ Using istio.operator via manifest yaml
|-
! Basic
! +Kiali,Prometheus
! +ServiceEntry, mesh traffic only
! +egress
|- style="vertical-align:top;"
| <syntaxhighlightjs lang=yaml>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
EOF
)
</syntaxhighlightjs>
| <syntaxhighlightjs lang=yaml>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
  values:
    kiali:
      enabled: true
    prometheus:
      enabled: true
EOF
)
</syntaxhighlightjs>
| <syntaxhighlightjs lang=yaml>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
  values:
    kiali:
      enabled: true
    # Kiali uses Prometheus to populate its dashboards
    prometheus:
      enabled: true
  meshConfig:
    # debugging
    accessLogFile: /dev/stdout
    outboundTrafficPolicy:
      mode: REGISTRY_ONLY
EOF
)
</syntaxhighlightjs>
| <syntaxhighlightjs lang=yaml>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
  components:
    egressGateways:
    - enabled: true
      name: istio-egressgateway
  values:
    kiali:
      enabled: true
    prometheus:
      enabled: true
  meshConfig:
    accessLogFile: /dev/stdout
    outboundTrafficPolicy:
      mode: REGISTRY_ONLY
EOF
)
</syntaxhighlightjs>
|}

;Uninstall

[https://istio.io/v1.6/docs/setup/getting-started/#uninstall Uninstall v1.6.8]; errors about non-existent RBAC resources can safely be ignored.

<source lang=bash>
...
</source>

[https://istio.io/v1.7/docs/setup/getting-started/#uninstall Uninstall v1.7.x] - view [[Kubernetes/Istio-logs-default-install#Uninstall|logs]]

<source lang=bash>
# Removes istio-system resources and istio-operator
istioctl x uninstall --purge # cmd: experimental, aliases: experimental, x, exp
✔ Uninstall complete
</source>

== Install - day 2 operation ==

{{Note|Updated 2021-06-01: this guide focuses on Istio v1.10}}

This should be the preferred method for day 2 operations: a [https://istio.io/latest/docs/setup/upgrade/canary/ canary upgrade], where the control plane is installed with revisions in the <code>istio-system</code> namespace and the ingress-gateway component(s) are installed separately in another namespace, e.g. <code>istio-ingress</code>.

;Pre checks

<source lang=bash>
istioctl experimental precheck
istioctl analyze -A

# Optional labels for data plane operations
## Automatic sidecar proxy injection
kubectl label namespace default istio-injection=enabled               # enable
kubectl label namespace default istio-injection=disabled --overwrite  # disable
kubectl label namespace default istio.io/rev=1-10-0                   # revision label

## Discovery namespace selectors (v1.10+)
## Warning: the labels must exist before enabling this feature, otherwise you may break what the control plane can watch from the api-server
# Ingress gateway namespaces must also be labelled (if different from the control plane namespace), otherwise traffic cannot flow from the ingress to the services
kubectl label namespace istio-ingress istio-discovery=enabled
kubectl label namespace default istio-discovery=enabled
</source>
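The warning above says the <code>istio-discovery=enabled</code> labels must exist before <code>discoverySelectors</code> is enabled. A pre-check can therefore compare the labelled namespaces with the ones the mesh needs. This is a minimal sketch. The function name and the required-namespace list are hypothetical, and the input is assumed to come from <code>kubectl get ns -l istio-discovery=enabled -o name</code>.

```shell
# Prints every required namespace (arguments) missing from the labelled list
# on stdin, given as lines such as "namespace/default".
# Returns 1 if anything is missing, 0 otherwise.
missing_discovery_labels() {
  local labelled ns missing=0
  labelled="$(sed 's#^namespace/##')"
  for ns in "$@"; do
    if ! printf '%s\n' "$labelled" | grep -qxF -- "$ns"; then
      printf '%s\n' "$ns"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: <code>kubectl get ns -l istio-discovery=enabled -o name | missing_discovery_labels default istio-ingress && echo "labels in place"</code>.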

=== Install control-plane ===

* [https://istio.io/latest/blog/2021/revision-tags/ Safely upgrade the Istio control plane with revisions and tags]
* [https://istio.io/latest/docs/setup/upgrade/canary/#stable-revision-labels-experimental Stable revision labels (experimental)]

{{Note|When installing v1.9.x, first install without revisions, e.g. <code>REVISION=""</code>, because a revision-based upgrade expects the <code>default</code> revision to be set.}}

{{Note|Due to [https://github.com/istio/istio/issues/28880 a bug] in the creation of the ValidatingWebhookConfiguration during install, '''initial installations of Istio must not specify a revision'''. A workaround is to run the command below, where <REVISION> is the name of the revision that should handle validation. This command creates an istiod service pointed to the target revision.
<source lang=bash>
kubectl get service -n istio-system -o json istiod-<REVISION> | jq '.metadata.name = "istiod" | del(.spec.clusterIP) | del(.spec.clusterIPs)' | kubectl apply -f -
</source>}}

{{Note|The difference between the <code>install</code> and <code>upgrade</code> operations is fuzzy. Upgrade safely upgrades components and shows a diff between the profile and what is being configured. On the other hand, for some features, e.g. discoverySelectors, only install applies the config, while upgrade seems to have no effect.}}

<source lang=bash>
# REVISION=""                 # option 1: don't use revisions
REVISION='--revision 1-10-0'  # option 2: install as revision 1-10-0
istioctl install -y -n istio-system $REVISION -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: control-plane
  #namespace: istio-system # it's the default
spec:
  profile: minimal # installs only the control plane
  hub: gcr.io/istio-release
  components:
    pilot:
      k8s:
        hpaSpec:
          minReplicas: 2
        env:
        - name: PILOT_FILTER_GATEWAY_CLUSTER_CONFIG # reduce Gateway config to services that have routing rules defined
          value: "true"
  meshConfig:
    defaultConfig:
      proxyMetadata:
        # https://preliminary.istio.io/latest/blog/2020/dns-proxy/
        # curl localhost:8080/debug/nsdz?proxyID=<POD-NAME>
        # dig @localhost -p 15053 postgres-stolon-proxy.sample.svc
        ISTIO_META_DNS_CAPTURE: "true"
    enablePrometheusMerge: true
    #discoverySelectors: # new in v1.10; choose which namespaces istiod watches (from the api-server)
    #- matchLabels:      # | used to build the internal mesh registry from services, endpoints and deployments
    #                    # | dynamically restricts the set of namespaces that are part of the mesh
    #    istio-discovery: enabled # <- example label
    #    #env: prod
    #    #region: us-east-1
    #- matchExpressions:
    #  - key: app
    #    operator: In
    #    values:
    #    - cassandra
    #    - spark
EOF
) --dry-run
</source>
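The revision name <code>1-10-0</code> used throughout is the Istio version with dots replaced by dashes. It becomes part of resource names such as <code>istiod-1-10-0</code>, which cannot contain dots. A minimal sketch of two hypothetical helpers follows. The DNS-1123 label check is an assumption about what makes a safe revision name, not a copy of istioctl's own validation.

```shell
# Turns an Istio version (1.10.0) into a revision name (1-10-0).
istio_revision() {
  printf '%s\n' "$1" | tr '.' '-'
}

# Succeeds when the name is a DNS-1123 label: lowercase alphanumerics and '-',
# starting and ending with an alphanumeric, at most 63 characters.
valid_revision() {
  [ "${#1}" -le 63 ] && printf '%s\n' "$1" | grep -qE '^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'
}
```

Usage: <code>REVISION="--revision $(istio_revision 1.10.0)"</code> produces the same flag as the hard-coded value above.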

;Verify whether any clients are connected to Istio v1.10.0

<source lang=bash>
kubectl create deployment sleep --image=curlimages/curl -- /bin/sleep 3650d
kubectl exec deploy/sleep -- curl -s istiod-1-10-0.istio-system:15014/debug/connections | jq .
{
  "totalClients": 0,
  "clients": null
}

# Example output when client(s) are connected
{
  "totalClients": 1,
  "clients": [
    {
      "connectionId": "sidecar~172.17.0.8~sleep-76f59b4b9c-4sw6t.default~default.svc.cluster.local-3",
      "connectedAt": "2021-06-01T04:57:10.663650097Z",
      "address": "172.17.0.1:8415",
      "watches": null
    }
  ]
}

# Show all discovered endpoints
kubectl exec deploy/sleep -- curl -s istiod-1-10-0.istio-system:15014/debug/endpointz | jq . # ~1800+ lines for an empty Minikube
</source>
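Before removing an old revision, the <code>totalClients</code> value can be checked in a script without <code>jq</code>. This is a minimal sketch with hypothetical helper names. It assumes the field appears once in the <code>/debug/connections</code> response, as in the outputs above. A count of zero only shows that no proxy is currently connected. It does not prove the revision is unused in other ways.

```shell
# Extracts the "totalClients" number from istiod's /debug/connections JSON;
# works for both compact and pretty-printed output.
total_clients() {
  sed -n 's/.*"totalClients": *\([0-9][0-9]*\).*/\1/p'
}

# Succeeds when no proxy is connected to the queried istiod.
revision_idle() {
  [ "$(total_clients)" = "0" ]
}
```

Usage: <code>kubectl exec deploy/sleep -- curl -s istiod-1-10-0.istio-system:15014/debug/connections | revision_idle && echo "no clients on 1-10-0"</code>.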

;Automatic sidecar injection: tell a namespace which revision (i.e. control plane) to use

<source lang=bash>
kubectl label namespace default istio.io/rev=1-10-0 istio-injection- --overwrite
</source>

{{Note|The istio-injection label must be removed because it takes precedence over the istio.io/rev label for backward compatibility.}}

;Upgrade with a canary revision tag for isolated testing

<source lang=bash>
# Tag the new control-plane version with the 'canary' tag
istioctl experimental revision tag set canary --revision 1-10-0
Revision tag "canary" created, referencing control plane revision "1-10-0". To enable injection using this
revision tag, use 'kubectl label namespace <NAMESPACE> istio.io/rev=canary'

# Show tagged revisions
istioctl experimental revision list
REVISION  TAG     ISTIO-OPERATOR-CR                                  PROFILE  REQD-COMPONENTS
1-10-0    canary  istio-system/installed-state-control-plane-1-10-0  minimal  base
                                                                              istiod

# Update the namespace label so that injected sidecars use (connect to) the newly tagged control plane
kubectl label namespace default istio.io/rev=canary --overwrite

# Restart the deployments so the new proxy gets injected, then test the application. If all is good, delete the "app canary deployment" and continue with a full rollout of all deployments.
</source>
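The last step above (relabel, then restart so the new proxy gets injected) can be wrapped per namespace. This is a minimal sketch with a hypothetical helper name, not the author's procedure. With <code>DRY_RUN=1</code> (the default) it only prints the commands. <code>kubectl rollout restart deployment -n NS</code> restarts every deployment in that namespace.

```shell
# DRY_RUN=1 (default) prints each command instead of running it.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Points one namespace at a revision tag (default: canary), restarts its
# deployments so sidecars are re-injected, then lists proxy status.
canary_rollout() {
  local ns="$1" tag="${2:-canary}"
  run kubectl label namespace "$ns" "istio.io/rev=$tag" istio-injection- --overwrite
  run kubectl rollout restart deployment -n "$ns"
  run istioctl proxy-status
}
```

Usage: <code>DRY_RUN=0 canary_rollout default</code>. Rolling back means relabelling the namespace with the previous revision and restarting again.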

=== Install ingress-gateway === | |||

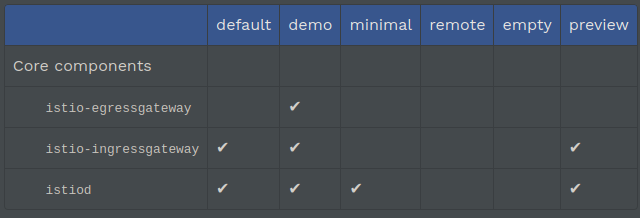

We install the ingress-gateway components separated in a separated namespace <code>istio-ingress</code>. This is to allow to upgrade control-plane and ingressgateway(s) components to be independent. We achieve this using 'minimal' profile that includes only control CP components. Then for IGW install we use 'empty' profile that adds only the ingress-gateway. See a list of what is included in each [https://istio.io/latest/docs/setup/additional-setup/config-profiles/ profile]. | |||

:[[File:ClipCapIt-210317-090509.PNG]] | |||

{{Note| '''tag''' and '''revision''' - in v1.10 ingressgateway is injected into the pod}} thus this becomes and behaves almost like any other workload. Thus the revision label should be in use eg. <code>istio.io/rev=1-10-0</code> to control the proxy version and to which control-plane the ingressgateway connects to | |||

<source lang=bash>
# Create the ingress gateway's own namespace
kubectl create ns istio-ingress

# Install revision
REVISION='--revision 1-10-0'
istioctl install -y -n istio-ingress $REVISION -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: istio-ingressgateway
spec:
  profile: empty # uses the 'empty' profile and enables only the istio-ingressgateway component
  hub: gcr.io/istio-release
  components:
    ingressGateways:
    - name: istio-ingressgateway
      namespace: istio-ingress # defaults to 'istio-system' if not set
      enabled: true
      k8s:
        hpaSpec:
          minReplicas: 2
        service:
          type: LoadBalancer
        overlays:
        - apiVersion: apps/v1
          kind: Deployment
          name: istio-ingressgateway
          patches:
          - path: spec.template.spec.containers[name:istio-proxy].lifecycle
            value:
              preStop:
                exec:
                  command: ["sh", "-c", "sleep 5"]
EOF
) --dry-run

# Verify
# | Note that 'revision' relates to the control plane, thus the ingressgateway installation 'installed-state-istio-ingressgateway-1-10-0'
# | does not have a revision set
## Show IstioOperator installations
kubectl -n istio-system get istiooperators.install.istio.io
NAME                                          REVISION   STATUS   AGE
installed-state-control-plane-1-10-0          1-10-0              165m
installed-state-istio-ingressgateway-1-10-0   1-10-0              8m47s

## Show revisions
istioctl experimental revision list
REVISION  TAG     ISTIO-OPERATOR-CR                                         PROFILE  REQD-COMPONENTS
1-10-0    canary  istio-system/installed-state-control-plane-1-10-0         minimal  base
                                                                                     istiod
                  istio-system/installed-state-istio-ingressgateway-1-10-0  empty    ingress:istio-ingressgateway

## Test
GATEWAY_IP=$(kubectl get svc -n istio-ingress istio-ingressgateway -o jsonpath="{.status.loadBalancer.ingress[0].ip}")
# With a Gateway and a VirtualService we can route traffic to a destination service via the IngressGateway
curl -H "Host: example.com" http://$GATEWAY_IP
</source>
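The <code>curl -H "Host: example.com"</code> test above needs a Gateway and a VirtualService for that host. This is a minimal sketch that prints such a pair. The backend Service <code>httpbin</code> on port 8000 in the <code>default</code> namespace and the resource names are hypothetical. The selector assumes the gateway pods keep the default <code>istio: ingressgateway</code> label.

```shell
# Prints a Gateway + VirtualService routing HTTP for one host to a Service.
# Arguments (all hypothetical defaults): host, service name, service port.
gateway_manifests() {
  local host="${1:-example.com}" svc="${2:-httpbin}" port="${3:-8000}"
  cat <<EOF
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: example-gateway
  namespace: default
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "${host}"
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: example-vs
  namespace: default
spec:
  hosts:
  - "${host}"
  gateways:
  - example-gateway
  http:
  - route:
    - destination:
        host: ${svc}
        port:
          number: ${port}
EOF
}
```

Usage: <code>gateway_manifests example.com httpbin 8000 | kubectl apply -f -</code>.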

{{Note|As a next step, the IngressGateway can be [https://istio.io/latest/docs/ops/integrations/certmanager/#kubernetes-ingress integrated] with Cert Manager}}

;Reduce Gateway Config

<source lang=bash>
# Show which services the IngressGateway has discovered; the list includes even those without any routing defined
istioctl pc clusters deploy/istio-ingressgateway -n istio-system

# Set the PILOT_FILTER_GATEWAY_CLUSTER_CONFIG environment variable in the istiod deployment
# kind: IstioOperator
# spec:
#   components:
#     pilot:
#       k8s:
#         env:
#         - name: PILOT_FILTER_GATEWAY_CLUSTER_CONFIG
#           value: "true"
</source>

;(Optional) Access logging for the gateway only, using an EnvoyFilter
* [https://istio.io/latest/docs/tasks/observability/logs/access-log/ Istio docs] explain how to enable Envoy's access logs for the entire service mesh

<source lang=bash>
kubectl apply -f <(cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: ingressgateway-access-logging
  namespace: istio-system
spec:
  workloadSelector:
    labels:
      istio: ingressgateway
  configPatches:
  - applyTo: NETWORK_FILTER
    match:
      context: GATEWAY
      listener:
        filterChain:
          filter:
            name: "envoy.filters.network.http_connection_manager"
    patch:
      operation: MERGE
      value:
        typed_config:
          "@type": "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager"
          access_log:
          - name: envoy.access_loggers.file
            typed_config:
              "@type": "type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog"
              path: /dev/stdout
              format: "[%START_TIME%] \"%REQ(:METHOD)% %REQ(X-ENVOY-ORIGINAL-PATH?:PATH)% %PROTOCOL%\" %RESPONSE_CODE% %RESPONSE_FLAGS% \"%UPSTREAM_TRANSPORT_FAILURE_REASON%\" %BYTES_RECEIVED% %BYTES_SENT% %DURATION% %RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)% \"%REQ(X-FORWARDED-FOR)%\" \"%REQ(USER-AGENT)%\" \"%REQ(X-REQUEST-ID)%\" \"%REQ(:AUTHORITY)%\" \"%UPSTREAM_HOST%\" %UPSTREAM_CLUSTER% %UPSTREAM_LOCAL_ADDRESS% %DOWNSTREAM_LOCAL_ADDRESS% %DOWNSTREAM_REMOTE_ADDRESS% %REQUESTED_SERVER_NAME% %ROUTE_NAME%\n"
EOF
) --dry-run=server
</source>

;Check status, list and describe revisions

<source lang=bash>
kubectl get -n istio-system istiooperators.install.istio.io
NAME                                          REVISION   STATUS   AGE
installed-state-control-plane                                     22m
installed-state-control-plane-1-10-0          1-10-0              17h
installed-state-istio-ingressgateway-1-10-0   1-10-0              15h

# List revisions
istioctl x revision list
REVISION  TAG       ISTIO-OPERATOR-CR                                         PROFILE  REQD-COMPONENTS
1-10-0    canary    istio-system/installed-state-control-plane-1-10-0         minimal  base
                                                                                       istiod
                    istio-system/installed-state-istio-ingressgateway-1-10-0  empty    ingress:istio-ingressgateway
default   <no-tag>  istio-system/installed-state-control-plane                minimal  base
                                                                                       istiod

# Describe revisions
istioctl x revision describe 1-10-0
ISTIO-OPERATOR CUSTOM RESOURCE: (2)
1. istio-system/installed-state-control-plane-1-10-0
  COMPONENTS:
  - base
  - istiod
  CUSTOMIZATIONS:
  - values.global.jwtPolicy=first-party-jwt
  - hub=gcr.io/istio-release
2. istio-system/installed-state-istio-ingressgateway-1-10-0
  COMPONENTS:
  - ingress:istio-ingressgateway
  CUSTOMIZATIONS:
  - components.ingressGateways[0].enabled=true
  - values.global.jwtPolicy=first-party-jwt
  - hub=gcr.io/istio-release
MUTATING WEBHOOK CONFIGURATIONS: (2)
WEBHOOK                        TAG
istio-revision-tag-canary      canary
istio-sidecar-injector-1-10-0  <no-tag>
CONTROL PLANE PODS (ISTIOD): (2)
NAMESPACE     NAME                            ADDRESS     STATUS   AGE
istio-system  istiod-1-10-0-7c5b7865df-dcd92  172.17.0.5  Running  18h
istio-system  istiod-1-10-0-7c5b7865df-xh5r9  172.17.0.3  Running  18h
INGRESS GATEWAYS: (0)
Ingress gateway is enabled for this revision. However there are no such pods. It could be that it is replaced by ingress-gateway from another revision (as it is still upgraded in-place) or it could be some issue with installation
EGRESS GATEWAYS: (0)
Egress gateway is disabled for this revision
</source>

{{Note|''Ingress gateway is enabled for this revision. However there are no such pods.'' - this message is somewhat unclear}}

;Uninstall

<source lang=bash>
# This command uninstalls all revisions in the default 'istio-system' namespace
istioctl x uninstall --purge # uninstall all

istioctl x uninstall -f <(cat <<EOF
...
EOF
)
</source>

Install book info application | |||

<source lang=bash> | |||

# Namespaces | |||

kubectl create ns bookstore-1 | |||

kubectl create ns bookstore-2 | |||

kubectl label namespace bookstore-1 istio-injection=enabled | |||

kubectl label namespace bookstore-2 istio.io/rev=1-9-1 istio-injection- | |||

kubectl get ns --show-labels | |||

NAME STATUS AGE LABELS | |||

bookstore-1 Active 155m istio-injection=enabled | |||

bookstore-2 Active 155m istio.io/rev=1-10-0 | |||

# Deploy application | |||

kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -n bookstore-1 | |||

kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -n bookstore-2 | |||

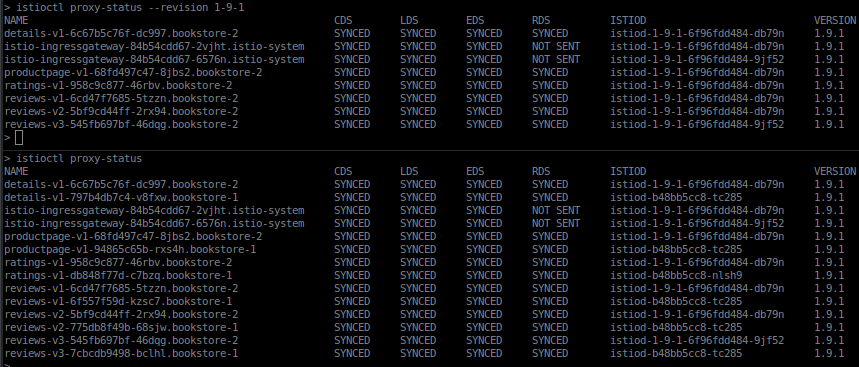

istioctl proxy-status --revision 1-10-0 # show only proxies of revision '1-10-0' | |||

istioctl proxy-status # show all proxies | |||

</source> | |||

You can see proxies connected only to revision '1-9-1' and at the bottom all proxies | |||

:[[File:ClipCapIt-210317-134114.PNG]] | |||

{{Note|Screen grab from previous Istio v1.9 version}} | |||

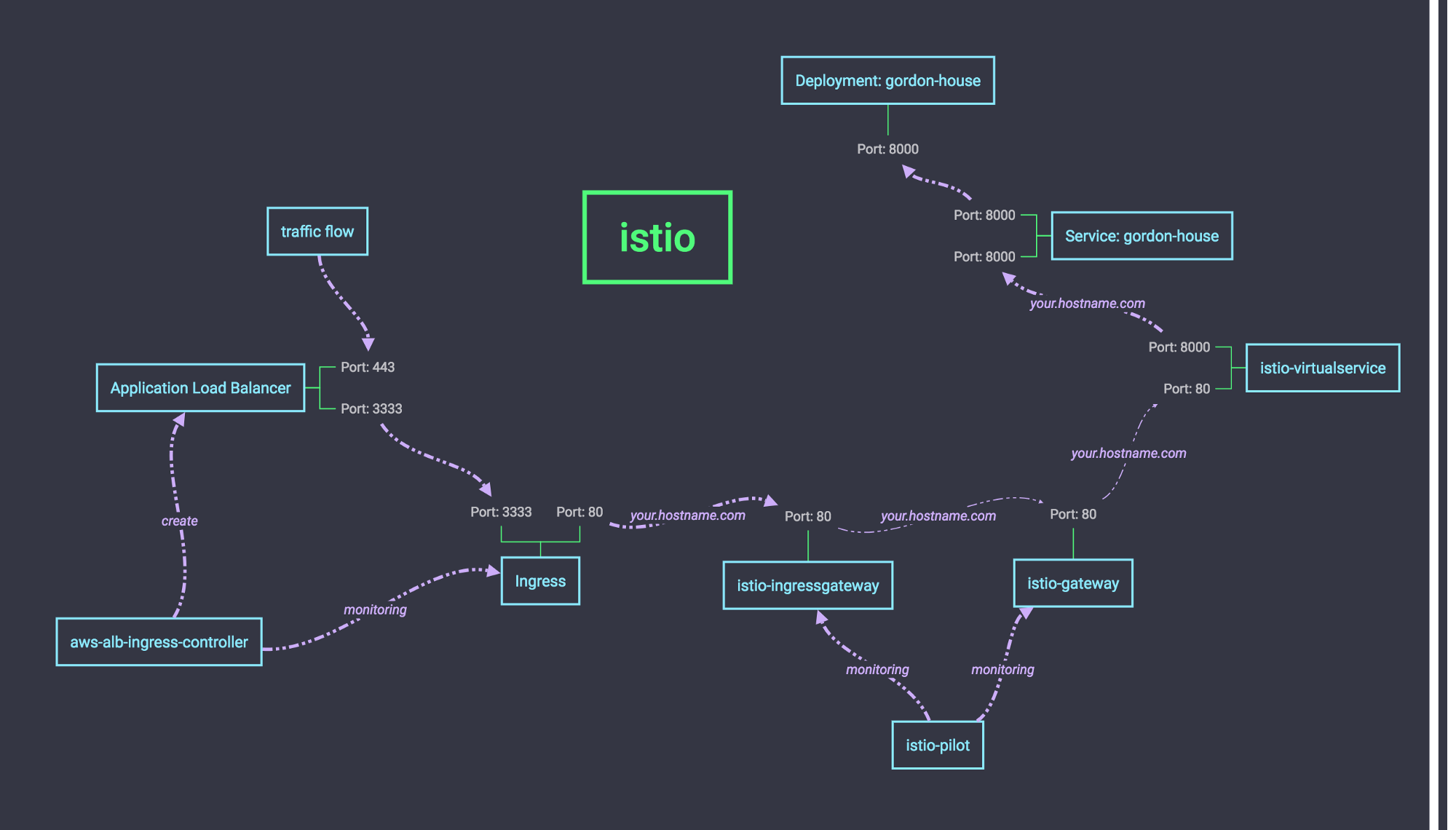

= Ingress flow from ALB to the service = | |||

This example graph shows the traffic flow from ALB -> a container | |||

:[[File:ClipCapIt-210828-101105.PNG]] | |||

= Get [https://istio.io/latest/docs/setup/install/istioctl/ info] =

</source>

Available dashboard commands

<source lang=bash>
istioctl dashboard <dashboard>
Available Commands:
  controlz    Open ControlZ web UI
  envoy       Open Envoy admin web UI
  grafana     Open Grafana web UI
  jaeger      Open Jaeger web UI
  kiali       Open Kiali web UI
  prometheus  Open Prometheus web UI
  zipkin      Open Zipkin web UI
</source>

List all Istio CRDs

<source lang=bash>
k get crd -A | grep istio | cut -f1 -d" "
adapters.config.istio.io

= IstioOperator =

Output logs of istio-operator: [[Kubernetes/Istio-logs-default-install]], captured when installing the default Istio profile with Kiali and Prometheus.

== Initialize [https://github.com/istio/istio/tree/master/operator istio.operator] ==

{{Note|This is still being worked out; see the second paragraph [[Kubernetes/Istio#Install_the_control_plane|here]].}}

<source lang=bash>
istioctl operator init # see also: dump, remove, --force
Using operator Deployment image: docker.io/istio/operator:1.7.3
✔ Istio operator installed
NAME                                      DESIRED   CURRENT   READY   AGE
replicaset.apps/istio-operator-9dc6b7fd7  1         1         1       5m42s
</source>

Istio operator (v1.7.3) related files in the release package; their roles are not entirely clear to me:

<source lang=bash>
find . -iname '*operator*'
./samples/operator
./samples/addons/extras/prometheus-operator.yaml
./manifests/charts/istio-operator # a chart to deploy the operator
./manifests/charts/istio-operator/crds/crd-operator.yaml
./manifests/charts/base/crds/crd-operator.yaml
./manifests/charts/istio-telemetry/prometheusOperator
./manifests/deploy/crds/istio_v1alpha1_istiooperator_crd.yaml
./manifests/deploy/crds/istio_v1alpha1_istiooperator_cr.yaml
./manifests/deploy/operator.yaml # this [1]
./manifests/examples/customresource/istio_v1alpha1_istiooperator_cr.yaml
</source>

* [1] <code>manifests/deploy/operator.yaml</code>
<syntaxhighlightjs lang=yaml>
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: istio-operator
  name: istio-operator
spec:
  replicas: 1
  selector:
    matchLabels:
      name: istio-operator
...
</syntaxhighlightjs>

This API is always installed:

<source lang=bash>
k -n istio-system get istiooperators.install.istio.io # kind: IstioOperator
</source>

= CRDs aka new <code>kind:</code> objects - demystified =

* [https://istio.io/latest/about/contribute/terminology/ Istio terminology]
** Prefer ''workload'' over ''service'', as the latter can be misinterpreted
* [https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/intro/terminology Envoy terminology] Host, Downstream, Upstream, Listener, Cluster, Mesh, Runtime configuration
** Cluster: A cluster is a group of logically similar upstream hosts that Envoy connects to, e.g. the group of pods of the <code>reviews</code> service v1, v2 and v3.

* [https://www.learncloudnative.com/blog/2020-01-09-deploying_multiple_gateways_with_istio/ Deploying multiple Istio Ingress Gateways]
* [https://medium.com/@anshul.prakash17/creating-an-internal-load-balancer-in-aws-eks-using-istio-3defcb51c6a2 Creating an Internal Load Balancer in AWS EKS using Istio]
* [https://stackoverflow.com/questions/62407364/how-to-set-aws-alb-instead-of-elb-in-istio how-to-set-aws-alb-instead-of-elb-in-istio]
* [https://rtfm.co.ua/en/istio-external-aws-application-loadbalancer-and-istio-ingress-gateway/ Istio: external AWS Application LoadBalancer and Istio Ingress Gateway] published on 2021-04-22

Gateways are a special type of component, since multiple ingress and egress gateways can be defined. In the IstioOperator API, gateways are defined as a list type. The default profile installs one ingress gateway, called istio-ingressgateway.

istioctl install \
  --set profile=default \
  --set components.ingressGateways[0].enabled="true" \
  --set components.ingressGateways[0].k8s.serviceAnnotations."service\.beta\.kubernetes\.io/aws-load-balancer-internal"=\"true\"

    ingressGateways:
    - enabled: true # default is true for the default profile
      name: istio-ingressgateway # required key
    egressGateways:
    - enabled: true
      name: istio-egressgateway # required key
EOF
) --dry-run
</source>

| Line 388: | Line 896: | ||

# Verify
env | grep INGRESS
</source>

== Ingressgateway Kubernetes service ==
The <code>istio-ingressgateway</code> works like any other ingress controller. It has a Service of type LoadBalancer, so the external load balancer listens on the service ports (80, 443, 15021 and 15443) and forwards traffic to these four container ports on the gateway pod:
<source>
Port   Name         Protocol
8443   https        TCP
15443  tls          TCP
15021  status-port  TCP
8080   http2        TCP
</source>
As a result, any traffic that arrives through the external load balancer is processed by an <code>istio-ingressgateway</code> pod according to that pod's configuration. This configuration is Envoy config that Pilot (istiod) builds from the Istio CRDs.

Describe the service and its endpoints
<source lang=bash>
vagrant@vagrant:~$ k -n istio-system describe svc istio-ingressgateway
Name:                     istio-ingressgateway
Namespace:                istio-system
Labels:                   app=istio-ingressgateway
...
Selector:                 app=istio-ingressgateway,istio=ingressgateway
Type:                     LoadBalancer
IP:                       10.119.253.21
LoadBalancer Ingress:     34.76.42.198
Port:                     status-port  15021/TCP
TargetPort:               15021/TCP
NodePort:                 status-port  32231/TCP
Endpoints:                10.116.19.12:15021
Port:                     http2  80/TCP
TargetPort:               8080/TCP
NodePort:                 http2  31604/TCP
Endpoints:                10.116.19.12:8080
Port:                     https  443/TCP
TargetPort:               8443/TCP
NodePort:                 https  30747/TCP
Endpoints:                10.116.19.12:8443
Port:                     tls  15443/TCP
TargetPort:               15443/TCP
NodePort:                 tls  31901/TCP
Endpoints:                10.116.19.12:15443

vagrant@vagrant:~$ k -n istio-system describe ep istio-ingressgateway
Name:         istio-ingressgateway
Namespace:    istio-system
Labels:       app=istio-ingressgateway
...
Annotations:  <none>
Subsets:
  Addresses:          10.116.19.12   # -> target: istio-ingressgateway-86f88b6f6-7mzf7
  NotReadyAddresses:  <none>
  Ports:
    Name         Port   Protocol
    ----         ----   --------
    https        8443   TCP
    tls          15443  TCP
    status-port  15021  TCP
    http2        8080   TCP
</source>
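You can reduce the <code>describe svc</code> output above to a port map that shows which service port lands on which gateway container port. This is a minimal sketch that assumes the <code>Port:</code>/<code>TargetPort:</code> line layout shown above. The helper name <code>svc_port_map</code> is hypothetical. For scripting, a <code>kubectl get svc -o jsonpath=...</code> query avoids text parsing altogether.

<source lang=bash>
# svc_port_map (hypothetical helper): reads `kubectl describe svc` text on stdin
# and prints "<port-name> <service-port> -> <target-port>" for each port entry.
svc_port_map() {
  awk '
    # "Port:  http2  80/TCP" -> remember the port name and the service port
    $1 == "Port:" { name = $2; split($3, p, "/"); port = p[1] }
    # "TargetPort:  8080/TCP" -> the container port the traffic is forwarded to
    $1 == "TargetPort:" { split($2, t, "/"); print name, port, "->", t[1] }
  '
}

# Live cluster:
#   kubectl -n istio-system describe svc istio-ingressgateway | svc_port_map
# Offline run against an excerpt of the output above, prints:
#   http2 80 -> 8080
#   https 443 -> 8443
svc_port_map <<'EOF'
Port:                     http2  80/TCP
TargetPort:               8080/TCP
Port:                     https  443/TCP
TargetPort:               8443/TCP
EOF
</source>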

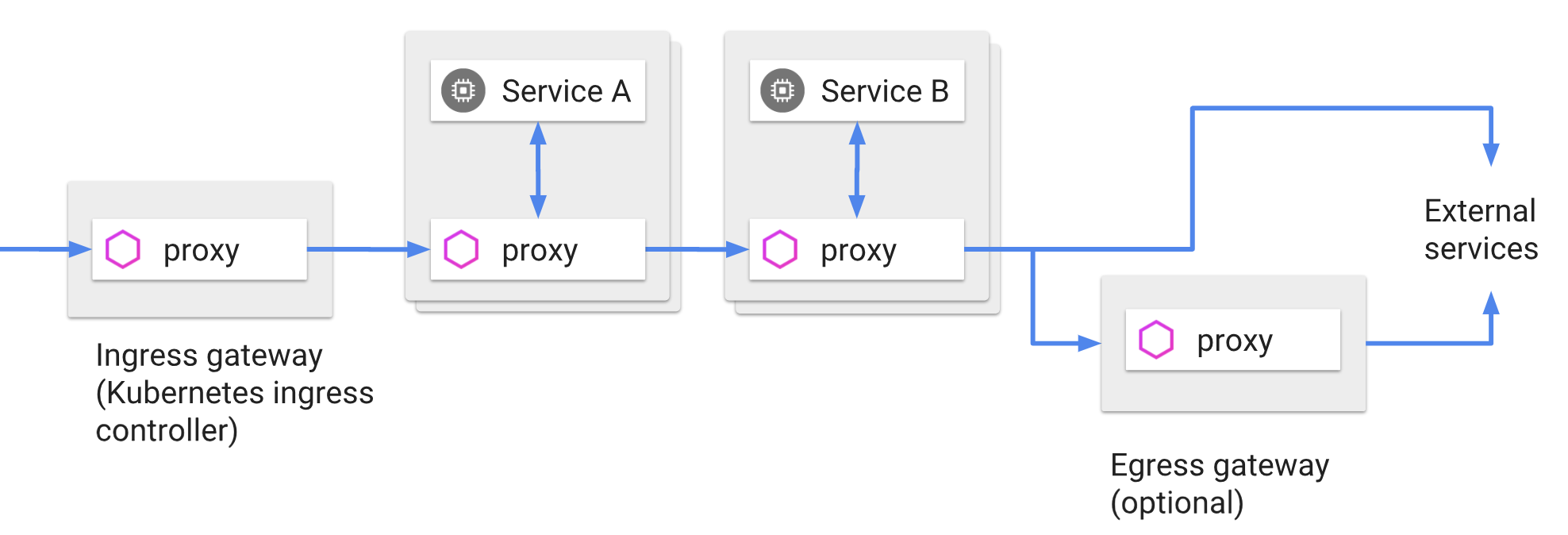

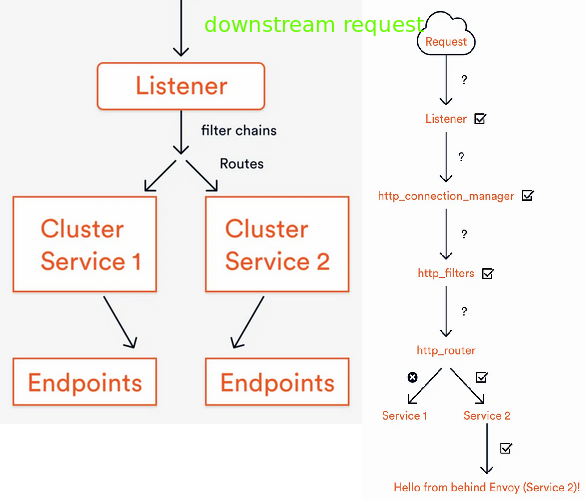

== General traffic flow ==
{{Note|This overview applies to each workload, including ingress gateways}}
The arrows in the diagram show the flow of a request through the configuration. The five key elements are the listener, filter chains, routes, clusters and endpoints. The route is part of the filter chain, which is part of the listener.
:[[File:ClipCapIt-210503-164443.PNG]]
* listener - binds to a port and listens for inbound requests. Envoy never sees or handles a request that arrives on any other port. In Envoy terms, the listener manages downstream requests.
* filter chain - once the listener has accepted a request, the filter chain describes how Envoy handles it.
* route - an extension of the filter chain. It takes the HTTP request information and directs the request to the correct cluster (service).
* cluster - a group of logically similar upstream hosts (the IP:port pairs that back a service) that Envoy connects to. Envoy learns about clusters via CDS (Cluster Discovery Service) and about the members of each cluster via EDS (Endpoint Discovery Service).
* endpoints - network nodes that implement a logical service. They are grouped into clusters. Endpoints in a cluster are upstream of an Envoy proxy.
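The toy resolver below makes the lookup order concrete. It is a conceptual sketch only, not how Envoy works internally:
* The filter-chain step is folded into the route lookup.
* The host name, cluster contents and endpoint addresses are hypothetical.
* Listener port 8080 and route name <code>http.80</code> mirror the ingress gateway listener shown in the next section.
* The cluster name follows Istio's <code>outbound|port||fqdn</code> format.

<source lang=bash>
# Toy model of Envoy's request path: listener -> route -> cluster -> endpoints.
# All names and addresses are hypothetical illustration values.

listener_route() {    # listener: a bound port, mapped to a route table
  case "$1" in
    8080) echo "http.80" ;;
    *)    return 1 ;;           # nothing listens here: Envoy never sees the request
  esac
}

route_cluster() {     # route: (route table, Host header) -> upstream cluster
  case "$1/$2" in
    http.80/reviews.example.com) echo "outbound|9080||reviews.default.svc.cluster.local" ;;
    *) return 1 ;;              # no matching route: Envoy would answer 404
  esac
}

cluster_endpoints() { # cluster: a named group of upstream hosts
  case "$1" in
    "outbound|9080||reviews.default.svc.cluster.local") echo "10.0.0.11:9080 10.0.0.12:9080" ;;
    *) return 1 ;;
  esac
}

resolve() {           # resolve <listener-port> <host>
  rt=$(listener_route "$1") || { echo "no listener on $1"; return 1; }
  cl=$(route_cluster "$rt" "$2") || { echo "no route for $2 in $rt"; return 1; }
  eps=$(cluster_endpoints "$cl") || { echo "no endpoints for $cl"; return 1; }
  echo "$1 -> $rt -> $cl -> $eps"
}

resolve 8080 reviews.example.com
</source>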

== Ingress-gateway - routes verification ==
<source lang=bash>
INGRESS_GATEWAY_LABEL=ingressgateway # ingressgateway-internal|external
INGRESS_GATEWAY_POD=$(kubectl -n istio-system get pod -l istio=$INGRESS_GATEWAY_LABEL -o jsonpath='{.items[0].metadata.name}')

# Get listeners, other options: bootstrap,cluster,endpoint,listener,log,route,secret
istioctl proxy-config listeners -n istio-system $INGRESS_GATEWAY_POD
ADDRESS  PORT   MATCH  DESTINATION
0.0.0.0  8080   ALL    Route: http.80
0.0.0.0  15021  ALL    Inline Route: /healthz/ready*
0.0.0.0  15090  ALL    Inline Route: /stats/prometheus*

# Show routes and virtual services
istioctl proxy-config routes -n istio-system $INGRESS_GATEWAY_POD
NOTE: This output only contains routes loaded via RDS.
NAME     DOMAINS                   MATCH                VIRTUAL SERVICE
http.80  alertmanager.staging.net  /*                   alertmanager.monitoring
http.80  grafana.staging.net       /*                   grafana.monitoring
http.80  prometheus.staging.net    /*                   prometheus.monitoring
         *                         /healthz/ready*
         *                         /stats/prometheus*
</source>
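To check which VirtualService serves which host on the gateway, you can filter the routes table down to domain/VirtualService pairs. This is a minimal sketch that assumes one domain per row, as in the output above. A row that lists several comma-separated domains would not match, so <code>-o json</code> is the safer input for real scripting. The helper name is hypothetical.

<source lang=bash>
# vs_by_domain (hypothetical helper): reads `istioctl proxy-config routes` output
# on stdin and prints "<domain> <virtual-service>" for RDS routes backed by a
# VirtualService. Route rows have exactly 4 columns; the NOTE line, the header
# (5 columns) and inline routes such as "* /healthz/ready*" (2 columns) are skipped.
vs_by_domain() {
  awk 'NF == 4 { print $2, $4 }'
}

# Live gateway:
#   istioctl proxy-config routes -n istio-system "$INGRESS_GATEWAY_POD" | vs_by_domain
vs_by_domain <<'EOF'
NOTE: This output only contains routes loaded via RDS.
NAME     DOMAINS                   MATCH                VIRTUAL SERVICE
http.80  grafana.staging.net       /*                   grafana.monitoring
         *                         /healthz/ready*
EOF
</source>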

== peerauthentications and requestauthentications ==
;<code>peerauthentications</code>: define which mutual TLS mode (<code>DISABLE</code>, <code>PERMISSIVE</code> or <code>STRICT</code>) a workload accepts from its peers. They are used for service-to-service (transport) authentication, verifying the client that makes the connection with mutual TLS. Authentication policies apply to requests that a service receives.
<syntaxhighlight lang="js">
apiVersion: "security.istio.io/v1beta1"
kind: "PeerAuthentication"

;<code>requestauthentications</code>: used for end-user authentication, e.g. of humans or clients outside the cluster. They verify the credentials (typically a JWT) attached to a request. On their own they do not reject requests that carry no token; an <code>AuthorizationPolicy</code> is needed for that.
<syntaxhighlight lang="js">
apiVersion: "security.istio.io/v1beta1"
kind: "RequestAuthentication"

If any <tt>AuthorizationPolicy</tt> applies to a workload, access to that workload is denied by default, unless explicitly allowed by a rule declared in the policy.
<syntaxhighlight lang="js">
# allow-all policy: full access to all workloads in the default namespace
apiVersion: security.istio.io/v1beta1
</syntaxhighlight>
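The helpers below sketch the default-deny behaviour described above. They print two manifests for a namespace:
* a namespace-wide <code>PeerAuthentication</code> that requires mTLS (<code>STRICT</code>);
* an "allow-nothing" <code>AuthorizationPolicy</code>.

An <code>AuthorizationPolicy</code> with an empty <code>spec</code> has the ALLOW action but no rules. It therefore matches nothing and denies every request to the workloads it applies to. Replacing the spec with <code>rules: [{}]</code> turns it into allow-all. The function and resource names are hypothetical. Pipe the output to <code>kubectl apply -f -</code> to use it.

<source lang=bash>
# Hypothetical helpers that print Istio security manifests for namespace $1.

# Require mutual TLS for every workload in the namespace (no selector = namespace-wide).
peer_auth_strict() {
  cat <<EOF
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: $1
spec:
  mtls:
    mode: STRICT
EOF
}

# Empty spec = ALLOW action without rules = nothing matches = every request denied.
authz_allow_nothing() {
  cat <<EOF
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: allow-nothing
  namespace: $1
spec: {}
EOF
}

# Usage (needs a cluster):
#   { peer_auth_strict default; echo '---'; authz_allow_nothing default; } | kubectl apply -f -
</source>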

= [https://istio.io/latest/docs/ops/integrations/ Addons and integrations] =
{{Note| In v1.7.x, installing the telemetry addons with istioctl is deprecated; they are now managed as addon integrations.}}

== Kiali visualisations ==
* [https://istio.io/latest/docs/tasks/observability/kiali/ Visualizing Your Mesh]

{{Note| Example needs redoing. Istio v1.6.8 requires a Kiali secret; in v1.7.x no authentication is enabled by default
<source lang=bash>
! values.kiali.enabled is deprecated; use the samples/addons/ deployments instead
EOF
)
</source>

;Install - Option 1
<source lang=bash>
cd istio-1.#.#/samples/addons
kubectl apply -f kiali.yaml
</source>

;Deprecated installation options
Option 2 - istioctl, deprecated in v1.8
<source lang=bash>
istioctl manifest install \
  --set values.kiali.enabled=true \
  --set values.prometheus.enabled=true
</source>

Option 3 - IstioOperator manifest, deprecated in v1.8. This is a desired-state configuration: defaults apply to anything that is not specified, everything that is set gets installed, and any resources that are neither defaults nor specified are pruned.

{| class="wikitable"
|+ IstioOperator manifest to install Kiali
|-
! Basic
! No auth
|- style="vertical-align:top;"
| <syntaxhighlightjs lang=bash>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
EOF
)
</syntaxhighlightjs>

| <syntaxhighlightjs lang=bash>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
  meshConfig:
    # For debugging
    accessLogFile: /dev/stdout
  addonComponents:
    # Kiali uses Prometheus to populate its dashboards
    prometheus:
      enabled: true
    kiali:
      enabled: true
      namespace: kiali
      k8s:
        podAnnotations:
          sidecar.istio.io/inject: "true"
  values:
    kiali:
      prometheusAddr: http://prometheus.istio-system:9090
      dashboard:
        auth:
          strategy: anonymous
EOF
)
</syntaxhighlightjs>
|}

Verify the installation and access the console
<source lang=bash>
kubectl wait --for=condition=Available deployment/kiali -n istio-system --timeout=300s

add:
"key2": "def"
</source>

= Handling Istio Sidecars in Kubernetes Jobs =
A Job/CronJob is not considered complete until all of its containers have stopped running. The Istio sidecar runs indefinitely, so the Job never completes on its own.

Option 1: Disable Istio sidecar injection for the Job's Pod template
<source lang=yaml>
spec:
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: 'false'
</source>

Option 2: Use <code>pkill</code> to stop the Istio process

Add <code>pkill -f /usr/local/bin/pilot-agent</code> to the container command (e.g. the Dockerfile command) and set <code>shareProcessNamespace: true</code> in the Pod spec (not the Job spec).

Istio 1.3 and core k8s support (unverified): at the end of the job script, ask the sidecar to exit through pilot-agent's <code>/quitquitquit</code> endpoint on port 15020
<source lang=bash>
# <job shell script, i.e. the container command>
curl -fsS -X POST http://127.0.0.1:15020/quitquitquit
</source>
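A common refinement is to wrap the job command so that the sidecar is told to quit even when the job fails, while the Job still reports the command's real exit status. This is a minimal sketch. It assumes pilot-agent serves <code>/quitquitquit</code> on its status port 15020, as in the command above, and the wrapper name and example script path are hypothetical.

<source lang=bash>
# run_then_quit_sidecar (hypothetical wrapper): runs the Job's real command,
# asks the Istio sidecar (pilot-agent) to shut down so the Pod can complete,
# and returns the command's own exit status.
# QUIT_URL default is an assumption: pilot-agent's /quitquitquit on port 15020.
run_then_quit_sidecar() {
  rc=0
  "$@" || rc=$?
  # Best effort: a missing sidecar (or missing curl) must not mask the job result.
  curl -fsS --max-time 10 -X POST "${QUIT_URL:-http://127.0.0.1:15020/quitquitquit}" >/dev/null 2>&1 || true
  return "$rc"
}

# Example container command (script path is hypothetical):
#   run_then_quit_sidecar /app/run-migration.sh
</source>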

= [https://istio.io/latest/docs/ops/diagnostic-tools/ Debugging] =
* [https://istio.io/latest/docs/ops/diagnostic-tools/proxy-cmd/ Debugging Envoy and Istiod]

Analyze
<source lang=bash>
istioctl analyze --all-namespaces
istioctl analyze samples/bookinfo/networking/*.yaml
istioctl analyze --use-kube=false samples/bookinfo/networking/*.yaml

# Enable the live analyzer; it adds messages to the resource status field, e.g. status.validationMessages.type.code: IST0101
istioctl install --set values.global.istiod.enableAnalysis=true
</source>

Debug a single pod or a deployment's pods
<syntaxhighlight lang="yaml">
apiVersion: v1
kind: Namespace
metadata:
  name: redis
  labels:
    istio.io/rev: asm-173-6
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: debug-pod
  namespace: redis
  labels:
    app: debug-pod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: debug-pod
  template:
    metadata:
      labels:
        app: debug-pod
      annotations:
        sidecar.istio.io/logLevel: debug # <- enable debug mode
    spec:
      containers:
      - image: samos123/docker-toolbox
        name: debug-pod
</syntaxhighlight>

istioctl debug commands
<source lang=bash>
istioctl experimental describe pod <pod-name>[.<namespace>]
istioctl proxy-status
# | SYNCED means that Envoy has acknowledged the last configuration Istiod has sent to it.
# | NOT SENT means that Istiod hasn't sent anything to Envoy. This usually is because Istiod has nothing to send.
# | STALE means that Istiod has sent an update to Envoy but has not received an acknowledgement. This usually indicates a networking issue between Envoy and Istiod or a bug with Istio itself.

# Retrieve information about cluster configuration for the Envoy instance in a specific pod:
istioctl proxy-config cluster <pod-name> [flags]
# Retrieve information about bootstrap configuration for the Envoy instance in a specific pod:
istioctl proxy-config bootstrap <pod-name> [flags]
# Retrieve information about listener configuration for the Envoy instance in a specific pod:
istioctl proxy-config listener <pod-name> [flags]
# Retrieve information about route configuration for the Envoy instance in a specific pod:
istioctl proxy-config route <pod-name> [flags]
# Retrieve information about endpoint configuration for the Envoy instance in a specific pod:
istioctl proxy-config endpoints <pod-name> [flags]
istioctl proxy-config endpoints productpage-v1-6c886ff494-7vxhs --cluster "outbound|9080||reviews.default.svc.cluster.local"
</source>
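With many proxies in the mesh, it helps to list only the ones that are not fully <code>SYNCED</code>. This is a minimal sketch that assumes the column layout of the 1.9 <code>istioctl proxy-status</code> output shown on this page (NAME, CDS, LDS, EDS, RDS, ISTIOD, VERSION). Other istioctl releases may add columns, so check the field numbers against your version. The helper name is hypothetical.

<source lang=bash>
# not_synced (hypothetical helper): reads `istioctl proxy-status` output on stdin
# and prints every proxy row whose CDS/LDS/EDS/RDS columns are not all SYNCED.
# "NOT SENT" splits into two fields, which still makes at least one of
# columns 2-5 differ from SYNCED, so such rows are reported too.
# Exit status: 1 if any unsynced proxy was found, 0 otherwise.
not_synced() {
  awk '
    NR == 1 { next }    # skip the header row
    !($2 == "SYNCED" && $3 == "SYNCED" && $4 == "SYNCED" && $5 == "SYNCED") {
      print; found = 1
    }
    END { exit found }
  '
}

# Usage:
#   istioctl proxy-status | not_synced || echo "some proxies are not in sync"
</source>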

= [https://github.com/salesforce/helm-starter-istio Istio helm starter] =
A Salesforce project that scaffolds Istio-enabled Helm charts using the helm-starter plugin.
<source lang=bash>
# Install the `helm-starter` helm plugin
helm plugin install https://github.com/salesforce/helm-starter.git

# Fetch the starter templates: mesh-service, ingress-service, mesh-egress, auth-policy
helm starter fetch https://github.com/salesforce/helm-starter-istio.git

# Create a helm chart from a starter
helm create NAME --starter helm-starter-istio/[starter-name]

# e.g. create one chart per starter
for starter in mesh-service ingress-service mesh-egress auth-policy; do
  helm create authservice-$starter --starter helm-starter-istio/$starter
done
</source>

== Important labels ==
Deployment
<syntaxhighlightjs lang="yaml">
apiVersion: apps/v1
kind: Deployment
spec:
  selector:
    matchLabels:
      app: {{ .Values.service | lower | quote }} # <- Istio label
      version: {{ .Values.version | quote }}     # <- Istio label
  template:
    metadata:
      labels:
        # Kubernetes recommended labels
        app.kubernetes.io/name: {{ .Values.service | lower | quote }}
        app.kubernetes.io/part-of: {{ .Values.system | quote }}
        app.kubernetes.io/version: {{ .Values.version | quote }}
        # Istio required labels
        app: {{ .Values.service | lower | quote }} # <- Istio label
        version: {{ .Values.version | quote }}     # <- Istio label
      {{- if .Values.configMap }}
      annotations:
        checksum/config: {{ include (print $.Template.BasePath "/configmap.yaml") . | sha256sum }}
      {{- end }}
    spec:
      containers:
      - name: {{ .Values.service | lower | quote }}
        # image, resources etc. omitted
        ports:
        {{- range .Values.ports }}
        - name: {{ .name | quote }}
          containerPort: {{ .targetPort }}
          protocol: TCP # <- Kubernetes only accepts TCP|UDP|SCTP here; Istio takes HTTP/gRPC etc. from the Service port name
        {{- end }}
</syntaxhighlightjs>

Service
<syntaxhighlightjs lang="yaml">
apiVersion: v1
kind: Service
spec:
  ports:
  {{- range .Values.ports }}
  - name: {{ .name | quote }} # <- <protocol>[-<suffix>], e.g. tcp-<name>, http-<name>, grpc-<name>
    port: {{ .port }}
    targetPort: {{ .targetPort }}
    protocol: TCP # <- Kubernetes accepts TCP|UDP|SCTP; Istio reads the L7 protocol from the port name prefix
  {{- end }}
</syntaxhighlightjs>
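Istio selects the protocol of a Service port from its name, which should follow the <code><protocol>[-<suffix>]</code> pattern. The check below is a minimal sketch of that convention. Its protocol list (grpc, grpc-web, http, http2, https, mongo, mysql, redis, tcp, tls, udp) follows Istio's protocol selection docs, so verify it against your Istio version. Only lower-case names are accepted, and the helper name is hypothetical. Istio leaves ports that don't match to protocol auto-detection or treats them as plain TCP.

<source lang=bash>
# istio_port_name_ok (hypothetical helper): succeeds if a Service port name
# follows Istio's "<protocol>[-<suffix>]" convention, e.g. http-web, grpc, tcp-db.
# grpc-web is covered by the grpc-* pattern.
istio_port_name_ok() {
  case "$1" in
    grpc|grpc-*|http|http-*|http2|http2-*|https|https-*) return 0 ;;
    mongo|mongo-*|mysql|mysql-*|redis|redis-*)           return 0 ;;
    tcp|tcp-*|tls|tls-*|udp|udp-*)                       return 0 ;;
    *)                                                   return 1 ;;
  esac
}

# Check a few names (a typo such as "grcp-api" is caught):
for name in http-web grpc tcp-db grcp-api web; do
  if istio_port_name_ok "$name"; then echo "$name: ok"; else echo "$name: no protocol prefix"; fi
done
</source>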

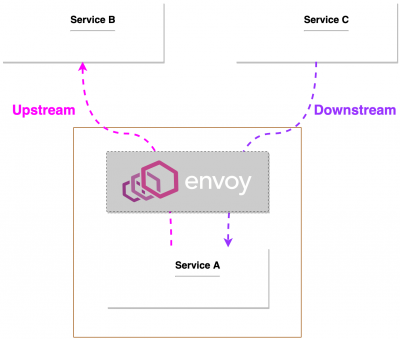

= Envoy =
* Upstream connections are the connections Envoy initiates to a service.
* Downstream connections are the connections a client initiates to Envoy to send a request through it.
:[[File:ClipCapIt-210525-080849.PNG|none|left|400px]]
Terminology in my own words:
;Listeners: Declaration of the ports to open on the proxy and listen on for incoming connections
;Clusters: Backend services to which traffic can be routed; more precisely, a group of logically similar upstream hosts that Envoy connects to

;Envoy discovery (xDS)
Envoy is driven by a set of APIs that configure certain aspects of the proxy. Clusters, listeners and routes can be defined statically in the configuration file, but they can also be configured dynamically over Envoy's xDS APIs:
* Listener discovery service (LDS)
* Route discovery service (RDS)
* Cluster discovery service (CDS)
* Endpoint discovery service (EDS), called service discovery service (SDS) in Envoy's legacy v1 API
* Aggregated discovery service (ADS)
With LDS, listeners don't have to be specified ahead of time; they can be opened or closed at runtime. Below is an example of Envoy config that receives its listeners dynamically over gRPC from the <code>xds_cluster</code> set in <code>api_config_source</code>.

<syntaxhighlightjs lang="yaml">
dynamic_resources:
  lds_config:
    api_config_source:
      api_type: GRPC
      grpc_services:
        - envoy_grpc:
            cluster_name: xds_cluster
</syntaxhighlightjs>
Configuring Envoy through this API is exactly what Istio's control plane does. With Istio, we specify configuration in a more user-friendly format. Istio translates that configuration into something Envoy understands and delivers it through Envoy's xDS API.

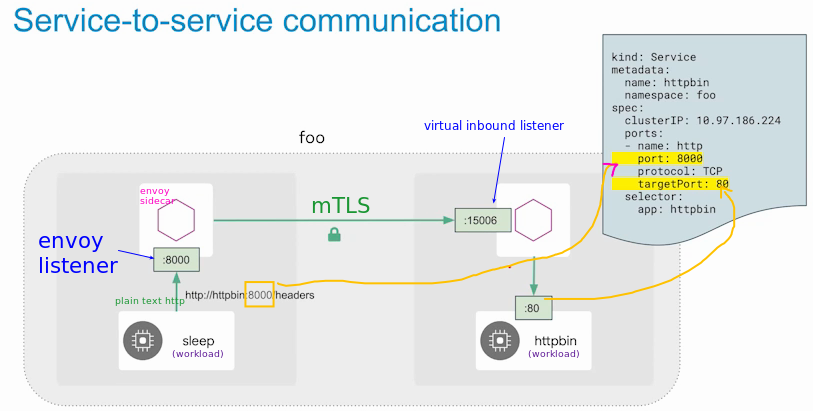

== Service-to-service communication ==
:[[File:ClipCapIt-210324-180344.PNG]]
This zooms in on an inbound connection from the <code>sleep</code> pod to the <code>http</code> pod.
[[File:istio-envoy-2.jpg|left|Istio]]
.

== Troubleshooting ==
<source lang=bash>
# Interrogate the Envoy stats endpoint; the admin API listens on localhost:15000 inside the pod
kubectl exec deploy/sleep -- curl -s http://localhost:15000/stats | grep retry
</source>
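Most Envoy counters are zero, so you can filter the <code>/stats</code> dump down to the entries that actually moved. This is a minimal sketch that assumes the plain-text <code>name: value</code> format of the admin <code>/stats</code> endpoint. Histogram lines, whose values are not plain integers, are skipped. The helper name is hypothetical.

<source lang=bash>
# nonzero_stats (hypothetical helper): reads Envoy admin /stats text
# ("<stat name>: <value>" per line) on stdin and prints only entries whose
# value is a positive integer, optionally restricted to names containing $1.
nonzero_stats() {
  awk -v pat="${1:-}" '
    (pat == "" || index($1, pat) > 0) && $NF ~ /^[0-9]+$/ && $NF + 0 > 0
  '
}

# Usage (the app container shares the pod network namespace with the sidecar):
#   kubectl exec deploy/sleep -- curl -s http://localhost:15000/stats | nonzero_stats retry
</source>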

= Troubleshooting istio =
Dump a workload's whole Envoy configuration (new in v1.10) and paste it into the https://envoyui.solo.io/ analyzer
<source lang=bash>
istioctl proxy-config all deploy/sleep -n default -o json > sleep.deploy.all-config_dump.json
</source>
Check istiod endpoints and get the service registry
<source lang=bash>
# Get all services in the Istio registry; workloads are also included even if they are not officially part of the mesh
kubectl exec -n istio-system -it deploy/istiod-1-8-4 -- pilot-discovery request GET /debug/registryz
kubectl exec -n istio-system -it deploy/istiod-1-8-4 -- pilot-discovery version # [discovery,help,request,version]
</source>

Debug info from the istiod control plane
<source lang=bash>
# istiod serves debug endpoints on istiod:8080/debug; 'istioctl x internal-debug' reaches them over a secure channel
## curl the /debug endpoint (keep the port-forward running in a separate terminal)
kubectl -n istio-system port-forward deploy/istiod 8080
firefox http://127.0.0.1:8080/debug       # all debug endpoints
firefox http://127.0.0.1:8080/debug/mesh  # show mesh config
## internal-debug (not working)
istioctl x internal-debug syncz
</source>

Verify the iptables rules set up for an app pod's <code>istio-proxy</code> sidecar. These rules live in the pod's network (kernel) namespace.
<source lang=bash>
# List iptables rules - this should fail
kubectl -n default exec -it deploy/httpbin -c istio-proxy -- sudo iptables -L -t nat
sudo: effective uid is not 0, is /usr/bin/sudo on a file system with the 'nosuid' option set or an NFS file system without root privileges?

# Grant the proxy sidecar privilege escalation
kubectl edit deploy/httpbin -n default
      containers:
      - name: istio-proxy
        image: docker.io/istio/proxyv2:1.8.4
        securityContext:
          allowPrivilegeEscalation: true # by default it's false
          privileged: true               # by default it's false

# Show iptables - 15006 (incoming) and 15001 (outgoing) traffic interception
kubectl -n default exec -it deploy/httpbin -c istio-proxy -- sudo iptables -L -t nat | grep -e ISTIO_IN_REDIRECT -e 15006 -e ISTIO_REDIRECT -e 15001
ISTIO_IN_REDIRECT  tcp  --  anywhere  anywhere
Chain ISTIO_IN_REDIRECT (3 references)
REDIRECT           tcp  --  anywhere  anywhere    redir ports 15006
ISTIO_IN_REDIRECT  all  --  anywhere  !localhost  owner UID match istio-proxy
ISTIO_IN_REDIRECT  all  --  anywhere  !localhost  owner GID match istio-proxy
ISTIO_REDIRECT     all  --  anywhere  anywhere
Chain ISTIO_REDIRECT (1 references)
REDIRECT           tcp  --  anywhere  anywhere    redir ports 15001
</source>

Here iptables redirects incoming and outgoing traffic to Istio's data plane proxy. Incoming traffic goes to port <code>15006</code> of the Istio proxy, while outgoing traffic goes through <code>15001</code>. Checking the Envoy listeners on those ports shows exactly how the traffic is handled.
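You can also pull the redirect targets out of the <code>iptables -L -t nat</code> listing instead of grepping for known port numbers. This is a minimal sketch that assumes the listing format shown above, with <code>Chain</code> header lines and <code>REDIRECT ... redir ports N</code> rules. The helper name is hypothetical. If you lack the privileges to run iptables in the pod, <code>istioctl proxy-config listener</code> with <code>--port 15006</code> or <code>--port 15001</code> shows the Envoy side of the same interception.

<source lang=bash>
# redirect_ports (hypothetical helper): reads `iptables -L -t nat` output on stdin
# and prints "<chain> <port>" for each REDIRECT rule, i.e. where intercepted
# traffic is sent (Istio: 15006 inbound, 15001 outbound).
redirect_ports() {
  awk '
    $1 == "Chain"                          { chain = $2 }
    $1 == "REDIRECT" && $(NF-1) == "ports" { print chain, $NF }
  '
}

# Usage:
#   kubectl -n default exec deploy/httpbin -c istio-proxy -- sudo iptables -L -t nat | redirect_ports
</source>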

;IngressGateway
Query the gateway configuration to get all routes
<source lang=bash>
istioctl proxy-config routes deploy/istio-ingressgateway.istio-system
NOTE: This output only contains routes loaded via RDS.
NAME     DOMAINS      MATCH                VIRTUAL SERVICE
http.80  example.com  /*                   web-api-gw-vs.default
         *            /stats/prometheus*
         *            /healthz/ready*

# Show details of a single route
istioctl proxy-config routes deploy/istio-ingressgateway.istio-system --name http.80 -o json
</source>

* [https://www.youtube.com/watch?v=zVlepkF-hJc Istio Architecture Part 3 - The Control Plane]
* [https://www.youtube.com/watch?v=BlQryF29L3Q Istio Architecture Part 4 - Going Deeper into Envoy]
* [https://getistio.io/istio-cheatsheet/ istio-cheatsheet] by Tetrate A+++

Istio v1.7
* [https://www.youtube.com/watch?v=HZonKiu7onk VM <-> K8s mesh] demo at 30 min

Semi-related
* [https://github.com/csantanapr/knative-kind Knative on Kind (KonK)]

Related technology
* [https://developers.google.com/web/fundamentals/performance/http2 Introduction to HTTP/2] HTTP/2 is used by Envoy internally

Tutorials and demos:
* Upgrading Istio without downtime, from 1.9.7 to 1.10.3
** [https://www.solo.io/blog/upgrading-istio-without-downtime/ upgrading-istio-without-downtime] Blog
** [https://github.com/nmnellis/istio-upgrade-demo istio-upgrade-demo] GitHub
* [https://dzone.com/articles/safely-upgrade-the-istio-control-plane-with-revisi?oid&utm_medium=email&_hsmi=148688354&_hsenc=p2ANqtz-8dBVjZA0BJoWbS4e-Guer014AS5F2-5lgkBH63X87QKdnr5N4XB7d4AmRHjjWXDO7NfmsE4hVgOG-y-RLAtNawPzLvQbEXuVEfsRJVcAadDTxrxJ8&utm_content=148687162&utm_source=hs_email# Safely Upgrade the Istio Control Plane With Revisions and Tags] 05-Aug-2021

Investigate TLS:
* [https://redhat-scholars.github.io/istio-tutorial/istio-tutorial/1.6.x/8mTLS.html Mutual TLS and Istio] inspect with tcpdump and ksniff
* [https://www.programmersought.com/article/35504882940/ Use ksniff and Wireshark to verify service mesh TLS in Kubernetes]

Solutions:
* [https://dev.to/peterj/what-are-sticky-sessions-and-how-to-configure-them-with-istio-1e1a What are sticky sessions and how to configure them with Istio?]
* [https://medium.com/@cy.chiang/how-to-integrate-aws-alb-with-istio-v1-0-b17e07cae156 integrate AWS ALB with istio] 2018

Latest revision as of 10:20, 28 August 2021

Architecture Istio ~v1.7+

<source>
Namespace: <app namespace>
  | app1  |              | app2  |   # main container
  | proxy | <----------> | proxy |   # Data Plane (all Envoy sidecar proxies)
  | pod   |              | pod   |

Namespace: istio-system
  | |citadel|  |mixer|  |pilot|  |
  | |  pod  |  | pod |  | pod |  |
  |  C o n t r o l   P l a n e   A P I  |
  ----------------------------------------
</source>

Note: All proxies are collectively named Data Plane and everything else that Istio deployed is called Control Plane

Note: The term proxy means someone who has the authority to represent someone else. In software, proxy components are invisible to clients.

Security Architecture

Pod networking changed in v1.10 to match default Kubernetes behaviour

<source lang=bash>
service/istiod:
15010 - grpc-xds
15012 - https-dns
15014 - http-monitoring
443   - https-webhook, container port 15017
</source>

Istio components

- Istio-telemetry

- Istio-pilot

- Istio-tracing

{| class="wikitable"
! Envoy L7 proxy !! Pilot !! Citadel !! Mixer [deprecated] !! Galley
|- style="vertical-align:top;"
|
| Converts Istio configuration into a format that Envoy can understand. It is aware of pod health and of which pods are available, and sends configuration updates to the proxies of the pods that are alive.
| Manages certificates and allows TLS/SSL to be enabled across the entire cluster. It is the certificate store.
| Has a lot of modules/plugins. Pods: <code>istio-policy-*</code>, <code>istio-telemetry-*</code>
| Interface for the underlying Istio API gateway (aka server). It reads in Kubernetes YAML and transforms it into the internal structure Istio understands.
|}

Istio UI components:

- grafana:3000 - dashboards

- kiali:31000 - visualisation, tells what services are part of istio, how are they connected and performing

- jaeger:31001 - tracing

- Noticeable changes

- Istio 1.6 completed the transition of moving all functionality into Istiod. This allowed the separate deployments for Citadel, the sidecar injector and Galley to be removed.

Istio on minikube

<source lang=bash>
# Minimum requirements are 8G and 4 CPUs
K8SVERSION=v1.18.9
PROFILE=minikube-$K8SVERSION-istio
minikube profile $PROFILE # set default profile
minikube start --kubernetes-version=$K8SVERSION
minikube start --memory=8192 --cpus=4 --kubernetes-version=$K8SVERSION --driver docker # or another supported driver
minikube start --memory=8192 --cpus=4 --kubernetes-version=$K8SVERSION --driver kvm2
minikube tunnel # in a separate terminal, for LoadBalancer services
minikube addons enable istio # [1] error
</source>

Troubleshooting

- [1] - no matches for kind "IstioOperator"

<source>
💣 enable failed: run callbacks: running callbacks: [sudo KUBECONFIG=/var/lib/minikube/kubeconfig /var/lib/minikube/binaries/v1.17.6/kubectl apply -f /etc/kubernetes/addons/istio-default-profile.yaml: Process exited with status 1
stdout:
namespace/istio-system unchanged
stderr:
error: unable to recognize "/etc/kubernetes/addons/istio-default-profile.yaml": no matches for kind "IstioOperator" in version "install.istio.io/v1alpha1"
</source>

Install bookinfo example app.

Install

istioctl cli

The <code>curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.10.0 sh -</code> command only downloads the Istio release into its own directory. It does not install anything.

<source lang=bash>
# Option_1 - the official approach
curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.10.0 sh -
cd istio-1.10.0/ # istio package directory
export PATH=$PWD/bin:$PATH

# Option_2
export ISTIO_VERSION=1.10.0
curl -L https://istio.io/downloadIstio | sh -
export PATH=$PWD/istio-$ISTIO_VERSION/bin:$PATH
export ISTIO_INSTALL_DIR=$PWD/istio-$ISTIO_VERSION

# Check version
istioctl version --remote
client version: 1.9.1
control plane version: 1.9.1
data plane version: 1.9.1 (7 proxies)

# Pre-flight check
istioctl x precheck

# Verify the install
istioctl verify-install
...
CustomResourceDefinition: templates.config.istio.io.default checked successfully
CustomResourceDefinition: istiooperators.install.istio.io.default checked successfully
Checked 25 custom resource definitions
Checked 1 Istio Deployments
Istio is installed successfully

# Verify mesh coverage and the config status
istioctl proxy-status
NAME                                               CDS     LDS     EDS     RDS     ISTIOD                   VERSION
details-v1-5974b67c8-xbp8l.default                 SYNCED  SYNCED  SYNCED  SYNCED  istiod-5c6b7b5b8f-9npdz  1.9.1
istio-ingressgateway-5689f7c67-gvrh8.istio-system  SYNCED  SYNCED  SYNCED  SYNCED  istiod-5c6b7b5b8f-9npdz  1.9.1
...

# Analyse the mesh configuration
istioctl analyze --all-namespaces
</source>
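Before upgrading, it is worth confirming that the client, control plane and data plane all report the same version. This is a minimal sketch that parses the <code>istioctl version --remote</code> output above. It assumes that a data plane running several versions is reported as a comma-separated list such as <code>1.9.1 (5 proxies), 1.8.4 (2 proxies)</code>, so check this against your istioctl release. The helper name is hypothetical.

<source lang=bash>
# version_skew (hypothetical helper): reads `istioctl version --remote` output
# on stdin and reports whether all components run the same Istio version.
# Exit status: 0 = no skew, 1 = skew detected, 2 = no version lines found.
version_skew() {
  awk -F': ' '
    /version:/ {
      n++
      # "1.9.1 (7 proxies)" or "1.9.1 (5 proxies), 1.8.4 (2 proxies)"
      m = split($2, parts, ", ")
      for (i = 1; i <= m; i++) { split(parts[i], v, " "); seen[v[1]] = 1 }
    }
    END {
      if (n == 0) { print "no versions found"; exit 2 }
      k = 0
      for (x in seen) k++
      if (k == 1) { print "all components on " v[1]; exit 0 }
      print "version skew detected"
      exit 1
    }
  '
}

# Usage:
#   istioctl version --remote | version_skew
</source>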

control plane

- Install with Istioctl - recommended

- Istio Operator Install

- Install with Helm

As the project grew more complex at the expense of user friendliness, the Istio maintainers kept supporting Helm-manifest-based configuration, although there is a clear movement towards the operator pattern. See below for the differences; v1.6 and v1.7 still support both methods.

There are a few operational modes to configure the control plane:

- istio operator
  - install - <code>istioctl install</code> with <code>--set</code> or <code>-f istio-overlay.yaml</code>; the settings are overlaid on top of a chosen profile
  - reconfigure - <code>istioctl manifest apply -f istio-install.yaml --dry-run</code>
  - <code>istioctl operator init</code> installs the operator pod <code>istio-operator</code>. Then <code>kubectl apply -f istio-overlay.yaml</code> triggers the operator to sync the changes. Manually changing the <code>istio-system/istiooperators.install.istio.io/installed-state</code> object also triggers an operator event that syncs the config and reconfigures the control plane.
- [deprecated]
  - install - <code>istioctl manifest install</code> with <code>--set</code> or <code>-f <manifests.yaml></code>

Install

<source lang=bash>
# Tested with 1.7.3 [deprecated method, via helm charts]; --set values are prefixed with 'values.'
istioctl manifest install --skip-confirmation --set profile=default \
  --set values.kiali.enabled=true \
  --set values.prometheus.enabled=true \
  --dry-run

# Tested with 1.8.2 [istio.operator via 'istioctl' cli]
istioctl x precheck
istioctl install --skip-confirmation --set profile=default --dry-run # via operator
istioctl upgrade --skip-confirmation --set profile=default --dry-run # via operator
</source>

{| class="wikitable"
! Basic
! +Kiali,Prometheus
! +ServiceEntry, mesh traffic only
! +egress
|- style="vertical-align:top;"
| <syntaxhighlightjs lang=yaml>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
EOF
)
</syntaxhighlightjs>
| <syntaxhighlightjs lang=yaml>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
  values:
    kiali:
      enabled: true
    prometheus:
      enabled: true
EOF
)
</syntaxhighlightjs>
| <syntaxhighlightjs lang=yaml>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
  values:
    kiali:
      enabled: true
    # Kiali uses Prometheus to populate its dashboards
    prometheus:
      enabled: true
  meshConfig:
    # debugging
    accessLogFile: /dev/stdout
    outboundTrafficPolicy:
      mode: REGISTRY_ONLY
EOF
)
</syntaxhighlightjs>
| <syntaxhighlightjs lang=yaml>
istioctl manifest install -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
  components:
    egressGateways:
    - enabled: true
      name: istio-egressgateway
  values:
    kiali:
      enabled: true
    prometheus:
      enabled: true
  meshConfig:
    accessLogFile: /dev/stdout
    outboundTrafficPolicy:
      mode: REGISTRY_ONLY
EOF
)
</syntaxhighlightjs>
|}

- Uninstall

Uninstall v1.6.8. Errors about RBAC resources that don't exist are safe to ignore.

<source lang=bash>
istioctl manifest generate --set profile=default | kubectl delete --ignore-not-found=true -f -
kubectl delete namespace istio-system
</source>

Uninstall v1.7.x - view logs

<source lang=bash>
# Removes istio-system resources and istio-operator
istioctl x uninstall --purge # cmd: experimental, aliases: experimental, x, exp
✔ Uninstall complete
</source>

Install - day 2 operation

Note: Update 2021-06-01 the guide focus on Istio v1.10

This should be the preferred method for day 2 operations: a canary upgrade, where the control plane is installed with revisions in the istio-system namespace and the ingress gateway component(s) are installed separately in another namespace, e.g. istio-ingress.

- Pre-checks

<source lang=bash>
istioctl experimental precheck
istioctl analyze -A

# Optional labels for data plane operations
## Automatic sidecar proxy injection
kubectl label namespace default istio-injection=enabled               # enable
kubectl label namespace default istio-injection=disabled --overwrite  # disable
kubectl label namespace default istio.io/rev=1-10-0                   # revision label

## Discovery namespace selectors (v1.10+)
## Warning: the labels must exist BEFORE enabling this feature, otherwise you may break
## what the control plane can watch from the api-server.
# Ingress gateway namespaces also need to be labelled (if in a different namespace than the control plane),
# otherwise traffic won't be able to flow from the ingress gateway to our services
kubectl label namespace istio-ingress istio-discovery=enabled
kubectl label namespace default istio-discovery=enabled
</source>
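Because the labels must exist before discoverySelectors is enabled, a quick check can list namespaces that are still missing the label. This is a sketch with a hypothetical function `missing_discovery_label`. It assumes the single `istio-discovery: enabled` matchLabels selector from the discoverySelectors example below and the default `kubectl get namespace --show-labels` layout (LABELS is the last column, comma-separated, `<none>` when empty).

```shell
# Hypothetical pre-check: reads `kubectl get namespace --show-labels` output on
# stdin and prints every namespace WITHOUT the istio-discovery=enabled label.
missing_discovery_label() {
  awk 'NR > 1 {
    n = split($NF, labels, ",")       # LABELS column, comma-separated
    found = 0
    for (i = 1; i <= n; i++)
      if (labels[i] == "istio-discovery=enabled") found = 1
    if (!found) print $1              # namespace name
  }'
}
# Usage:
#   kubectl get namespace --show-labels | missing_discovery_label
```

Any namespace this prints would not be watched by istiod once that selector is enabled.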

Install control-plane

- Safely upgrade the Istio control plane with revisions and tags

- Stable revision labels (experimental)

Note: When installing v1.9.x, first install without a revision (e.g. REVISION=""), because a revision-based upgrade expects a default revision to be set.

Note: Due to a bug in the creation of the ValidatingWebhookConfiguration during install, initial installations of Istio must not specify a revision. A workaround is to run the command below, where <REVISION> is the name of the revision that should handle validation. This command creates an istiod service pointing to the target revision.

kubectl get service -n istio-system -o json istiod-<REVISION> | jq '.metadata.name = "istiod" | del(.spec.clusterIP) | del(.spec.clusterIPs)' | kubectl apply -f -

Note: The difference between the install and upgrade operations is fuzzy. Upgrade upgrades components safely and shows the diff between the profile and what is being configured. On the other hand, for some features, e.g. discoverySelectors, only install applies the config; upgrade does not seem to have any effect.

<source lang=bash>
REVISION=""                     # don't use revisions
REVISION='--revision 1-10-0'    # or: install as revision 1-10-0
istioctl install -y -n istio-system $REVISION -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: control-plane
  #namespace: istio-system # it's the default
spec:
  profile: minimal # installs only the control plane
  hub: gcr.io/istio-release
  components:
    pilot:
      k8s:
        hpaSpec:
          minReplicas: 2
        env:
          - name: PILOT_FILTER_GATEWAY_CLUSTER_CONFIG # reduce Gateway config to services that have routing rules defined
            value: "true"
  meshConfig:
    defaultConfig:
      proxyMetadata:
        # https://preliminary.istio.io/latest/blog/2020/dns-proxy/
        # curl localhost:8080/debug/nsdz?proxyID=<POD-NAME>
        # dig @localhost -p 15053 postgres-stolon-proxy.sample.svc
        ISTIO_META_DNS_CAPTURE: "true"
    enablePrometheusMerge: true
    #discoverySelectors:      # new in v1.10, choose which namespaces istiod should watch (from the api-server)
    #  - matchLabels:         # | used for building the internal mesh registry from services, endpoints, deployments
    #                         # | dynamically restricts the set of namespaces that are part of the mesh
    #      istio-discovery: enabled # <- example label
    #      #env: prod
    #      #region: us-east-1
    #  - matchExpressions:
    #      - key: app
    #        operator: In
    #        values:
    #          - cassandra
    #          - spark
EOF
) --dry-run
</source>

- Verify whether any clients are connected to Istio v1.10.0

<source lang=bash>
kubectl create deployment sleep --image=curlimages/curl -- /bin/sleep 3650d
kubectl exec -it deploy/sleep -- curl istiod-1-10-0.istio-system:15014/debug/connections | jq .
{
  "totalClients": 0,
  "clients": null
}

# example of output when client(s) are connected
{
  "totalClients": 1,
  "clients": [
    {
      "connectionId": "sidecar~172.17.0.8~sleep-76f59b4b9c-4sw6t.default~default.svc.cluster.local-3",
      "connectedAt": "2021-06-01T04:57:10.663650097Z",
      "address": "172.17.0.1:8415",
      "watches": null
    }
  ]
}

# Show all discovered endpoints
kubectl exec -it deploy/sleep -- curl istiod-1-10-0.istio-system:15014/debug/endpointz | jq . # ~1800+ lines for an empty Minikube
</source>
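For scripting, e.g. waiting until an old revision has no connected proxies before removing it (an assumed use case), only the `totalClients` value from the `/debug/connections` output above is needed. A sketch with a hypothetical helper that extracts it with `sed`, for environments without `jq`; it handles both the pretty-printed and the compact form of that field.

```shell
# Hypothetical helper: prints the "totalClients" value from istiod's
# /debug/connections JSON read on stdin (no jq required).
istiod_total_clients() {
  sed -n 's/.*"totalClients": *\([0-9][0-9]*\).*/\1/p'
}
# Usage (no -it, since the output is piped):
#   kubectl exec deploy/sleep -- curl -s istiod-1-10-0.istio-system:15014/debug/connections | istiod_total_clients
```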

- Automatic sidecar proxy injection: tell a namespace which revision (aka control plane) to use.

kubectl label namespace default istio.io/rev=1-10-0 istio-injection-

Note: The istio-injection label must be removed because it takes precedence over the istio.io/rev label for backward compatibility.
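Since `istio-injection` wins over `istio.io/rev`, a namespace that carries both labels silently ignores the revision. As a sketch, a hypothetical helper `injection_label` takes a namespace's comma-separated labels (as in the LABELS column of `kubectl get namespace --show-labels`) and prints the label that decides injection, following the precedence stated in the note above.

```shell
# Hypothetical helper: prints the label that governs sidecar injection for a
# comma-separated label list; istio-injection takes precedence over istio.io/rev.
injection_label() {
  inj=""
  rev=""
  old_ifs=$IFS
  IFS=,                      # split the label list on commas
  for l in $1; do
    case $l in
      istio-injection=*) inj=$l ;;
      istio.io/rev=*)    rev=$l ;;
    esac
  done
  IFS=$old_ifs
  if [ -n "$inj" ]; then
    echo "$inj"
  elif [ -n "$rev" ]; then
    echo "$rev"
  else
    echo "none"
  fi
}
```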

- Upgrade with Canary tag revision for isolated testing

<source lang=bash>
# Tag the new control-plane version with the 'canary' tag
istioctl experimental revision tag set canary --revision 1-10-0
Revision tag "canary" created, referencing control plane revision "1-10-0". To enable injection using this
revision tag, use 'kubectl label namespace <NAMESPACE> istio.io/rev=canary'

# Show tagged revisions
istioctl experimental revision list
REVISION TAG    ISTIO-OPERATOR-CR                                  PROFILE REQD-COMPONENTS
1-10-0   canary istio-system/installed-state-control-plane-1-10-0 minimal base
                                                                           istiod

# Update the namespace label so that injected sidecars use/connect to the newly tagged control plane
kubectl label namespace default istio.io/rev=canary --overwrite

# Restart deployments so the new proxy gets injected, then test the application. If all is good, delete the
# "app canary deployment" and continue with a full rollout of all deployments.
</source>
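The relabel-and-restart step can be wrapped so it is reviewed before it runs. A sketch with a hypothetical function `switch_namespace_revision` that only prints the commands; it assumes restarting every deployment in the namespace is acceptable (`kubectl rollout restart deployment -n <ns>` restarts all of them).

```shell
# Hypothetical helper: prints (does NOT run) the commands that point a namespace
# at a revision or tag and restart its deployments so new sidecars get injected.
switch_namespace_revision() {
  ns=$1    # namespace, e.g. default
  rev=$2   # revision or tag, e.g. canary or 1-10-0
  echo "kubectl label namespace $ns istio.io/rev=$rev --overwrite"
  echo "kubectl rollout restart deployment -n $ns"
}
# Usage:
#   switch_namespace_revision default canary        # review
#   switch_namespace_revision default canary | sh   # execute
```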

Install ingress-gateway

We install the ingress gateway components in a separate namespace, istio-ingress, so that the control plane and the ingress gateway(s) can be upgraded independently. We achieve this by installing the control plane with the 'minimal' profile, which includes only the control-plane components. For the ingress gateway install we then use the 'empty' profile and enable only the ingress gateway component. See a list of what is included in each profile.

Note: tag and revision - in v1.10 the ingress gateway is injected into the pod, so it becomes and behaves almost like any other workload. Thus the revision label, e.g. istio.io/rev=1-10-0, should be used to control the proxy version and which control plane the ingress gateway connects to.

<source lang=bash>
# Create the ingress gateway's own namespace
kubectl create ns istio-ingress

# Install revision
REVISION='--revision 1-10-0'
istioctl install -y -n istio-ingress $REVISION -f <(cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: istio-ingressgateway
spec:
  profile: empty # uses the 'empty' profile and enables the istio-ingressgateway component
  hub: gcr.io/istio-release
  components:
    ingressGateways:
      - name: istio-ingressgateway
        namespace: istio-ingress # if not set defaults to 'istio-system'
        enabled: true
        k8s:
          hpaSpec:
            minReplicas: 2
          service:
            type: LoadBalancer
          overlays:
            - apiVersion: apps/v1
              kind: Deployment
              name: istio-ingressgateway
              patches:
                - path: spec.template.spec.containers.[name:istio-proxy].lifecycle
                  value:
                    preStop:
                      exec:
                        command: ["sh", "-c", "sleep 5"]
EOF
) --dry-run
</source>

<source lang=bash>
# Verify
# | Note that 'revision' relates to the control plane, thus the ingressgateway installation
# | 'installed-state-istio-ingressgateway-1-10-0' does not have a revision set
## Show IstioOperator installations
kubectl -n istio-system get istiooperators.install.istio.io
NAME                                          REVISION   STATUS   AGE
installed-state-control-plane-1-10-0          1-10-0              165m
installed-state-istio-ingressgateway-1-10-0   1-10-0              8m47s

## Show revisions
istioctl experimental revision list
REVISION TAG    ISTIO-OPERATOR-CR                                         PROFILE REQD-COMPONENTS
1-10-0   canary istio-system/installed-state-control-plane-1-10-0        minimal base
                                                                                  istiod
                istio-system/installed-state-istio-ingressgateway-1-10-0 empty   ingress:istio-ingressgateway

## Test
# The gateway was installed into the istio-ingress namespace
GATEWAY_IP=$(kubectl get svc -n istio-ingress istio-ingressgateway -o jsonpath="{.status.loadBalancer.ingress[0].ip}")
# With a Gateway and a VirtualService we can route traffic to a destination service via the IngressGateway
curl -H "Host: example.com" http://$GATEWAY_IP
</source>
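The test above needs a Gateway and a VirtualService for `example.com`. A minimal sketch with a hypothetical helper `render_gateway_route` that prints both resources. The resource names (`example-gateway`, `example-route`) and the destination service and port (e.g. `httpbin` on 8000, assumed to exist in the namespace where the resources are applied) are illustrative assumptions; the `istio: ingressgateway` selector is the same label the EnvoyFilter further down relies on.

```shell
# Hypothetical helper: prints a Gateway + VirtualService that route HTTP traffic
# for one host through the ingress gateway (pods labelled istio=ingressgateway).
#   $1 host, e.g. example.com
#   $2 destination service, e.g. httpbin (assumed to exist)
#   $3 destination service port, e.g. 8000
render_gateway_route() {
  cat <<EOF
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: example-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - "$1"
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: example-route
spec:
  hosts:
    - "$1"
  gateways:
    - example-gateway
  http:
    - route:
        - destination:
            host: $2
            port:
              number: $3
EOF
}
# Usage:
#   render_gateway_route example.com httpbin 8000 | kubectl apply -f -
```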

Note: As the next step IngressGateway can be integrated with Cert Manager

- Reduce Gateway Config

<source lang=bash>
# Show which services the IngressGateway has discovered; the list includes even services with no routing defined
istioctl pc clusters deploy/istio-ingressgateway -n istio-ingress

# Set the PILOT_FILTER_GATEWAY_CLUSTER_CONFIG environment variable in the istiod deployment
# kind: IstioOperator
# spec:
#   components:
#     pilot:
#       k8s:
#         env:
#           - name: PILOT_FILTER_GATEWAY_CLUSTER_CONFIG
#             value: "true"
</source>
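To see the effect of `PILOT_FILTER_GATEWAY_CLUSTER_CONFIG`, the number of clusters the gateway knows about can be compared before and after setting it. A sketch with a hypothetical `count_clusters` function that counts data rows, assuming the first line of the `istioctl pc clusters` output is a column header.

```shell
# Hypothetical helper: counts non-empty data rows (skipping the header line)
# of `istioctl pc clusters` output read on stdin.
count_clusters() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }'
}
# Usage (run before and after enabling the env variable):
#   istioctl pc clusters deploy/istio-ingressgateway -n istio-ingress | count_clusters
```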

- (Optional) Access logging for gateway only using EnvoyFilter

- The Istio docs explain how to enable Envoy's access logs for the entire service mesh; the EnvoyFilter below enables them for the ingress gateway only.

kubectl apply -f <(cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: ingressgateway-access-logging
  namespace: istio-system
spec:
  workloadSelector:
    labels:
      istio: ingressgateway
  configPatches:
    - applyTo: NETWORK_FILTER
      match:
        context: GATEWAY
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
      patch:
        operation: MERGE
        value:
          typed_config:
            "@type": "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager"
            access_log:
              - name: envoy.access_loggers.file
                typed_config:
                  "@type": "type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog"
                  path: /dev/stdout
                  format: "[%START_TIME%] \"%REQ(:METHOD)% %REQ(X-ENVOY-ORIGINAL-PATH?:PATH)% %PROTOCOL%\" %RESPONSE_CODE% %RESPONSE_FLAGS% \"%UPSTREAM_TRANSPORT_FAILURE_REASON%\" %BYTES_RECEIVED% %BYTES_SENT% %DURATION% %RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)% \"%REQ(X-FORWARDED-FOR)%\" \"%REQ(USER-AGENT)%\" \"%REQ(X-REQUEST-ID)%\" \"%REQ(:AUTHORITY)%\" \"%UPSTREAM_HOST%\" %UPSTREAM_CLUSTER% %UPSTREAM_LOCAL_ADDRESS% %DOWNSTREAM_LOCAL_ADDRESS% %DOWNSTREAM_REMOTE_ADDRESS% %REQUESTED_SERVER_NAME% %ROUTE_NAME%\n"

EOF