
This article is about utilising Terraform, a tool from HashiCorp, to build infrastructure as code (IaC).

{{Note|Most of the paragraphs have examples in the pre-0.12 Terraform syntax, which uses HCLv1. HCLv2 was introduced in v0.12 and brings significant syntax and capability improvements.}}

= Install terraform =


sudo mv ./terraform /usr/local/bin
</source>

== [https://github.com/kamatama41/tfenv tfenv] - manage multiple versions of Terraform ==

Install and usage

<source lang=bash>
# Install
git clone https://github.com/tfutils/tfenv.git ~/.tfenv
# single quotes keep $HOME and $PATH unexpanded until a new shell reads the file
echo '[ -d "$HOME/.tfenv" ] && export PATH="$PATH:$HOME/.tfenv/bin"' >> ~/.bashrc  # or ~/.bash_profile

# Use
tfenv install 1.0.6
tfenv use 1.0.6
</source>
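tfenv can also resolve the version from a <code>.terraform-version</code> file in the project directory, so every checkout of the project uses the same binary. A minimal sketch; the version number is simply the one used above:

<source lang=bash>
echo "1.0.6" > .terraform-version   # commit this file together with the project
tfenv install                       # no argument: version is read from .terraform-version
tfenv use                           # same resolution as above
terraform version                   # confirm which binary is now active
</source>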

== IDE ==

Tools I use for development:

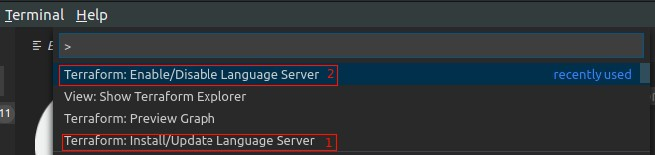

* VSCode 1.41.1+ (for reference) with the extensions:
** Terraform Autocomplete by erd0s
** Terraform by Mikael Olenfalk, with the Language Server enabled; open the command palette with <code>Ctrl+Shift+P</code>
:[[File:ClipCapIt-200202-153128.PNG]]

= Basic configuration =


== AWS ==

;References
*[https://www.padok.fr/en/blog/terraform-s3-bucket-aws S3 bucket for all accounts]
*[https://www.padok.fr/en/blog/authentication-aws-profiles Multi account auth using aws profiles and <code>provider "aws" {}</code>]

=== Local state ===

Local state configuration

<source lang="json">
# vi backend.tf
terraform {
  # note: 'version' is not a valid argument in the terraform {} block;
  # in 0.12 the provider version is pinned with 'version' inside the provider block
  required_version = "= 0.12.29"
  backend "local" {}
}
</source>

=== Remote state (single) for multi account deployments ===

There are many ways to combine backend and AWS credential settings. The important thing to understand is that the <code>terraform { backend {} }</code> block does NOT use the <code>provider "aws" {}</code> configuration to access the state bucket; it only uses its own backend settings. Two options:

* exporting credentials allows assuming roles that differ between the backend block and the provider block
* specifying a different <code>profile = </code> in each block

;Credentials

<source lang="bash">
## a profile allows assuming roles in other accounts
#export AWS_PROFILE="piotr"

# Environment credentials for a user that can assume roles in other accounts, eg.:
# | * arn:aws:iam::111111111111:role/terraform-s3state           - save state in the S3 bucket
# | * arn:aws:iam::222222222222:role/terraform-crossaccount-admin - deploy resources
export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE
export AWS_SECRET_ACCESS_KEY=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
export AWS_DEFAULT_REGION=us-east-1

# unset all of them if needed (${!AWS@} expands to every variable name starting with AWS)
unset ${!AWS@}
</source>

;<code>terraform {}</code>

<source lang="json">
terraform {
  # note: 'version' is not a valid argument here; pin the provider version in the provider block
  required_version = "= 0.12.29"

  backend "s3" {
    # variables are NOT allowed in a backend block: use literal values (or pass them with -backend-config)
    bucket   = "tfstate-<PROJECT>-<ACCOUNT_ID>"   # must exist beforehand
    key      = "terraform/aws/<PROJECT>/tfstate"  # this could be much simpler when working with terraform workspaces
    region   = "<REGION>"
    role_arn = "arn:aws:iam::111111111111:role/terraform-s3state" # role to assume in the infra account where the S3 state bucket exists
    # profile = "dev-us"  # we use 'role_arn' but could specify an AWS profile instead
  }
}
</source>
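Because a <code>backend</code> block cannot reference variables, one way to keep bucket, key and region per environment is Terraform's partial backend configuration: the values live in a separate file and are passed at <code>terraform init</code> time, merged with whatever is set in <code>backend "s3" {}</code>. A sketch, assuming a hypothetical file named <code>backend-dev.hcl</code> and the placeholders used above:

<source lang="json">
# backend-dev.hcl (hypothetical name): plain key = value pairs, no block wrapper
bucket   = "tfstate-<PROJECT>-<ACCOUNT_ID>"
key      = "terraform/aws/<PROJECT>/tfstate"
region   = "<REGION>"
role_arn = "arn:aws:iam::111111111111:role/terraform-s3state"
</source>

<source lang=bash>
terraform init -backend-config=backend-dev.hcl
# pointing the working directory at another backend file requires re-initialising, eg.:
# terraform init -reconfigure -backend-config=backend-prod.hcl
</source>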

;<code>provider {}</code>

<source lang="json">
provider "aws" {
  ## We could use profiles, but instead we use the 'assume_role' option. On your laptop
  ## it should be your creds profile, eg. 'piotr-xaccount-admin'
  #profile                 = "terraform-crossaccount-admin"
  #shared_credentials_file = "/home/piotr/.aws/credentials"

  assume_role {  # a nested block, so no "=" sign
    # role_arn = "arn:aws:iam::<MY_PROD_ACCOUNT>:role/terraform-crossaccount-admin"  # hardcoded target account
    role_arn = "arn:aws:iam::${var.aws_account}:role/terraform-crossaccount-admin"    # or use variables
  }

  region              = var.aws_region                    # a reference, not the string "var.aws_region"
  allowed_account_ids = [ "111111111111", "222222222222" ] # safety net
}
</source>
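The provider block references <code>var.aws_region</code> and <code>var.aws_account</code>, so both have to be declared (for example in a <code>variables.tf</code>) before the <code>.tfvars</code> files below are accepted. A minimal sketch in 0.12 syntax; the descriptions are only illustrative:

<syntaxhighlight lang="Terraform">
variable "aws_region" {
  type        = string
  description = "AWS region to deploy into"
}

variable "aws_account" {
  type        = string
  description = "Target AWS account ID, used to build the assume_role ARN"
}
</syntaxhighlight>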

;Workspace configuration

Dev configuration in <code>dev.tfvars</code>

<source lang="json">
aws_region  = "eu-west-1"
aws_account = "<MY_DEV_ACCOUNT>"
</source>

Prod configuration in <code>prod.tfvars</code>

<source lang="json">
aws_region  = "eu-west-1"
aws_account = "<MY_PROD_ACCOUNT>"
</source>

;Workspaces

<source lang="bash">
terraform init
terraform workspace new dev
terraform workspace new prod
</source>

;Apply on one account

<source lang="bash">
terraform workspace select dev
terraform apply -var-file="$(terraform workspace show).tfvars"
</source>
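As an alternative to one <code>.tfvars</code> file per workspace, the current workspace name is available inside the configuration as <code>terraform.workspace</code>, so per-environment values can be looked up from a map. A sketch with a hypothetical <code>accounts</code> map using the same placeholders; the provider's <code>role_arn</code> would then use <code>local.aws_account</code> instead of <code>var.aws_account</code>:

<syntaxhighlight lang="Terraform">
locals {
  accounts = {
    dev  = "<MY_DEV_ACCOUNT>"
    prod = "<MY_PROD_ACCOUNT>"
  }

  # fails with an "Invalid index" error if the current workspace has no entry in the map
  aws_account = local.accounts[terraform.workspace]
}
</syntaxhighlight>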

== GCP - Google Cloud Platform ==

<source lang=bash>
# Generate default app credentials
gcloud auth application-default login

Go to the following link in your browser:
    https://accounts.google.com/o/oauth2/auth?response_type=code&client_id=****_challenge_method=S256
Enter verification code: ***
Credentials saved to file: [/home/piotr/.config/gcloud/application_default_credentials.json]
These credentials will be used by any library that requests Application Default Credentials (ADC).
Quota project "test-devops-candidate1" was added to ADC which can be used by Google client libraries for billing and quota. Note that some services may still bill the project owning the resource
</source>
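With Application Default Credentials saved, the <code>google</code> provider can pick them up when no <code>credentials</code> argument is set. A minimal sketch; the project ID and region are placeholders, not values from this setup:

<syntaxhighlight lang="Terraform">
provider "google" {
  # no 'credentials' argument: the provider falls back to Application Default Credentials
  project = "<MY_PROJECT_ID>"
  region  = "europe-west1"
}
</syntaxhighlight>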


</source>

= AWS example =

Prerequisites are:

*<code>~/.aws/credentials</code> file exists
*<code>variables.tf</code> exists, with the content below

If you remove a <tt>default</tt> value, you will be prompted for it.

<code>inputs.tf</code>, also known as a variables file (Terraform loads every <code>.tf</code> file in the directory, so the name is up to you):

<source lang=bash>
$> vi inputs.tf
variable "region" { default = "eu-west-1" }

variable "profile" {
  description = "Provide AWS credentials profile you want to use, saved in ~/.aws/credentials file"
  default     = "terraform-profile"
}

variable "key_name" {
  description = <<DESCRIPTION
Provide the name of the SSH private key file; ~/.ssh will be searched.
This is the key associated with the IAM user in AWS. Example: id_rsa
DESCRIPTION
  default = "id_rsa"
}

variable "public_key_path" {
  description = <<DESCRIPTION
Path to the SSH public key for authentication. This key will be injected
into all EC2 instances created by Terraform.
Example: ~/.ssh/terraform.pub
DESCRIPTION
  default = "~/.ssh/id_rsa.pub"
}
</source>
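To skip the prompts without hard-coding defaults, the same values can be supplied from a <code>terraform.tfvars</code> file, which <code>terraform plan</code> and <code>apply</code> load automatically from the working directory. A sketch reusing the example values above:

<source lang=bash>
# terraform.tfvars - loaded automatically, no -var-file flag needed
region          = "eu-west-1"
profile         = "terraform-profile"
key_name        = "id_rsa"
public_key_path = "~/.ssh/id_rsa.pub"
</source>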

Terraform .tf file

<source lang=bash>
$ vi example.tf
provider "aws" {
  region  = "${var.region}"
  profile = "${var.profile}"
}

resource "aws_vpc" "vpc" {
  cidr_block = "10.0.0.0/16"
}

# Create an internet gateway to give our subnet access to the open internet
resource "aws_internet_gateway" "internet-gateway" {
  vpc_id = "${aws_vpc.vpc.id}"
}

# Give the VPC internet access on its main route table
resource "aws_route" "internet_access" {
  route_table_id         = "${aws_vpc.vpc.main_route_table_id}"
  destination_cidr_block = "0.0.0.0/0"
  gateway_id             = "${aws_internet_gateway.internet-gateway.id}"
}

# Create a subnet to launch our instances into
resource "aws_subnet" "default" {
  vpc_id                  = "${aws_vpc.vpc.id}"
  cidr_block              = "10.0.1.0/24"
  map_public_ip_on_launch = true

  tags = {  # "tags = {" is valid in 0.11 and required from 0.12
    Name = "Public"
  }
}

# Our default security group to access
# instances over SSH and HTTP
resource "aws_security_group" "default" {
  name        = "terraform_securitygroup"
  description = "Used for public instances"
  vpc_id      = "${aws_vpc.vpc.id}"

  # SSH access from anywhere
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # HTTP access from the VPC
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["10.0.0.0/16"]
  }

  # outbound internet access
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1" # all protocols
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_key_pair" "auth" {
  key_name   = "${var.key_name}"
  public_key = "${file(var.public_key_path)}"
}

resource "aws_instance" "webserver" {
  ami                    = "ami-405f7226"
  instance_type          = "t2.nano"
  key_name               = "${aws_key_pair.auth.id}"
  vpc_security_group_ids = ["${aws_security_group.default.id}"]

  # We're going to launch into the public subnet for this.
  # Normally, in production environments, webservers would be in
  # private subnets.
  subnet_id = "${aws_subnet.default.id}"

  # The connection block tells our provisioner how to
  # communicate with the instance
  connection {
    user = "ubuntu"
  }

  # We run a remote provisioner on the instance after creating it
  # to install Nginx. By default, this should be on port 80
  provisioner "remote-exec" {
    inline = [
      "sudo apt-get -y update",
      "sudo apt-get -y install nginx",
      "sudo service nginx start"
    ]
  }
}
</source>
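After an apply it is handy to print the address to test Nginx against. A sketch of an output for the instance above, written in 0.12+ syntax (on 0.11 the value would be written <code>"${aws_instance.webserver.public_ip}"</code>); the output name is arbitrary:

<syntaxhighlight lang="Terraform">
output "webserver_public_ip" {
  description = "Public IP of the webserver"
  value       = aws_instance.webserver.public_ip
}
</syntaxhighlight>

After <code>terraform apply</code>, the value can be read with <code>terraform output webserver_public_ip</code>.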

== Run a plan ==

<source>
$ terraform plan
var.key_name
  Name of the AWS key pair

  Enter a value: id_rsa                  #name of the key_pair

var.profile
  AWS credentials profile you want to use

  Enter a value: terraform-profile       #aws profile in ~/.aws/credentials file

var.public_key_path
  Path to the SSH public keys for authentication.
  Example: ~./ssh/terraform.pub

  Enter a value: ~/.ssh/id_rsa.pub       #path to the matching public key

Refreshing Terraform state in-memory prior to plan...
The refreshed state will be used to calculate this plan, but will not be
persisted to local or remote state storage.

The Terraform execution plan has been generated and is shown below.
Resources are shown in alphabetical order for quick scanning. Green resources
will be created (or destroyed and then created if an existing resource
exists), yellow resources are being changed in-place, and red resources
will be destroyed. Cyan entries are data sources to be read.

+ aws_instance.webserver
    ami:                          "ami-405f7226"
    associate_public_ip_address:  "<computed>"
    availability_zone:            "<computed>"
    ebs_block_device.#:           "<computed>"
    ephemeral_block_device.#:     "<computed>"
    instance_state:               "<computed>"
    instance_type:                "t2.nano"
    ipv6_addresses.#:             "<computed>"
    key_name:                     "${aws_key_pair.auth.id}"
    network_interface_id:         "<computed>"
    placement_group:              "<computed>"
    private_dns:                  "<computed>"
    private_ip:                   "<computed>"
    public_dns:                   "<computed>"
    public_ip:                    "<computed>"
    root_block_device.#:          "<computed>"
    security_groups.#:            "<computed>"
    source_dest_check:            "true"
    subnet_id:                    "${aws_subnet.default.id}"
    tenancy:                      "<computed>"
    vpc_security_group_ids.#:     "<computed>"

+ aws_internet_gateway.internet-gateway
    vpc_id:                       "${aws_vpc.vpc.id}"

+ aws_key_pair.auth
    fingerprint:                  "<computed>"
    key_name:                     "id_rsa"
    public_key:                   "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDfc piotr@ubuntu"
<...omitted...>

Plan: 7 to add, 0 to change, 0 to destroy.
</source>

;Plan a single target

<source lang=bash>
$> terraform plan -target=aws_ami_from_instance.golden
</source>
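To make sure <code>apply</code> executes exactly what was reviewed, the plan can be saved to a file and applied from that file. A sketch; <code>tfplan</code> is an arbitrary file name:

<source lang=bash>
terraform plan -out=tfplan    # save the plan that is being reviewed
terraform show tfplan         # optional: inspect the saved plan again later
terraform apply tfplan        # applies the saved plan as-is, without asking for confirmation again
</source>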

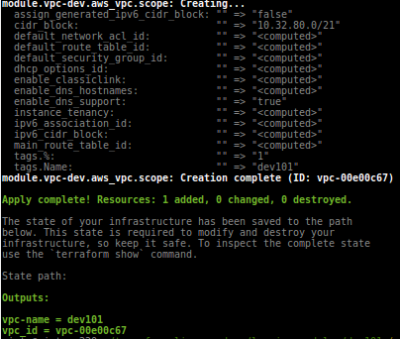

== Terraform apply ==

<source lang=bash>
$> terraform apply
$> terraform show   # show current resources in the state file
aws_instance.webserver:
  id = i-09c1c665cef284235
  ami = ami-405f7226
  <...>
aws_security_group.default:
  id = sg-b14bb1c8
  description = Used for public instances
  egress.# = 1
  <...>
aws_subnet.default:
  id = subnet-6f4f510b
  <...>
aws_vpc.vpc:
  id = vpc-9ba0b7ff
  <...>
</source>

;Apply a single resource using <code>-target <resource></code>

<source lang=bash>
$> terraform apply -target=aws_ami_from_instance.golden
</source>

== Terraform destroy ==

Run the destroy command to delete all resources that were created

<source lang=bash>
$> terraform destroy
aws_key_pair.auth: Refreshing state... (ID: id_rsa)
aws_vpc.vpc: Refreshing state... (ID: vpc-9ba0b7ff)
<...>
Destroy complete! Resources: 7 destroyed.
</source>

;Destroy a single resource - targeting

<source lang=bash>
$> terraform show
$> terraform destroy -target=aws_ami_from_instance.golden
</source>

== Terraform taint ==

Get a resource list

<source lang=bash>
terraform state list
# select an item from the list
</source>

;Version 0.11: a resource index must be addressed as eg. <code>aws_instance.main.0</code>, not <code>aws_instance.main[0]</code>. It's not possible to taint a whole module

<source lang=bash>
terraform taint -module=<MODULE_NAME> aws_instance.main.0
</source>

;Version 0.12: resources and modules can be addressed in a more natural way

<source lang=bash>
terraform taint 'module.MODULE_NAME.aws_instance.main[0]'  # quotes stop the shell from interpreting the brackets
</source>
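From Terraform 0.15.2, <code>terraform taint</code> is deprecated in favour of the <code>-replace</code> option, which forces recreation as part of a normal plan/apply so the replacement can be reviewed first. A sketch using the same resource address as above:

<source lang=bash>
terraform plan  -replace='module.MODULE_NAME.aws_instance.main[0]'   # preview the forced replacement
terraform apply -replace='module.MODULE_NAME.aws_instance.main[0]'   # nothing is marked tainted in the state beforehand
</source>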

= Use Ansible from Terraform - provision using Ansible =

I'm unsure if this is the best approach, because it is not clear how to store the state of a <code>local-exec</code> Ansible run. It could be set to always run, as Ansible playbooks are (or should be) idempotent. Example: https://github.com/dzeban/c10k/blob/master/infrastructure/main.tf
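One way to wire this up is a <code>null_resource</code> with a <code>local-exec</code> provisioner; a <code>timestamp()</code> trigger changes on every apply, so the playbook re-runs each time, which relies on the playbook being idempotent. A sketch in 0.12+ syntax, assuming the <code>aws_instance.webserver</code> from the AWS example and a hypothetical <code>playbook.yml</code>:

<syntaxhighlight lang="Terraform">
resource "null_resource" "ansible" {
  triggers = {
    always_run = timestamp()  # new value on every apply, so the resource is replaced and the provisioner re-runs
  }

  provisioner "local-exec" {
    # the trailing comma makes Ansible treat the value as an inline host list, not an inventory file
    command = "ansible-playbook -u ubuntu -i '${aws_instance.webserver.public_ip},' playbook.yml"
  }
}
</syntaxhighlight>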

= Debug =

== Output complex object ==

Often you need to manipulate a data structure that is the output of a <tt>resource</tt>, a <tt>data.resource</tt> or simply a template, which may involve hidden computation that is not always displayed on your screen. You can use the following techniques to iterate over your code's output:

;Output and [https://www.terraform.io/docs/providers/null/resource.html null_resource] - an empty virtual container that can run arbitrary commands

* '''Problem statement:''' Display a computed Terraform <code>templatefile</code>
* '''Solution:''' Use <code>null_resource</code> to render the template, so that it is shown in the <tt>plan</tt>. This matters when the template is a JSON policy: invalid policies fail and you cannot see why. The plan shows the object being constructed, and after <code>terraform apply</code> it can be saved into the state file as an output variable, so the object can be re-used for further transformations.

<syntaxhighlight lang="Terraform">
data "aws_caller_identity" "current" {}

# resource "aws_kms_key" "secretmanager" {
#   policy = templatefile("./templates/kms_secretmanager.policy.json.tpl", ...  # debugging the policy with
# }                                                                          # null_resource and output

# note: needs the aws_kms_key above to be uncommented
resource "aws_kms_alias" "secretmanager" {
  name          = "alias/secretmanager"
  target_key_id = aws_kms_key.secretmanager.key_id
}

resource "null_resource" "policytest" {
  triggers = {
    policytest = templatefile("./templates/kms_secretmanager.policy.json.tpl",
      {
        arns_json        = jsonencode(var.crossAccountIamUsers_arns)
        currentAccountId = data.aws_caller_identity.current.account_id
        # length() of the list itself; length([list]) would always be 1
        crossAccountAccessEnabled = length(var.crossAccountIamUsers_arns) > 0
      })
  }
}

output "policy" {
  value = templatefile("./templates/kms_secretmanager.policy.json.tpl",
    {
      arns_json                 = jsonencode(var.crossAccountIamUsers_arns)
      currentAccountId          = data.aws_caller_identity.current.account_id
      crossAccountAccessEnabled = length(var.crossAccountIamUsers_arns) > 0
    }
  )
}
</syntaxhighlight>

Policy template file <code>./templates/kms_secretmanager.policy.json.tpl</code>. The comma between the two statements sits inside the <code>if</code> directive, so the JSON stays valid when cross-account access is disabled.

<source lang=json>
{
  "Version": "2012-10-17",
  "Id": "key-consolepolicy-1",
  "Statement": [
    {
      "Sid": "Enable IAM User Permissions",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::${currentAccountId}:root"
      },
      "Action": "kms:*",
      "Resource": "*"
    }%{ if crossAccountAccessEnabled == true ~},
    {
      "Sid": "Allow cross-accounts retrieve secrets",
      "Effect": "Allow",
      "Principal": {
        "AWS": ${arns_json}
      },
      "Action": [
        "kms:Decrypt",
        "kms:DescribeKey"
      ],
      "Resource": "*"
    }
    %{ endif ~}
  ]
}
</source>

;Run

<source lang=bash>
$ terraform apply -var-file=test.tfvars -target null_resource.policytest # -var-file contains the 'var.crossAccountIamUsers_arns' list variable

Terraform will perform the following actions:

  # null_resource.policytest will be created
  + resource "null_resource" "policytest" {
      + id       = (known after apply)
      + triggers = {
          + "policytest" = jsonencode(
                {
                  + Id        = "key-consolepolicy-1"
                  + Statement = [
                      + {
                          + Action    = "kms:*"
                          + Effect    = "Allow"
                          + Principal = {
                              + AWS = "arn:aws:iam::111111111111:root"
                            }
                          + Resource  = "*"
                          + Sid       = "Enable IAM User Permissions"
                        },
                      + {
                          + Action    = [
                              + "kms:Decrypt",
                              + "kms:DescribeKey",
                            ]
                          + Effect    = "Allow"
                          + Principal = {
                              + AWS = [
                                  + "arn:aws:iam::111111111111:user/dev",
                                  + "arn:aws:iam::111111111111:user/test",
                                ]
                            }
                          + Resource  = "*"
                          + Sid       = "Allow cross-accounts retrieve secrets"
                        },
                    ]
                  + Version   = "2012-10-17"
                }
            )
        }
    }

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes # <- manual input

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Outputs:

policy = {
  "Version": "2012-10-17",
  "Id": "key-consolepolicy-1",
  "Statement": [
    {
      "Sid": "Enable IAM User Permissions",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::111111111111:root"
      },
      "Action": "kms:*",
      "Resource": "*"
    },
    {
      "Sid": "Allow cross-accounts retrieve secrets",
      "Effect": "Allow",
      "Principal": {
        "AWS": ["arn:aws:iam::111111111111:user/dev","arn:aws:iam::111111111111:user/test"]
      },
      "Action": [
        "kms:Decrypt",
        "kms:DescribeKey"
      ],
      "Resource": "*"
    }
  ]
}
</source>
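Another way to look at the rendered template without creating any resource is <code>terraform console</code>, which evaluates expressions piped to it; wrapping the template in <code>jsondecode()</code> makes the console fail loudly if the result is not valid JSON. A sketch, assuming the same <code>test.tfvars</code> and template as above; the account ID is a literal placeholder so the data source does not need to be in the state yet:

<source lang=bash>
echo 'jsondecode(templatefile("./templates/kms_secretmanager.policy.json.tpl", { arns_json = jsonencode(var.crossAccountIamUsers_arns), currentAccountId = "111111111111", crossAccountAccessEnabled = length(var.crossAccountIamUsers_arns) > 0 }))' \
  | terraform console -var-file=test.tfvars
</source>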


== Debug and analyze logs ==

We are going to enable logging to a file in Terraform, convert the log file to PDF and use sheri.ai to give us the answers.

<source lang=bash>
# Prerequisite - Ubuntu 22.04
sudo apt-get update && sudo apt-get install ghostscript  # for the ps2pdf converter

# Set Terraform logging
export TF_LOG=TRACE   # or DEBUG
export TF_LOG_PATH=/tmp/tflogs.log
terraform plan        # or: terraform apply

# print the log to PostScript from vim, then convert it to a timestamped PDF
vim "$TF_LOG_PATH" -c "hardcopy > ${TF_LOG_PATH}.ps | q"; ps2pdf "${TF_LOG_PATH}.ps" "${TF_LOG_PATH}-$(date +%Y%m%d-%H%M).pdf"
</source>
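Before converting a large TRACE log, it can help to narrow it down to the problem lines. A small sketch, assuming each log line carries its level in square brackets (eg. <code>[ERROR]</code>, <code>[WARN]</code>) as Terraform's log lines do; <code>tf_log_problems</code> is a hypothetical helper name:

<source lang=bash>
# print only ERROR and WARN lines from a Terraform log file
# (first argument, or $TF_LOG_PATH when no argument is given)
tf_log_problems() {
  grep -E '\[(ERROR|WARN)\]' "${1:-$TF_LOG_PATH}"
}
</source>

Usage: <code>tf_log_problems /tmp/tflogs.log</code>, or just <code>tf_log_problems</code> with <code>TF_LOG_PATH</code> exported as above.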


</source>

== Log user_data to console logs ==

On Linux, add the line below right after the shebang:

<source lang=bash>
exec > >(tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console)
</source>

You can then view the user-data script logs in the instance's System Log in the AWS Console.
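The same line can sit at the top of a <code>user_data</code> script passed from Terraform. A sketch in 0.12+ syntax with a hypothetical resource name, reusing the AMI and instance type from the AWS example; the <code>2>&1</code> (not in the line above) also sends the script's stderr to the log:

<syntaxhighlight lang="Terraform">
resource "aws_instance" "example" {
  ami           = "ami-405f7226"
  instance_type = "t2.nano"

  # <<-EOF strips the common indentation, so "#!/bin/bash" ends up as the first line
  user_data = <<-EOF
    #!/bin/bash
    exec > >(tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console) 2>&1
    apt-get -y update
  EOF
}
</syntaxhighlight>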

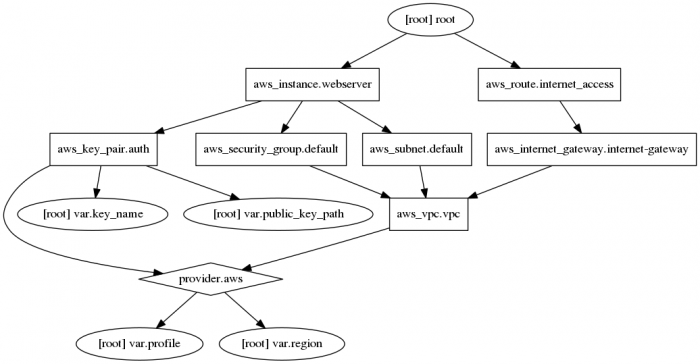

= terraform graph to visualise configuration =

== Graph dependencies ==

Create a visualisation file. You may need to install Graphviz (<code>sudo apt-get install graphviz</code>) if it is not already on your system.

<source lang=bash>
sudo apt install graphviz   # installs 'dot'
terraform graph | dot -Tpng > graph.png
</source>

[[File:Example2.png|none|left|700px|Terraform visual configuration]]

== [https://serverfault.com/questions/1005761/what-does-error-cycle-means-in-terraform Cycle error] ==

Example cycle error:

<source>
Error: Cycle: module.gke.google_container_node_pool.pools["low-standard-n1"]
module.gke.google_container_node_pool.pools["medium-standard-n1"]
module.gke.google_container_node_pool.pools["large-standard-n1"]
module.gke.local.cluster_endpoint (expand)
module.gke.output.endpoint (expand)
provider["registry.terraform.io/gavinbunney/kubectl"]
kubectl_manifest.sync["source.toolkit.fluxcd.io/v1beta1/gitrepository/flux-system/flux-system"] (destroy)
module.gke.google_container_node_pool.pools["preemptible"] (destroy)
module.gke.module.gcloud_delete_default_kube_dns_configmap.module.gcloud_kubectl.null_resource.additional_components[0] (destroy)
module.gke.module.gcloud_delete_default_kube_dns_configmap.module.gcloud_kubectl.null_resource.run_command[0] (destroy)
module.gke.module.gcloud_delete_default_kube_dns_configmap.module.gcloud_kubectl.null_resource.module_depends_on[0] (destroy)
module.gke.module.gcloud_delete_default_kube_dns_configmap.module.gcloud_kubectl.null_resource.run_destroy_command[0] (destroy)
module.gke.kubernetes_config_map.kube-dns[0] (destroy)
module.gke.google_container_cluster.primary
module.gke.local.cluster_output_master_auth (expand)
module.gke.local.cluster_master_auth_list_layer1 (expand)
module.gke.local.cluster_master_auth_list_layer2 (expand)
module.gke.local.cluster_master_auth_map (expand)
module.gke.local.cluster_ca_certificate (expand)
module.gke.output.ca_certificate (expand)
provider["registry.terraform.io/hashicorp/kubernetes"]
</source>

The <code>-draw-cycles</code> option causes Terraform to mark the arrows related to the reported cycle in red. If you cannot visually distinguish red from black, you may wish to first edit the generated Graphviz code to replace red with a color you can distinguish.

<source lang=bash>
sudo apt install graphviz
terraform graph -draw-cycles -type=plan > cycle-plan.graphviz
terraform graph -draw-cycles | dot -Tpng > cycles.png
terraform graph -draw-cycles | dot -Tsvg > cycles.svg
terraform graph -draw-cycles | dot -Tpdf > cycles.pdf
# | -draw-cycles - highlight any cycles in the graph with colored edges. This helps when diagnosing cycle errors.
# | -type=plan   - type of graph to output. Can be: plan, plan-destroy, apply, validate, input, refresh.

# For large graphs you may want to install inkscape
sudo apt install inkscape --no-install-suggests --no-install-recommends
</source>

Avoid cycle errors in modules by structuring your configuration to avoid cross-module references: instead of directly accessing an output of one module from inside another, set it up as an input parameter and wire everything together at the top level.
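A minimal sketch of that wiring, with hypothetical <code>network</code> and <code>compute</code> modules (and assuming the network module declares a <code>subnet_id</code> output): the child module only declares an input variable, and the root module connects the two.

<syntaxhighlight lang="Terraform">
# root module (main.tf): the only place where modules reference each other
module "network" {
  source = "./modules/network"
}

module "compute" {
  source    = "./modules/compute"
  subnet_id = module.network.subnet_id  # output of one module passed as input to the other
}
</syntaxhighlight>

<syntaxhighlight lang="Terraform">
# modules/compute/variables.tf: an input instead of a direct reference to the network module
variable "subnet_id" {
  type = string
}
</syntaxhighlight>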

;How to get it solved

For a cyclic dependency, study the graph, then decide which resource that should be generated later to remove from the state. If the graph is unclear or too complex to read, you may need to guess and remove from the state a resource marked for deletion, eg.:

<source lang=bash>
terraform state rm 'kubectl_manifest.install["apps/v1/deployment/flux-system/kustomize-controller"]'
</source>

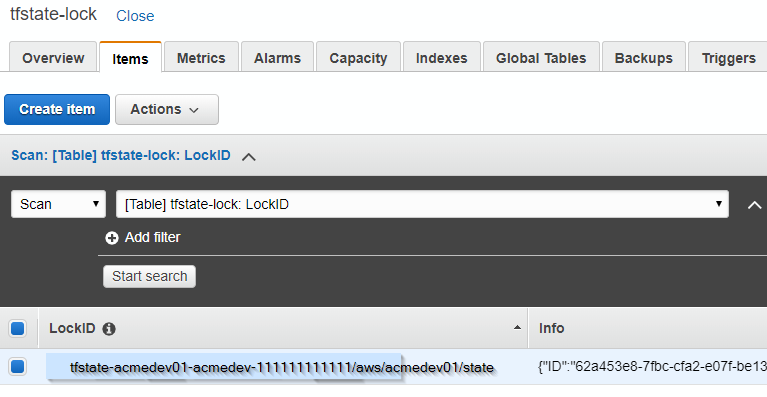

= Remote state =


<source lang=bash>
{"ID":"62a453e8-7fbc-cfa2-e07f-be1381b82af3","Operation":"OperationTypePlan","Info":"","Who":"piotr@laptop1","Version":"0.11.11","Created":"2019-03-07T08:49:33.3078722Z","Path":"tfstate-acmedev01-acmedev-111111111111/aws/acmedev01/state"}
</source>

= Workspaces =

== [https://discuss.hashicorp.com/t/how-to-change-the-name-of-a-workspace/24010 Rename a workspace / move the state file] ==

{{Note|The state manipulation commands run through Terraform's automatic state upgrading process, so it is best to do this with the same Terraform CLI version you most recently used against this workspace; that way the state won't be implicitly upgraded as part of the operation.}}

<source lang=bash>
terraform workspace select old-name
terraform state pull > old-name.tfstate
terraform workspace new new-name
terraform state push old-name.tfstate
terraform show   # confirm that the newly imported state looks 'right' before deleting the old workspace
terraform workspace delete -force old-name
</source>


* Any files with names ending in <code>.auto.tfvars</code> or <code>.auto.tfvars.json</code>.

= Syntax Terraform 0.12.6+ =

{{Note|This [https://alexharv074.github.io/2019/06/02/adventures-in-the-terraform-dsl-part-iii-iteration-enhancements-in-terraform-0.12.html#for-expressions for-expressions] link is a little gem on this subject}}

== Map and nested block ==

Terraform 0.12 introduces stricter validation for the following, but allows map keys to be set dynamically from expressions. Note the "=" sign.

* a map attribute - usually has user-defined keys, like we see in the tags example


</source>

== [https://alexharv074.github.io/2019/06/02/adventures-in-the-terraform-dsl-part-iii-iteration-enhancements-in-terraform-0.12.html For_each] ==

* [https://alexharv074.github.io/2019/06/02/adventures-in-the-terraform-dsl-part-iii-iteration-enhancements-in-terraform-0.12.html terraform iterations]
* [https://discuss.hashicorp.com/t/produce-maps-from-list-of-strings-of-a-map/2197 Produce maps from list of strings of a map]


{| class="wikitable"
|+ For_each and new allowed formatting without the need for "${var.vpc_cidr}" syntax = var.vpc_cidr


|}
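For the simplest case, iterating over a list of strings, the list has to be turned into a set (or a map) first, because <code>for_each</code> does not accept a plain list. A sketch with hypothetical IAM user names:

<syntaxhighlight lang="Terraform">
resource "aws_iam_user" "this" {
  for_each = toset(["alice", "bob"])  # for_each needs a map or a set of strings
  name     = each.key                 # for a set, each.key and each.value are the same string
}
</syntaxhighlight>

The instances are then addressed by key, eg. <code>aws_iam_user.this["alice"]</code>, so removing one name from the list does not shift the others the way <code>count</code> indexes do.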


=== [https://www.sheldonhull.com/blog/how-to-iterate-through-a-list-of-objects-with-terraforms-for-each-function/ Iterate over list of objects] ===

[https://stackoverflow.com/questions/58594506/how-to-for-each-through-a-listobjects-in-terraform-0-12 how-to-for-each-through-a-listobjects]

<source lang=yaml>
# debug.tf
locals {
  users = [
    # list of objects
    { name = "foo", is_enabled = true },
    { name = "bar", is_enabled = false },
  ]
}

resource "null_resource" "this" {
  # for_each needs a map, so build one keyed by user name
  for_each = { for user in local.users : user.name => user.is_enabled }

  # null_resource has no name/email arguments ('connection' only configures provisioners),
  # so the values are kept in triggers instead
  triggers = {
    name       = each.key
    is_enabled = tostring(each.value)
  }
}

output "users_map" {
  value = { for user in local.users : user.name => user.is_enabled }
}

# terraform init
# terraform apply
null_resource.this["bar"]: Creating...
null_resource.this["foo"]: Creating...
null_resource.this["bar"]: Creation complete after 0s [id=7228791922218879597]
null_resource.this["foo"]: Creation complete after 0s [id=7997705376010456213]

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.

Outputs:

users_map = {
  "bar" = false
  "foo" = true
}
</source>

== Plan is more readable and explicit ==

[[Terraform/plan_tf_11_vs_12|See comparison]]

== [https://www.hashicorp.com/blog/terraform-0-12-rich-value-types/ Rich Value Types] - for previewing whole resource object ==

'''Resources and Modules as Values''': Terraform 0.12 permits using entire resources as object values within configuration, including returning them as outputs and passing them as input variables:

<source>
output "vpc" {
  value = aws_vpc.example
}
</source>

The type of this output value is an object type derived from the schema of the <code>aws_vpc</code> resource type. The calling module can then access attributes of this result in the same way the returning module would use <code>aws_vpc.example</code>, such as <code>module.example.vpc.cidr_block</code>. This also works for modules: an expression like <code>module.vpc</code> evaluates to an object value with attributes corresponding to the module's named outputs.
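On the calling side, the whole object is then reachable through the module. A sketch, assuming a hypothetical child module named <code>example</code> at <code>./modules/example</code> that contains the <code>vpc</code> output above:

<syntaxhighlight lang="Terraform">
module "example" {
  source = "./modules/example"
}

# any attribute of the aws_vpc object is available, not only the ones exported one by one
output "vpc_cidr" {
  value = module.example.vpc.cidr_block
}
</syntaxhighlight>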

== <code>for</code> ==
* [https://discuss.hashicorp.com/t/produce-maps-from-list-of-strings-of-a-map/2197 Produce maps from list of strings of a map]

The <code>for</code> expression is mostly used to transform existing lists and maps rather than to generate new ones from scratch. For example, the expression below converts every element of a list of strings to upper case:
<source>
locals {
  upper_list = [for i in var.list : upper(i)] # creates a new list
}
</source>
The <code>for</code> expression iterates over each element of the list and returns the value of <code>upper(i)</code> for each element, in the form of a list. Using curly braces instead of square brackets, the same expression generates a map:
<source>
locals {
  upper_map = {for i in var.list : i => upper(i)} # creates a map with key => value
  # e.g. ["a", "b"] becomes { a = "A", b = "B" }
}
</source>
In this case, each original element of the list becomes a key that maps to its uppercase version.

Lastly, an ''if'' clause can be added to a ''for'' expression as a filter. It can only filter elements out; unlike the conditional (ternary) operator, it cannot provide an alternative value. The following expression returns all non-empty elements of the list in upper case:
<source>
[for i in var.list : upper(i) if i != ""]
</source>
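The same expressions also iterate over maps, where two symbols receive the key and the value. A sketch assuming a hypothetical <code>users</code> variable shaped like the <code>users_map</code> output earlier; maps are iterated in lexical key order, and the comments show what these expressions should produce for the default value:
<source lang=terraform>
variable "users" {
  # hypothetical: user name => is admin
  type = map(bool)
  default = {
    foo = true
    bar = false
  }
}

locals {
  # Two symbols over a map: the first receives the key, the second the value
  admins = [for name, is_admin in var.users : name if is_admin] # ["foo"]

  # Build a new map from a map, transforming the keys
  upper_users = {for name, is_admin in var.users : upper(name) => is_admin} # { BAR = false, FOO = true }

  # Grouping mode ("..." after the value): values with the same key are collected into a list
  # instead of raising a duplicate-key error
  by_role = {for name, is_admin in var.users : (is_admin ? "admin" : "user") => name...} # { admin = ["foo"], user = ["bar"] }
}
</source>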

== Manipulate list and complex object ==
Build a new list by removing items whose string value does not match a regular expression.
<source lang=bash>
# Resource that generates an object
resource "aws_acm_certificate" "main" {...}

# Preview of the input object 'aws_acm_certificate.main.domain_validation_options'
output "domain_validation_options" {
  value       = aws_acm_certificate.main.domain_validation_options
  description = "array/list of maps taken from resource object(aws_acm_certificate.main) describing all validation domain records"
}

$ terraform output domain_validation_options
[ # <- array starts here
  { # <- an item of the array, a map object
    "domain_name" = "*.dev.example.com"
    "resource_record_name" = "_11111111111111111111111111111111.dev.example.com."
    "resource_record_type" = "CNAME"
    "resource_record_value" = "_22222222222222222222222222222222.mzlfeqexyx.acm-validations.aws."
  },
  {
    "domain_name" = "api.example.com"
    "resource_record_name" = "_31111111111111111111111111111111.api.example.com."
    "resource_record_type" = "CNAME"
    "resource_record_value" = "_42222222222222222222222222222222.vhzmpjdqfx.acm-validations.aws."
  },
]

# The 'for k, v' expression builds a new list 'validation_domains' by iterating over the list of maps
# 'aws_acm_certificate.main.domain_validation_options' and keeping only the items whose domain_name,
# with a leading "*." removed, equals "dev.example.com" (so "*.dev.example.com" matches).
# contains() takes a list as its first argument. tomap(v) keeps the element type consistent across the for expression.
locals {
  validation_domains = [
    for k, v in aws_acm_certificate.main.domain_validation_options : tomap(v) if contains(
      ["dev.example.com"], replace(v.domain_name, "*.", "")
    )
  ]
}

$ terraform output local_distinct_domains
local_distinct_domains = [
  "api.example.com",
  "dev.example.com",
  "api-aat1.dev.example.com",
  "api-aat2.dev.example.com",
]

# The 'for domain' expression adds a domain to the new list only when it matches the regex.
# regexall() returns a list of all matches, so 'length(...) > 0' is true when there is at least one match,
# and the 'if' clause conditionally adds the item to the new list.
# Dots are escaped ("\\." in an HCL string) so that they match a literal '.'.
locals {
  distinct_domains_dev = [
    for domain in local.distinct_domains : domain if length(regexall("dev\\.example\\.com", domain)) > 0
  ]
  # Similar to the above but iterating over a list of maps (k, v - index, value pairs)
  validation_domains_dev = [
    for k, v in local.validation_domains : tomap(v) if length(regexall("dev\\.example\\.com", v.domain_name)) > 0
  ]
}

# Example of iterating over the list of maps 'aws_acm_certificate.main.domain_validation_options' to build a list
# of FQDNs stored in the 'resource_record_name' key of each map.
# On each iteration 'fqdn' holds one element of the list; the expression after ':' is the value
# added to the new list, here fqdn.resource_record_name.
resource "aws_acm_certificate_validation" "main" {
  certificate_arn = aws_acm_certificate.main.arn
  validation_record_fqdns = [
    for fqdn in aws_acm_certificate.main.domain_validation_options : fqdn.resource_record_name
  ]
}
</source>
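Since AWS provider 3.0, <code>domain_validation_options</code> is a set rather than a list, so it cannot be indexed; the AWS provider documentation builds the validation records with <code>for_each</code> instead. A sketch adapted from that pattern, assuming a hypothetical Route 53 zone <code>example.com</code>; it would replace the <code>aws_acm_certificate_validation</code> resource above rather than sit alongside it:
<source lang=terraform>
data "aws_route53_zone" "main" {
  name = "example.com" # hypothetical hosted zone
}

resource "aws_route53_record" "validation" {
  # Key each record by domain name so for_each gets stable keys
  for_each = {
    for dvo in aws_acm_certificate.main.domain_validation_options : dvo.domain_name => {
      name   = dvo.resource_record_name
      record = dvo.resource_record_value
      type   = dvo.resource_record_type
    }
  }

  # Allows overwriting an existing record, e.g. when a wildcard and its apex domain
  # share the same validation CNAME
  allow_overwrite = true
  name            = each.value.name
  records         = [each.value.record]
  ttl             = 60
  type            = each.value.type
  zone_id         = data.aws_route53_zone.main.zone_id
}

resource "aws_acm_certificate_validation" "main" {
  certificate_arn         = aws_acm_certificate.main.arn
  validation_record_fqdns = [for record in aws_route53_record.validation : record.fqdn]
}
</source>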

== Terraform Merge on Wildcard Tuple ==
Merging a list of maps produced by a splat expression should ideally be as simple as:
<source>
merge(local.policy_definitions.*.parameters...)
</source>
* [https://github.com/hashicorp/terraform/issues/24645 Terraform Merge on Wildcard Tuple] TF, GitHub issue
* [https://stackoverflow.com/questions/62683298/merge-list-of-objects-in-terraform merge-list-of-objects-in-terraform] Stackoverflow

;Workaround
<source>
policy_parameters = [
  for key, value in data.azurerm_policy_definition.d_policy_definitions :
  {
    parameters = jsondecode(value.parameters)
  }
]
ph_parameters   = local.policy_parameters[*].parameters
input_parameter = [for item in local.ph_parameters : merge(item, local.ph_parameters...)][0]
</source>

;Break down
Extract the parameter values into a list of objects decoded from JSON:
<source>
policy_parameters = [
  for key, value in data.azurerm_policy_definition.d_policy_definitions :
  {
    parameters = jsondecode(value.parameters)
  }
]
</source>
Collect the decoded parameters into a list with a splat expression:
<source>
ph_parameters = local.policy_parameters[*].parameters
</source>
Merge the content of all items into each item:
<source>
input_parameter = [for item in local.ph_parameters : merge(item, local.ph_parameters...)]
</source>
This third step gives every item in the list the same value, so any index can be used (the workaround above takes index <code>[0]</code>).

;Usage
<source>
parameters = jsonencode(local.input_parameter[n]) # n: any valid index of the list built in the third step
</source>
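When the element types allow it, <code>merge</code> with the <code>...</code> expansion symbol merges a whole list of maps in one call. A minimal sketch with hypothetical literal data; whether this works directly on a splat result depends on your Terraform version and the element types, which is what the issue above is about:
<source lang=terraform>
locals {
  # hypothetical list of maps, e.g. decoded parameter blocks
  maps = [
    { a = 1 },
    { b = 2 },
    { a = 3 },
  ]

  # "..." expands the list into separate arguments: merge({a=1}, {b=2}, {a=3})
  # When keys repeat, the later argument wins, giving { a = 3, b = 2 }
  merged = merge(local.maps...)
}
</source>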

== function: replace, regex ==
The snippet below removes comments and empty lines from a rendered <code>values.yaml.tpl</code> file.
<source lang=terraform>
locals {
  match_comment    = "/(?U)(?m)(?s)^[[:space:]]*#.*$/" # match any line that starts with '#' or with whitespace(s) + '#'
  match_empty_line = "/(?m)(?s)(^[\r\n])/"              # match a line break at the start of a line, i.e. an empty line
}

resource "helm_release" "myapp" {
  name  = "myapp"
  chart = "${path.module}/charts/myapp"
  values = [
    replace(
      replace(
        templatefile("${path.module}/templates/values.yaml.tpl", {}), # template variables go in this map
        local.match_comment, ""
      ),
      local.match_empty_line, ""
    )
  ]
}
</source>
Explanation:
* Terraform regular expressions use the [https://github.com/google/re2/wiki/Syntax RE2 syntax]
* Wrapping the search string in forward slashes (<code>/.../</code>) makes <code>replace</code> treat it as a regular expression
* Regex flags are enabled by prefixing the pattern:
** <code>(?m)</code> - multi-line mode: <code>^</code> and <code>$</code> match at the beginning and end of each line (default false)
** <code>(?s)</code> - let <code>.</code> match <code>\n</code> (default false)
** <code>(?U)</code> - ungreedy (default false), so a comment match stops at the end of its line
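A possible alternative, sketched here as an assumption rather than a tested replacement, is to filter line by line with <code>split</code>, a <code>for</code> expression and <code>regexall</code>, which avoids the multi-line regex flags. It uses the same template path as above and, like the regex version, would also drop lines starting with <code>#</code> inside YAML block scalars:
<source lang=terraform>
locals {
  rendered = templatefile("${path.module}/templates/values.yaml.tpl", {})

  # Keep only the lines that are neither blank nor a whole-line comment:
  # the pattern matches optional whitespace followed by an optional '#...' up to the end of the line
  values_clean = join("\n", [
    for line in split("\n", local.rendered) : line
    if length(regexall("^[[:space:]]*(#.*)?$", line)) == 0
  ])
}
</source>
The result can then be passed to the chart as <code>values = [local.values_clean]</code>.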

== References ==
*[https://www.hashicorp.com/blog/hashicorp-terraform-0-12-preview-for-and-for-each HashiCorp Terraform 0.12 Preview: For and For-Each]

= Syntax Terraform ~0.11 =
== <code>if</code> statements ==
;Terraform ~< 0.9
Older versions of Terraform do not support if or if-else statements, but we can take advantage of the ''count'' meta-argument that most resources have, treating a boolean as a number:

boolean true = 1

boolean false = 0

;Terraform ~0.11+
Newer versions support conditional expressions using the well-known ternary syntax:
<source>
CONDITION ? TRUEVAL : FALSEVAL
CONDITION ? caseTrue : caseFalse
domain = "${var.frontend_domain != "" ? var.frontend_domain : var.domain}" # tf <0.12 syntax
count  = var.image_publisher == "MicrosoftWindowsServer" ? 0 : 3           # tf 0.12+ syntax
</source>
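In Terraform 0.12+ the ternary operator combined with <code>count</code> is the usual way to make a resource optional. A minimal sketch; the <code>create_bucket</code> variable and the bucket name are hypothetical:
<source lang=terraform>
variable "create_bucket" {
  type    = bool
  default = false
}

resource "aws_s3_bucket" "this" {
  count  = var.create_bucket ? 1 : 0 # one instance when true, none when false
  bucket = "example-bucket-name"     # hypothetical bucket name
}

output "bucket_arn" {
  # try() returns null instead of failing when the bucket was not created
  value = try(aws_s3_bucket.this[0].arn, null)
}
</source>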

The supported operators are:

= Templates =
{{ Note | [https://github.com/hashicorp/terraform-guides/tree/master/infrastructure-as-code/terraform-0.12-examples/new-template-syntax Terraform 0.12+ New Template Syntax Example] }}
<source>
# Terraform version 0.12+ template syntax
%{ for name in var.names ~}
%{ if name == "Mary" }${name}%{ endif ~}
%{ endfor ~}
</source>
Dump a rendered <code>data.template_file</code> into a file to preview the correctness of interpolations
<source>
#For debugging you can display an array of rendered templates with the output below:
output "userdata" { value = "${data.template_file.userdata.*.rendered}" }
</source>
{{ Note |
* resource <code>template_file</code> is deprecated in favour of <code>data "template_file"</code>
* Terraform 0.12+ offers the built-in <code>templatefile</code> function, which does not need a <code>data</code> object }}
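With <code>templatefile</code>, template variables are passed in a map and referenced by bare name, without the <code>var.</code> prefix. A minimal sketch with a hypothetical template file <code>templates/greeting.tpl</code>:
<source>
%{ for name in names ~}
Hello ${name}!
%{ endfor ~}
</source>
<source lang=terraform>
output "greeting" {
  # renders one "Hello <name>!" line per element of names
  value = templatefile("${path.module}/templates/greeting.tpl", {
    names = ["Mary", "John"]
  })
}
</source>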

== template json files ==
For working with JSON structures it's [https://www.terraform.io/docs/configuration/functions/templatefile.html#generating-json-or-yaml-from-a-template recommended] to use the <code>jsonencode</code> function, which takes care of escaping and delimiters and returns valid JSON.
<source lang=terraform>
resource "aws_iam_policy" "s3Bucket" {
  name   = "s3Bucket"
  policy = templatefile("${path.module}/templates/s3Bucket.json.tpl", {
    S3BUCKETS = var.s3_buckets
  })
}

variable "s3_buckets" {
  type    = list(string)
  default = [ "aaa-bucket-111", "bbb-bucket-222" ]
}
</source>
Template file <code>templates/s3Bucket.json.tpl</code>. It must contain only JSON and template syntax: a <code>#</code> comment inside it would be copied into the rendered policy and make it invalid JSON.
<source lang=json>
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:ListAllMyBuckets",
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket",
        "s3:GetBucketLocation"
      ],
      "Resource": ${jsonencode([for BUCKET in S3BUCKETS : "arn:aws:s3:::${BUCKET}"])}
    }
  ]
}
</source>
The <code>Resource</code> line renders a JSON array: <code>["arn:aws:s3:::aaa-bucket-111","arn:aws:s3:::bbb-bucket-222"]</code>.

Explanation of <code>${jsonencode([for BUCKET in S3BUCKETS : "arn:aws:s3:::${BUCKET}"])}</code>:
* <code>${ ... }</code> - template substitution (interpolation) syntax
* <code>jsonencode( ... )</code> - function that encodes its argument as JSON; the last <code>)</code> is the function's closing bracket
* <code>[ ... ]</code> - the brackets around the <code>for</code> expression indicate that the result is a list, encoded as a JSON array
* <code>for BUCKET in S3BUCKETS</code> - <code>for</code> loop over the <code>templatefile</code> input variable <code>S3BUCKETS</code>; template variables are referenced by name, not with the <code>${}</code> syntax
* <code>"arn:aws:s3:::${BUCKET}"</code> - each element is built by substituting the local loop variable <code>BUCKET</code>
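Following the same recommendation, the policy can also be written directly in HCL and passed to <code>jsonencode</code>, so no template file is needed. A sketch equivalent to the template above, reusing the <code>s3_buckets</code> variable; it would replace the <code>templatefile</code> version of the resource rather than coexist with it under the same name:
<source lang=terraform>
resource "aws_iam_policy" "s3Bucket" {
  name = "s3Bucket"
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect   = "Allow"
        Action   = "s3:ListAllMyBuckets"
        Resource = "*"
      },
      {
        Effect = "Allow"
        Action = ["s3:ListBucket", "s3:GetBucketLocation"]
        # for expression builds the list of bucket ARNs, encoded as a JSON array
        Resource = [for bucket in var.s3_buckets : "arn:aws:s3:::${bucket}"]
      },
    ]
  })
}
</source>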

== Resource == | == Resource == | ||

| Line 1,006: | Line 1,582: | ||

(...) | (...) | ||

</source> | </source> | ||

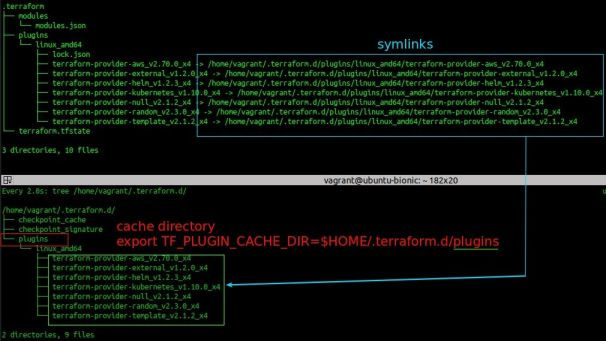

= Cache Terraform plugins =
Set the <code>TF_PLUGIN_CACHE_DIR</code> environment variable to an empty directory, then rerun <code>terraform init</code> to save downloaded providers into this shared (cache) directory.
<source>
export TF_PLUGIN_CACHE_DIR=$HOME/.terraform.d/plugins
mkdir -p "$TF_PLUGIN_CACHE_DIR" # Terraform does not create the cache directory itself
</source>
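The cache directory can also be set permanently in the Terraform CLI configuration file instead of exporting the variable in every shell. A sketch assuming Linux/macOS, where the file is <code>~/.terraformrc</code>; the directory path follows the example in the Terraform CLI documentation and, as above, must exist beforehand:
<source lang=terraform>
# ~/.terraformrc - Terraform CLI configuration file
plugin_cache_dir = "$HOME/.terraform.d/plugin-cache"
</source>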

Run <code>terraform init</code>. The local <code>.terraform</code> directory had already been deleted beforehand, so all providers are downloaded again.
<source lang=terraform>
terraform init -backend-config=dev.backend.tfvars

Initializing the backend...

Successfully configured the backend "s3"! Terraform will automatically
use this backend unless the backend configuration changes.

Initializing provider plugins...
- Checking for available provider plugins...
- Downloading plugin for provider "random" (hashicorp/random) 2.3.0...
- Downloading plugin for provider "kubernetes" (hashicorp/kubernetes) 1.10.0...
- Downloading plugin for provider "helm" (hashicorp/helm) 1.2.3...
- Downloading plugin for provider "aws" (hashicorp/aws) 2.70.0...
- Downloading plugin for provider "external" (hashicorp/external) 1.2.0...
- Downloading plugin for provider "null" (hashicorp/null) 2.1.2...
- Downloading plugin for provider "template" (hashicorp/template) 2.1.2...

Terraform has been successfully initialized!
</source>

:[[File:ClipCapIt-200714-085009.PNG]]

Although the cache directory is shared by all Terraform projects, provider versioning still works and the normal version constraints apply. To check which version is locked for the current project, compare the SHA256 hashes saved in the lock file in the <code>.terraform</code> directory with the files in the cache:
<source lang=bash>
$ cat .terraform/plugins/linux_amd64/lock.json
{
  "aws": "f08daaf64b9fca69978a40f88091d1a77fc9725fb04b0fec5e731609c53a025f",
  "external": "6dad56007a3cb0ae9c4655c67d13502e51e38ca2673cf0f22a5fadce6803f9e4",
  "helm": "09b8ccb993f7d776555e811c856de006ac12b9fedfca15b07a85a6814914fd04",
  "kubernetes": "7ebf3273e622d1adb736e98f6fa5cc7e664c61b9171105b13c3b5ea8f8ebc5ff",
  "null": "c56285e7bd25a806bf86fcd4893edbe46e621a46e20fe24ef209b6fd0b7cf5fc",
  "random": "791ef28ff31913d9b2ef0bedb97de98bebafe66d002bc2b9d01377e59a6cfaed",
  "template": "cd8665642bf0f5b5f57a53050d10fd83415428c2dc6713b85e174e007fcc93bf"
}

find ~/.terraform.d/plugins -type f | xargs sha256sum
f08daaf64b9fca69978a40f88091d1a77fc9725fb04b0fec5e731609c53a025f  /home/vagrant/.terraform.d/plugins/linux_amd64/terraform-provider-aws_v2.70.0_x4
6dad56007a3cb0ae9c4655c67d13502e51e38ca2673cf0f22a5fadce6803f9e4  /home/vagrant/.terraform.d/plugins/linux_amd64/terraform-provider-external_v1.2.0_x4
c56285e7bd25a806bf86fcd4893edbe46e621a46e20fe24ef209b6fd0b7cf5fc  /home/vagrant/.terraform.d/plugins/linux_amd64/terraform-provider-null_v2.1.2_x4
791ef28ff31913d9b2ef0bedb97de98bebafe66d002bc2b9d01377e59a6cfaed  /home/vagrant/.terraform.d/plugins/linux_amd64/terraform-provider-random_v2.3.0_x4
09b8ccb993f7d776555e811c856de006ac12b9fedfca15b07a85a6814914fd04  /home/vagrant/.terraform.d/plugins/linux_amd64/terraform-provider-helm_v1.2.3_x4
7ebf3273e622d1adb736e98f6fa5cc7e664c61b9171105b13c3b5ea8f8ebc5ff  /home/vagrant/.terraform.d/plugins/linux_amd64/terraform-provider-kubernetes_v1.10.0_x4
cd8665642bf0f5b5f57a53050d10fd83415428c2dc6713b85e174e007fcc93bf  /home/vagrant/.terraform.d/plugins/linux_amd64/terraform-provider-template_v2.1.2_x4
</source>
As you can see, the SHA256 hash of the AWS provider saved in the <code>lock.json</code> file matches the hash of the provider binary saved in the cache directory. This <code>lock.json</code> layout applies to older Terraform versions; since Terraform 0.14, provider checksums are recorded in the <code>.terraform.lock.hcl</code> dependency lock file instead.

= AWS - [https://docs.aws.amazon.com/AmazonRDS/latest/AuroraUserGuide/AuroraMySQL.Updates.20180206.html#AuroraMySQL.Updates.20180206.CLI RDS aurora] - versioning =
The [https://docs.aws.amazon.com/AmazonRDS/latest/AuroraUserGuide/AuroraMySQL.Updates.20180206.html#AuroraMySQL.Updates.20180206.CLI engine name] <code>aurora-mysql</code> refers to engine version 5.7.x, while for version 5.6.10a the engine name is <code>aurora</code>.
* The engine name for Aurora MySQL 2.x is aurora-mysql; the engine name for Aurora MySQL 1.x continues to be aurora.
* The engine version for Aurora MySQL 2.x is 5.7.12; the engine version for Aurora MySQL 1.x continues to be 5.6.10ann.
<syntaxhighlightjs lang=yaml>
module "db" {
  source  = "terraform-aws-modules/rds-aurora/aws"
  version = "2.29.0"
  name    = "db"

  engine         = "aurora"                  # v5.6
  engine_version = "5.6.mysql_aurora.1.23.0" # v5.6
  #engine         = "aurora-mysql"            # v5.7
  #engine_version = "5.7.mysql_aurora.2.09.0" # v5.7
  ...
}
</syntaxhighlightjs>

= [https://github.com/localstack/localstack localstack] - Mock AWS Services =
<source lang="shell">
pip install localstack
localstack start
SERVICES=kinesis,lambda,sqs,dynamodb DEBUG=1 localstack start
</source>
;Examples
* [https://github.com/MattSurabian/bad-terraform bad-terraform]
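To point Terraform at localstack, the AWS provider can be configured with custom endpoints. A minimal sketch, assuming a recent localstack that exposes all services on the single edge port 4566 (older releases used one port per service) and dummy credentials, which localstack accepts by default:
<source lang=terraform>
provider "aws" {
  region     = "us-east-1"
  access_key = "test" # dummy credentials for localstack
  secret_key = "test"

  # Skip the checks that would otherwise call real AWS endpoints
  skip_credentials_validation = true
  skip_metadata_api_check     = true
  skip_requesting_account_id  = true

  # Send API calls for the services started above to localstack
  endpoints {
    dynamodb = "http://localhost:4566"
    kinesis  = "http://localhost:4566"
    lambda   = "http://localhost:4566"
    sqs      = "http://localhost:4566"
  }
}
</source>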

= [https://github.com/tfsec/tfsec tfsec] - Security Scanning TF code =
<source lang=bash>
LATEST=$(curl --silent -L "https://api.github.com/repos/tfsec/tfsec/releases/latest" | jq -r .tag_name); echo $LATEST
sudo curl -L https://github.com/tfsec/tfsec/releases/download/${LATEST}/tfsec-linux-amd64 -o /usr/local/bin/tfsec
sudo chmod +x /usr/local/bin/tfsec

# Use with docker
docker run --rm -it -v "$(pwd):/src" liamg/tfsec /src

# Usage
tfsec .
</source>

= [https://github.com/terraform-linters/tflint tflint] - validate provider-specific issues =
Requires Terraform >= 0.12
<source lang=bash>
LATEST=$(curl --silent "https://api.github.com/repos/terraform-linters/tflint/releases/latest" | jq -r .tag_name); echo $LATEST
TEMPDIR=$(mktemp -d)
curl -L https://github.com/terraform-linters/tflint/releases/download/${LATEST}/tflint_linux_amd64.zip -o $TEMPDIR/tflint_linux_amd64.zip
sudo unzip $TEMPDIR/tflint_linux_amd64.zip -d /usr/local/bin

# Configure tflint; the config file is looked up in:
# | Current directory (./.tflint.hcl)
# | Home directory (~/.tflint.hcl)
tflint --config other_config.hcl

## Add plugins
# https://github.com/terraform-linters/tflint/tree/master/docs/rules
cat > ./.tflint.hcl <<EOF
plugin "aws" {
  enabled = true
  version = "0.5.0"
  source  = "github.com/terraform-linters/tflint-ruleset-aws"
}
plugin "google" {
  enabled = true
  version = "0.15.0"
  source  = "github.com/terraform-linters/tflint-ruleset-google"
}
EOF

# Usage
tflint --module
tflint --module --var-file=dev.tfvars

# Use with docker
docker pull ghcr.io/terraform-linters/tflint:latest
docker run --rm -v $(pwd):/src -t ghcr.io/terraform-linters/tflint:v0.34.1
docker run --rm -v $(pwd):/src -t ghcr.io/terraform-linters/tflint:v0.34.1 -v
# Init and check
docker run --rm -v $(pwd):/src -t --entrypoint /bin/sh ghcr.io/terraform-linters/tflint:v0.34.1 -c "tflint --init; tflint /src/"
## tflint appears to need to run from the Terraform root path, hence `cd /src`
docker run --rm -v $(pwd):/src -t -e TFLINT_LOG=debug --entrypoint /bin/sh ghcr.io/terraform-linters/tflint:v0.34.1 \
  -c "cd /src; tflint --init; tflint --var-file=environments/gcp-dev.tfvars --module"
</source>

= [https://github.com/terraform-docs/terraform-docs terraform-docs] - generate Terraform documentation =
<source lang=bash>
# Install the binary
VERSION=$(curl --silent "https://api.github.com/repos/terraform-docs/terraform-docs/releases/latest" | jq -r .tag_name); echo $VERSION
wget https://github.com/terraform-docs/terraform-docs/releases/download/$VERSION/terraform-docs-$VERSION-linux-amd64.tar.gz
tar xzvf terraform-docs-$VERSION-linux-amd64.tar.gz
sudo install terraform-docs /usr/local/bin/terraform-docs

# Use with docker
docker run --rm --volume "$(pwd):/src" -u $(id -u) quay.io/terraform-docs/terraform-docs:0.16.0 markdown /src

# Usage
terraform-docs . > README.md
</source>

= [https://github.com/cycloidio/inframap InfraMap] - plot your Terraform state =
<source lang=bash>
VERSION=$(curl --silent "https://api.github.com/repos/cycloidio/inframap/releases/latest" | jq -r .tag_name); echo $VERSION
TEMPDIR=$(mktemp -d)
curl -L https://github.com/cycloidio/inframap/releases/download/${VERSION}/inframap-linux-amd64.tar.gz -o $TEMPDIR/inframap-linux-amd64.tar.gz
tar xzvf $TEMPDIR/inframap-linux-amd64.tar.gz -C $TEMPDIR inframap-linux-amd64
sudo install $TEMPDIR/inframap-linux-amd64 /usr/local/bin/inframap

# Install graphviz, it contains the `dot` program
sudo apt install graphviz

# Install GraphEasy
## Cpan manager
sudo apt install cpanminus # install the Perl package manager
sudo cpanm Graph::Easy     # Graph-Easy-0.76 as of 2021-07
## Apt-get (tested with Ubuntu 20.04 LTS)
sudo apt install libgraph-easy-perl # Graph::Easy v0.76

# a sample usage
cat input.dot | graph-easy --from=dot --as_ascii
</source>

Usage inframap | |||

<source lang=bash> | |||

The most important subcommands are: | |||

* generate: generates the graph from STDIN or file, STDIN can be .tf files/modules or .tfstate | |||

* prune: removes all unnecessary information from the state or HCL (not supported yet) so it can be shared without any security concerns | |||

# Generate your infrastructure graph in a DOT representation from: Terraform files or state file | |||

cat terraform.tf | inframap generate --printer dot --hcl | tee graph.dot | |||

cat terraform.tfstate | inframap generate --printer dot --tfstate | tee graph.dot | |||

# `prune` command will sanitize and anonymize content of the files | |||

cat terraform.tfstate | inframap prune --canonicals --tfstate > cleaned.tfstate | |||

# Pipe all the previous commands. ASCII graph is generated using graph-easy | |||

cat terraform.tfstate | inframap prune --tfstate | inframap generate --tfstate | graph-easy | |||

# from State file - visualizing with `dot` or `graph-easy` | |||

inframap generate state.tfstate | dot -Tpng > graph.png | |||

inframap generate state.tfstate | graph-easy | |||

# from HCL | |||

inframap generate terraform.tf | graph-easy | |||

inframap generate ./my-module/ | graph-easy # or HCL module | |||

# using docker image (assuming that your Terraform files are in the working directory) | |||

docker run --rm -v ${PWD}:/opt cycloid/inframap generate /opt/terraform.tfstate | |||

</source> | |||

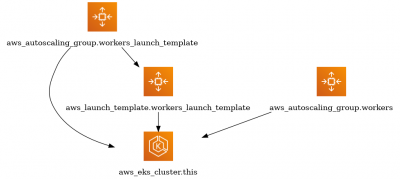

Example of EKS module | |||

:[[File:ClipCapIt-210716-090202.PNG|400px]] | |||

= [https://github.com/Pluralith/pluralith-cli/releases Pluralith] =
<source lang=bash>
# Install
VERSION=$(curl --silent "https://api.github.com/repos/Pluralith/pluralith-cli/releases/latest" | jq -r .tag_name); echo $VERSION
curl -L https://github.com/Pluralith/pluralith-cli/releases/download/${VERSION}/pluralith_cli_linux_amd64_${VERSION} -o pluralith_cli_linux_amd64_${VERSION}
sudo install pluralith_cli_linux_amd64_${VERSION} /usr/local/bin/pluralith

# Install pluralith-cli-graphing
VERSION=$(curl --silent "https://api.github.com/repos/Pluralith/pluralith-cli-graphing-release/releases/latest" | jq -r .tag_name | tr -d v); echo $VERSION
curl -L https://github.com/Pluralith/pluralith-cli-graphing-release/releases/download/v${VERSION}/pluralith_cli_graphing_linux_amd64_${VERSION} -o pluralith_cli_graphing_linux_amd64_${VERSION}
sudo install pluralith_cli_graphing_linux_amd64_${VERSION} ~/Pluralith/bin/pluralith-cli-graphing

# Check versions
pluralith version
parsing response failed -> GetGitHubRelease: %!w(<nil>)
 _
|_)| _ _ |._|_|_
| ||_|| (_||| | | |
→ CLI Version: 0.2.2
→ Graph Module Version: 0.2.1

# Usage
pluralith login --api-key $PLURALITH_API_KEY
# Generate PDF graph locally
pluralith <terraform-root-folder> --var-file environments/dev.tfvars graph --local-only
</source>

= [https://github.com/flosell/iam-policy-json-to-terraform iam-policy-json-to-terraform] =
Convert an IAM policy in JSON format into a Terraform <code>aws_iam_policy_document</code>
<source lang=bash>
LATEST=$(curl --silent "https://api.github.com/repos/flosell/iam-policy-json-to-terraform/releases/latest" | jq -r .tag_name); echo $LATEST
sudo curl -L https://github.com/flosell/iam-policy-json-to-terraform/releases/download/${LATEST}/iam-policy-json-to-terraform_amd64 -o /usr/local/bin/iam-policy-json-to-terraform
sudo chmod +x /usr/local/bin/iam-policy-json-to-terraform

# Usage:
iam-policy-json-to-terraform < some-policy.json
</source>
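The tool prints <code>data "aws_iam_policy_document"</code> blocks. For the first statement of the S3 policy in the template section above, the result should look roughly like the block below (exact output, such as the block label or an empty <code>sid</code>, may differ between versions); the document is then attached through its <code>json</code> attribute. The <code>aws_iam_policy</code> resource and its name are hypothetical:
<source lang=terraform>
data "aws_iam_policy_document" "policy" {
  statement {
    sid       = ""
    effect    = "Allow"
    resources = ["*"]
    actions   = ["s3:ListAllMyBuckets"]
  }
}

resource "aws_iam_policy" "example" {
  name   = "example" # hypothetical policy name
  policy = data.aws_iam_policy_document.policy.json
}
</source>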

= [https://github.com/hieven/terraform-visual terraform-visual] =
<source lang=bash>
# Install
sudo apt-get update
sudo apt install nodejs npm
sudo npm install -g @terraform-visual/cli

# Usage
terraform plan -out=plan.out              # Run plan and output as a file
terraform show -json plan.out > plan.json # Read plan file and output it in JSON format
terraform-visual --plan plan.json
firefox terraform-visual-report/index.html
</source>

= [https://github.com/cloudskiff/driftctl driftctl] =
Measures infrastructure as code coverage, and tracks infrastructure drift.

IaC: Terraform; cloud providers: AWS, GitHub (Azure and GCP on the roadmap for 2021). Spot discrepancies as they happen: driftctl is a free and open-source CLI that warns of infrastructure drifts and fills in the missing piece in your DevSecOps toolbox.

;Features [https://docs.driftctl.com/ docs]
* Scan cloud provider and map resources with IaC code
* Analyze diffs, and warn about drift and unwanted unmanaged resources
* Allow users to ignore resources
* Multiple output formats

Install
<source lang=bash>
curl -L https://github.com/snyk/driftctl/releases/latest/download/driftctl_linux_amd64 -o driftctl
sudo install ./driftctl /usr/local/bin/driftctl
driftctl version
</source>
[https://docs.driftctl.com/0.39.0/usage/cmd/scan-usage Detect drift on GCP]
<source lang=bash>
source <(driftctl completion bash)
export GOOGLE_APPLICATION_CREDENTIALS=$HOME/.config/gcloud/application_default_credentials.json
export CLOUDSDK_CORE_PROJECT=<myproject_id>
driftctl scan --to="gcp+tf"
driftctl scan --to="gcp+tf" --deep --output html://output.html
driftctl scan --to="gcp+tf" --from tfstate+gs://my-bucket/path/to/state.tfstate # Use this when working with workspaces
</source>

= [https://github.com/infracost/infracost infracost] =
Infracost shows cloud cost estimates for infrastructure-as-code projects such as Terraform.
<source lang=bash>
# Download the CLI based on your OS/arch and put it in /usr/local/bin
curl -fsSL https://raw.githubusercontent.com/infracost/infracost/master/scripts/install.sh | sh

# Register for a free API key
infracost register # The key is saved in ~/.config/infracost/credentials.yml.

# Show cost breakdown for a Terraform directory
infracost breakdown --path terraform_nlb_static_eips

# Show cost breakdown based on Terraform plan
cd path/to/src_code
terraform init
terraform plan -out tfplan.binary
terraform show -json tfplan.binary > plan.json

## run via binary
infracost breakdown --path plan.json
infracost breakdown --path plan.json --show-skipped --format html > /vagrant/infracost.html
infracost diff --path plan.json

## run via Docker
docker run -it --rm --volume "$(pwd):/src" -u $(id -u) -e INFRACOST_API_KEY=$INFRACOST_API_KEY infracost/infracost:0.9.15 breakdown --path /src/plan.json
docker run -it --rm --volume "$(pwd):/src" -u $(id -u) -e INFRACOST_API_KEY=$INFRACOST_API_KEY infracost/infracost:0.9.15 diff --path /src/plan.json
</source>

Example output
<source>
## Cost breakdown
docker run -it --rm --volume "$(pwd):/src" -u $(id -u) -e INFRACOST_API_KEY=$INFRACOST_API_KEY infracost/infracost:0.9.15 breakdown --path /src/plan.json
Detected Terraform plan JSON file at /src/plan.json
✔ Calculating monthly cost estimate

Project: /src/plan.json

 Name                                                          Monthly Qty  Unit   Monthly Cost

 module.gke.google_container_cluster.primary
 ├─ Cluster management fee                                             730  hours        $73.00
 └─ default_pool
    ├─ Instance usage (Linux/UNIX, on-demand, e2-medium)             6,570  hours       $242.16
    └─ Standard provisioned storage (pd-standard)                      900  GiB          $36.00

 module.gke.google_container_node_pool.pools["default-node-pool"]
 ├─ Instance usage (Linux/UNIX, on-demand, e2-medium)                6,570  hours       $242.16
 └─ Standard provisioned storage (pd-standard)                         900  GiB          $36.00

 OVERALL TOTAL                                                                          $629.31
──────────────────────────────────
11 cloud resources were detected, rerun with --show-skipped to see details:
∙ 2 were estimated, 2 include usage-based costs, see https://infracost.io/usage-file
∙ 9 were free

## Cost difference
docker run -it --rm --volume "$(pwd):/src" -u $(id -u) -e INFRACOST_API_KEY=$INFRACOST_API_KEY infracost/infracost:0.9.15 diff --path /src/plan.json
Detected Terraform plan JSON file at /src/plan.json
✔ Calculating monthly cost estimate

Project: /src/plan.json

+ module.gke.google_container_cluster.primary
  +$351
    + Cluster management fee
      +$73.00
    + default_pool
        + Instance usage (Linux/UNIX, on-demand, e2-medium)
          +$242
        + Standard provisioned storage (pd-standard)
          +$36.00
    + node_pool[0]
        + Instance usage (Linux/UNIX, on-demand, e2-medium)
          $0.00
        + Standard provisioned storage (pd-standard)
          $0.00

+ module.gke.google_container_node_pool.pools["default-node-pool"]
  +$278
    + Instance usage (Linux/UNIX, on-demand, e2-medium)
      +$242
    + Standard provisioned storage (pd-standard)
      +$36.00

Monthly cost change for /src/plan.json
Amount:  +$629 ($0.00 → $629)
──────────────────────────────────
Key: ~ changed, + added, - removed

11 cloud resources were detected, rerun with --show-skipped to see details:
∙ 2 were estimated, 2 include usage-based costs, see https://infracost.io/usage-file
∙ 9 were free
</source>

Resources:
* DockerHub: https://hub.docker.com/r/infracost/infracost/tags

= [https://tfautomv.dev/ tfautomv - Terraform refactor] =
Tfautomv writes <code>moved</code> blocks for you, so your refactoring is quicker and less error-prone.
<source lang=bash>
tfautomv -dry-run
tfautomv -show-analysis
</source>
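The <code>moved</code> blocks that tfautomv generates use Terraform's own refactoring syntax (Terraform 1.1+). A sketch of one such block, assuming a hypothetical instance that was moved into a module:
<source lang=terraform>
# Tells Terraform that the existing object now lives at the new address,
# so the plan shows a move instead of destroy + create
moved {
  from = aws_instance.web
  to   = module.web.aws_instance.this
}
</source>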

= [https://www.davidc.net/sites/default/subnets/subnets.html?network=192.168.0.0&mask=22&division=19.3d431 Subnetting] =
Very useful page for subnetting: https://www.davidc.net/sites/default/subnets/subnets.html
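Inside Terraform the same calculations can be done with the built-in <code>cidrsubnet</code> and <code>cidrsubnets</code> functions. A sketch splitting the /22 network from the link above into four /24 subnets; the comments show the values these calls should return:
<source lang=terraform>
locals {
  base_cidr = "192.168.0.0/22"

  # cidrsubnet(prefix, newbits, netnum): 2 extra bits turn the /22 into /24s, netnum picks which one
  first_subnet = cidrsubnet(local.base_cidr, 2, 0) # "192.168.0.0/24"
  last_subnet  = cidrsubnet(local.base_cidr, 2, 3) # "192.168.3.0/24"

  # cidrsubnets(prefix, newbits...) allocates consecutive subnets in one call
  # ["192.168.0.0/24", "192.168.1.0/24", "192.168.2.0/24", "192.168.3.0/24"]
  all_subnets = cidrsubnets(local.base_cidr, 2, 2, 2, 2)
}
</source>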

= Resources =
*[https://discuss.hashicorp.com/u/apparentlymart apparentlymart] The Hero! discuss.hashicorp.com
*[https://blog.gruntwork.io/a-comprehensive-guide-to-terraform-b3d32832baca Comprehensive-guide-to-terraform] gruntwork.io
*[https://github.com/antonbabenko/terraform-best-practices Terraform good practices] naming conventions, etc.
*[https://www.runatlantis.io/ Atlantis] Terraform pull request automation: listens for webhooks from GitHub/GitLab/Bitbucket/Azure DevOps, runs terraform commands remotely and comments back with their output.

Revision as of 17:36, 29 January 2024

This article is about utilising a tool from HashiCorp called Terraform to build infrastructure as code (IaC).

Note: most of the paragraphs have examples in the Terraform syntax prior to version 0.12, which uses HCLv1. HCLv2 was introduced with v0.12+ and contains significant syntax and capability improvements.

Install terraform

wget https://releases.hashicorp.com/terraform/0.11.11/terraform_0.11.11_linux_amd64.zip
unzip terraform_0.11.11_linux_amd64.zip
sudo mv ./terraform /usr/local/bin
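The download URL follows a fixed pattern, so a small helper can build it for any version, OS and architecture. This is a minimal sketch: tf_release_url is a hypothetical helper, and it assumes the terraform_<version>_<os>_<arch>.zip naming from the wget command above still holds for the release you need.

```bash
# tf_release_url: hypothetical helper that builds the download URL used above
# for any version (default OS/arch: linux/amd64).
tf_release_url() {
  local version="$1" os="${2:-linux}" arch="${3:-amd64}"
  printf 'https://releases.hashicorp.com/terraform/%s/terraform_%s_%s_%s.zip\n' \
    "$version" "$version" "$os" "$arch"
}

# Usage (needs network access, unzip and sudo):
#   url=$(tf_release_url 1.0.6)
#   wget "$url" && unzip "$(basename "$url")" && sudo mv ./terraform /usr/local/bin
```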

tfenv - manage multiple versions of Terraform

Install and usage

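git clone https://github.com/tfutils/tfenv.git ~/.tfenv
# single quotes: $HOME and $PATH are expanded when the shell starts, not now
echo '[ -d "$HOME/.tfenv" ] && export PATH="$PATH:$HOME/.tfenv/bin/"' >> ~/.bashrc # or ~/.bash_profile
# Usage (open a new shell or run: source ~/.bashrc)
tfenv install 1.0.6
tfenv use 1.0.6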
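tfenv can also take the version from a .terraform-version file, so a project can pin its Terraform version and tfenv install / tfenv use can then be run without a version argument. As far as I know, tfenv looks for that file in the current directory, then in its parents, then in $HOME. The sketch below only mimics that lookup for illustration; find_tf_version_file is a hypothetical helper, not part of tfenv.

```bash
# Pin the project's Terraform version:
#   echo "1.0.6" > .terraform-version
#
# find_tf_version_file: hypothetical helper that walks from a directory up to /
# and prints the first .terraform-version found, falling back to $HOME.
find_tf_version_file() {
  local dir
  dir=$(cd "${1:-.}" && pwd) || return 1
  while :; do
    if [ -f "$dir/.terraform-version" ]; then
      printf '%s\n' "$dir/.terraform-version"
      return 0
    fi
    [ "$dir" = "/" ] && break
    dir=$(dirname "$dir")
  done
  # Fall back to the user's home directory
  if [ -f "$HOME/.terraform-version" ]; then
    printf '%s\n' "$HOME/.terraform-version"
    return 0
  fi
  return 1
}
```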

IDE

For development I use:

- VSCode 1.41.1+ (for reference) with the following extensions:
  - Terraform Autocomplete by erd0s
  - Terraform by Mikael Olenfalk, with the Language Server enabled (open the command palette with Ctrl+Shift+P)

Basic configuration

When Terraform runs, it loads the .tf files that hold the configuration from the current working directory. The lookup is limited to that single flat directory: Terraform neither descends into subdirectories nor looks in parent directories. Therefore, if you want to reuse a common file, you need to create a symbolic link to it inside the directory that holds your .tf files.
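For example, to share one provider definition between several environment directories, keep it in a common directory and link it into each one. A minimal sketch; the common/ and dev/ layout, the file name provider.tf and the link_common_tf helper are all hypothetical.

```bash
# Hypothetical layout:
#   common/provider.tf   <- shared configuration
#   dev/main.tf          <- environment-specific configuration
# link_common_tf: hypothetical helper that links a shared file into an
# environment directory, using a relative link so the tree can be moved.
link_common_tf() {
  local env_dir="$1" shared_file="$2"
  ln -sf "../common/$shared_file" "$env_dir/$shared_file"
}

# Usage:
#   link_common_tf dev provider.tf
#   cd dev && terraform init
```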

$ vi example.tf
provider "aws" {
  access_key = "AK01234567890OGD6WGA"
  secret_key = "N8012345678905acCY6XIc1bYjsvvlXHUXMaxOzN"
  region     = "eu-west-1"
}

resource "aws_instance" "webserver" {
  ami           = "ami-405f7226"
  instance_type = "t2.nano"
}

Since version 0.10.x, major changes and features have been introduced, including splitting the providers out of the main binary: each provider is now a separate binary. See the example below for the Azure provider and other providers developed by HashiCorp.

Azure

Terraform credentials

export ARM_SUBSCRIPTION_ID="YOUR_SUBSCRIPTION_ID"

export ARM_TENANT_ID="TENANT_ID"

export ARM_CLIENT_ID="CLIENT_ID"

export ARM_CLIENT_SECRET="CLIENT_SECRET"

export TF_VAR_client_id=${ARM_CLIENT_ID}

export TF_VAR_client_secret=${ARM_CLIENT_SECRET}
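A missing variable usually only shows up as an authentication error during plan, so a quick pre-flight check can help. A minimal sketch; check_arm_env is a hypothetical helper and only checks that the variables are exported and non-empty, not that the values are valid.

```bash
# check_arm_env: hypothetical pre-flight check that fails if any of the Azure
# variables exported above is unset or empty (values are never printed).
check_arm_env() {
  local missing=0 name
  for name in ARM_SUBSCRIPTION_ID ARM_TENANT_ID ARM_CLIENT_ID ARM_CLIENT_SECRET; do
    # printenv only sees exported variables, which is also what Terraform sees
    if [ -z "$(printenv "$name")" ]; then
      echo "missing or empty: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_arm_env && terraform plan
```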

Example: how to source the credentials from Vault

export VAULT_CLIENT_ADDR=http://10.1.1.1:8200

export VAULT_TOKEN=11111111-1111-1111-1111-1111111111111

vault read -format=json -address=$VAULT_CLIENT_ADDR secret/azure/subscription | jq -r '.data | .subscription_id, .tenant_id'

vault read -format=json -address=$VAULT_CLIENT_ADDR secret/azure/${application} | jq -r '.data | .client_id, .client_secret'
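Instead of piping through jq, each value can be read with vault read -field=<name> and exported straight into the variables Terraform expects. A sketch that assumes the Vault paths and field names from the commands above; load_azure_creds is a hypothetical helper.

```bash
# load_azure_creds: hypothetical helper that exports the ARM_* variables from
# the Vault paths used above. Assumes VAULT_CLIENT_ADDR and VAULT_TOKEN are
# already exported; $1 is the application name (secret/azure/<app>).
load_azure_creds() {
  local app="$1" addr="$VAULT_CLIENT_ADDR"
  ARM_SUBSCRIPTION_ID=$(vault read -address="$addr" -field=subscription_id secret/azure/subscription) || return 1
  ARM_TENANT_ID=$(vault read -address="$addr" -field=tenant_id secret/azure/subscription) || return 1
  ARM_CLIENT_ID=$(vault read -address="$addr" -field=client_id "secret/azure/$app") || return 1
  ARM_CLIENT_SECRET=$(vault read -address="$addr" -field=client_secret "secret/azure/$app") || return 1
  export ARM_SUBSCRIPTION_ID ARM_TENANT_ID ARM_CLIENT_ID ARM_CLIENT_SECRET
  # Expose the client credentials as Terraform input variables as well
  export TF_VAR_client_id="$ARM_CLIENT_ID" TF_VAR_client_secret="$ARM_CLIENT_SECRET"
}

# Usage:
#   load_azure_creds "$application" && terraform plan
```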

Terraform providers, modules and backend config

$ vi providers.tf
provider "azurerm" {
  version         = "1.10.0"
  subscription_id = "${var.subscription_id}"
  tenant_id       = "${var.tenant_id}"
  client_id       = "${var.client_id}"
  client_secret   = "${var.client_secret}"
}

# HashiCorp special providers https://github.com/terraform-providers
provider "template" { version = "1.0.0" }
provider "external" { version = "1.0.0" }
provider "local"    { version = "1.1.0" }

terraform {
  backend "local" {}
}

AWS

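- References
  - S3 bucket for all accounts: https://www.padok.fr/en/blog/terraform-s3-bucket-aws
  - Multi account auth using aws profiles and provider "aws" {}: https://www.padok.fr/en/blog/authentication-aws-profiles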

Local state

Local state configuration

vi backend.tf
terraform {
  required_version = "= 0.12.29"
  backend "local" {}
}

Remote state (single) for multi account deployments

There are many combinations for setting up the backend and AWS credentials. It is important to understand that the terraform { backend {} } block does NOT use the provider "aws" {} configuration to access the state bucket; it uses only its own backend settings.

- Exporting credentials lets you work with assumed roles that differ between the backend block and the provider block.

- Alternatively, specify a different profile = in each block.

- Credentials

## a profile can be configured to assume roles in other accounts
#export AWS_PROFILE="piotr"

# Environment credentials for a user that can assume roles in other accounts, e.g.:
# | * arn:aws:iam::111111111111:role/terraform-s3state - save state in s3 bucket
# | * arn:aws:iam::222222222222:role/terraform-crossaccount-admin - deploy resources
export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE
export AWS_SECRET_ACCESS_KEY=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
export AWS_DEFAULT_REGION=us-east-1

# unset all AWS* variables if needed (bash indirect expansion lists their names)
unset ${!AWS@}
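The unset ${!AWS@} line relies on bash indirect expansion. To see which AWS_* variables are exported without echoing the secrets, or to clear them from a plain POSIX sh, a small helper can list just the names. A sketch; aws_env_names is a hypothetical helper and only sees exported variables.

```bash
# aws_env_names: hypothetical helper printing the names (never the values)
# of the exported AWS_* variables.
aws_env_names() {
  env | sed -n 's/^\(AWS_[A-Za-z0-9_]*\)=.*/\1/p' | sort
}

# Usage:
#   aws_env_names                                   # what is set right now?
#   for v in $(aws_env_names); do unset "$v"; done  # POSIX sh alternative
```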

terraform {}

terraform {
  required_version = "= 0.12.29"

  backend "s3" {
    # A backend block cannot use variables: use literal values here, or leave
    # them out and pass them at 'terraform init' time with -backend-config
    bucket   = "tfstate-<PROJECT>-<ACCOUNT_ID>"   # must exist beforehand
    key      = "terraform/aws/<PROJECT>/tfstate"  # this could be much simpler when working with terraform workspaces
    region   = "<REGION>"
    role_arn = "arn:aws:iam::111111111111:role/terraform-s3state" # role to assume in the infra account where the S3 state bucket exists
    # profile = "dev-us" # we use 'role_arn' but could specify an AWS profile instead
  }
}
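Terraform does not allow variables inside a backend block, so per-project values are usually supplied at init time through partial configuration (terraform init -backend-config="KEY=VALUE"). A sketch that produces those flags for the bucket/key naming scheme used above; backend_config_args is a hypothetical helper, and the project, account ID and region are placeholders.

```bash
# backend_config_args: hypothetical helper printing -backend-config flags that
# follow the bucket/key naming used in the backend "s3" block above.
# $1 project, $2 account id, $3 region
backend_config_args() {
  local project="$1" account_id="$2" region="$3"
  printf '%s\n' \
    "-backend-config=bucket=tfstate-${project}-${account_id}" \
    "-backend-config=key=terraform/aws/${project}/tfstate" \
    "-backend-config=region=${region}"
}

# Usage (one flag per line; safe to word-split as long as values have no spaces):
#   terraform init $(backend_config_args myproject 111111111111 eu-west-1)
```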

provider {}

provider "aws" {
  ## We could use profiles, but instead we use the 'assume_role' option. On your laptop
  ## the profile would be your own credentials profile, e.g. 'piotr-xaccount-admin'
  #profile = "terraform-crossaccount-admin"
  #shared_credentials_file = "/home/piotr/.aws/credentials"

  # assume_role is a block (no '=') and accepts a single role_arn
  assume_role {
    # role_arn = "arn:aws:iam::<MY_PROD_ACCOUNT>:role/terraform-crossaccount-admin" # hard-coded target account
    role_arn = "arn:aws:iam::${var.aws_account}:role/terraform-crossaccount-admin" # or use a variable
  }

  region              = var.aws_region
  allowed_account_ids = ["111111111111", "222222222222"] # safety net
}

- Workspace configuration

Dev configuration in dev.tfvars

aws_region  = "eu-west-1"
aws_account = "<MY_DEV_ACCOUNT>"

Prod configuration in prod.tfvars

aws_region  = "eu-west-1"
aws_account = "<MY_PROD_ACCOUNT>"

- Workspaces

terraform init
terraform workspace new dev
terraform workspace new prod

- Apply on one account

terraform workspace select dev
terraform apply --var-file $(terraform workspace show).tfvars
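The select-then-apply steps can be wrapped so the active workspace and its .tfvars file always match. A minimal sketch; tf_apply_ws is a hypothetical helper that assumes one <workspace>.tfvars file per workspace in the current directory, as with dev.tfvars and prod.tfvars above.

```bash
# tf_apply_ws: hypothetical wrapper that selects a workspace and applies it with
# the matching <workspace>.tfvars file. Extra arguments go to terraform apply.
tf_apply_ws() {
  if [ "$#" -lt 1 ]; then
    echo "usage: tf_apply_ws <workspace> [terraform apply args...]" >&2
    return 2
  fi
  local ws="$1"
  shift
  if [ ! -f "${ws}.tfvars" ]; then
    echo "no ${ws}.tfvars in $(pwd)" >&2
    return 1
  fi
  terraform workspace select "$ws" || return 1
  terraform apply -var-file="${ws}.tfvars" "$@"
}

# Usage:
#   tf_apply_ws dev
#   tf_apply_ws prod -auto-approve
```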

GCP Google Cloud Platform

# Generate default app credentials
gcloud auth application-default login
Go to the following link in your browser:
    https://accounts.google.com/o/oauth2/auth?response_type=code&client_id=****_challenge_method=S256
Enter verification code: ***
Credentials saved to file: [/home/piotr/.config/gcloud/application_default_credentials.json]
These credentials will be used by any library that requests Application Default Credentials (ADC).
Quota project "test-devops-candidate1" was added to ADC which can be used by Google client libraries for billing and quota. Note that some services may still bill the project owning the resource
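The Google provider falls back to Application Default Credentials when no credentials are set in the provider block. Below is a sketch of which file would be picked up on Linux/macOS, assuming the usual lookup order (the GOOGLE_APPLICATION_CREDENTIALS variable first, then the gcloud file shown above). adc_file is a hypothetical helper; it ignores CLOUDSDK_CONFIG and the metadata-server case used on GCE/GKE.

```bash
# adc_file: hypothetical helper printing the credentials file that Application
# Default Credentials would use, or failing if none is found.
adc_file() {
  if [ -n "${GOOGLE_APPLICATION_CREDENTIALS:-}" ]; then
    printf '%s\n' "$GOOGLE_APPLICATION_CREDENTIALS"
    return 0
  fi
  local f="$HOME/.config/gcloud/application_default_credentials.json"
  if [ -f "$f" ]; then
    printf '%s\n' "$f"
    return 0
  fi
  echo "no ADC file; run: gcloud auth application-default login" >&2
  return 1
}
```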

Plan / apply

Meaning of markings in a plan output

For now, here they are, until we get it included in the docs better:

+ create
- destroy
-/+ replace (destroy and then create, or vice-versa if create-before-destroy is used)
~ update in-place
<= applies only to data resources. You won't see this one often, because whenever possible Terraform does reads during the refresh phase. You will see it, though, if you have a data resource whose configuration depends on something that we don't know yet, such as an attribute of a resource that isn't yet created. In that case, it's necessary to wait until apply time to find out the final configuration before doing the read.
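Besides the per-resource markers, a plan ends with a summary line such as "Plan: 1 to add, 0 to change, 0 to destroy." Below is a sketch that extracts the three counts, for example to stop a CI job when something would be destroyed. plan_counts is a hypothetical helper; it assumes plain output (terraform plan -no-color) and prints nothing when the plan has no changes.

```bash
# plan_counts: hypothetical helper reading `terraform plan -no-color` output on
# stdin and printing "add change destroy" taken from the summary line.
plan_counts() {
  sed -n 's/^Plan: \([0-9][0-9]*\) to add, \([0-9][0-9]*\) to change, \([0-9][0-9]*\) to destroy\..*/\1 \2 \3/p'
}

# Usage: fail if the plan would destroy anything
#   terraform plan -no-color > plan.txt
#   set -- $(plan_counts < plan.txt)
#   [ "${3:-0}" -eq 0 ] || { echo "plan destroys $3 resource(s)" >&2; exit 1; }
```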

Plan and apply

When the apply stage runs for the first time, it creates terraform.tfstate once all changes are done. This file should not be modified manually. Terraform uses it to record what already exists in the cloud, so the next time apply runs it compares against this file and executes only the necessary changes.

| terraform plan | terraform apply |

|---|---|

$ terraform plan Refreshing Terraform state in-memory prior to plan... The refreshed state will be used to calculate this plan, but will not be persisted to local or remote state storage. <...> + aws_instance.webserver ami: "ami-405f7226" associate_public_ip_address: "<computed>" availability_zone: "<computed>" ebs_block_device.#: "<computed>" ephemeral_block_device.#: "<computed>" instance_state: "<computed>" instance_type: "t2.nano" ipv6_addresses.#: "<computed>" key_name: "<computed>" network_interface_id: "<computed>" placement_group: "<computed>" private_dns: "<computed>" private_ip: "<computed>" public_dns: "<computed>" public_ip: "<computed>" root_block_device.#: "<computed>" security_groups.#: "<computed>" source_dest_check: "true" subnet_id: "<computed>" tenancy: "<computed>" vpc_security_group_ids.#: "<computed>" |