Kubernetes/Security and RBAC


== Resources ==

*[https://kubernetes.io/docs/reference/access-authn-authz/service-accounts-admin/ Managing Service Accounts]

*[https://kubernetes.io/docs/tasks/configure-pod-container/configure-service-account/ Configure Service Accounts for Pods]

* [https://kubernetes.io/docs/concepts/configuration/secret/ Secrets] K8s docs

= [https://kubernetes.io/docs/reference/access-authn-authz/admission-controllers/#podsecuritypolicy Pod Security Policy] =

The Kubernetes PodSecurityPolicy admission controller validates pod creation and update requests against a set of rules. Apply your policies to the cluster before enabling the admission controller; otherwise no pods can be created. Note that PodSecurityPolicy was deprecated in Kubernetes v1.21 and removed in v1.25.

Read more about [https://docs.aws.amazon.com/eks/latest/userguide/pod-security-policy.html AWS EKS PSP]

Commands tested with:

<source lang=bash>
$ kubectl version --short
Client Version: v1.15.0
Server Version: v1.14.8-eks-b8860f
</source>

Default PSP in EKS:

<source lang=yaml>
kubectl get psp eks.privileged -oyaml --export=true
Flag --export has been deprecated, This flag is deprecated and will be removed in future.
apiVersion: extensions/v1beta1
kind: PodSecurityPolicy
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"policy/v1beta1","kind":"PodSecurityPolicy","metadata":{"annotations":{"kubernetes.io/description":"privileged allows full unrestricted access to pod features, as if the PodSecurityPolicy controller was not enabled.","seccomp.security.alpha.kubernetes.io/allowedProfileNames":"*"},"labels":{"eks.amazonaws.com/component":"pod-security-policy","kubernetes.io/cluster-service":"true"},"name":"eks.privileged"},"spec":{"allowPrivilegeEscalation":true,"allowedCapabilities":["*"],"fsGroup":{"rule":"RunAsAny"},"hostIPC":true,"hostNetwork":true,"hostPID":true,"hostPorts":[{"max":65535,"min":0}],"privileged":true,"readOnlyRootFilesystem":false,"runAsUser":{"rule":"RunAsAny"},"seLinux":{"rule":"RunAsAny"},"supplementalGroups":{"rule":"RunAsAny"},"volumes":["*"]}}
    kubernetes.io/description: privileged allows full unrestricted access to pod features,
      as if the PodSecurityPolicy controller was not enabled.
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: '*'
  creationTimestamp: null
  labels:
    eks.amazonaws.com/component: pod-security-policy
    kubernetes.io/cluster-service: "true"
  name: eks.privileged
  selfLink: /apis/extensions/v1beta1/podsecuritypolicies/eks.privileged
spec:
  allowPrivilegeEscalation: true
  allowedCapabilities:
  - '*'
  fsGroup:
    rule: RunAsAny
  hostIPC: true
  hostNetwork: true
  hostPID: true
  hostPorts:
  - max: 65535
    min: 0
  privileged: true
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - '*'
</source>
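To make the boolean fields of a policy such as eks.privileged more concrete, here is a simplified Python model of the kind of check the admission controller performs. Assumptions: pods and policies are plain dicts, only `privileged`, `hostNetwork`, `hostPID` and `hostIPC` are compared, and `psp_violations` is a hypothetical helper. The real controller evaluates many more fields, chooses among the policies the requester is allowed to `use`, and can also mutate pods.

```python
from typing import Any

# Simplified: only these boolean PSP fields are modelled here.
BOOLEAN_FIELDS = ("privileged", "hostNetwork", "hostPID", "hostIPC")


def requested(pod_spec: dict[str, Any], field: str) -> bool:
    """True if the pod spec asks for the given privilege/host feature."""
    if field == "privileged":  # requested per container, in securityContext
        return any(
            c.get("securityContext", {}).get("privileged", False)
            for c in pod_spec.get("containers", [])
        )
    return bool(pod_spec.get(field, False))  # pod-level flags


def psp_violations(pod_spec: dict[str, Any], psp_spec: dict[str, Any]) -> list[str]:
    """Hypothetical helper: fields the pod requests but the policy does not allow."""
    return [f for f in BOOLEAN_FIELDS
            if requested(pod_spec, f) and not psp_spec.get(f, False)]


restricted = {"privileged": False, "hostNetwork": False}
eks_privileged = {"privileged": True, "hostNetwork": True, "hostPID": True, "hostIPC": True}
pod = {"hostNetwork": True,
       "containers": [{"name": "c", "securityContext": {"privileged": True}}]}

print(psp_violations(pod, restricted))      # ['privileged', 'hostNetwork']
print(psp_violations(pod, eks_privileged))  # []
```

This also shows why eks.privileged behaves "as if the PodSecurityPolicy controller was not enabled": it allows every field, so nothing can violate it.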

;Verify

List service accounts, roles and cluster roles:

<source lang=bash>
kubectl get serviceaccounts -A
kubectl get role -A
kubectl get clusterrole
</source>

<code>kubectl</code> lets you impersonate other users with <code>--as</code> (and groups with <code>--as-group</code>) when performing operations. Combined with <code>kubectl auth can-i</code>, this also lets you inspect permissions.

<source lang=bash>
$ kubectl auth can-i use deployment.apps/coredns
yes

# Impersonating a service account: --as=system:serviceaccount:<namespace>:<serviceaccount-name>
# e.g. --as=system:serviceaccount:default:default
kubectl auth can-i use psp/eks.privileged --as-group=system:authenticated --as=any-user
Warning: resource 'podsecuritypolicies' is not namespace scoped in group 'extensions'
no

kubectl auth can-i list secrets --namespace dev --as dave

# drain a node (nodes are not namespaced)
# note: 'drain' is not a Kubernetes API verb, so only a wildcard ('*') verb rule can match it
kubectl auth can-i drain node/ip-10-35-65-154.eu-west-1.compute.internal --as-group=system:authenticated --as=any-user
Warning: resource 'nodes' is not namespace scoped
no
kubectl auth can-i drain node/ip-10-35-65-154.eu-west-1.compute.internal
Warning: resource 'nodes' is not namespace scoped
yes

# delete a namespaced resource
kubectl auth can-i delete svc/kube-dns -n kube-system
yes
kubectl auth can-i delete svc/kube-dns -n kube-system --as-group=system:authenticated --as=any-user
no
</source>
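Behind `kubectl auth can-i` is an authorization review object that the API server answers with `status.allowed`. kubectl itself uses the closely related SelfSubjectAccessReview together with impersonation. The Python sketch below only builds a SubjectAccessReview body (`authorization.k8s.io/v1`) for the same question. `subject_access_review` is a hypothetical helper. The body would be POSTed to `/apis/authorization.k8s.io/v1/subjectaccessreviews` by a client that is allowed to create that resource.

```python
import json
from typing import Optional


def subject_access_review(user: str, verb: str, resource: str, *, group: str = "",
                          namespace: Optional[str] = None, name: Optional[str] = None,
                          groups: Optional[list] = None) -> dict:
    """Hypothetical helper: may `user` perform `verb` on `resource`?"""
    attrs = {"verb": verb, "group": group, "resource": resource}
    if namespace is not None:  # leave out for cluster-scoped resources such as nodes
        attrs["namespace"] = namespace
    if name is not None:       # a specific object, e.g. one node or one service
        attrs["name"] = name
    spec = {"user": user, "resourceAttributes": attrs}
    if groups:
        spec["groups"] = list(groups)
    return {"apiVersion": "authorization.k8s.io/v1",
            "kind": "SubjectAccessReview",
            "spec": spec}


# Roughly the question behind `kubectl auth can-i list secrets --namespace dev --as dave`
print(json.dumps(subject_access_review("dave", "list", "secrets", namespace="dev"), indent=2))
```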


Latest revision as of 13:41, 21 December 2021

API Server and Role-Based Access Control

For RBAC terminology, see AWS/IAM_Policy#AWS_policies,_in_my_own_words..

Once the API server has determined who you are (whether a pod or a user), the authorization is handled by RBAC.

To prevent unauthorized users from modifying the cluster state, RBAC lets you define roles and role bindings for a user. Each pod runs as a service account, which determines how much control the pod has over the cluster state. For example, the default service account does not allow you to list the services in a namespace.

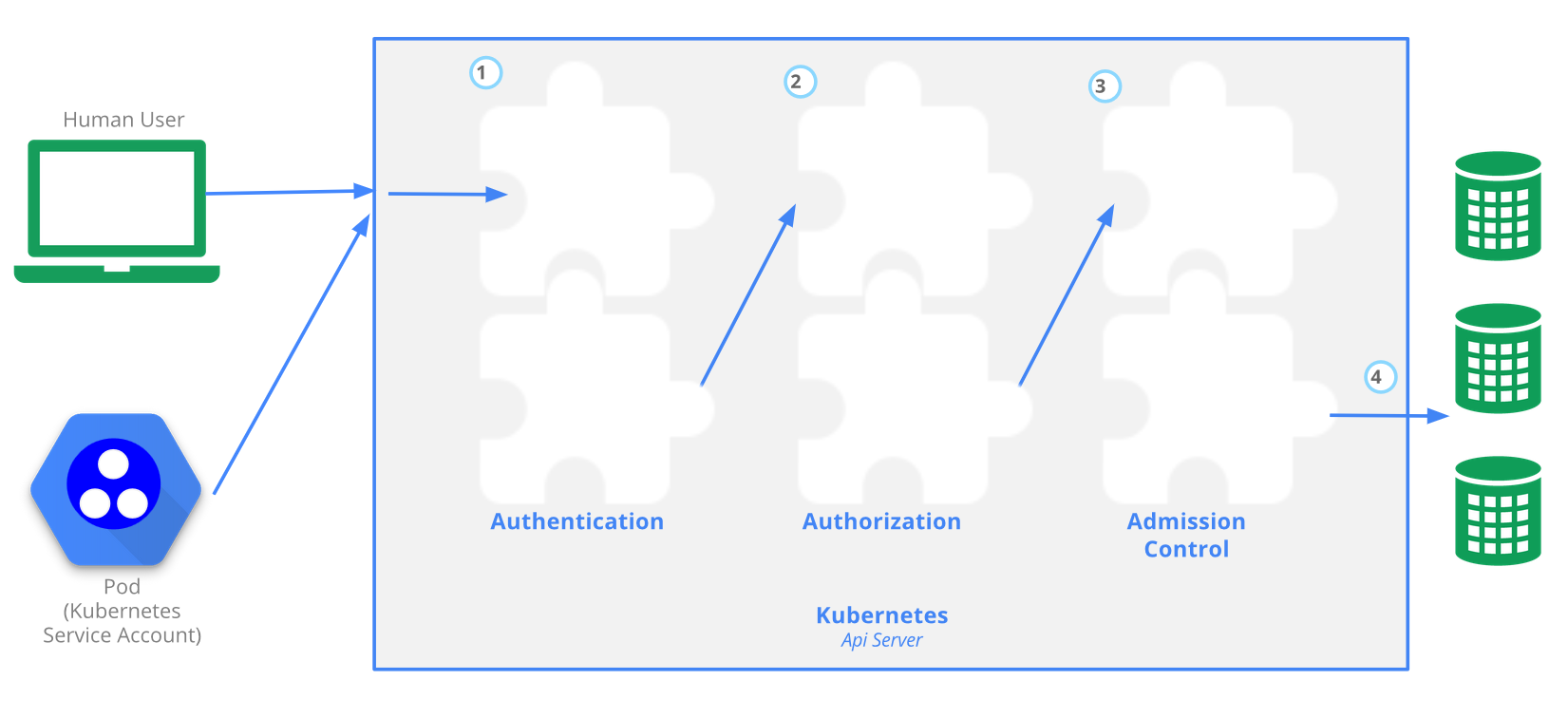

The Kubernetes API server provides a CRUD (Create, Read, Update, Delete) interface for interacting with the cluster state over a RESTful API. On this page, API calls come from two kinds of clients:

- kubectl (users)

- pods (applications, through their service account)

Every request goes through a four-stage process:

- Authentication

- Authorization

- Admission

- Persisting the resulting state change to the etcd database

Example admission plugin:

- the ServiceAccount plugin assigns the default service account to pods that don't explicitly specify one
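The four stages can be pictured as a chain of checks that a request must pass before anything is stored. The Python sketch below is a toy model, not API server code. The `Request` type, the token and permission tables, and the dict `store` standing in for etcd are all hypothetical. Only the ServiceAccount admission behaviour described above is imitated.

```python
from dataclasses import dataclass, field
from typing import Optional

# Toy model only: these tables and the dict "store" are hypothetical stand-ins.
TOKENS = {"t-123": "system:serviceaccount:rbac:default"}             # token -> identity
PERMISSIONS = {("system:serviceaccount:rbac:default", "create", "pods")}
store: dict = {}                                                       # stands in for etcd


@dataclass
class Request:
    token: str
    verb: str
    resource: str
    namespace: str
    body: dict = field(default_factory=dict)
    user: Optional[str] = None


def authenticate(req: Request) -> None:
    """Stage 1: who is calling?"""
    req.user = TOKENS.get(req.token)
    if req.user is None:
        raise PermissionError("401 Unauthorized")


def authorize(req: Request) -> None:
    """Stage 2: may this identity perform this verb on this resource?"""
    if (req.user, req.verb, req.resource) not in PERMISSIONS:
        raise PermissionError("403 Forbidden")


def admit(req: Request) -> None:
    """Stage 3: admission may mutate (or reject) the object; here it imitates
    the ServiceAccount plugin defaulting spec.serviceAccountName."""
    if req.resource == "pods":
        req.body.setdefault("spec", {}).setdefault("serviceAccountName", "default")


def handle(req: Request) -> dict:
    for stage in (authenticate, authorize, admit):
        stage(req)  # any stage can stop the request by raising
    key = f"/registry/{req.resource}/{req.namespace}/{req.body['metadata']['name']}"
    store[key] = req.body  # stage 4: persist only after every check passed
    return req.body


pod = handle(Request("t-123", "create", "pods", "rbac", {"metadata": {"name": "apitest"}}))
print(pod["spec"]["serviceAccountName"])  # default
```

The ordering is the point of the model: a request rejected by authentication or authorization never reaches admission, and nothing is written until all checks pass.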

RBAC is configured through four resources, split into two scopes:

| Namespaced resources | Cluster-level resources | Purpose |
|---|---|---|
| Role | ClusterRole | defines what can be done |
| RoleBinding | ClusterRoleBinding | defines who can do it |
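A Role (or ClusterRole) is a list of rules. A request is allowed if any rule matches its API group, resource and verb, because RBAC only grants permissions and has no deny rules. The Python sketch below models that matching for the `service-reader` rules used further down this page. It is a simplification that ignores resourceNames, subresources and non-resource URLs, and `rule_matches`/`allowed` are hypothetical helper names.

```python
def _matches(values: list, wanted: str) -> bool:
    """A rule field matches if it lists the wanted value or the '*' wildcard."""
    return "*" in values or wanted in values


def rule_matches(rule: dict, api_group: str, resource: str, verb: str) -> bool:
    """Hypothetical helper: does one RBAC rule cover this request?"""
    return (_matches(rule["apiGroups"], api_group)
            and _matches(rule["resources"], resource)
            and _matches(rule["verbs"], verb))


def allowed(rules: list, api_group: str, resource: str, verb: str) -> bool:
    """RBAC is purely additive: a request is allowed if any rule matches."""
    return any(rule_matches(r, api_group, resource, verb) for r in rules)


# The rules of the 'service-reader' Role ("" is the core API group).
service_reader = [{"apiGroups": [""], "resources": ["services"], "verbs": ["get", "list"]}]

print(allowed(service_reader, "", "services", "list"))         # True
print(allowed(service_reader, "", "services", "delete"))       # False
print(allowed(service_reader, "apps", "deployments", "list"))  # False
```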

When a pod is deployed without a service account in its manifest, the default service account is assigned. The service account represents the identity of the app running in the pod, and the token file mounted in the pod holds its authentication token. Let's create a namespace and a test pod, and try to list the available services.

kubectl create ns rbac
kubectl run apitest --image=nginx -n rbac   # create a test container to run API calls from

Each pod has a service account, and its API authentication token is mounted in the pod. When the pod makes an API call with that token, it assumes the service account's identity. You can inspect the token from inside the pod.

kubectl -n rbac exec -it apitest-<UID> -- /bin/sh   # connect to the container shell

# display the token and namespace used to authenticate to the API server from this pod
# (plain /bin/sh has no {a,b} brace expansion, so list both files)
root$ cat /var/run/secrets/kubernetes.io/serviceaccount/token /var/run/secrets/kubernetes.io/serviceaccount/namespace

# call the API server to list services in the 'rbac' namespace
# (requires a kubectl proxy listening on localhost:8001 inside the pod, as in the curlpod example further down)
root$ curl localhost:8001/api/v1/namespaces/rbac/services
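Without a proxy, a process in the pod can call the API server directly with the mounted token and CA bundle. This is a minimal Python sketch, assuming the standard in-cluster address `https://kubernetes.default.svc` and the default mount path shown above. `build_services_request` and `list_services_in_cluster` are hypothetical names. With only the default service account, the API server is expected to answer 403 Forbidden, which `urlopen` raises as an `HTTPError`.

```python
import ssl
import urllib.request
from pathlib import Path

SA_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")  # default mount path
API_SERVER = "https://kubernetes.default.svc"                   # in-cluster DNS name


def build_services_request(namespace: str, token: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a GET for the namespace's Services."""
    url = f"{API_SERVER}/api/v1/namespaces/{namespace}/services"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def list_services_in_cluster() -> bytes:
    """Only works inside a pod: uses the mounted token, namespace and CA bundle."""
    token = (SA_DIR / "token").read_text().strip()
    namespace = (SA_DIR / "namespace").read_text().strip()
    # ca.crt lets the pod verify the API server's TLS certificate
    ctx = ssl.create_default_context(cafile=str(SA_DIR / "ca.crt"))
    with urllib.request.urlopen(build_services_request(namespace, token), context=ctx) as resp:
        return resp.read()
```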

List all service accounts. A service account can only be used by pods in its own namespace.

kubectl get serviceaccounts -n rbac
kubectl get secrets
NAME                  TYPE                                  DATA   AGE
default-token-qqzc7   kubernetes.io/service-account-token   3      39h
kubectl get secrets default-token-qqzc7 -o yaml   # display the secret

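[User accounts vs service accounts](https://kubernetes.io/docs/reference/access-authn-authz/service-accounts-admin/#user-accounts-vs-service-accounts)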

Kubernetes distinguishes between the concept of a user account and a service account for a number of reasons:

- User accounts are for humans. Service accounts are for processes, which run in pods.

- User accounts are intended to be global. Names must be unique across all namespaces of a cluster, future user resource will not be namespaced. Service accounts are namespaced.

- Typically, a cluster’s User accounts might be synced from a corporate database, where new user account creation requires special privileges and is tied to complex business processes. Service account creation is intended to be more lightweight, allowing cluster users to create service accounts for specific tasks (i.e. the principle of least privilege).

- Auditing considerations for humans and service accounts may differ.

- A config bundle for a complex system may include a definition of various service accounts for components of that system. Because service accounts can be created ad-hoc and have namespaced names, such config is portable

[Users](https://stackoverflow.com/questions/42170380/how-to-add-users-to-kubernetes-kubectl)

Normal users are assumed to be managed by an outside, independent service: an admin distributing private keys, a user store like Keystone or Google Accounts, or even a file with a list of usernames and passwords. In this regard, Kubernetes does not have objects which represent normal user accounts. Regular users cannot be added to a cluster through an API call ([read more...](https://kubernetes.io/docs/admin/authentication/#users-in-kubernetes)).

ServiceAccount

ServiceAccounts allow processes in containers running in pods to access the Kubernetes API. Some applications need to interact with the cluster itself, and service accounts allow them to do so securely with limited permissions.

The API server first determines whether a request comes from a service account or from a normal user. Normal user accounts are managed outside the cluster, for example through private keys, a user store, or even a file with a list of user names and passwords. Kubernetes has no objects that represent normal user accounts, and normal users cannot be added to the cluster through an API call.
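When a pod authenticates with its service account token, the API server sees it as the user `system:serviceaccount:<namespace>:<name>`, a member of the groups `system:serviceaccounts` and `system:serviceaccounts:<namespace>` (plus `system:authenticated`, like every authenticated user). These are the names to use with `--as` and in binding subjects. A tiny Python helper (hypothetical name) that derives them:

```python
def service_account_identity(namespace: str, name: str) -> tuple[str, list[str]]:
    """Hypothetical helper: username and SA-specific groups of a service account."""
    user = f"system:serviceaccount:{namespace}:{name}"
    groups = ["system:serviceaccounts", f"system:serviceaccounts:{namespace}"]
    return user, groups


user, groups = service_account_identity("default", "jenkins")
print(user)    # system:serviceaccount:default:jenkins
print(groups)  # ['system:serviceaccounts', 'system:serviceaccounts:default']
```

For example, `kubectl auth can-i list services --as=system:serviceaccount:default:jenkins` checks what the jenkins service account may do.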

Create a ServiceAccount

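kubectl get serviceaccount   # 'sa' in short
kubectl create serviceaccount jenkins --dry-run=client -o yaml   # preview only, nothing is created (older kubectl: --dry-run)
kubectl create serviceaccount jenkins --save-config
kubectl get serviceaccount jenkins -o yaml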

apiVersion: v1
kind: ServiceAccount
metadata:
  creationTimestamp: "2019-08-05T07:10:40Z"
  name: jenkins
  namespace: default
  resourceVersion: "678"
  selfLink: /api/v1/namespaces/default/serviceaccounts/jenkins
  uid: 21cba4bb-b750-11e9-86b3-0800274143a9
secrets:
- name: jenkins-token-cspjm

Read ServiceAccount certificate and token

kubectl get secret jenkins-token-cspjm
NAME                  TYPE                                  DATA   AGE
jenkins-token-cspjm   kubernetes.io/service-account-token   3      73s
kubectl get secret jenkins-token-cspjm -o yaml

apiVersion: v1
data:   # all data values are base64 encoded
  ca.crt: LS0tLS1CR******UtLS0tLQo=
  namespace: c2VjcmV0cw==
  token: ZXlKaGJHY2******1RmN0VlcktRWEdQbVkyWVpxYnc=
kind: Secret
metadata:
  annotations:
    kubernetes.io/service-account.name: jenkins
    kubernetes.io/service-account.uid: 49f48d04-f43d-11e9-8421-0a39c67f4f42
  creationTimestamp: "2019-10-21T19:59:28Z"
  name: jenkins-token-cspjm   # the secret name
  namespace: default
  resourceVersion: "189166"
  selfLink: /api/v1/namespaces/secrets/secrets/jenkins-token-cspjm
  uid: 49f6ea57-f43d-11e9-8421-0a39c67f4f42
type: kubernetes.io/service-account-token
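Every value under `data` is base64 encoded. After decoding, the `token` is a JSON Web Token whose middle segment carries the identity claims. On Kubernetes v1.24+ such token Secrets are no longer created automatically. The Python sketch below decodes both, assuming you paste the unmasked values from `kubectl get secret ... -o yaml`. Both function names are hypothetical, and `jwt_claims` only decodes the payload: it does not verify the signature.

```python
import base64
import json


def decode_secret_data(data: dict[str, str]) -> dict[str, str]:
    """Hypothetical helper: base64-decode every value of a Secret's `data` (UTF-8 text assumed)."""
    return {k: base64.b64decode(v).decode("utf-8") for k, v in data.items()}


def jwt_claims(token: str) -> dict:
    """Hypothetical helper: decode (WITHOUT verifying) the payload segment of a JWT."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # JWT segments are unpadded base64url
    return json.loads(base64.urlsafe_b64decode(payload))


print(decode_secret_data({"namespace": "c2VjcmV0cw=="}))  # {'namespace': 'secrets'}
```

Decode the Secret first and pass the resulting `token` string to `jwt_claims`. Its `sub` claim is expected to be the service account's username, for example `system:serviceaccount:default:jenkins`.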

Assign ServiceAccount to a pod

apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  serviceAccountName: jenkins   # <- ServiceAccount
  containers:
  - image: busybox
    name: busybox
    command: ["/bin/sleep"]
    args: ["3600"]
    imagePullPolicy: IfNotPresent
  restartPolicy: Always

Resources

Create Administrative account

This is a process of setting up a new remote administrator.

kubectl.exe config set-credentials piotr --username=piotr --password=password

# a new entry has been added to ~/.kube/config:
users:
- name: user1
...
- name: piotr
  user:
    password: password
    username: piotr

# create a clusterrolebinding for anonymous users (not recommended: any unauthenticated request gets cluster-admin)
kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous
clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

# copy the cluster CA certificate (ca.crt) from the control-plane node
laptop$ scp ubuntu@k8s-cluster.acme.com:/etc/kubernetes/pki/ca.crt .

# set the cluster entry in kubeconfig
kubectl config set-cluster kubernetes --server=https://k8s-cluster.acme.com:6443 --certificate-authority=ca.crt --embed-certs=true

# create a context
kubectl config set-context kubernetes --cluster=kubernetes --user=piotr --namespace=default

# make it the current context
kubectl config use-context kubernetes
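The `kubectl config set-*` and `use-context` commands above only edit the kubeconfig file. The Python sketch below builds the resulting structure, assuming the same names (cluster `kubernetes`, user `piotr`, context `kubernetes`). `build_kubeconfig` is a hypothetical helper. The CA is embedded base64-encoded under `certificate-authority-data`, as `--embed-certs=true` does, and the result is printed as JSON for brevity.

```python
import base64
import json


def build_kubeconfig(server: str, ca_pem: bytes, user: str, password: str,
                     namespace: str = "default") -> dict:
    """Hypothetical helper mirroring set-cluster / set-credentials / set-context / use-context."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": "kubernetes", "cluster": {
            "server": server,
            # --embed-certs=true stores the CA file's content, base64 encoded
            "certificate-authority-data": base64.b64encode(ca_pem).decode("ascii"),
        }}],
        "users": [{"name": user, "user": {"username": user, "password": password}}],
        "contexts": [{"name": "kubernetes", "context": {
            "cluster": "kubernetes", "user": user, "namespace": namespace}}],
        "current-context": "kubernetes",
    }


# Placeholder CA bytes; in practice read the ca.crt copied with scp.
cfg = build_kubeconfig("https://k8s-cluster.acme.com:6443",
                       b"-----BEGIN CERTIFICATE-----\n...", "piotr", "password")
print(json.dumps(cfg, indent=2))
```

Note that static username/password (basic) authentication was removed from the API server in Kubernetes v1.19. On current clusters, the `user` entry would hold a client certificate or token instead.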

Create a role (namespaced permissions)

The role describes what actions can be performed. This role allows listing services in the web namespace.

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: web   # the namespace must exist beforehand
  name: service-reader
rules:
- apiGroups: [""]   # "" indicates the core API group
  verbs: ["get", "list"]
  resources: ["services"]

Note: The core (also called legacy) group indicated by apiGroups: [""] is found at REST path /api/v1. The core group is not specified as part of the apiVersion field, for example, apiVersion: v1.

The role does not specify who can perform the actions, so we create a RoleBinding that binds it to a user, service account or group. A RoleBinding references exactly one role, but can bind it to multiple users, service accounts or groups.

kubectl create rolebinding rolebinding-test --role=service-reader --serviceaccount=web:default -n web   # object names must be lowercase
# verify access has been granted (from inside a pod in the 'web' namespace running a kubectl proxy, such as the curlpod below)
curl localhost:8001/api/v1/namespaces/web/services
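For reference, the Python sketch below builds the RoleBinding object that the `kubectl create rolebinding` command above should produce. `kubectl create rolebinding ... --dry-run=client -o yaml` shows kubectl's own rendering. `role_binding` is a hypothetical helper, and the manifest is printed as JSON.

```python
import json


def role_binding(name: str, namespace: str, role: str, sa_namespace: str, sa_name: str) -> dict:
    """Hypothetical helper: RoleBinding granting `role` in `namespace` to one service account."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": name, "namespace": namespace},
        "roleRef": {  # exactly one role per binding; cannot be changed after creation
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": role,
        },
        "subjects": [  # a binding may list many users, groups or service accounts
            {"kind": "ServiceAccount", "name": sa_name, "namespace": sa_namespace},
        ],
    }


rb = role_binding("rolebinding-test", "web", "service-reader", "web", "default")
print(json.dumps(rb, indent=2))
```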

Create a clusterrole (cluster-wide permissions)

In this example we create a ClusterRole that can access the PersistentVolumes API, then a ClusterRoleBinding (pv-test) that binds it to the default ServiceAccount (name: default) in the 'web' namespace. This is the service account a pod uses by default when authenticating to the API server. When we then attach to the container and list the cluster-wide PersistentVolumes resource, the request is allowed because of the ClusterRole bound to the service account the pod has assumed.

# Create a ClusterRole to access PersistentVolumes:
kubectl create clusterrole pv-reader --verb=get,list --resource=persistentvolumes
# Create a ClusterRoleBinding for the cluster role:
kubectl create clusterrolebinding pv-test --clusterrole=pv-reader --serviceaccount=web:default

The YAML for a pod that includes a curl and proxy container:

apiVersion: v1
kind: Pod
metadata:
  name: curlpod
  namespace: web
spec:
  containers:
  - image: tutum/curl
    command: ["sleep", "9999999"]
    name: main
  - image: linuxacademycontent/kubectl-proxy
    name: proxy
  restartPolicy: Always

Create the pod that will allow you to curl directly from the container:

kubectl apply -f curlpod.yaml

kubectl get pods -n web # Get the pods in the web namespace

kubectl exec -it curlpod -n web -- sh # Open a shell to the container:

#Verify you can access PersistentVolumes (cluster-level) from the pod

/ # curl localhost:8001/api/v1/persistentvolumes

{

"kind": "PersistentVolumeList",

"apiVersion": "v1",

"metadata": {

"selfLink": "/api/v1/persistentvolumes",

"resourceVersion": "7173"

},

"items": []

}/ #

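List all API resources

Start a local proxy to the API server. The API can then be browsed at http://127.0.0.1:8001, for example /api/v1 for the core group and /apis for the other groups.

PS C:\> kubectl.exe proxy
Starting to serve on 127.0.0.1:8001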
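With the proxy running, the API can be queried over plain HTTP. The Python sketch below lists the core-group resources, assuming the proxy listens on its default 127.0.0.1:8001. `resource_names` and `core_resources` are hypothetical helpers. `kubectl api-resources` gives the same information without a proxy.

```python
import json
import urllib.request

PROXY = "http://127.0.0.1:8001"  # address printed by `kubectl proxy`


def resource_names(api_resource_list: dict) -> list[str]:
    """Hypothetical helper: top-level resource names in an APIResourceList,
    skipping subresources such as pods/log."""
    return sorted(r["name"] for r in api_resource_list.get("resources", [])
                  if "/" not in r["name"])


def core_resources() -> list[str]:
    """Fetch the core group (/api/v1) through a running `kubectl proxy`."""
    with urllib.request.urlopen(f"{PROXY}/api/v1") as resp:
        return resource_names(json.load(resp))
```

Call `print(core_resources())` while `kubectl proxy` is running. Other groups are listed under `/apis`, and each group has its own `/apis/<group>/<version>` resource list.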

Network policies

Network policies allow you to specify which pods can talk to other pods. For example, the Calico plugin secures communication by:

- applying network policy based on:
  - pod label selector
  - namespace label selector
  - CIDR block range

- securing communication (who can access pods) by setting up:
  - ingress rules
  - egress rules

POSTing any NetworkPolicy manifest to the API server will have no effect unless your chosen networking solution supports network policy. Network Policy is just an API resource that defines a set of rules for Pod access. However, to enable a network policy, we need a network plugin that supports it. We have a few options:

- Calico, Cilium, Kube-router, Romana, Weave Net

minikube

If you plan to use Minikube with its default settings, the NetworkPolicy resources will have no effect due to the absence of a network plugin and you’ll have to start it with --network-plugin=cni.

minikube start --network-plugin=cni --memory=4096

- Minikube local cluster with NetworkPolicy powered by Cilium

- network-policies on your laptop by banzaicloud

Install Calico network policies

What is Canal? Canal was a project by Tigera and CoreOS to integrate Calico and flannel.

Install the Calico/Canal network policy plugin (manifests for v3.5 and v3.8):

wget -O canal.yaml https://docs.projectcalico.org/v3.5/getting-started/kubernetes/installation/hosted/canal/canal.yaml
curl https://docs.projectcalico.org/v3.8/manifests/canal.yaml -O
curl https://docs.projectcalico.org/v3.8/manifests/calico-policy-only.yaml -O
# Update the Pod IP range to match '--cluster-cidr'; changing this value after installation has no effect
## Get the cluster CIDRs (service range and pod range)
kubectl cluster-info dump | grep -m 1 service-cluster-ip-range
kubectl cluster-info dump | grep -m 1 cluster-cidr
## The Minikube CIDR can change, so exec into a Pod is the best option; alternatively check the minikube config
grep ServiceCIDR ~/.minikube/profiles/minikube/config.json
## Update config
POD_CIDR="<your-pod-cidr>"
sed -i -e "s?10.244.0.0/16?$POD_CIDR?g" canal.yaml
kubectl apply -f canal.yaml

Cilium - networkPolicies

Cilium DaemonSet will place one Pod per node. Each Pod then will enforce network policies on the traffic using Berkeley Packet Filter (BPF).

minikube start --network-plugin=cni --memory=4096   # --kubernetes-version=1.13
kubectl create -f https://raw.githubusercontent.com/cilium/cilium/v1.5/examples/kubernetes/1.14/cilium-minikube.yaml

Create NetworkPolicy

A NetworkPolicy describes which network traffic is allowed for a set of pods. Once pods are selected by a policy, any traffic of the policy's types (Ingress and/or Egress) that no rule allows is denied.

Create a 'default' isolation policy for a namespace by creating a NetworkPolicy that selects all pods but does not allow any ingress traffic to those pods. We will run the example in the dev namespace.

Create a namespace

kubectl create ns dev
kubectl explain networkpolicy.spec.ingress   # get the YAML schema fields

Default deny all ingress traffic:

cat > deny-all-ingress.yaml << EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-all-ingress
  # namespace: dev
spec:
  podSelector: {}   # select all pods in the namespace
  ingress:          # allow rules; if empty, no traffic is allowed
  policyTypes:
  - Ingress
EOF

Default allow all ingress traffic:

cat > allow-all-ingress.yaml << EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-ingress
  # namespace: dev
spec:
  podSelector: {}
  ingress:          # rules applied to the selected pods
  - {}              # an empty rule allows all traffic
  policyTypes:
  - Ingress         # rule types that the NetworkPolicy relates to
EOF
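The deny-all and allow-all policies differ only in their ingress rules. The Python sketch below models the resulting decision: a pod selected by no Ingress policy is not isolated, and an isolated pod accepts traffic only if some rule of some selecting policy allows it. It is a simplified model under stated assumptions: one namespace, `matchLabels` pod selectors only (no namespace/IP peers, no ports), and `policyTypes` always spelled out. The function names are hypothetical.

```python
def selects(selector: dict, labels: dict) -> bool:
    """matchLabels semantics: every listed label must match; {} selects every pod."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policies: list, dst: dict, src: dict) -> bool:
    """Hypothetical helper: may a pod labelled `src` reach a pod labelled `dst`?"""
    relevant = [p for p in policies
                if "Ingress" in p.get("policyTypes", []) and selects(p["podSelector"], dst)]
    if not relevant:          # no policy selects dst: it is not isolated
        return True
    for p in relevant:        # isolated: policies are additive, any rule may allow
        for rule in p.get("ingress") or []:
            peers = rule.get("from")
            if not peers:     # a rule without 'from' allows every source
                return True
            if any("podSelector" in peer and selects(peer["podSelector"], src)
                   for peer in peers):
                return True
    return False


deny_all = {"podSelector": {}, "ingress": None, "policyTypes": ["Ingress"]}
allow_all = {"podSelector": {}, "ingress": [{}], "policyTypes": ["Ingress"]}
b1, b2 = {"run": "busybox1"}, {"run": "busybox2"}

print(ingress_allowed([], b2, b1))                     # True
print(ingress_allowed([deny_all], b2, b1))             # False
print(ingress_allowed([deny_all, allow_all], b2, b1))  # True
```

The last line shows why a deny-all policy does not "win": policies only ever add allowed traffic, so one allowing policy is enough.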

Create NetworkPolicy

$ kubectl apply -f deny-all-ingress.yaml

networkpolicy.networking.k8s.io/deny-all-ingress created

$ kubectl get networkPolicy -A

NAMESPACE NAME POD-SELECTOR AGE

default deny-all-ingress <none> 6s

$ kubectl describe networkPolicy deny-all-ingress

Name: deny-all-ingress

Namespace: dev

Created on: 2019-08-20 22:31:30 +0100 BST

Labels: <none>

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"networking.k8s.io/v1","kind":"NetworkPolicy","metadata":{"annotations":{},"name":"deny-all-ingress","namespace":"dev"},"spe...

Spec:

PodSelector: <none> (Allowing the specific traffic to all pods in this namespace)

Allowing ingress traffic:

<none> (Selected pods are isolated for ingress connectivity)

Allowing egress traffic:

<none> (Selected pods are isolated for egress connectivity)

Policy Types: Ingress

Run test pod

# Single Pods in the 'dev' namespace (-n, --namespace)
kubectl -n dev run --generator=run-pod/v1 busybox1 --image=busybox -- sleep 3600
kubectl -n dev run --generator=run-pod/v1 busybox2 --image=busybox -- sleep 3600
# default labels: --labels="run=busybox1"
kubectl -n dev run --generator=run-pod/v1 busybox3 --image=busybox --labels="app=A" -- sleep 3600
kubectl -n dev run --generator=run-pod/v1 busybox4 --image=busybox --labels="app=B" -- sleep 3600

# Get pods with labels
kubectl -n dev get pods -owide --show-labels
NAMESPACE   NAME           READY   STATUS    RESTARTS   AGE   IP              NODE       NOMINATED NODE   READINESS GATES   LABELS
dev         pod/busybox1   1/1     Running   0          34m   10.15.185.121   minikube   <none>           <none>            run=busybox1
dev         pod/busybox2   1/1     Running   0          34m   10.15.171.50    minikube   <none>           <none>            run=busybox2
dev         pod/busybox3   1/1     Running   0          31m   10.15.140.94    minikube   <none>           <none>            app=A
dev         pod/busybox4   1/1     Running   0          18s   10.15.174.22    minikube   <none>           <none>            app=B
dev         pod/nginx1     1/1     Running   0          27m   10.15.19.151    minikube   <none>           <none>            run=nginx1
dev         pod/nginx2     1/1     Running   0          27m   10.15.104.113   minikube   <none>           <none>            run=nginx2

# Ping should time out because the NetworkPolicy is in place
kubectl -n dev exec -ti busybox1 -- ping -c3 10.15.171.50   # <busybox2-ip>
PING 10.15.171.50 (10.15.171.50): 56 data bytes
--- 10.15.171.50 ping statistics ---
3 packets transmitted, 0 packets received, 100% packet loss

- Note

- To reach a pod by DNS name (e.g. busybox2) you need to create a Service for it; without one the name cannot be resolved:

kubectl -n dev exec -ti busybox1 -- nslookup busybox2
Server:    10.96.0.10
Address:   10.96.0.10:53

** server can't find busybox2.dev.svc.cluster.local: NXDOMAIN
*** Can't find busybox2.svc.cluster.local: No answer
*** Can't find busybox2.cluster.local: No answer
*** Can't find busybox2.dev.svc.cluster.local: No answer
*** Can't find busybox2.svc.cluster.local: No answer
*** Can't find busybox2.cluster.local: No answer

# Try with nginx: the container has no ping or curl, so test it from busybox with wget --spider <dns|ip>
kubectl -n dev run --generator=run-pod/v1 nginx1 --image=nginx
kubectl -n dev run --generator=run-pod/v1 nginx2 --image=nginx
kubectl -n dev expose pod nginx1 --port=80
kubectl -n dev get services
NAMESPACE   NAME             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE   SELECTOR     LABELS
dev         service/nginx1   ClusterIP   10.97.69.143   <none>        80/TCP    22m   run=nginx1   run=nginx1

# Call by DNS name (FQDN: nginx1.dev.svc.cluster.local)
kubectl -n dev exec -ti busybox1 -- /bin/wget --spider http://nginx1
Connecting to nginx1 (10.97.69.143:80)
remote file exists

Create allow-A-to-B.yaml policy

cat > allow-A-to-B.yaml << EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-a-to-b   # must be lowercase (DNS-1123 name)
  # namespace: dev
spec:
  podSelector: {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: A
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: B
  policyTypes:
  - Ingress
  - Egress
EOF

kubectl -n dev create -f allow-A-to-B.yaml

networkpolicy.networking.k8s.io/allow-a-to-b created

Test allow policy

kubectl -n dev exec -ti busybox1 -- ping -c3 10.15.171.50   # fail
kubectl -n dev exec -ti busybox3 -- ping -c3 10.15.174.22   # success
# Apply labels from: A to: B
kubectl -n dev label pod busybox1 app=A
kubectl -n dev label pod busybox2 app=B
kubectl -n dev exec -ti busybox1 -- ping -c3 10.15.171.50   # success
# Tidy up
kubectl -n dev delete networkpolicy --all
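Because allow-a-to-b restricts both directions, a connection succeeds only if the source may send (egress) and the destination may receive (ingress). The Python sketch below reproduces the three ping results above under the same simplifying assumptions as a plain label model: one namespace, `matchLabels` pod selectors only, explicit `policyTypes`. The function names are hypothetical. It ignores ports, reply traffic and DNS. In practice, an egress policy like this one also blocks DNS lookups unless they are allowed explicitly.

```python
def selects(selector: dict, labels: dict) -> bool:
    """matchLabels semantics: every listed label must match; {} selects every pod."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def direction_allowed(policies: list, direction: str, pod: dict, peer: dict) -> bool:
    """Hypothetical helper for one direction: 'Ingress' (peer -> pod) or 'Egress' (pod -> peer)."""
    rules_key, peers_key = ("ingress", "from") if direction == "Ingress" else ("egress", "to")
    relevant = [p for p in policies
                if direction in p.get("policyTypes", []) and selects(p["podSelector"], pod)]
    if not relevant:
        return True  # pod not isolated in this direction
    for p in relevant:
        for rule in p.get(rules_key) or []:
            peers = rule.get(peers_key)
            if not peers or any("podSelector" in x and selects(x["podSelector"], peer)
                                for x in peers):
                return True
    return False


def connection_allowed(policies: list, src: dict, dst: dict) -> bool:
    """Egress must be allowed at the source AND ingress at the destination."""
    return (direction_allowed(policies, "Egress", src, dst)
            and direction_allowed(policies, "Ingress", dst, src))


allow_a_to_b = {
    "podSelector": {},
    "ingress": [{"from": [{"podSelector": {"matchLabels": {"app": "A"}}}]}],
    "egress": [{"to": [{"podSelector": {"matchLabels": {"app": "B"}}}]}],
    "policyTypes": ["Ingress", "Egress"],
}
print(connection_allowed([allow_a_to_b], {"run": "busybox1"}, {"run": "busybox2"}))  # False
print(connection_allowed([allow_a_to_b], {"app": "A"}, {"app": "B"}))                # True
print(connection_allowed([allow_a_to_b], {"run": "busybox1", "app": "A"},
                         {"run": "busybox2", "app": "B"}))                           # True
```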

- Deployment (optional test)

kubectl create deployment nginx --image=nginx # create a deployment

kubectl scale deployment nginx --replicas=3   # scale the deployment (scaling its ReplicaSet directly is reverted by the Deployment controller)

#kubectl run nginx --image=nginx --replicas=3 #deprecated command

kubectl expose deployment nginx --port=80

# Try accessing a service from another pod

kubectl run --generator=run-pod/v1 busybox --image=busybox -- sleep 3600

kubectl exec busybox -it -- /bin/sh #this often crashes

/ # wget --spider --timeout=1 nginx #this should timeout

# --spider only checks that the URL exists; it does not download the page

kubectl exec -ti busybox -- wget --spider --timeout=1 nginx

Create a NetworkPolicy that allows ingress on port 80 to pods labelled app=web, only from pods labelled app=busybox:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: web-netpolicy
spec:
  podSelector:        # apply the policy
    matchLabels:      # to these pods
      app: web
  ingress:
  - from:
    - podSelector:    # allow traffic
        matchLabels:  # from these pods
          app: busybox
    ports:
    - port: 80

Label a pod so that the NetworkPolicy applies to it:

kubectl label pods [pod_name] app=web
kubectl run busybox --rm -it --image=busybox -- /bin/sh
/ # wget --spider --timeout=1 nginx   # this should time out

Namespace NetworkPolicy:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ns-netpolicy
spec:
  podSelector:
    matchLabels:
      app: db
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          tenant: web
    ports:
    - port: 5432

IP block NetworkPolicy:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ipblock-netpolicy
spec:
  podSelector:
    matchLabels:
      app: db
  ingress:
  - from:
    - ipBlock:
        cidr: 192.168.1.0/24

Egress NetworkPolicy:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: egress-netpol
spec:
  podSelector:
    matchLabels:
      app: web
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: db
    ports:
    - port: 5432

TLS Certificates

Pods communicate with the API server over TLS. The cluster CA bundle is automatically mounted into each pod at /var/run/secrets/kubernetes.io/serviceaccount as the ca.crt file, together with the service account token and namespace files, so the pod can verify the API server's certificate.

API Server -[serving crt, signed by the cluster CA]-> Pod (trusts certs signed by that CA, via ca.crt)

Show the automatically mounted CA bundle:

kubectl -n dev exec busybox1 -- ls /var/run/secrets/kubernetes.io/serviceaccount
ca.crt
namespace
token

Generate a new certificate

Kubernetes has a built-in API (certificates.k8s.io) for requesting and approving certificates.

Create a namespace and a pod that the new certificate will be issued for. It is a serving certificate for the pod and the my-svc service.

kubectl create ns my-namespace
kubectl -n my-namespace run --generator=run-pod/v1 my-pod --labels="app=busybox" --image=busybox -- sleep 3600
# also create a service 'my-svc'

Install CFSSL, CloudFlare's PKI/TLS toolkit, which is used here to generate the key and the certificate signing request (CSR).

Manifest to create the CSR

Generate the private key and the CSR locally with cfssl:

<syntaxhighlightjs lang=bash>
cat <<EOF | cfssl genkey - | cfssljson -bare server
{
  "hosts": [
    "my-svc.my-namespace.svc.cluster.local",
    "my-pod.my-namespace.pod.cluster.local",
    "172.168.0.24",
    "10.0.34.2"
  ],
  "CN": "my-pod.my-namespace.pod.cluster.local",
  "key": {
    "algo": "ecdsa",
    "size": 256
  }
}
EOF
2019/08/22 21:23:24 [INFO] generate received request
2019/08/22 21:23:24 [INFO] received CSR
2019/08/22 21:23:24 [INFO] generating key: ecdsa-256
2019/08/22 21:23:24 [INFO] encoded CSR

$ ls -l
...
-rw-r--r-- 1 ubuntu ubuntu 558 Aug 22 21:23 server.csr
-rw------- 1 ubuntu ubuntu 227 Aug 22 21:23 server-key.pem
</syntaxhighlightjs>

Submit it to the cluster as a CertificateSigningRequest object:

<syntaxhighlightjs lang=bash>
cat <<EOF | kubectl create -f -
apiVersion: certificates.k8s.io/v1beta1
kind: CertificateSigningRequest
metadata:
  name: my-pod-csr
spec:
  groups:
  - system:authenticated
  request: $(cat server.csr | base64 | tr -d '\n')
  usages:
  - digital signature
  - key encipherment
  - server auth
EOF
# The $(...) substitution only works in a shell here-doc; kubectl does not
# evaluate shell syntax in a file passed with -f.
# certificates.k8s.io/v1beta1 was removed in Kubernetes 1.22; the v1 API
# also requires spec.signerName.
</syntaxhighlightjs>

Sign the request: approve the CSR, after which the controller manager issues the certificate. CSRs are cluster-scoped, so the -n dev flag below has no effect.

<syntaxhighlightjs lang=bash>
$ kubectl.exe -n dev get csr
NAME         AGE     REQUESTOR       CONDITION
my-pod-csr   9m51s   minikube-user   Pending           #<- status

$ kubectl.exe -n dev describe csr
Name:               my-pod-csr
Labels:             <none>
Annotations:        <none>
CreationTimestamp:  Thu, 22 Aug 2019 21:46:28 +0100
Requesting User:    minikube-user
Status:             Pending                            #<- changes to Approved,Issued once approved
Subject:
        Common Name:    my-pod.my-namespace.pod.cluster.local
        Serial Number:
Subject Alternative Names:
        DNS Names:     my-svc.my-namespace.svc.cluster.local
                       my-pod.my-namespace.pod.cluster.local
        IP Addresses:  172.168.0.24
                       10.0.34.2
Events:  <none>

# Approve
$ kubectl.exe -n dev certificate approve my-pod-csr
certificatesigningrequest.certificates.k8s.io/my-pod-csr approved

$ kubectl.exe -n dev get csr
NAME         AGE   REQUESTOR       CONDITION
my-pod-csr   14m   minikube-user   Approved,Issued

# Preview the certificate; it is part of the 'CertificateSigningRequest' object
$ kubectl.exe -n dev get csr my-pod-csr -o yaml
apiVersion: v1
items:
- apiVersion: certificates.k8s.io/v1beta1
  kind: CertificateSigningRequest
  metadata:
    creationTimestamp: "2019-08-22T20:46:28Z"
    name: my-pod-csr
    resourceVersion: "8027"
    selfLink: /apis/certificates.k8s.io/v1beta1/certificatesigningrequests/my-pod-csr
    uid: 409e7164-c06d-4a5f-896a-30b7329c5156
  spec:
    groups:
    - system:masters
    - system:authenticated
    request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0...SRVFVRVNULS0tLS0K
    usages:
    - digital signature
    - key encipherment
    - server auth
    username: minikube-user
  status:
    certificate: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN3ekNDQWF1Z0F3...DQVRFLS0tLS0K
    conditions:
    - lastUpdateTime: "2019-08-22T21:00:45Z"
      message: This CSR was approved by kubectl certificate approve.
      reason: KubectlApprove
      type: Approved
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""

# Extract the certificate
kubectl get csr my-pod-csr -o jsonpath='{.status.certificate}' | base64 --decode > server.crt
</syntaxhighlightjs>
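Until the CSR is approved and issued, the jsonpath query typically prints an empty string, and the redirect then silently writes an empty server.crt. The sketch below, under that assumption, decodes the base64 value read from stdin and refuses to emit anything that is not a PEM certificate. decode_csr_cert is a hypothetical helper.

```bash
# Hypothetical helper: decode a CSR's .status.certificate (base64-encoded PEM)
# from stdin; fail instead of producing an empty or non-PEM output.
decode_csr_cert() {
  pem=$(base64 -d) || return 1
  case $pem in
    "-----BEGIN CERTIFICATE-----"*) printf '%s\n' "$pem" ;;
    *) echo "not a PEM certificate (CSR not approved/issued yet?)" >&2; return 1 ;;
  esac
}
# Usage against a cluster:
#   kubectl get csr my-pod-csr -o jsonpath='{.status.certificate}' | decode_csr_cert > server.crt
```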

Security context aka runas

A security context can be set at container level or at pod level (where it applies to all containers); it defines the user, groups and privileges of the container processes. If none is set, processes run as the user the image specifies, which for many images (e.g. alpine) is root. Help:

kubectl explain pod.spec.containers.securityContext

Show default security context

<syntaxhighlightjs lang=bash>
kubectl -n dev run --generator=run-pod/v1 alpine-with-defaults --image=alpine --restart Never -- /bin/sleep 99999
kubectl -n dev exec alpine-with-defaults -- id
uid=0(root) gid=0(root) groups=0(root),1(bin),2(daemon),3(sys),4(adm),6(disk),10(wheel),11(floppy),20(dialout),26(tape),27(video)
</syntaxhighlightjs>

Different security contexts

Container context:

<syntaxhighlightjs lang=yaml>
apiVersion: v1
kind: Pod
metadata:
  name: alpine-nonroot
spec:
  containers:
  - name: alpine-main
    image: alpine
    command: ["/bin/sleep", "9999"]
    securityContext:
      runAsUser: 1111       # run the container processes as UID 1111
      runAsNonRoot: true    # refuse to start the container if it would run as root (UID 0)
</syntaxhighlightjs>

Add/drop capabilities:

<syntaxhighlightjs lang=yaml>
apiVersion: v1
kind: Pod
metadata:
  name: alpine-changedate
spec:
  containers:
  - name: alpine-main
    image: alpine
    command: ["/bin/sleep", "9999"]
    securityContext:
      capabilities:
        add:
        - SYS_TIME          # allow changing the date (it changes back after ~5s)
        drop:
        - CHOWN             # do not allow changing file ownership
</syntaxhighlightjs>

Read only filesystem:

<syntaxhighlightjs lang=yaml>
apiVersion: v1
kind: Pod
metadata:
  name: alpine-ro-filesystem
spec:
  containers:
  - name: alpine-main
    image: alpine
    command: ["/bin/sleep", "9999"]
    securityContext:
      readOnlyRootFilesystem: true
    volumeMounts:
    - name: rw-volume
      mountPath: /volume
      readOnly: false
  volumes:
  - name: rw-volume
    emptyDir: {}
</syntaxhighlightjs>

<syntaxhighlightjs lang=bash>
kubectl exec -it alpine-ro-filesystem -- touch /volume/aaa   # succeeds: the emptyDir volume is writable
kubectl exec -it alpine-ro-filesystem -- touch aaa           # fails: the root filesystem is read-only
</syntaxhighlightjs>
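To confirm from inside a container which capabilities were actually granted, read the CapEff line of /proc/self/status: it is a hex bitmask in which bit N is capability number N (CAP_CHOWN is 0 and CAP_SYS_TIME is 25 in linux/capability.h). The sketch below tests one bit; has_cap is a hypothetical helper, and the mask reflects the process reading it, so run it as root as in alpine-changedate above.

```bash
# Capability bit numbers from linux/capability.h.
CAP_CHOWN=0
CAP_SYS_TIME=25

# Hypothetical helper: succeed if capability bit $2 is set in hex mask $1
# (the CapEff value from /proc/<pid>/status, without a 0x prefix).
has_cap() {
  [ $(( (0x$1 >> $2) & 1 )) -eq 1 ]
}
# Inside the container (alpine's busybox provides awk):
#   mask=$(awk '/^CapEff/ {print $2}' /proc/self/status)
#   has_cap "$mask" $CAP_SYS_TIME && echo "SYS_TIME granted"
#   has_cap "$mask" $CAP_CHOWN    || echo "CHOWN dropped"
```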

- Pod security context

<syntaxhighlightjs lang=bash>
kubectl exec -it alpine-nonroot -- ps
PID   USER     TIME  COMMAND
    1 1111      0:00 sleep 9999     # <- process runs as the user specified in securityContext
   16 1111      0:00 ps

kubectl explain pod.spec.securityContext
</syntaxhighlightjs>

Manifest

<syntaxhighlightjs lang=yaml>
apiVersion: v1
kind: Pod
metadata:
  name: alpine-group-context
spec:
  securityContext:
    fsGroup: 555                    # special supplemental group that applies to all containers in a pod
    supplementalGroups: [666, 777]
  containers:
  - name: first
    image: alpine
    command: ["/bin/sleep", "9999"]
    securityContext:
      runAsUser: 1111
    volumeMounts:
    - name: shared-volume
      mountPath: /volume
      readOnly: false
  - name: second
    image: alpine
    command: ["/bin/sleep", "9999"]
    securityContext:
      runAsUser: 2222
    volumeMounts:
    - name: shared-volume
      mountPath: /volume
      readOnly: false
  volumes:
  - name: shared-volume
    emptyDir: {}
</syntaxhighlightjs>

Verification commands:

<syntaxhighlightjs lang=bash>
$ kubectl exec -it alpine-group-context -c first -- id
uid=1111 gid=0(root) groups=555,666,777           # user 1111
$ kubectl exec -it alpine-group-context -c second -- id
uid=2222 gid=0(root) groups=555,666,777           # user 2222
$ kubectl exec -it alpine-group-context -c first -- touch /tmp/file0
$ kubectl exec -it alpine-group-context -c first -- ls -l /tmp/file0
-rw-r--r--    1 1111     root     0 Aug 26 16:08 /tmp/file0      # default group
$ kubectl exec -it alpine-group-context -c first -- touch /volume/file1
$ kubectl exec -it alpine-group-context -c first -- ls -l /volume/file1
-rw-r--r--    1 1111     555      0 Aug 26 16:06 /volume/file1   # group specified at Pod level
</syntaxhighlightjs>
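The same fsGroup check can be scripted inside a container, which is handy in a readiness or smoke-test script. The sketch below compares a file's numeric group with an expected GID using ls -ln (numeric IDs), which busybox in alpine supports. owned_by_gid is a hypothetical helper.

```bash
# Hypothetical helper: succeed if file $1 is group-owned by numeric GID $2.
# Field 4 of `ls -ln` output is the numeric group ID.
owned_by_gid() {
  [ "$(ls -ln "$1" | awk '{print $4}')" = "$2" ]
}
# Inside container "first" of the pod above:
#   touch /volume/file1 && owned_by_gid /volume/file1 555 && echo "fsGroup applied"
#   touch /tmp/file0    && owned_by_gid /tmp/file0 0      && echo "default group"
```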

Secrets, secure KV pairs

Secrets are maps of key-value pairs that persist beyond the pod lifecycle. They can be passed to a container as environment variables, but mounting them as a volume is the recommended way: a secret volume is backed by an in-memory filesystem (tmpfs), so the secret data is never written to the node's disk. Applications then read each secret as a file from the mounted path.

Each pod has a volume holding its service account's token secret (the default service account unless another is set) mounted at /var/run/secrets/kubernetes.io/serviceaccount. Newer Kubernetes versions mount a projected token volume (kube-api-access-*) there instead of a Secret.

<syntaxhighlightjs lang=bash>
kubectl get secrets -A    # get all secrets

# Start a pod to see the mounted secret
kubectl -n dev run --generator=run-pod/v1 alpine-with-defaults-1 --image=alpine:3.7 --restart Never -- /bin/sleep 99999

kubectl -n dev describe pod alpine-with-defaults-1 | grep -A4 -e Volumes -e Mounts
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-sk6hd (ro)
Conditions:
  Type              Status
  Initialized       True
--
Volumes:
  default-token-sk6hd:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-sk6hd
    Optional:    false

# Describe the secret
kubectl -n dev describe secrets default-token-sk6hd
Name:         default-token-sk6hd
Namespace:    dev
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: default
              kubernetes.io/service-account.uid: c3ed7359-d815-4ecb-9862-0d25996b6ebe

Type:  kubernetes.io/service-account-token

Data
====
token:      eyJhbGciOiJSUzI1NiIs**********1qxmToQLwCUdhUqSYEag
ca.crt:     1066 bytes
namespace:  3 bytes

# Preview volume mounts of secrets
kubectl -n dev exec -it alpine-with-defaults-1 -- mount | grep kubernetes
tmpfs on /run/secrets/kubernetes.io/serviceaccount type tmpfs (ro,relatime)

# Preview the secret value from a container
kubectl -n dev exec -it alpine-with-defaults-1 -- cat /var/run/secrets/kubernetes.io/serviceaccount/token
eyJhbGciOiJSUzI1NiIs**********1qxmToQLwCUdhUqSYEag
</syntaxhighlightjs>
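The service account token is a JSON Web Token: three base64url segments separated by dots, the middle one holding JSON claims such as sub, which for service accounts has the form system:serviceaccount:<namespace>:<name>. The sketch below decodes that payload with standard tools, which helps confirm which identity a pod presents to the API server. jwt_payload is a hypothetical helper; it does not verify the signature, so treat the output as informational only.

```bash
# Hypothetical helper: print the JSON payload (claims) of a JWT such as a
# service account token. No signature verification is done.
jwt_payload() {
  # Take the second dot-separated segment and map base64url to base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the '=' padding that base64url omits.
  case $(( ${#seg} % 4 )) in
    2) seg="$seg==" ;;
    3) seg="$seg=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
# Inside the pod:
#   jwt_payload "$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)"
```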

- Store certificates in secrets

<syntaxhighlightjs lang=bash>
openssl genrsa -out https.key 2048
openssl rand -hex 10 > ~/.rnd   # avoids ERR: 140468465254848:error:2406F079:random number generator:RAND_load_file:Cannot open file:../crypto/rand/randfile.c:88:Filename=/home/<user>/.rnd
openssl req -new -x509 -key https.key -out https.cert -days 365 -subj /CN=www.example.com
touch file
kubectl -n dev create secret generic https-cert --from-file=https.key --from-file=https.cert --from-file=file

kubectl -n dev describe secrets https-cert
Name:         https-cert
Namespace:    dev
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
file:        0 bytes
https.cert:  1131 bytes
https.key:   1675 bytes

# Values are stored base64-encoded (encoded, not encrypted)
$ kubectl -n dev get secrets https-cert -oyaml
apiVersion: v1
data:
  file: ""
  https.cert: LS0tLS1CRUdJTiBDRVJUSUZJQ0F******lDQVRFLS0tLS0K
  https.key: LS0tLS1CRUdJTi*****IFJTQSBQUklWQVRFIEtFWS0tLS0tCg==
kind: Secret
metadata:
  creationTimestamp: "2019-08-27T07:12:59Z"
  name: https-cert
  namespace: dev
  resourceVersion: "21864"
  selfLink: /api/v1/namespaces/dev/secrets/https-cert
  uid: e9e7941c-46ac-4996-99d2-f53bc8e2a1ba
type: Opaque
</syntaxhighlightjs>

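Use the secrets with nginx now. The pod mounts the https-cert secret at /etc/nginx/certs and the nginx configuration from the config ConfigMap. Create both objects in the dev namespace, where the https-cert secret lives (a pod can only mount secrets from its own namespace).

nginx-https manifest:

<syntaxhighlightjs lang=yaml>
apiVersion: v1
kind: Pod
metadata:
  name: nginx-https
spec:
  containers:
  # Stand-in content generator (assumption): running a second nginx here would
  # also bind port 80 in the shared pod network namespace and clash with web-server.
  - image: busybox
    name: html-web
    command: ["/bin/sh", "-c", "while true; do date > /var/htdocs/index.html; sleep $INTERVAL; done"]
    env:
    - name: INTERVAL
      valueFrom:
        configMapKeyRef:
          name: config
          key: sleep-interval
    volumeMounts:
    - name: html
      mountPath: /var/htdocs
  - image: nginx:alpine
    name: web-server
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
      readOnly: true
    - name: config
      mountPath: /etc/nginx/conf.d
      readOnly: true
    - name: certs
      mountPath: /etc/nginx/certs/
      readOnly: true
    ports:
    - containerPort: 80
    - containerPort: 443
  volumes:
  - name: html
    emptyDir: {}
  - name: config
    configMap:
      name: config
      items:
      - key: nginx-config.conf
        path: https.conf
  - name: certs
    secret:
      secretName: https-cert
</syntaxhighlightjs>

config ConfigMap manifest:

<syntaxhighlightjs lang=yaml>
apiVersion: v1
kind: ConfigMap
metadata:
  name: config
data:
  nginx-config.conf: |
    server {
      listen 80;
      listen 443 ssl;
      server_name www.example.com;
      ssl_certificate certs/https.cert;
      ssl_certificate_key certs/https.key;
      ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
      ssl_ciphers HIGH:!aNULL:!MD5;
      location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
      }
    }
  sleep-interval: |
    25
</syntaxhighlightjs>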

<syntaxhighlightjs lang=bash>
kubectl -n dev port-forward nginx-https 8443:443 &
curl -k https://localhost:8443
</syntaxhighlightjs>

- Secrets K8s docs

Pod Security Policy

The Kubernetes PodSecurityPolicy admission controller validates pod creation and update requests against a set of rules. Apply your policies to the cluster before enabling the admission controller; otherwise no pods can be created. PodSecurityPolicy was deprecated in Kubernetes 1.21 and removed in 1.25, replaced by Pod Security Admission.

Read more about AWS EKS psp

Commands tested under

<syntaxhighlightjs lang=bash>
$ kubectl version --short
Client Version: v1.15.0
Server Version: v1.14.8-eks-b8860f
</syntaxhighlightjs>

Default PSP policy in EKS

<syntaxhighlightjs lang=bash>
kubectl get psp eks.privileged -oyaml --export=true
Flag --export has been deprecated, This flag is deprecated and will be removed in future.
apiVersion: extensions/v1beta1
kind: PodSecurityPolicy
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"policy/v1beta1","kind":"PodSecurityPolicy","metadata":{"annotations":{"kubernetes.io/description":"privileged allows full unrestricted access to pod features, as if the PodSecurityPolicy controller was not enabled.","seccomp.security.alpha.kubernetes.io/allowedProfileNames":"*"},"labels":{"eks.amazonaws.com/component":"pod-security-policy","kubernetes.io/cluster-service":"true"},"name":"eks.privileged"},"spec":{"allowPrivilegeEscalation":true,"allowedCapabilities":["*"],"fsGroup":{"rule":"RunAsAny"},"hostIPC":true,"hostNetwork":true,"hostPID":true,"hostPorts":[{"max":65535,"min":0}],"privileged":true,"readOnlyRootFilesystem":false,"runAsUser":{"rule":"RunAsAny"},"seLinux":{"rule":"RunAsAny"},"supplementalGroups":{"rule":"RunAsAny"},"volumes":["*"]}}
    kubernetes.io/description: privileged allows full unrestricted access to pod features,
      as if the PodSecurityPolicy controller was not enabled.
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: '*'
  creationTimestamp: null
  labels:
    eks.amazonaws.com/component: pod-security-policy
    kubernetes.io/cluster-service: "true"
  name: eks.privileged
  selfLink: /apis/extensions/v1beta1/podsecuritypolicies/eks.privileged
spec:
  allowPrivilegeEscalation: true
  allowedCapabilities:
  - '*'
  fsGroup:
    rule: RunAsAny
  hostIPC: true
  hostNetwork: true
  hostPID: true
  hostPorts:
  - max: 65535
    min: 0
  privileged: true
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - '*'
</syntaxhighlightjs>

- Verify

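List service accounts, roles and cluster roles (users and groups are not Kubernetes objects, so they cannot be listed with kubectl get):

<syntaxhighlightjs lang=bash>
kubectl get serviceaccounts -A
kubectl get role -A
kubectl get clusterrole
</syntaxhighlightjs>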

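kubectl can impersonate another user with --as (and groups with --as-group) to perform operations; combined with kubectl auth can-i, impersonation also lets you inspect that identity's permissions. Impersonating requires the impersonate permission.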

<syntaxhighlightjs lang=bash>
$ kubectl auth can-i use deployment.apps/coredns
yes

# Impersonate a service account with --as=system:serviceaccount:<namespace>:<serviceaccount>,
# e.g. --as=system:serviceaccount:default:default
$ kubectl auth can-i use psp/eks.privileged --as-group=system:authenticated --as=any-user
Warning: resource 'podsecuritypolicies' is not namespace scoped in group 'extensions'
no

$ kubectl auth can-i list secrets --namespace dev --as dave

# drain node (nodes are not a namespaced resource)
$ kubectl auth can-i drain node/ip-10-35-65-154.eu-west-1.compute.internal --as-group=system:authenticated --as=any-user
Warning: resource 'nodes' is not namespace scoped
no
$ kubectl auth can-i drain node/ip-10-35-65-154.eu-west-1.compute.internal
Warning: resource 'nodes' is not namespace scoped
yes

# delete a namespaced resource
$ kubectl auth can-i delete svc/kube-dns -n kube-system
yes
$ kubectl auth can-i delete svc/kube-dns -n kube-system --as-group=system:authenticated --as=any-user
no
</syntaxhighlightjs>
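Checking permissions one command at a time gets tedious; a small loop over kubectl auth can-i prints a verb/resource matrix for one identity. In the sketch below, can_i_matrix is a hypothetical helper and the KUBECTL variable is an override hook added only for testing. kubectl auth can-i --list --as=dave -n dev is a built-in alternative that prints all of an identity's rules at once.

```bash
# Hypothetical helper: print a verb/resource permission matrix for one identity.
# Usage: can_i_matrix <as-user> <namespace> "<verbs>" "<resources>"
can_i_matrix() {
  user=$1 ns=$2 verbs=$3 resources=$4
  for res in $resources; do
    for verb in $verbs; do
      # can-i prints yes/no and exits non-zero on "no"; scope warnings go to stderr.
      answer=$(${KUBECTL:-kubectl} auth can-i "$verb" "$res" \
        --namespace "$ns" --as "$user" 2>/dev/null) || true
      printf '%-10s %-8s %s\n' "$res" "$verb" "$answer"
    done
  done
}
# Example: can_i_matrix dave dev "get list delete" "pods secrets"
```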